cnn-visualization-keras-tf2

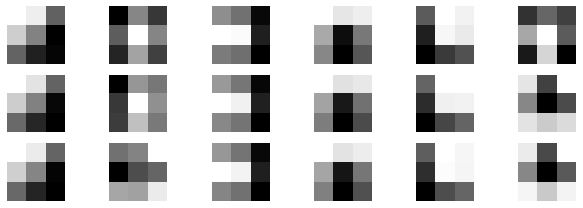

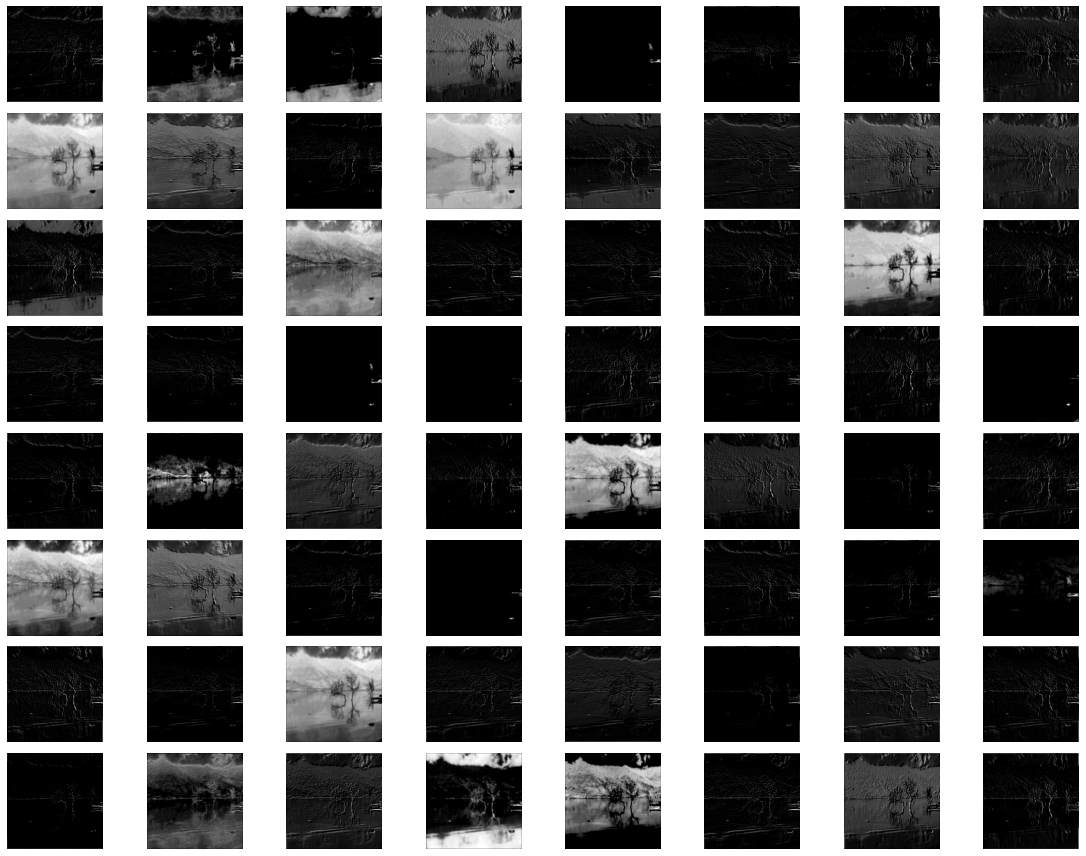

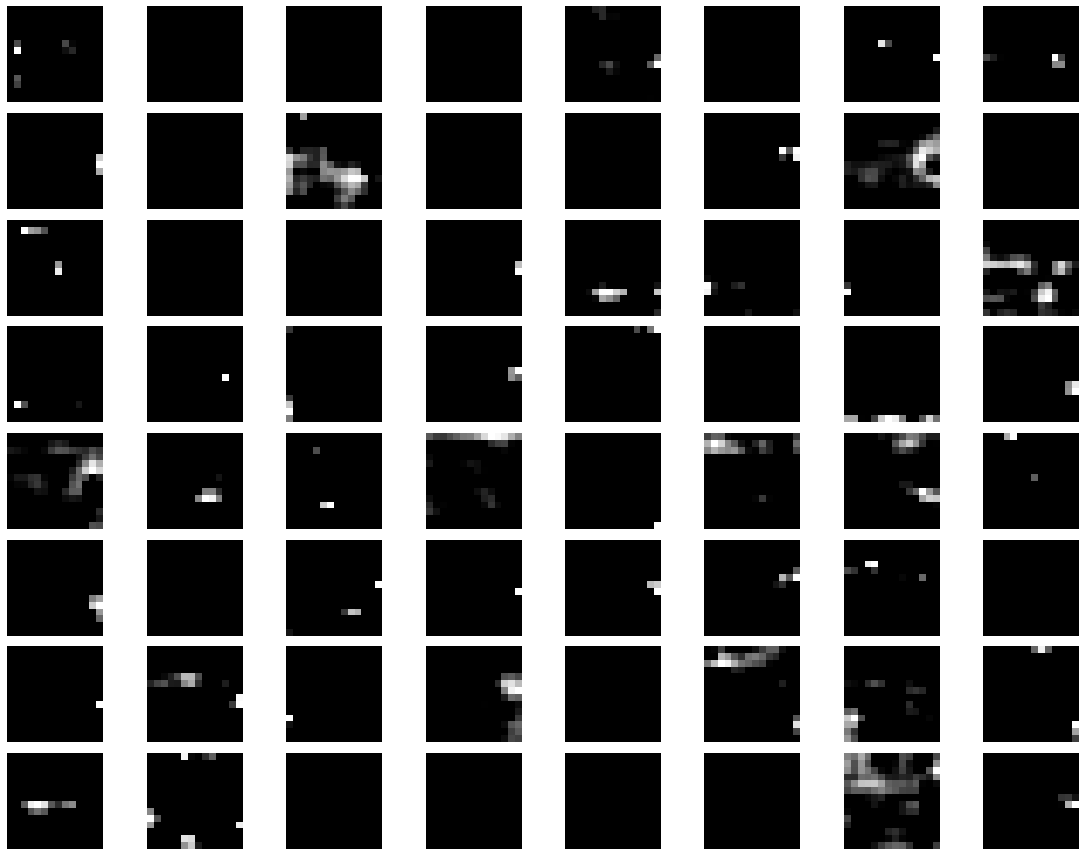

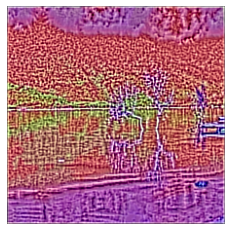

Filter visualization, Feature map visualization, Guided Backprop, GradCAM, Guided-GradCAM, Deep Dream

CNN Visualization and Explanation

This project visualizes filters, feature maps, and guided backpropagation for any convolutional layer of the ImageNet pre-trained models available in tf.keras.applications (TF 2.3). It lets you observe how filters and feature maps change from one convolutional layer to the next, from input to output.

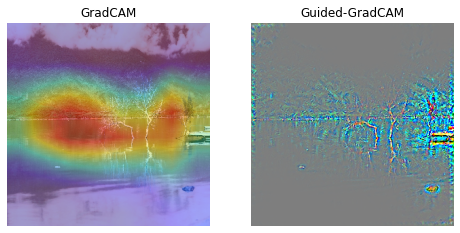

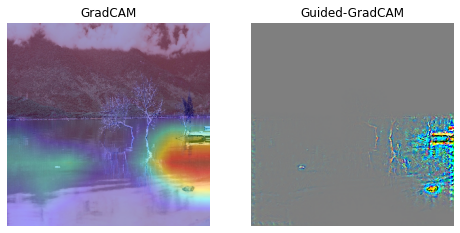
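As a rough illustration of the filter and feature map visualization described above, the sketch below reads the kernels of one convolutional layer and the activations of another. It is not this project's code: `build_toy_cnn`, `get_filters`, `get_feature_maps`, and the layer names are hypothetical, and a tiny randomly initialized network stands in for a tf.keras.applications model so that nothing has to be downloaded.

```python
import numpy as np
import tensorflow as tf


def build_toy_cnn(input_shape=(32, 32, 3), num_classes=5):
    """Tiny stand-in CNN (hypothetical); a pre-trained Keras model is used the same way."""
    inputs = tf.keras.Input(shape=input_shape)
    x = tf.keras.layers.Conv2D(8, 3, padding="same", activation="relu", name="conv1")(inputs)
    x = tf.keras.layers.MaxPooling2D()(x)
    x = tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu", name="conv2")(x)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax", name="predictions")(x)
    return tf.keras.Model(inputs, outputs)


def get_filters(model, layer_name):
    """Return the conv kernels of a layer, rescaled to [0, 1] for plotting.

    Shape: (kernel_height, kernel_width, input_channels, number_of_filters).
    """
    kernels = model.get_layer(layer_name).get_weights()[0]
    k_min, k_max = kernels.min(), kernels.max()
    return (kernels - k_min) / (k_max - k_min + 1e-8)


def get_feature_maps(model, layer_name, images):
    """Return the activations of one layer for a batch of images."""
    extractor = tf.keras.Model(model.inputs, model.get_layer(layer_name).output)
    return extractor(images, training=False).numpy()


model = build_toy_cnn()
images = np.random.rand(1, 32, 32, 3).astype("float32")  # random stand-in image

filters = get_filters(model, "conv1")
feature_maps = get_feature_maps(model, "conv2", images)
print(filters.shape)       # (3, 3, 3, 8)
print(feature_maps.shape)  # (1, 16, 16, 16)
```

Each slice `filters[:, :, :, i]` or `feature_maps[0, :, :, i]` can then be drawn as one tile of an image grid, for example with matplotlib.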

You can also upload any image, classify it with any of these models, and generate GradCAM and Guided-GradCAM maps to see which features the model bases its decision on.

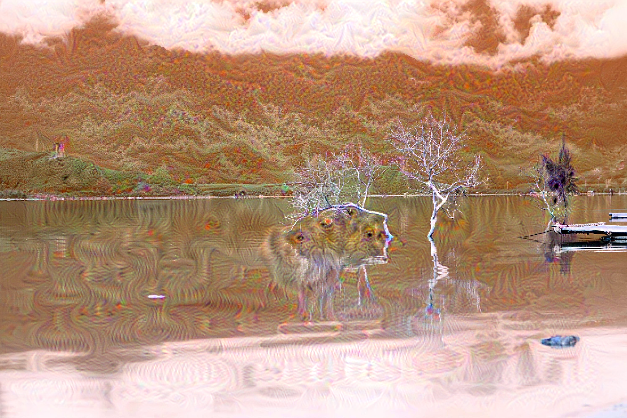
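The GradCAM idea can be sketched in a few lines: take the gradient of the class score with respect to a convolutional layer's output, average it over the spatial axes to get one weight per channel, and use those weights to combine the channels into a heatmap. The code below is a minimal sketch under stated assumptions, not this project's implementation: `build_toy_cnn` and `grad_cam` are hypothetical names, the network is a small randomly initialized stand-in, and the gradient is taken from the softmax output for simplicity.

```python
import numpy as np
import tensorflow as tf


def build_toy_cnn(input_shape=(32, 32, 3), num_classes=5):
    """Tiny stand-in CNN (hypothetical); swap in a pre-trained model in practice."""
    inputs = tf.keras.Input(shape=input_shape)
    x = tf.keras.layers.Conv2D(8, 3, padding="same", activation="relu", name="conv1")(inputs)
    x = tf.keras.layers.MaxPooling2D()(x)
    x = tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu", name="conv2")(x)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax", name="predictions")(x)
    return tf.keras.Model(inputs, outputs)


def grad_cam(model, images, conv_layer_name, class_index=None):
    """Return one heatmap per image, scaled to [0, 1], at the conv layer's resolution."""
    grad_model = tf.keras.Model(
        model.inputs, [model.get_layer(conv_layer_name).output, model.output]
    )
    with tf.GradientTape() as tape:
        conv_out, preds = grad_model(images, training=False)
        if class_index is None:
            # Default to the top predicted class of the first image.
            class_index = int(tf.argmax(preds[0]))
        class_score = preds[:, class_index]
    # Gradient of the class score with respect to every feature map value.
    grads = tape.gradient(class_score, conv_out)
    # Channel weights: gradients averaged over height and width.
    weights = tf.reduce_mean(grads, axis=(1, 2))
    # Weighted sum of channels, keeping only positive evidence.
    cam = tf.nn.relu(tf.einsum("bhwc,bc->bhw", conv_out, weights))
    cam = cam / (tf.reduce_max(cam, axis=(1, 2), keepdims=True) + 1e-8)
    return cam.numpy()


model = build_toy_cnn()
images = np.random.rand(1, 32, 32, 3).astype("float32")  # random stand-in image
heatmap = grad_cam(model, images, "conv2")
print(heatmap.shape)  # (1, 16, 16)
```

To overlay the result on the input, the heatmap would be resized to the image resolution, for example with `tf.image.resize`. Guided-GradCAM additionally multiplies this map by a guided backpropagation image, which is not shown here.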

If "art" is in your blood, you can use any model to generate hallucination-like visuals from your own images. For this feature, I personally recommend trying the "InceptionV3" model, as the deep-dream images it generates are appealing.
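At its core, Deep Dream is gradient ascent on the input image: the image is nudged repeatedly in the direction that increases the activations of a chosen layer. The sketch below shows only that core loop and is an assumption-laden simplification, not this project's code: `build_toy_extractor` and `deep_dream` are hypothetical names, the network is a small randomly initialized stand-in rather than InceptionV3, and the multi-scale (octave) processing used in full Deep Dream implementations is left out.

```python
import numpy as np
import tensorflow as tf


def build_toy_extractor():
    """Tiny random-weight conv stack (hypothetical stand-in for layers of a real model)."""
    inputs = tf.keras.Input(shape=(None, None, 3))
    x = tf.keras.layers.Conv2D(8, 3, padding="same", activation="relu")(inputs)
    outputs = tf.keras.layers.Conv2D(16, 3, padding="same", activation="relu")(x)
    return tf.keras.Model(inputs, outputs)


def deep_dream(feature_model, image, steps=10, step_size=0.01):
    """Run gradient ascent on the image to increase the mean layer activation."""
    image = tf.convert_to_tensor(image, dtype=tf.float32)
    for _ in range(steps):
        with tf.GradientTape() as tape:
            tape.watch(image)  # the image is a plain tensor, so watch it explicitly
            activations = feature_model(image, training=False)
            loss = tf.reduce_mean(activations)
        grads = tape.gradient(loss, image)
        # Normalize the gradient so the step size behaves similarly across layers.
        grads = grads / (tf.math.reduce_std(grads) + 1e-8)
        # Ascend (add the gradient) and keep pixel values in [0, 1].
        image = tf.clip_by_value(image + step_size * grads, 0.0, 1.0)
    return image.numpy()


extractor = build_toy_extractor()
image = np.random.rand(1, 64, 64, 3).astype("float32")  # random stand-in image
dreamed = deep_dream(extractor, image, steps=5)
print(dreamed.shape)  # (1, 64, 64, 3)
```

With a pre-trained model, `feature_model` would instead output one or more intermediate layers of that model, and the input would go through the model's own preprocessing first.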

With the current version, there are 26 pre-trained models.

How to use

Run with your own resources

- Clone this repo:

git clone https://github.com/nguyenhoa93/cnn-visualization-keras-tf2

cd cnn-visualization-keras-tf2

- Create a virtual environment:

conda create -n cnn-vis python=3.6

conda activate cnn-vis

bash requirements.txt

- Run the demo in the notebook visualization.ipynb

Run on Google Colab

- Go to this link: https://colab.research.google.com/github/nguyenhoa93/cnn-visualization-keras-tf2/blob/master/visualization.ipynb

- Change your runtime type to Python3

- Choose GPU as your hardware accelerator.

- Run the code.

Voila! You got it.
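To confirm that the GPU selected in the Colab steps above is actually visible to TensorFlow, a quick check like the following can be run in a notebook cell. This is a small sketch, not part of the project's notebook.

```python
import tensorflow as tf

# List the GPUs that TensorFlow can see in the current runtime.
gpus = tf.config.list_physical_devices("GPU")
if gpus:
    print(f"TensorFlow sees {len(gpus)} GPU(s):", [gpu.name for gpu in gpus])
else:
    print("No GPU visible; the code will run on the CPU and may be slow.")
```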

Briefs

References

- How to Visualize Filters and Feature Maps in Convolutional Neural Networks by Machine Learning Mastery

- PyTorch CNN visualization by utkuozbulak: https://github.com/utkuozbulak

- CNN visualization with TF 1.3 by conan7882: https://github.com/conan7882/CNN-Visualization

- Deep Dream Tutorial from François Chollet: https://keras.io/examples/generative/deep_dream/