apps

evaluation on multiple solutions at once causes memory leak

Hi @xksteven, why do you advise running the evaluation code on one solution at a time rather than on all generations at once? I added the metric to the HuggingFace hub at https://huggingface.co/spaces/codeparrot/apps_metric (without changing the core script testing_util.py), with evaluation done for all solutions at once. I sometimes get a memory leak whose source I can't identify, because it doesn't happen when I evaluate the same solutions separately.

Below is the code that causes memory saturation:

from evaluate import load

generations = [["s = input()\nn = len(s)\nm = 0\n\nfor i in range(n):\n\tc = s[i]\n\tif c == '|':\n\t\tif m < 2:\n\t\t\tm = 2\n\t\telse:\n\t\t\tm += 1\n\telif c == '\\n':\n\t\tif m < 2:\n\t\t\tm = 2\n\t\telse:\n\t\t\tm += 1\n\nif m < 2:\n\tprint(-1)\nelse:\n\tprint(m * 2 - 1)\n"], ["\nx = int(input())\n\nl = list(range(x+1))\n\nm = next(l)\n\ns = sum(list([int(i) for i in str(m)]))\n\nif s > sum(list([int(i) for i in str(m)])) :\n\tm = next(l)\n\t\nprint(m)\n"]]

metric = load("codeparrot/apps_metric")

results = metric.compute(predictions=generations, level="all", debug=False)

While this works fine:

generation_1 = generations[:1]

generation_2 = generations[1:2]

results_1 = metric.compute(predictions=generation_1, level="all", debug=False)

results_2 = metric.compute(predictions=generation_2, level="all", debug=False)

print(results_1)

print(results_2)

{'avg_accuracy': 0.23185840707964603, 'strict_accuracy': 0.0, 'pass_at_k': None}

{'avg_accuracy': 0.0, 'strict_accuracy': 0.0, 'pass_at_k': None}

The evaluations didn't need any GPUs and could run on CPUs, so we parallelized them across many CPUs on many nodes with our data. That is probably why we never noticed a memory leak: we never ran all of the evaluations on one node.

Since whether the code crashes depends on the output of the model, I can imagine there being memory leaks. I've had models give me segmentation faults from Python code, which are quite strange and difficult to debug.

I'll see if I can look at the code it generated to figure out what's causing that.

Honestly, I think it's probably best to do something more sophisticated and better encapsulate the code the model generates. Running arbitrary code from a transformer could cause damage locally even with something simple, for example if the model outputs

import subprocess

subprocess.call(["rm", "-rf", "/*"])

Not sure if you have any suggestions for this to be honest?

Actually, what's weird is that evaluating a given sample should behave the same either way, but here calling run_test a second time, after evaluating the first generation, seems to cause the memory leak, and this doesn't happen if run_test is called only once for that same generation. You can experiment with this code:

from datasets import load_dataset

from testing_util import run_test

DATASET = "codeparrot/apps"

generations = [["s = input()\nn = len(s)\nm = 0\n\nfor i in range(n):\n\tc = s[i]\n\tif c == '|':\n\t\tif m < 2:\n\t\t\tm = 2\n\t\telse:\n\t\t\tm += 1\n\telif c == '\\n':\n\t\tif m < 2:\n\t\t\tm = 2\n\t\telse:\n\t\t\tm += 1\n\nif m < 2:\n\tprint(-1)\nelse:\n\tprint(m * 2 - 1)\n"], ["\nx = int(input())\n\nl = list(range(x+1))\n\nm = next(l)\n\ns = sum(list([int(i) for i in str(m)]))\n\nif s > sum(list([int(i) for i in str(m)])) :\n\tm = next(l)\n\t\nprint(m)\n"]]

generation_1 = generations[:1]

generation_2 = generations[1:2]

apps_eval = load_dataset(DATASET, split="test", difficulties=["all"])

# this loop doesn't work on generations (memory leak) but works for generation_1 and generation_2
for index in range(len(generations)):
    gen = generations[index][0]
    print(f"Evaluating problem {index}")
    # get the corresponding sample from the APPS dataset
    sample = apps_eval[index]
    curr_res = run_test(sample, test=gen, debug=True)
    print(curr_res)

Regarding security, you're right that it's an issue, but the easiest thing is to make users aware of it and let them decide where to run the generated code (in a sandbox, for instance). Example from HumanEval:

N.B. This metric exists to run untrusted model-generated code. Users are strongly encouraged not to do so outside of a robust security sandbox. Before running this metric and once you’ve taken the necessary precautions, you will need to set the HF_ALLOW_CODE_EVAL environment variable. Use it at your own risk:

import os

os.environ["HF_ALLOW_CODE_EVAL"] = "1"

Update: There are also some reliability guards in the metric code https://huggingface.co/spaces/evaluate-metric/code_eval/blob/main/execute.py#L158

@loubnabnl Do you know how I can run the code from https://huggingface.co/spaces/codeparrot/apps_metric/tree/main ? I'm not sure how to download it, and I don't know whether the code there has diverged at all, so I'd ideally like to test against the same code.

I tried to run the following, but it gets killed:

from evaluate import load

apps_metric = load('loubnabnl/apps_metric')

results = apps_metric.compute(predictions=generations, debug=True)

>>> python test_script.py

....

not passed output = ['Call-based runtime error or time limit exceeded error = TypeError("\'list\' object is not an iterator")\'list\' object is not an iterator'], test outputs = 99999999

, inputs = 100000000 new-line , <class 'str'>, False

time: 12:02:49.600193 testing index = 20 inputs = 1000000000

, <class 'str'>. type = CODE_TYPE.standard_input

Killed

It's actually a git repo, so you can just clone it:

git clone https://huggingface.co/spaces/codeparrot/apps_metric

The process gets killed because it runs out of memory; you will notice this if you monitor the CPU RAM during evaluation.

Just to be clear: are we interested in debugging the OOM issue or not? The following, which just evaluates the second example on the second test problem, gives me an OOM. If that's not the issue we care about, this will take me more time, as I don't have access to a machine with much more RAM.

from datasets import load_dataset

from testing_util import run_test

DATASET = "codeparrot/apps"

generations = [["s = input()\nn = len(s)\nm = 0\n\nfor i in range(n):\n\tc = s[i]\n\tif c == '|':\n\t\tif m < 2:\n\t\t\tm = 2\n\t\telse:\n\t\t\tm += 1\n\telif c == '\\n':\n\t\tif m < 2:\n\t\t\tm = 2\n\t\telse:\n\t\t\tm += 1\n\nif m < 2:\n\tprint(-1)\nelse:\n\tprint(m * 2 - 1)\n"], ["\nx = int(input())\n\nl = list(range(x+1))\n\nm = next(l)\n\ns = sum(list([int(i) for i in str(m)]))\n\nif s > sum(list([int(i) for i in str(m)])) :\n\tm = next(l)\n\t\nprint(m)\n"]]

generation_1 = generations[:1]

generation_2 = generations[1:2]

apps_eval = load_dataset(DATASET, split="test", difficulties=["all"])

gen = generations[1][0]

print(f"Evaluating problem {1}")

# get corresponding samples from APPS dataset

sample = apps_eval[1]

curr_res = run_test(sample, test=gen, debug=True)

print(curr_res)

My guess is that the OOM comes from

sum(list([int(i) for i in str(m)]))

since it calls sum on an explicit list() object that is itself built from another list, the one the comprehension creates inside it.
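One way to check this guess is to measure each expression separately. The sketch below is not from the thread: it uses Python's tracemalloc with a deliberately small x (the failing test input in the log above is 1000000000), and the helper name peak_bytes is made up for illustration. On CPython, the list(range(x + 1)) line would be expected to dominate, since it materializes x + 1 integers, while the digit-sum expression only touches a handful of characters.

```python
import tracemalloc


def peak_bytes(fn):
    """Return the peak number of bytes allocated while fn() runs (hypothetical helper)."""
    tracemalloc.start()
    try:
        fn()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return peak


x = 100_000  # small stand-in; the test input in the log is 1000000000
m = 0        # stand-in for the value the generation tries to read with next(l)

# The two candidate allocations from the generation, measured in isolation.
range_peak = peak_bytes(lambda: list(range(x + 1)))
digit_sum_peak = peak_bytes(lambda: sum(list([int(i) for i in str(m)])))

print(f"list(range(x + 1)):   {range_peak} bytes")
print(f"digit-sum expression: {digit_sum_peak} bytes")
```

If the same scaling holds at x = 1000000000 on a 64-bit build, the list's pointer array alone needs about 8 GB before next(l) even raises the TypeError seen in the log.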

Indeed the problem seems to be from this generation alone and not the fact that we're doing multiple evaluations.

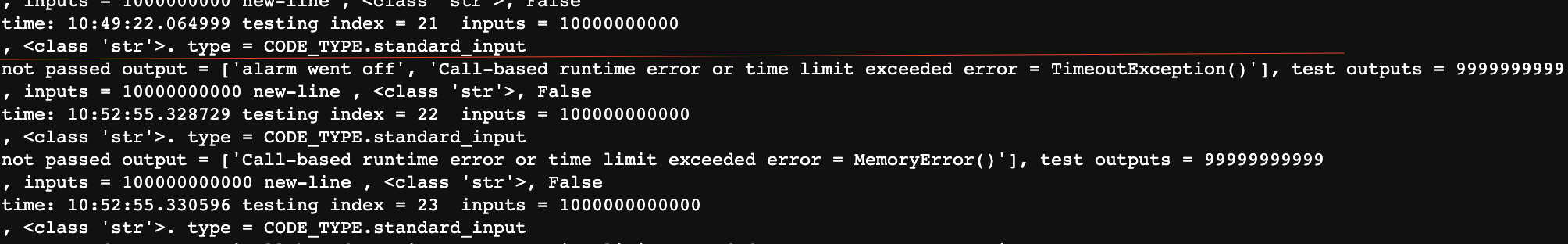

But I think you already have timeouts in the code, which should stop the code from running for so long (and hence stop the memory leak)? If it helps, the image above shows what debug mode looks like on a machine with a lot of memory: the leak starts after test index 21 and uses up to 350GB, then memory goes back down and the remaining tests run normally.

Maybe a better solution would be to add a timeout around run_test. That is also how HumanEval does it: the timeout (4 seconds) covers the whole program with all its tests. But APPS problems sometimes have many tests, so what do you think a good timeout threshold would be?

For security, I just realized we also have this in the HumanEval metric: https://huggingface.co/spaces/evaluate-metric/code_eval/blob/main/execute.py#L158. We can add a call to this function inside run_test as a base safety guard.

Thanks for the link! I'll rerun the test again with a much smaller memory limit. Hopefully that'll fix the issue.
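A minimal sketch of such a memory limit, assuming a Unix system where RLIMIT_AS is enforced (Linux; it is unavailable on Windows and may not be honored on macOS). It is not the code used in the thread: the helper run_with_memory_cap and the 1 GiB cap are illustrative choices. The program runs in a fresh interpreter whose address space is capped, so an oversized allocation should fail with MemoryError inside the child instead of exhausting the host.

```python
import resource
import subprocess
import sys


def run_with_memory_cap(source, max_bytes, timeout=10.0):
    """Run `source` in a child interpreter with a capped address space (hypothetical helper)."""

    def _limit():
        # Executed in the child just before exec, so only the child is restricted.
        resource.setrlimit(resource.RLIMIT_AS, (max_bytes, max_bytes))

    return subprocess.run(
        [sys.executable, "-c", source],
        preexec_fn=_limit,
        capture_output=True,
        text=True,
        timeout=timeout,
    )


# Stand-in for a generation that tries to allocate far more than the cap allows.
proc = run_with_memory_cap("data = bytearray(4 * 1024**3)", max_bytes=1024**3)
print("return code:", proc.returncode)
print(proc.stderr.strip().splitlines()[-1] if proc.stderr else "")
```

The parent process is unaffected by the limit, so the evaluation loop can record the failure and move on to the next test.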

I don't know if you've been able to run it or fix it. Mine gets OOM killed on example 20 when running locally. I imagine the HF servers have more RAM so not sure how helpful it is for me to debug the values locally though? Not sure how to best proceed here tbh.

Hi, sorry for not updating you earlier. The memory leak happens on this line (for both indexes 20 and 21): https://github.com/hendrycks/apps/blob/1b052764e10804ae79cf12c24801aaa818ea36ab/eval/testing_util.py#L303. The timeout there doesn't work; it seems to be overwritten by call_method, and I didn't manage to fix it. As a workaround, I added a global timeout for all tests: https://huggingface.co/spaces/codeparrot/apps_metric/blob/main/utils.py#L12

import json

import multiprocessing

from datasets import load_dataset

from testing_util import run_test

DATASET = "codeparrot/apps"

apps_eval = load_dataset(DATASET, split="test", difficulties=["all"])

def check_correctness(sample, generation, timeout, debug=True):
    def _temp_run(sample, generation, debug, result):
        result.append(run_test(sample, test=generation, debug=debug))

    manager = multiprocessing.Manager()
    result = manager.list()
    p = multiprocessing.Process(target=_temp_run, args=(sample, generation, debug, result))
    p.start()
    p.join(timeout=timeout + 1)
    if p.is_alive():
        p.kill()
    if not result:
        in_outs = json.loads(sample["input_output"])
        # consider that all tests failed
        result = [[-1 for i in range(len(in_outs["inputs"]))]]
        if debug:
            print(f"global timeout")
    return result[0]

generation = "\nx = int(input())\n\nl = list(range(x+1))\n\nm = next(l)\n\ns = sum(list([int(i) for i in str(m)]))\n\nif s > sum(list([int(i) for i in str(m)])) :\n\tm = next(l)\n\t\nprint(m)\n"

sample = apps_eval[1]

print(check_correctness(sample, generation, timeout=10, debug=False))

I still need to run some tests to make sure this doesn't heavily affect the scores, but I don't think it should, as 10 seconds seems like a generous threshold to me. Happy to open a PR if you want to add this to your repo.
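To see how a global timeout could move the scores, it helps to spell out how per-test results turn into the two accuracies. The sketch below assumes a convention the thread does not state: each problem has a list of per-test results in which only positive values count as a pass (-1 marks an error or timeout), avg_accuracy averages the per-problem pass fraction, and strict_accuracy is the share of problems with every test passed. The function name summarize and the sample data are made up.

```python
def summarize(results_per_problem):
    """Compute (avg_accuracy, strict_accuracy) from per-test results (assumed convention)."""
    fractions = []
    solved = 0
    for per_test in results_per_problem:
        passed = [r > 0 for r in per_test]  # True passes; False, -1 and -2 all fail
        fractions.append(sum(passed) / len(passed))
        solved += all(passed)
    n = len(results_per_problem)
    return sum(fractions) / n, solved / n


# Hypothetical data: problem 0 passes everything, problem 1 hit the global timeout.
results = [[True, True, True], [-1, -1, -1, -1]]
avg_accuracy, strict_accuracy = summarize(results)
print(avg_accuracy, strict_accuracy)
```

Under this convention, a partially correct solution that is cut off by the global timeout loses its whole pass fraction in avg_accuracy, while strict_accuracy only changes for solutions that would otherwise have passed every test.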

If you could open a PR after your testing, we'd be happy to accept it. :)

Closing as completed with new PR #16