vault

vault copied to clipboard

vault copied to clipboard

Database plugin MongoDB: writeconcern set by user is not applied in user deletion

Description When Vault deletes a user on a MongoDB replica, it always uses WriteConcern = 'majority' despite the setting in the connection configuration.

This is proven by this function:

dropUserCmd := &dropUserCommand{

Username: req.Username,

WriteConcern: writeconcern.New(writeconcern.WMajority()),

}

in \plugins\database\mongodb\mongodb.go line 213.

To Reproduce Steps to reproduce the behavior:

- Set up a replica set of MongoDB (also on Docker in a local instance)

- Configire in Vault a MongoDB connection with setting

WriteConcern = 1i.e. using

{

"W": 1,

}

- Change log level in MongoDB:

db.setLogLevel(5, 'command') - Generate a credential for MongoDB

- Revoke the token in Vault (or generate the credential with a short lived TTL and wait)

- Have a look to the MongoDB log file where you can see that the deletion is made by Vault with write concern "majority"

Expected behavior Also the deletion must be done using the write concern specified in the connection setting

Environment:

- Vault Server Version (retrieve with

vault status): 1.12.0

Hi everybody, any update on this issue? is everything clear? Do you already schedule when it will be fixed?

@enrico-e Hello! Thank you for bringing this to our attention. We are looking into this. Could you please provide the exact log line you are using(step 6)? We are trying to confirm something on our side. Thank you!

``Dear @Zlaticanin yes of course. On my instance the log looks like this:

{"t":{"$date":"2022-12-20T15:23:24.037+01:00"},"s":"I", "c":"COMMAND", "id":51803, "ctx":"conn2551632","msg":"Slow query","attr":{"type":"command","ns":"admin.$cmd","command":{"dropUser":"v-approle-mongo-XXX-XXX-XXX","writeConcern":{"w":"majority"},"lsid":{"id":{"$uuid":"d859da16-498d-48b1-8245-9d3a9259977f"}},"$clusterTime":{"clusterTime":{"$timestamp":{"t":1671546204,"i":43}},"signature":{"hash":{"$binary":{"base64":"I2BD4sv1nybqH5ttcQFd8RnVAt8=","subType":"0"}},"keyId":7121727823185707175}},"$db":"admin","$readPreference":{"mode":"primary"}},"numYields":0,"reslen":163,"locks":{"ParallelBatchWriterMode":{"acquireCount":{"r":2}},"ReplicationStateTransition":{"acquireCount":{"w":5}},"Global":{"acquireCount":{"r":3,"w":2}},"Database":{"acquireCount":{"w":2}},"Collection":{"acquireCount":{"w":2}},"Mutex":{"acquireCount":{"r":2}}},"flowControl":{"acquireCount":2,"timeAcquiringMicros":2},"writeConcern":{"w":"majority","wtimeout":0,"provenance":"clientSupplied"},"storage":{},"remote":"XXX.XXX.XXX.XXX:YYYY","protocol":"op_msg","durationMillis":7}}

As you may see from the above log: "writeConcern":{"w":"majority"}.

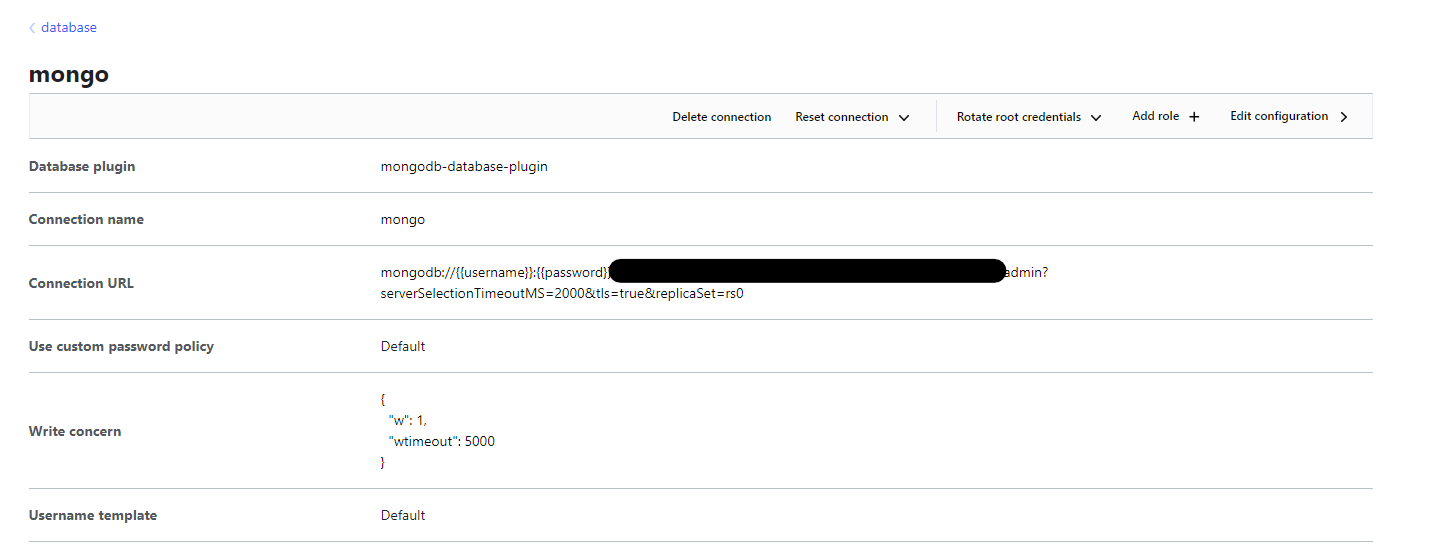

However, my definition of the MongoDB connection in Hashi Corp Vault is the following:

In addition to this, please find attached here below the MongoDB log about the creation of the same user of the deletion log above. As you may notice, here the writeConcern is correctly applied. Only deletion has this bug.

{"t":{"$date":"2022-12-20T15:23:22.459+01:00"},"s":"I", "c":"COMMAND", "id":51803, "ctx":"conn2551632","msg":"Slow query","attr":{"type":"command","ns":"admin.$cmd","command":{"createUser":"v-approle-mongo-XXX-XXX-XXX","pwd":"xxx","roles":[{"role":"readWrite","db":"euler"}],"lsid":{"id":{"$uuid":"d859da16-498d-48b1-8245-9d3a9259977f"}},"$clusterTime":{"clusterTime":{"$timestamp":{"t":1671546202,"i":83}},"signature":{"hash":{"$binary":{"base64":"mU1q0CzvWQItVJ14yqvzW254U8c=","subType":"0"}},"keyId":7121727823185707175}},"$db":"admin","$readPreference":{"mode":"primary"}},"numYields":0,"reslen":163,"locks":{"ParallelBatchWriterMode":{"acquireCount":{"r":2}},"ReplicationStateTransition":{"acquireCount":{"w":5}},"Global":{"acquireCount":{"r":3,"w":2}},"Database":{"acquireCount":{"w":2}},"Collection":{"acquireCount":{"w":2}},"Mutex":{"acquireCount":{"r":2}}},"flowControl":{"acquireCount":2,"timeAcquiringMicros":2},"writeConcern":{"w":1,"wtimeout":0,"provenance":"customDefault"},"storage":{},"remote":"XXX.XXX.XXX.XXX:YYYY","protocol":"op_msg","durationMillis":40}}

@enrico-e Thank you for the fast response. I am having issues reproducing this bug. So, I followed your instructions:

- Set up MongoDB replica set with local docker

- Configured a Vault connection including the

write_concern="{\"W\": 1}" - Changed the log level in MongoDB sh

- Generated a credential setting the

default_ttl="1m"and waiting for the cred to be revoked

Now, I tried looking for that log line on various MongoDB instances, but I can't find anything similar anywhere. Am I missing something?

Dear @Zlaticanin, could be due to the fact that probably in your environment the dropUser does not lead to a Slow Query. In this case, can you confirm that you can see a log like this:

{"t":{"$date":"2022-12-20T15:20:37.485+01:00"},"s":"D2", "c":"COMMAND", "id":21965, "ctx":"conn668145","msg":"About to run the command","attr":{"db":"admin","commandArgs":{"dropUser":"v-approle-mongo-XXX-XXX-XXX","writeConcern":{"w":"majority"},"lsid":{"id":{"$uuid":"baca4eb0-9206-46be-bc5b-6633e3870d25"}},"$clusterTime":{"clusterTime":{"$timestamp":{"t":1671546037,"i":72}},"signature":{"hash":{"$binary":{"base64":"8PtX9aamf3VcSZa46Y5wVbGHpF8=","subType":"0"}},"keyId":7121727823185707175}},"$db":"admin","$readPreference":{"mode":"primary"}}}}

Also in this log you can see {"w":"majority"} attribute.

I am looking forward to hearing from you. Best regards

Somehow I missed your reply today. I apologize. @enrico-e

This is how my logs look like after I set up everything following your steps and configure a Vault connection with the write_concern="{\"W\": 1}".

{"t":{"$date":"2022-12-21T20:35:03.213+00:00"},"s":"I", "c":"REPL", "id":21392, "ctx":"ReplCoord-234","msg":"New replica set config in use","attr":{"config":{"_id":"myReplicaSet","version":1,"term":14,"members":[{"_id":0,"host":"mongo1:27017","arbiterOnly":false,"buildIndexes":true,"hidden":false,"priority":1,"tags":{},"secondaryDelaySecs":0,"votes":1},{"_id":1,"host":"mongo2:27017","arbiterOnly":false,"buildIndexes":true,"hidden":false,"priority":1,"tags":{},"secondaryDelaySecs":0,"votes":1},{"_id":2,"host":"mongo3:27017","arbiterOnly":false,"buildIndexes":true,"hidden":false,"priority":1,"tags":{},"secondaryDelaySecs":0,"votes":1}],"protocolVersion":1,"writeConcernMajorityJournalDefault":true,"settings":{"chainingAllowed":true,"heartbeatIntervalMillis":2000,"heartbeatTimeoutSecs":10,"electionTimeoutMillis":10000,"catchUpTimeoutMillis":-1,"catchUpTakeoverDelayMillis":30000,"getLastErrorModes":{},"getLastErrorDefaults":{"w":1,"wtimeout":0},"replicaSetId":{"$oid":"63a0c9778b1fcb3697f763f7"}}}}}

Pretty different. Still trying to reproduce yours.

I think I am able to reproduce it now. This is how my logs look like now:

{"t":{"$date":"2022-12-21T23:58:20.295+00:00"},"s":"I", "c":"COMMAND", "id":51803, "ctx":"conn52","msg":"Slow query","attr":{"type":"command","ns":"admin.$cmd","appName":"mongosh 1.6.1","command":{"dropUser":"v-token-XXXXX-XXXXXXXXXXX-XXXXXX","writeConcern":{"w":"majority"},"lsid":{"id":{"$uuid":"503728f4-f4a7-45bc-bee5-cd9f36bf831b"}},"$clusterTime":{"clusterTime":{"$timestamp":{"t":1671667098,"i":1}},"signature":{"hash":{"$binary":{"base64":"AAAAAAAAAAAAAAAAAAAAAAAAAAA=","subType":"0"}},"keyId":0}},"$db":"admin","$readPreference":{"mode":"primaryPreferred"}},"numYields":0,"reslen":163,"locks":{"ParallelBatchWriterMode":{"acquireCount":{"r":2}},"FeatureCompatibilityVersion":{"acquireCount":{"r":3,"w":2}},"ReplicationStateTransition":{"acquireCount":{"w":5}},"Global":{"acquireCount":{"r":3,"w":2}},"Database":{"acquireCount":{"w":2}},"Collection":{"acquireCount":{"w":2}},"Mutex":{"acquireCount":{"r":2}}},"flowControl":{"acquireCount":2,"timeAcquiringMicros":1},"writeConcern":{"w":"majority","wtimeout":0,"provenance":"clientSupplied"},"storage":{},"remote":"172.24.0.1:62688","protocol":"op_msg","durationMillis":5}}

I will investigate and test this further tomorrow morning. Thank you for your help!

Dear @Zlaticanin thanks a lor for your continuous updating on this topic and for considering it in a serious manner.

I confirm that you finally got the right log from MongoDB! This log appears on the primary node of the MongoDB replica only if you set db.setLogLevel(5, 'command') (always on the primary).

As you can see, the dropUser command is raised by Vault using "writeConcern":{"w":"majority"}, despite your configuration on the Vault connection was write_concern="{\"W\": 1}".

Vault should not behave in this way: the writeConcern set on Vault must be applied to any query made on the database. Under some topology of the MongoDB replica set, when one node goes offline (e.g. due to maintenance) this Vault bug can cause the complete lock of the database cluster! This because the dropUser command will never receive the acknowledge from the replicaset and the Vault will not be able to create any other new user (being the collection locked by the dropUser command).

In addition, did you have a look at the source code of the Vault I mentioned on my initial post? I think the bug lies in \plugins\database\mongodb\mongodb.go on line 213:

dropUserCmd := &dropUserCommand{

Username: req.Username,

WriteConcern: writeconcern.New(writeconcern.WMajority()),

}

As you may see, the source code specify the writeconcern.WMajority(), and not the one set by the user.

I am really looking forward to hearing from you.

Hello @enrico-e ! The bug is now fixed (#18546). Thank you so much for catching this and your help!

Results from the log with the fix:

{"t":{"$date":"2022-12-23T21:26:35.031+00:00"},"s":"I", "c":"COMMAND", "id":51803, "ctx":"conn76","msg":"Slow query","attr":{"type":"command","ns":"admin.$cmd","appName":"mongosh 1.6.1","command":{"dropUser":"v-token-my-role-XXXXX-XXXXXXXXXXX-XXXXXX","writeConcern":{"w":1},"lsid":{"id":{"$uuid":"07037c7b-6701-4cbb-8547-4ee40ba97542"}},"$clusterTime":{"clusterTime":{"$timestamp":{"t":1671830794,"i":1}},"signature":{"hash":{"$binary":{"base64":"AAAAAAAAAAAAAAAAAAAAAAAAAAA=","subType":"0"}},"keyId":0}},"$db":"admin","$readPreference":{"mode":"primaryPreferred"}},"numYields":0,"reslen":163,"locks":{"ParallelBatchWriterMode":{"acquireCount":{"r":2}},"FeatureCompatibilityVersion":{"acquireCount":{"r":3,"w":2}},"ReplicationStateTransition":{"acquireCount":{"w":5}},"Global":{"acquireCount":{"r":3,"w":2}},"Database":{"acquireCount":{"w":2}},"Collection":{"acquireCount":{"w":2}},"Mutex":{"acquireCount":{"r":2}}},"flowControl":{"acquireCount":2,"timeAcquiringMicros":1},"writeConcern":{"w":1,"wtimeout":0,"provenance":"clientSupplied"},"storage":{},"remote":"172.20.0.1:56816","protocol":"op_msg","durationMillis":1}}

Dear @Zlaticanin thanks to you for your effort. I would really like to test it and put in production on my environment. Do you know when the fix will be available for downloading?

By the way Milena @Zlaticanin, can you figure our why deleting a user results always in a Slow Query?