packer-plugin-amazon

amazon-ebs builder timeout error for AWS API endpoints

This issue was originally opened by @ranjb as hashicorp/packer#6162. It was migrated here as a result of the Packer plugin split. The original body of the issue is below.

The amazon-ebs builder randomly times out when interacting with the AWS API. This can happen at any stage of the build: at the beginning, at the end after the AMI is created and Packer is trying to shut down the instance, or when attempting to tag the AMI, etc. The build script runs more than one Packer build in sequence; sometimes the first one builds fine and the second times out, and sometimes it fails right at the beginning of the first.

Example:

```
--> amazon-ebs: Error stopping instance: RequestError: send request failed
caused by: Post https://ec2.us-east-1.amazonaws.com/: dial tcp 54.239.28.176:443: i/o timeout
```

- Packer version 1.2.2

- Host platform: AWS Ubuntu 14.04 Docker container running on AWS via AWS CodeBuild

- Debug log using PACKER_LOG=1 https://gist.github.com/ranjb/cf8bd0d93242d4a935854acf2c4514de

This problem (or a similar one) has greatly increased in frequency for us in the past month. It's never been completely absent, but it had stayed at the level of a tolerable annoyance. Now it's a serious issue, as it's burning time with far too many failed builds from this one cause.

```
~$ packer --version
1.7.3
```

From the Jenkins invocation of Packer:

```
00:00:32.187 + ./bin/packer build -var ACCOUNT_ID=380631558036 -var aws_access_key=**** -var aws_secret_key=**** -var RELEASE_CYCLE=5.1.0 -var DEFAULT_USER=ubuntu -var APP_BRANCH=master -var APT_DEPENDENCIES=null -var BRANCH_NAME=develop -var MATURITY=dev -var SOURCE_AMI_VERSION=rc4 -var UBUNTU_VERSION=18.04 -var AWS_REGION=eu-central-1 -var SSH_PRIVATE_KEY_FILE=**** -var STOP_AFTER_COMPLETE=false -var INSTANCE_TYPE=m5a.large -var JFROG_CREDS=**** packer/ami_builder.json
00:00:32.457 amazon-ebs: output will be in this color.
00:00:32.457
00:00:33.416 ==> amazon-ebs: Prevalidating any provided VPC information
00:00:33.416 ==> amazon-ebs: Prevalidating AMI Name: bioprod-5.1.0-1627325582
00:00:43.480 ==> amazon-ebs: Error querying AMI: RequestError: send request failed
00:00:43.481 ==> amazon-ebs: caused by: Post "https://ec2.eu-central-1.amazonaws.com/": net/http: TLS handshake timeout
00:00:43.481 Build 'amazon-ebs' errored after 10 seconds 877 milliseconds: Error querying AMI: RequestError: send request failed
00:00:43.481 caused by: Post "https://ec2.eu-central-1.amazonaws.com/": net/http: TLS handshake timeout
00:00:43.481
```

It seems to happen most often when there are multiple concurrent builds in progress.

Our VPN's IP gateway has only a handful of IPs (OK, 2) to NAT all of us to the internet. That is something we're working on, but for now there seems to be no way to configure Packer's retries for the https://www.packer.io/docs/builders/amazon/ebs#ami-builder-ebs-backed builder.

In fact, there are zero occurrences of the word "retry" on that page. There are, however, a couple of timeout parameters:

- `ssh_timeout`
- `ssh_read_write_timeout`

Adding these, in addition to bumping `aws_polling` `max_attempts` from 50 (which already seems ridiculous) to 100:

```json
"aws_polling": {
  "delay_seconds": 20,
  "max_attempts": 100
},
"ssh_timeout": "15m",
"ssh_read_write_timeout": "30m",
```

doesn't appear to help. 2 out of 10 builds still fail with the same error.
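A rough check on what those `aws_polling` numbers buy, assuming the waiter makes at most `max_attempts` polls spaced `delay_seconds` apart and ignoring the time each request itself takes:

$$
t_{\max} \approx \text{delay\_seconds} \times \text{max\_attempts}
$$

$$
20\,\text{s} \times 50 = 1000\,\text{s} \approx 16.7\,\text{min}, \qquad 20\,\text{s} \times 100 = 2000\,\text{s} \approx 33.3\,\text{min}
$$

Both budgets dwarf the failure in the Jenkins log above, which came about 10 seconds after the previous step (00:00:33.416 to 00:00:43.480) with `net/http: TLS handshake timeout`. That message is Go's HTTP client giving up on a single TLS handshake, and Go's `http.DefaultTransport` uses a 10-second handshake timeout, so the timing is consistent with the polling settings simply not governing this request. That is an inference from the log, not something confirmed in the thread.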

Is there no reporting on the number of retries that actually took place? Some output like:

```
retry number XXX in progress...
```

would be super appreciated, as one would then know whether retries were indeed the problem, as opposed to randomly increasing every retry/timeout threshold that seems relevant.
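Short of such output from Packer itself, a coarse version of it can be produced outside Packer by retrying the whole build. A minimal sketch, assuming bash; the `retry` function, the attempt limit, and the delay are hypothetical choices, not Packer settings:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Packer): run a command until it succeeds,
# printing a line before every retry so the number of retries is visible.
# Usage: retry MAX_TRIES DELAY_SECONDS command [args...]
retry() {
  local max_tries=$1 delay=$2
  shift 2
  local attempt=1
  until "$@"; do
    if (( attempt >= max_tries )); then
      echo "giving up after ${attempt} attempts: $*" >&2
      return 1
    fi
    echo "retry number ${attempt} in progress..." >&2
    sleep "$delay"
    attempt=$((attempt + 1))
  done
}

# Example (template path taken from the Jenkins log above; -var flags omitted):
#   retry 3 60 ./bin/packer build packer/ami_builder.json
```

Retrying the whole build is coarse: it repeats any steps that had already succeeded, so it hides the symptom rather than exposing which AWS call failed.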

Already filed a ticket with AWS and they tell me that there has been NO API throttling on their end.

The specific cause may be entirely within our corporate network but the lack of control/visibility within packer is definitely a hindrance.

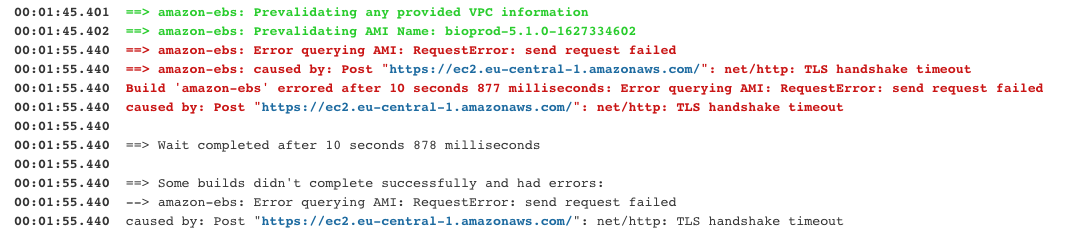

Screenshot if above error in case the highlighting helps:

Any updates on this? I just started using packer and it seems even the tutorial in the docs is broken.

Hey Bedge,

I know you ran into this issue with Jenkins and Packer around 2 years back, but were you ever able to resolve the issue? We are running into the exact same error with Packer builds failing. The error in Packer really does not tell us much.

Hi akshtray, yeah, I remember banging my head against a wall on this. I looked at every layer that had some level of timeout/retry control. I don't know what finally did it, or even whether the problem just went away on its own. It was most infuriating. It took a while to find this... I'm many projects past this one now :)

Packer:

```json
"aws_polling": {
  "delay_seconds": 20,
  "max_attempts": 50
},
```

Calling environment:

```shell
# help with "Post https://iam.amazonaws.com/: net/http: TLS handshake timeout"
export AWS_STS_REGIONAL_ENDPOINTS=regional
export AWS_MAX_ATTEMPTS=400
export AWS_RETRY_MODE=standard
export AWS_POLL_DELAY_SECONDS=15
export AWS_METADATA_SERVICE_NUM_ATTEMPTS=10 # Guessing
export AWS_METADATA_SERVICE_TIMEOUT # Default = 1; no value assigned here, so this only re-exports an existing value
```

IIRC, setting the above in the environment that calls Packer is what finally did the trick.
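If exporting these into the whole CI shell is undesirable, the same settings can be scoped to a single Packer invocation with bash's per-command assignment prefix. A minimal sketch: the function name is hypothetical, the variable names and values are copied from the comment above, and whether each variable is actually honored depends on the Packer plugin and AWS SDK versions in use. `AWS_METADATA_SERVICE_TIMEOUT` is left out because the original line exports it without assigning a value, so it only matters if the variable already has a value in that shell.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run one command with the retry-related AWS variables
# from the comment above, without exporting them into the calling shell.
with_aws_retry_env() {
  AWS_STS_REGIONAL_ENDPOINTS=regional \
  AWS_MAX_ATTEMPTS=400 \
  AWS_RETRY_MODE=standard \
  AWS_POLL_DELAY_SECONDS=15 \
  AWS_METADATA_SERVICE_NUM_ATTEMPTS=10 \
    "$@"
}

# Example (template path from the Jenkins log earlier in the thread):
#   with_aws_retry_env ./bin/packer build packer/ami_builder.json
```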

Hope that helps.