Incorrect default host address with multiple host_network entries

Nomad version

Nomad v1.4.2 (039d70eeef5888164cad05e7ddfd8b6f8220923b)

(and problem still present with Nomad v1.4.3 (f464aca721d222ae9c1f3df643b3c3aaa20e2da7))

Operating system and Environment details

Ubuntu 22.04.1 LTS on amd64

Issue

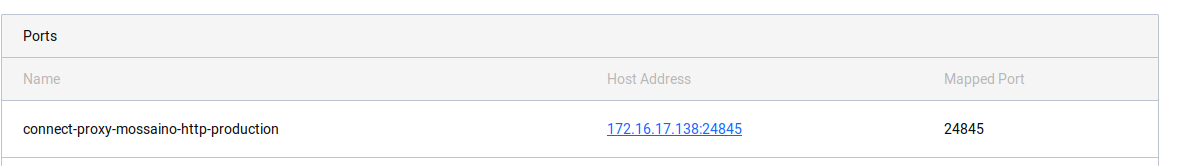

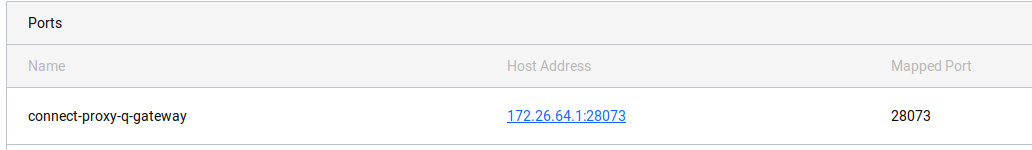

The allocated HostIP (also called "Host Address") for the connect-proxy port is incorrectly set when host_network "default" and a host_network "something" are defined.

Reproduction steps

Expected Result

A Host Address that's on one of the advertise addresses, e.g. 172.18.1.102:24845

Actual Result

A Host Address that's one of the internal addresses of the host_network "default", e.g. 172.26.64.1:23241

Job file (if appropriate)

    job "q-gateway" {
      datacenters = [ "dc1" ]
      type = "service"

      group "haproxy" {
        network {
          mode = "bridge"
        }

        service {
          name = "q-gateway"
          connect {
            sidecar_service {}
          }
        }
      }

      # And the rest just like normal, using docker
    }

Nomad Client config

    data_dir = "/opt/nomad/data"
    bind_addr = "0.0.0.0"
    region = "global"
    datacenter = "dc1"

    advertise {
      http = "172.18.1.102"
      rpc = "172.18.1.102"
      serf = "172.18.1.102"
    }

    server {
      enabled = false
    }

    client {
      enabled = true
      servers = ["nomad01"]

      # Known issues. See https://github.com/hashicorp/nomad/issues/10915
      # By adding these two networks, the issues start:
      host_network "default" {
        cidr = "172.26.64.0/24"
      }

      host_network "q-gateway" {
        cidr = "172.18.1.102/32"
      }
    }

    acl {
      enabled = true
      token_ttl = "30s"
      policy_ttl = "60s"
    }

    consul {
      token = "redacted"
    }

    vault {
      enabled = true
      address = "https://redacted"
      create_from_role = "nomad-cluster"
      allow_unauthenticated = false
      token = "redacted"
    }

    tls {
      http = true
      rpc = true
      ca_file = "/opt/nomad/agent-certs/ca.crt"
      cert_file = "/opt/nomad/agent-certs/agent.crt"
      key_file = "/opt/nomad/agent-certs/agent.key"
      verify_server_hostname = true
      verify_https_client = true
    }

I have one cluster where it works as expected, and a different cluster where it's just not working - both with identical configs. I've run out of ideas where the issue could be. Manually defining host_network "default" does not cause any issues, but as soon as I add another host_network, the connect proxy gets assigned the address of the host_network "default" ...

This causes issues when a different host (B) wants to connect to this service, because (presumably) it will try to access the HostIP, but since that is a local IP of host (A) it just can't connect (Consul Connect - delayed connect error: 111).

Hi @EtienneBruines!

If we look at the jobspec docs on Host Networks:

> In some cases a port should only be allocated to a specific interface or address on the host. The host_network field of a port will constrain port allocation to a single named host network. If host_network is set for a port, Nomad will schedule the allocations on a node which has defined a host_network with the given name. If not set the "default" host network is used which is commonly the address with a default route associated with it.

Your jobspec doesn't set a host_network for a port, which means it's supposed to use "the address with a default route associated with it." So I suspect the reason you're seeing differences in behavior is that hosts have different route tables. If you check the IPs for ip route and look for the default entry, are those the IPs we're seeing here?

That being said, you're saying the value changes if we add the default network. There may be an interaction here around using the name "default" for a host network, but I can't quite reproduce the behavior you're seeing. It may be that it only happens with a particular combination around the ip route, so let's start there.

Thank you for your reply @tgross

On the cluster that "just works":

    default via 172.16.17.254 dev ens224 proto static
    172.16.17.0/24 dev ens224 proto kernel scope link src 172.16.17.136
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
    172.26.64.0/20 dev nomad proto kernel scope link src 172.26.64.1

On the cluster that doesn't:

    default via 172.18.1.254 dev ens192 proto static
    172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
    172.18.1.0/24 dev ens192 proto kernel scope link src 172.18.1.102
    172.26.64.0/20 dev nomad proto kernel scope link src 172.26.64.1

Other than the order of entries, I don't see any real differences (they're in different subnets). The default route does point to the correct IP in both instances (an IP in the ens-network interface). And I imagine the order might be sorted numerically, so that might also be just fine.
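The check suggested above (find the default entry in ip route, then look up the source address of that device) can be scripted so both clusters are compared the same way. This is only a minimal sketch in Python: it assumes the plain-text ip route format pasted above, and the helper name default_route_source is made up for illustration.

```python
def default_route_source(ip_route_output: str):
    """Return (device, source address) for the default route, or None."""
    lines = [line.split() for line in ip_route_output.splitlines() if line.strip()]

    # The default entry names the outgoing device after the "dev" keyword.
    device = None
    for fields in lines:
        if fields[0] == "default" and "dev" in fields:
            device = fields[fields.index("dev") + 1]
            break
    if device is None:
        return None

    # The link route for that device carries its source address after "src".
    for fields in lines:
        if fields[0] != "default" and "dev" in fields and "src" in fields:
            if fields[fields.index("dev") + 1] == device:
                return device, fields[fields.index("src") + 1]
    return device, None


# Route table of the cluster that does not work, copied from above.
FAILING_CLUSTER = """\
default via 172.18.1.254 dev ens192 proto static
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown
172.18.1.0/24 dev ens192 proto kernel scope link src 172.18.1.102
172.26.64.0/20 dev nomad proto kernel scope link src 172.26.64.1
"""

print(default_route_source(FAILING_CLUSTER))  # ('ens192', '172.18.1.102')
```

On this input the default route resolves to ens192 with source 172.18.1.102, which is the address expected as Host Address, so the route table alone does not explain the 172.26.64.1 result.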

Looking at the jobspec docs, I would almost imagine the default host_network to look something like this:

    client {
      host_network "default" {
        cidr = "172.18.1.0/24"
      }
    }

But two years ago I painstakingly found that the default host_network is actually the nomad network, e.g.:

    4: nomad: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether aa:b1:34:19:13:eb brd ff:ff:ff:ff:ff:ff
        inet 172.26.64.1/20 brd 172.26.79.255 scope global nomad
           valid_lft forever preferred_lft forever
        inet6 fe80::a8b1:34ff:fe19:13eb/64 scope link
           valid_lft forever preferred_lft forever

(And thus that is what I defined.)

Note that if I do not manually define host_network "default" (next to the other host_network that I defined), normal jobs that don't specify a host_network will fail to allocate.

Okay, the theme/consul/connect label can probably be removed.

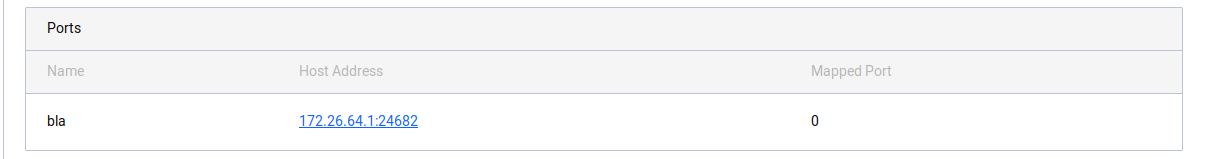

The job file I used to reproduce, without consul:

    job "dummy-service" {
      datacenters = [ "dc1" ]
      namespace = "default"
      type = "service"

      group "redirect-service" {
        network {
          mode = "bridge"
          port "bla" {}
        }

        task "docker-web-redirect" {
          driver = "docker"

          config {
            image = "morbz/docker-web-redirect:latest"
          }

          template {
            data = <<EOF
    REDIRECT_TARGET=https://google.com
    EOF
            destination = "local/.env"
            env = true
          }
        }
      }
    }

Logs

Dec 05 09:26:24 hashivault02-del nomad[2748317]: 2022-12-05T09:26:14.261Z [DEBUG] client.fingerprint_mgr.network: link speed detected: interface=ens192 mbits=10000

Dec 05 09:26:24 hashivault02-del nomad[2748317]: 2022-12-05T09:26:14.261Z [DEBUG] client.fingerprint_mgr.network: detected interface IP: interface=ens192 IP=172.18.1.102

It seems like it correctly identified the default network interface (with name ens192).

When allocating, this log appears:

Dec 05 09:58:31 hashivault02-del nomad[2751918]: 2022-12-05T09:58:31.454Z [DEBUG] client.alloc_runner.runner_hook: received result from CNI: alloc_id=599d54db-7074-a4cb-a383-1576171279aa result="{\"Interfaces\":{\"eth0\":{\"IPConfigs\":[{\"IP\":\"172.26.64.68\",\"Gateway\":\"172.26.64.1\"}],\"Mac\":\"aa:fc:29:23:b4:4e\",\"Sandbox\":\"/var/run/docker/netns/31a4fc545b69\"},\"nomad\":{\"IPConfigs\":null,\"Mac\":\"36:43:ac:0b:fc:94\",\"Sandbox\":\"\"},\"vethf42c8414\":{\"IPConfigs\":null,\"Mac\":\"82:c1:42:28:8a:da\",\"Sandbox\":\"\"}},\"DNS\":[{}],\"Routes\":[{\"dst\":\"0.0.0.0/0\"}]}"
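To make the CNI result easier to read, the escaped JSON can be decoded and reduced to the addresses that live inside the allocation's network namespace. A rough sketch in Python follows; the payload is the one from the log line above, unescaped and trimmed to its Interfaces section, and sandbox_addresses is a hypothetical helper name. It only shows that the result describes the allocation's own address on the nomad bridge (172.26.64.0/20 according to ip route), not the host address that the port gets mapped on.

```python
import ipaddress
import json

# Result payload from the log line above, unescaped and trimmed to "Interfaces".
CNI_RESULT = json.loads("""
{"Interfaces": {
  "eth0": {"IPConfigs": [{"IP": "172.26.64.68", "Gateway": "172.26.64.1"}],
           "Mac": "aa:fc:29:23:b4:4e",
           "Sandbox": "/var/run/docker/netns/31a4fc545b69"},
  "nomad": {"IPConfigs": null, "Mac": "36:43:ac:0b:fc:94", "Sandbox": ""},
  "vethf42c8414": {"IPConfigs": null, "Mac": "82:c1:42:28:8a:da", "Sandbox": ""}
}}
""")


def sandbox_addresses(result):
    """Return the IPs of interfaces that sit inside a network namespace."""
    addresses = []
    for interface in result["Interfaces"].values():
        if interface["Sandbox"]:
            # IPConfigs is null for host-side interfaces, hence the fallback.
            for config in interface["IPConfigs"] or []:
                addresses.append(config["IP"])
    return addresses


# Subnet of the nomad bridge, taken from the `ip route` output earlier.
BRIDGE_SUBNET = ipaddress.ip_network("172.26.64.0/20")

for address in sandbox_addresses(CNI_RESULT):
    print(address, ipaddress.ip_address(address) in BRIDGE_SUBNET)
```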

Debugging journey

No idea where to even start debugging, so I just started here: https://github.com/hashicorp/nomad/blob/2d4611a00cd22ccd0590c14d0a39c051e5764f59/client/allocrunner/networking_cni.go#L102

The alloc.AllocatedResources.Shared.Ports in this scope contains the reference to 172.26.64.1, whilst alloc.AllocatedResources.Shared.Networks contains a reference to 172.18.1.102. This makes Nomad request a CNI-Portmap to HostIP 172.26.64.1. Unfortunately, I cannot figure out where alloc.AllocatedResources.Shared.Ports gets its value. It seems to be the only reference to 172.26.64.1 in the structs.Allocation.

I am able to compile and run that custom version of Nomad on the "not working" cluster, so if you need any information at all - I can probably add a debug log statement for it.

Debugging journey part 2

On the scheduling node (server), there is something called a NetworkIndex that is used by the BinPackIterator to AssignPorts. The HostNetwork for this NetworkIndex has differing values:

- manually specifying host_network default: {ipv4 default 172.26.64.1 } {bla 20123 80 default}
- not specifying any host_network: {ipv4 default 172.18.1.102 } {bla 20123 80 default}

Debugging journey part 3

While fingerprinting the client, what I configure in my nomad.hcl makes a difference:

- Not defining any host_network: {host ens192 00:50:56:8b:03:ac 10000 [{ipv4 default 172.18.1.102 }]}{host docker0 02:42:05:6d:37:52 1000 []}{host nomad 36:43:ac:0b:fc:94 10000 []}
- Defining host_network with specific cidr of 172.26.64.0/24: {host ens192 00:50:56:8b:03:ac 10000 [{ipv4 q-gateway 172.18.1.102 }]}{host docker0 02:42:05:6d:37:52 1000 []}{host nomad 36:43:ac:0b:fc:94 10000 [{ipv4 default 172.26.64.1 }]}
- Defining host_network without specifying cidr: {host ens192 00:50:56:8b:03:ac 10000 [{ipv4 default 172.18.1.102 }]}{host docker0 02:42:05:6d:37:52 1000 [{ipv4 default 172.17.0.1 }]}{host nomad 36:43:ac:0b:fc:94 10000 [{ipv4 default 172.26.64.1 }]}

When allocating a port, when I manually specify the host_network (in the client config) without a cidr, it also allocates it on the loopback address.

Only when I do not specify any host_network does it allocate the port on the correct 172.18.1.102 address.

This made me think: what if I define 172.18.1.102/32 as the default cidr? I just confirmed that the allocation then still gets an internal IP of e.g. 172.26.64.126/20 (which is good, because that makes listening on any port private to the specific Nomad group), and a port is successfully allocated on 172.18.1.102.
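The fingerprint results above are consistent with a simple rule: an interface address is labelled with every host_network whose cidr contains it. The sketch below models just that rule in Python using the addresses from this thread. It is a simplified model and not Nomad's actual implementation; alias_addresses is a made-up name, treating a missing cidr as "matches everything" is an assumption based on the third case above, and the implicit "default" that appears when no host_network is defined at all is not modelled.

```python
import ipaddress

# Interface addresses as they appear in the fingerprint output above.
INTERFACE_ADDRESSES = {
    "ens192": "172.18.1.102",
    "docker0": "172.17.0.1",
    "nomad": "172.26.64.1",
}


def alias_addresses(host_networks):
    """Map each interface to the host_network names whose cidr contains its address.

    host_networks maps a name to a cidr string, or to None when no cidr is set
    (assumed here to match every address).
    """
    result = {}
    for interface, address in INTERFACE_ADDRESSES.items():
        ip = ipaddress.ip_address(address)
        result[interface] = [
            name
            for name, cidr in host_networks.items()
            if cidr is None or ip in ipaddress.ip_network(cidr, strict=False)
        ]
    return result


# Configuration from the original report: "default" lands on the nomad bridge.
print(alias_addresses({"default": "172.26.64.0/24", "q-gateway": "172.18.1.102/32"}))

# Configuration that ended up working: "default" lands on ens192.
print(alias_addresses({"default": "172.18.1.102/32"}))
```

Under this model the first configuration labels 172.26.64.1 as "default", which matches the Host Address that ports without an explicit host_network were given, while the second moves "default" onto 172.18.1.102.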

Hi @EtienneBruines, sorry for the delay. The symptoms you're describing here read to me like they're entirely on the client. I'm wondering if you noticed this sort of thing appear after a client had its configuration reloaded? There might be somewhere we've missed a copy of the host networks struct. I'll take a look at that.

I have no idea why on one cluster it works flawlessly when I define

    host_network "default" {
      cidr = "172.26.64.0/24"
    }

And when I do the same on the other cluster it does not work the same.

However, when I (on that cluster that gave me problems) define this network, it does work as expected:

    host_network "default" {
      cidr = "172.18.1.102/32"
    }

Every allocation gets a nice internal IP, and only the requested ports are forwarded to that internal IP. Great!

Summary

Nomad behavior seems correct. host_network "default" has nothing to do with the nomad network bridge. At least in current versions of Nomad.

As to why I have one cluster that "works" like I wanted it to, despite incorrectly defining cidr = "172.26.64.0/24" I still do not know. And since I cannot modify the config of that server that works with cidr = "172.26.64.0/24", there's no real way to find out if it would have worked just as much had I defined cidr = "172.18.1.102/32".

@tgross I think we might be able to close this issue. If someone else encounters weird problems like this again, they can open a new issue and link to this one.

As suggested, I'm going to close this issue out. Hopefully we don't encounter it again but if we do we'll reopen and revisit.