rust-bert

Unable to compile the library on linux server

I have rust-bert installed on my local Linux machine and I've been developing an application with it. I wanted to deploy it to a VM so the app can run online, but I'm having some problems.

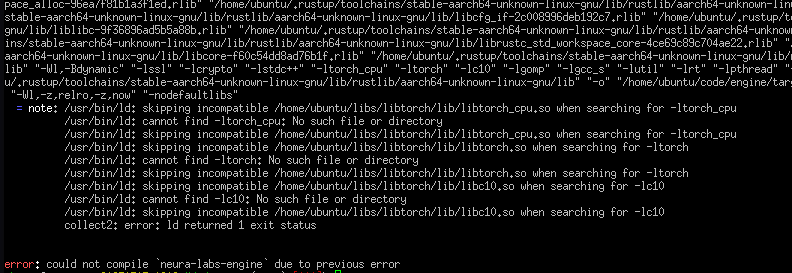

I checked many times and my paths are not the problem. The C linker can find the libtorch headers, but for some reason both the libtorch_cpu.so and libc10.so files are being skipped even though they exist on my system.

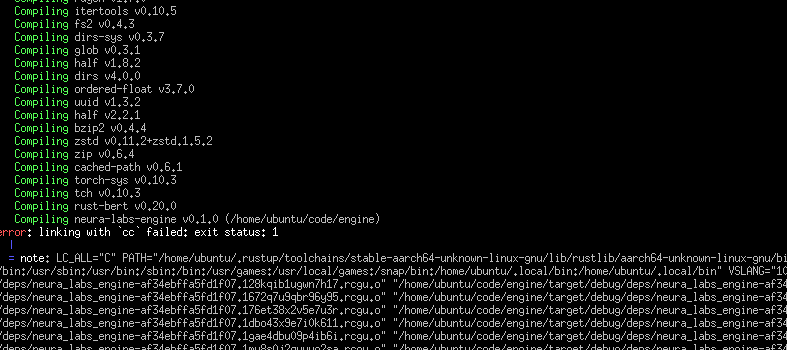

and at the end, I get this error message:

I'm not sure what to do. I'm using libtorch version 1.13.1 just like the docs said, and again, all of this works locally. Could I be missing some C library on my VM?

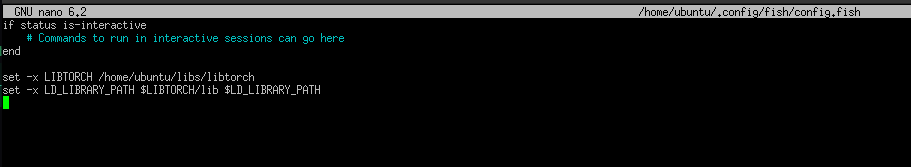

Here is my config file for my PATH variables.

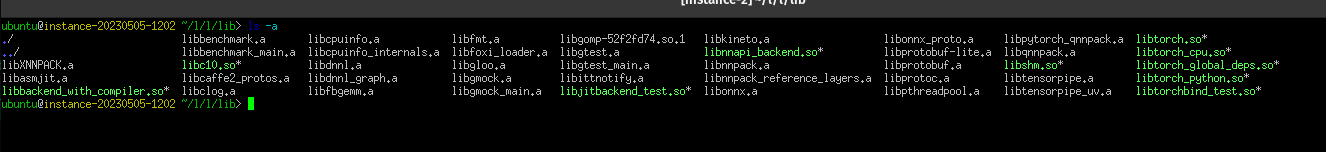

When I look in my libtorch folder, I can see the files it's saying it can't use.

I'm not really sure what's going on here. To make sure the error was not caused by the path, I changed the path to something false, and then I get a different error:

cargo:warning=libtch/torch_api.cpp:1:9: fatal error: torch/csrc/autograd/engine.h: No such file or directory

cargo:warning= 1 | #include<torch/csrc/autograd/engine.h>
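The path check described above can also be done before invoking cargo. The following is a minimal sketch, assuming the usual libtorch layout with headers under `include/` and shared libraries under `lib/`. The function name `missing_libtorch_files` and the list of expected files are hypothetical and are not part of rust-bert or tch-rs.

```rust
use std::path::{Path, PathBuf};

/// Files expected under the libtorch root. This layout is an assumption
/// based on the header and library names mentioned in this thread.
const EXPECTED: [&str; 3] = [
    "include/torch/csrc/autograd/engine.h",
    "lib/libtorch_cpu.so",
    "lib/libc10.so",
];

/// Returns every expected file that does not exist under `root`.
/// An empty result means the directory layout looks plausible; it says
/// nothing about whether the libraries are usable by the linker.
fn missing_libtorch_files(root: &Path) -> Vec<PathBuf> {
    EXPECTED
        .iter()
        .map(|rel| root.join(rel))
        .filter(|path| !path.is_file())
        .collect()
}
```

Calling `missing_libtorch_files` with the directory that the libtorch path variable points to should return an empty list when the path is right. If the list is empty and the build still fails, the path itself is probably not the cause.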

If anyone knows how to help, please let me know, thanks!

Oh and I'm using the latest version from crates.io.

Update: I switched to the git repo to see if any updates will change the problem.

I'm now using PyTorch v2.0.0 and rust-bert v0.20.1-alpha. I'm still getting the same results. The build works on my local machine, but it fails to compile on the VM.

One thought after debugging: maybe because I'm running this on a 3-core VPS, the CPU binaries are not supported for the model?

Nope, I downgraded my system to a 2-core VPS and it's still the same error. I don't know what to do about this. If anyone knows, please let me know, thanks!

@guillaume-be I don't know too much about the limitations of the PyTorch cpp API but is it possible that virtual CPUs are not supported by the library? Is this why on my local machine everything builds fine and runs but on a cloud VM it crashes even with the bindings installed? Because the CPU is not compatible?

This is just a thought, after thinking about the problem.

Hello @ThatGuyJamal ,

Unfortunately, I am not sure whether there are limitations tied to vCPUs for the libtorch C++ library. You may be able to get more support on the repository of the torch bindings dependency, tch-rs, or on the PyTorch forum directly.

Okay thanks, I will check on these forums. Another thought was that maybe Arm CPUs are not supported by PyTorch. I will ask them on the forums.

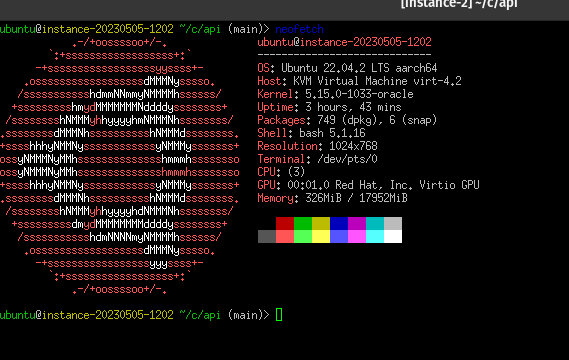

My VM System:

Architecture: aarch64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 3

Vendor ID: ARM

Model name: Neoverse-N1

Model: 1

Thread(s) per core: 1

Core(s) per socket: 3

Socket(s): 1

Stepping: r3p1

BogoMIPS: 50.00
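Since the VM reports `aarch64`, one thing that could be ruled out is whether the libtorch `.so` files were built for a different architecture than the machine doing the linking. This is only a guess at the cause, not a confirmed diagnosis. The sketch below reads the `e_machine` field from an ELF header, which sits at byte offset 18 per the ELF format. The function names are hypothetical, and only the x86_64 and aarch64 codes are mapped.

```rust
use std::fs::File;
use std::io::{self, Read};
use std::path::Path;

// e_machine values defined by the ELF specification.
const EM_X86_64: u16 = 62;
const EM_AARCH64: u16 = 183;

/// Extracts `e_machine` from the first bytes of an ELF file.
/// Returns `None` if the bytes do not look like an ELF header.
fn elf_machine_from_header(header: &[u8]) -> Option<u16> {
    if header.len() < 20 || &header[..4] != b"\x7fELF" {
        return None;
    }
    let raw = [header[18], header[19]];
    // Byte 5 (EI_DATA) gives the byte order: 1 = little endian, 2 = big endian.
    match header[5] {
        1 => Some(u16::from_le_bytes(raw)),
        2 => Some(u16::from_be_bytes(raw)),
        _ => None,
    }
}

/// Maps a machine code to the spelling used by `std::env::consts::ARCH`.
fn machine_name(code: u16) -> &'static str {
    match code {
        EM_X86_64 => "x86_64",
        EM_AARCH64 => "aarch64",
        _ => "other",
    }
}

/// Reads the ELF header of the file at `path` and returns its machine code.
fn elf_machine(path: &Path) -> io::Result<Option<u16>> {
    let mut header = [0u8; 20];
    File::open(path)?.read_exact(&mut header)?;
    Ok(elf_machine_from_header(&header))
}
```

Running `elf_machine` on `libtorch_cpu.so` and `libc10.so` and comparing `machine_name` of the result with `std::env::consts::ARCH` on the VM would show whether the libraries and the host agree. If they differ, that would be consistent with the linker skipping files that exist on disk.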