RetryingCall leaks entries in the writeBuffer if the call is long lived

I have a retryable bidirectional stream that is long lived and leaks entries in the writeBuffer. There does not appear to be any code to recycle the writeBuffer so it seems to grow indefinitely.

I have published version 1.8.11 with a change to this behavior so it will no longer accumulate those "FREED" objects. Please try it out.

I have published version 1.8.11 with a change to this behavior so it will no longer accumulate those "FREED" objects. Please try it out.

I will give this a go as soon as I can and report back :)

Hello @murgatroid99

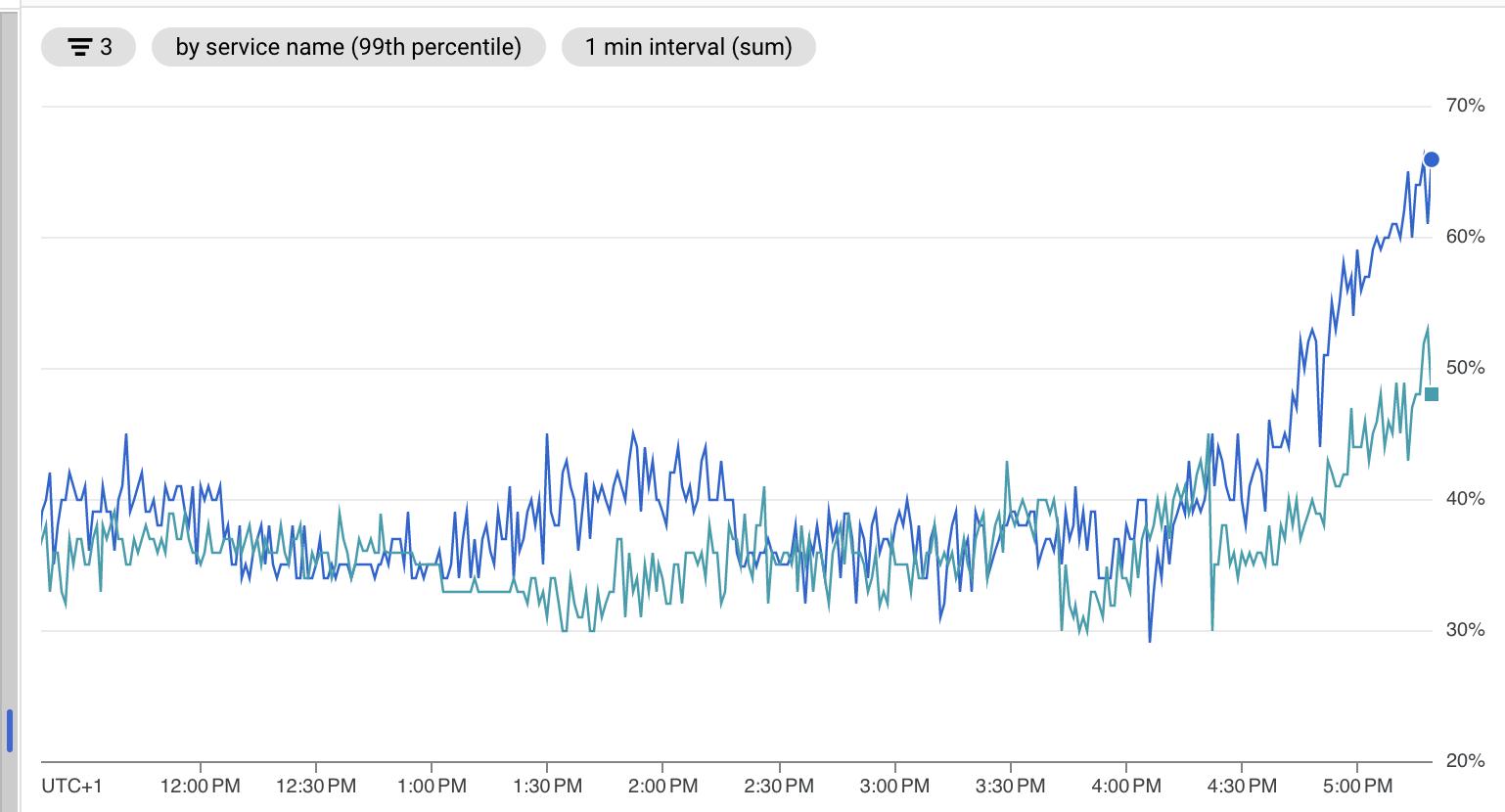

We tried the new version 1.8.11 today and we observe the same memory leak as 1.8.10. See attached screenshot, before 4pm its 1.7.3, after 4pm it's v1.8.11

I don't know if it is the exactly same issue as mentioned here because I cannot easily make a memory dump. We do not use the grpc-js library directly, but we do use subscription to pub/sub server and we communicate with cloud task using officiel Google node JS library which i assume use this grpc module.

Anyway we will stick to 1.7.3 for now.

Hope that helps

Thank you for the information. If someone can provide more details, I can investigate.

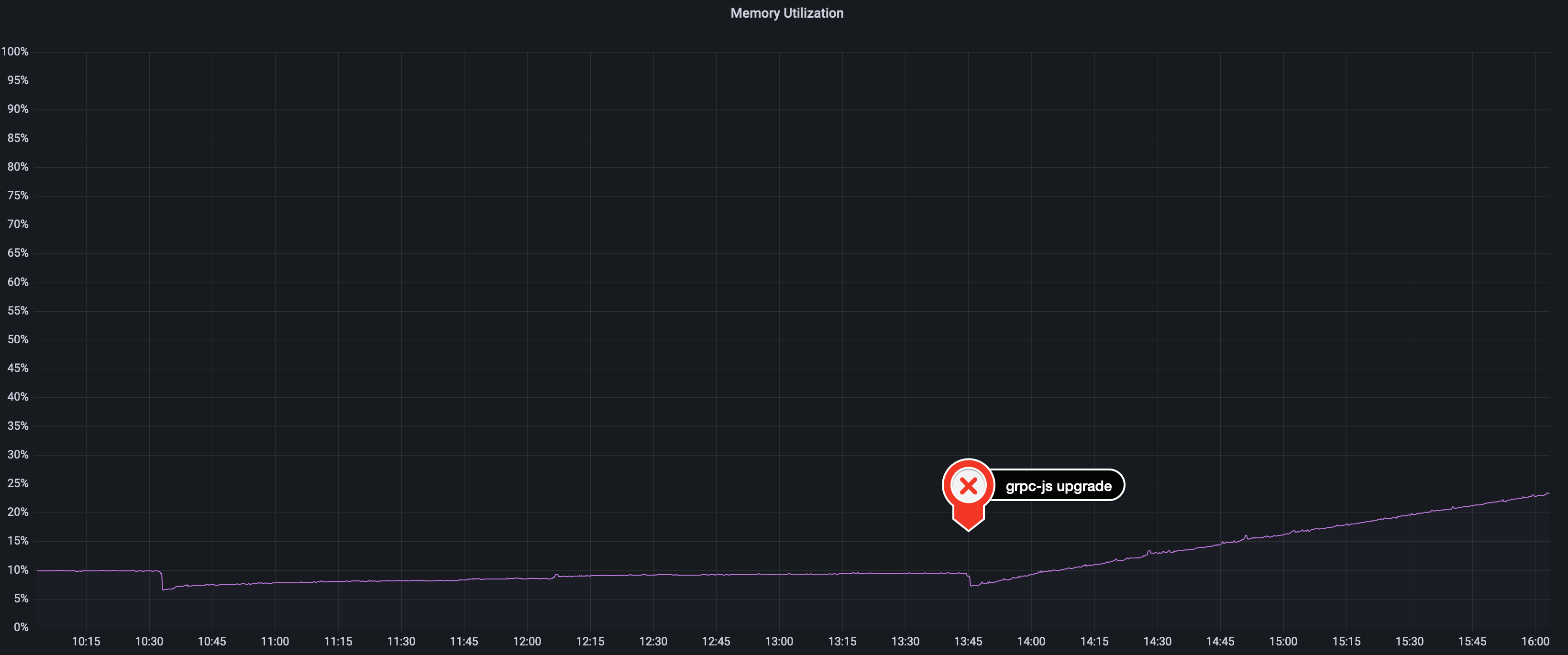

We're unfortunately seeing a similar problem on 1.8.11 too, memory usage slowly gets to a point where our services are being OOM killed:

This is with very minimal traffic (< 10RPS).

We're doing a simple unary call, without any explicit retry logic.

Here's how we instantiate the client:

return new OurServiceClient(endpoint, credentials.createInsecure(), {

'grpc.keepalive_time_ms': 2000,

'grpc.keepalive_permit_without_calls': 1,

});

And how we call it:

return async (params: Params): Promise<Response | undefined> => {

const metadata = new Metadata();

metadata.set('requestId', requestId);

metadata.set('authentication', await getAuthTokenFn());

return new Promise((resolve, reject) => {

client.ourRpcMethod(

params,

metadata,

{

deadline: Date.now() + timeout,

},

(err, value) => {

if (err) {

return reject(err);

}

return resolve(value);

},

);

});

};

The server is running with:

const server = new Server({

'grpc.keepalive_permit_without_calls': 1,

'grpc.max_connection_age_ms': 5000,

});

And here's the (redacted) protobuf:

syntax = "proto3";

service OurService {

rpc GetServiceData (Request) returns (Response) {}

}

message Request {

string id = 1;

string name = 2;

}

message Response {

bool enabled = 1;

some.Custom.Data some_custom_data = 2;

string name = 3;

uint64 created_at_timestamp = 4;

}

The endpoint is an internal Kubernetes service DNS endpoint (e.g. our-service.namespace.svc.cluster.local).

We can reproduce this very consistently unfortunately and have rolled the package version back for now.

@chinthakagodawita if you're able to take a heapdump this should be fairly straightforward to debug. Obviously this will contain sensitive information of your application so if you're not confident looking at the heapdump yourself I could look at this privately for you if you were willing to share it (I need to be confident this doesn't leak so I don't mind investing some time into this). Also note that I was also not using any explicit retry logic but it seems to be enabled by default, which is quite a dangerous change to apply by default IMO but this might be the expected default behaviour of gRPC. I disabled retries and the channelz feature (in both the client and server) and I'm seeing better behaviour. I was suspicious of the channelz feature leaking.

I did this by setting client options:

'grpc.enable_retries': 0, // Retries cause BiDi calls to buffer indefinitely (memory leak)

'grpc.enable_channelz': 0 // Channelz implementation in grpc-js appears to leak

server options:

'grpc.enable_channelz': 0

It would be great if you could help unblock v1.8

@Matt-Esch Are you still seeing the buffering in BiDi calls after upgrading to v1.8.11? If so, can you provide more details? Can you also share more details about the channelz memory leak you are seeing?

Sorry @Matt-Esch, we're quite under the pump at the moment and I haven't had a chance to stand up a test stack to capture a heapdump yet!

FYI today I published version 1.8.13 with a fix for a memory leak. Please test using that one in case it fixed the memory leak you are experiencing.

A little update - we upgraded to 1.8.17 which doesn't seem to exhibit the same memory leak issue we were seeing on 1.8.11

That's good to hear. Thanks for the update.

@Matt-Esch can you confirm whether you are still experiencing the memory leak with the latest version of grpc-js?