graphql-js

graphql-js copied to clipboard

graphql-js copied to clipboard

Event loop blocked for large responses in completeListValue

We have an application where we regularly respond with object lists of multiple thousand objects. Completing the list containing these objects can take several seconds, during which the event loop is busy and the server non-responsive, which is far from ideal. The problematic forEach call in completeListValue here: https://github.com/graphql/graphql-js/blob/master/src/execution/execute.js#L911

Would it be interesting to divide this work into smaller synchronous chunks of work in order to return to the event loop more frequently?

I have made a working solution below that may be used by anyone who has the same problem and are fine with monkey-patching inside the execute module.

The chunked implementation only starts using chunks if the completion time goes above a given time-threshold (e.g. 50 ms) and uses a variable chunk size in order to minimize overhead.

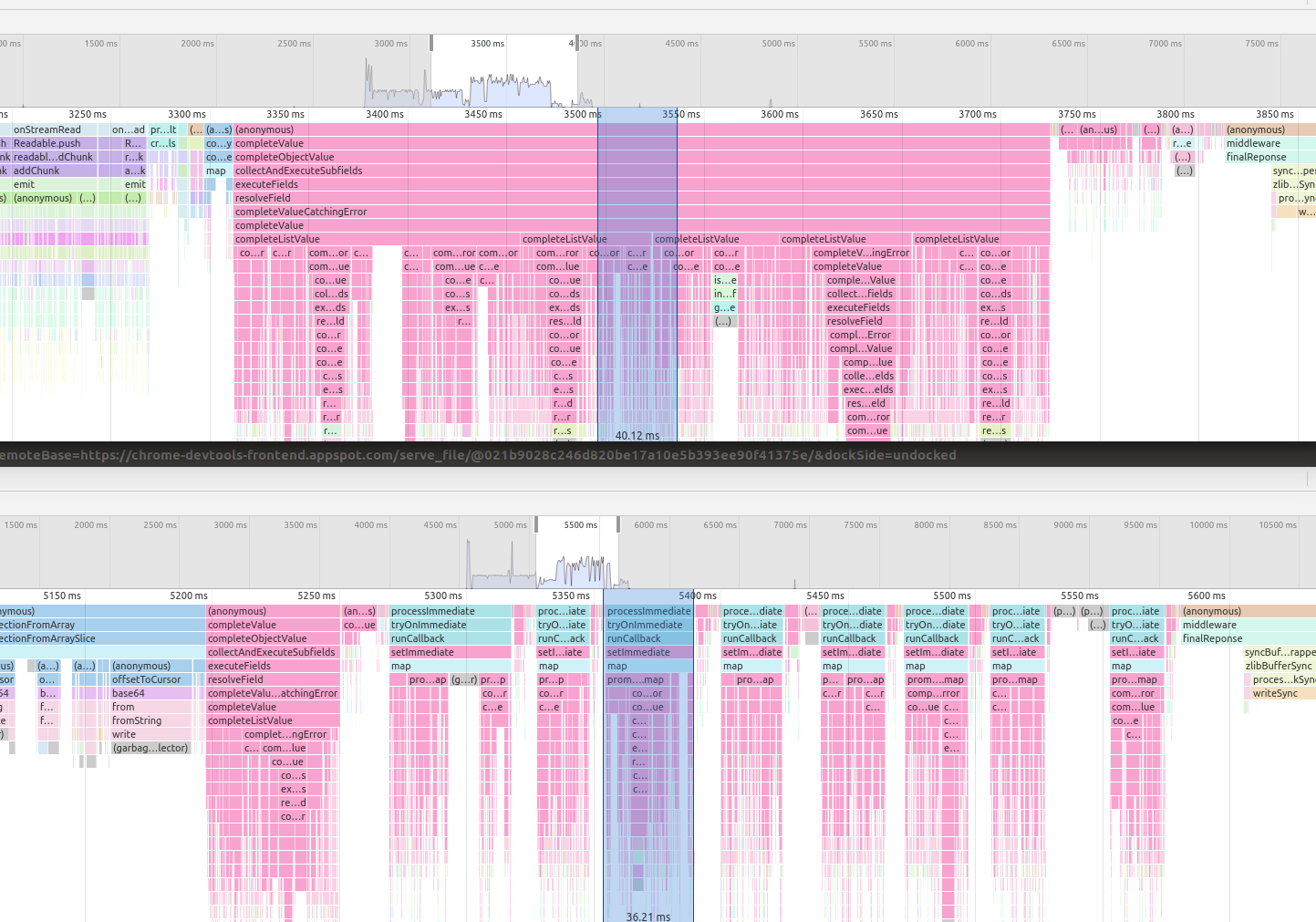

Profiles before and after chunkification:

const rewire = require('rewire');

const executeModule = rewire('graphql/execution/execute');

const completeValueCatchingError = executeModule.__get__( 'completeValueCatchingError');

const { GraphQLError } = require('graphql');

const _ = require('lodash');

function completeListValueChunked(

exeContext,

returnType,

fieldNodes,

info,

path,

result

) {

if (!_.isArray(result)) {

throw new GraphQLError(

'Expected Iterable, but did not find one for field '

.concat(info.parentType.name, '.')

.concat(info.fieldName, '.')

);

}

const itemType = returnType.ofType;

const completedResults = [];

let containsPromise = false;

let fieldPath;

const t0 = new Date().getTime();

let breakIdx;

for (const [idx, item] of result.entries()) {

// Check every Nth item (e.g. 20th) if the elapsed time is larger than 50 ms.

// If so, break and divide work into chunks using chained then+setImmediate

if (idx % 20 === 0 && idx > 0 && new Date().getTime() - t0 > 50) {

breakIdx = idx; // Used as chunk size

break;

}

fieldPath = { prev: path, key: idx }; // =addPath behaviour in execute.js

const completedItem = completeValueCatchingError(

exeContext,

itemType,

fieldNodes,

info,

fieldPath,

item

);

if (!containsPromise && completedItem instanceof Promise) {

containsPromise = true;

}

completedResults.push(completedItem);

}

if (breakIdx) {

const chunkSize = breakIdx;

const returnPromise = _.chunk(result.slice(breakIdx), chunkSize).reduce(

(prevPromise, chunk, chunkIdx) =>

prevPromise.then(

async reductionResults =>

await Promise.all(

await new Promise(resolve =>

setImmediate(() => // We want to execute this in the next tick

resolve(

reductionResults.concat(

[...chunk.entries()].map(([idx, item]) => {

fieldPath = {

prev: path,

key: breakIdx + chunkIdx * chunkSize + idx,

};

const completedValue = completeValueCatchingError(

exeContext,

itemType,

fieldNodes,

info,

fieldPath,

item

);

return completedValue;

})

)

)

)

)

)

),

Promise.all(completedResults)

);

return returnPromise;

} else {

return containsPromise ? Promise.all(completedResults) : completedResults;

}

}

// Monkey-patch the completeListValue function inside the execute module using rewire

executeModule.__set__('completeListValue', completeListValueChunked);

// Use the rewired execute method in the actual server

const rewiredExecute = rewiredExecuteModule.execute;

@lenolib Thanks for the detailed description 👍

Can you achieve the same by returning an async generator instead of an Array? We already support sync generators: https://github.com/graphql/graphql-js/blob/52157dc5b44597d15d53775db867e9bc0b24aa1f/src/execution/tests/lists-test.js#L61-L72 So we can consider also supporting async generators as a more generic solution.

Async generators does seem to improve the situation slightly, but the main problem with blocking for a long time persists:

Implemented simply by:

Implemented simply by:

.

.

ctx.logger.debug('Returning %s objs', objs.length);

function* myGenerator(array) {

while (array.length) {

yield Promise.resolve(array.shift());

}

}

return myGenerator(objs);

// return objs;

Original / baseline without async generator:

@lenolib Thanks for detail investigation 👍

Currently, we support only function* which is sync generators and as you showed doesn't resolve the problem. What I'm suggesting is to add support for async function* so you can create resolver wrapper similar to this one:

function listDelayWrapper(resolver) {

return (source, args, context, info) => {

const result = resolver(source, args, context, info);

return async function*() {

const t0 = new Date().getTime();

for (const [idx, item] of result.entries()) {

// Check every Nth item (e.g. 20th) if the elapsed time is larger than 50 ms.

// If so, break and divide work into chunks using setImmediate

if (idx % 20 === 0 && idx > 0 && new Date().getTime() - t0 > 50) {

await new Promise((resolve) => setImmediate(resolve))

}

yield item;

}

};

};

}

BTW. Here is a good example of async generators: https://javascript.info/async-iterators-generators#async-generators

Hi,

is there any update on this issue? We see the same behaviour on our project. Would be nice if there could be an official fix.

Same thing, we sometimes have queries that take 30+ seconds, and we are suffering from this as well

Any update on this? Seems like it would fix a problem I'm facing as well

This keeps affecting my production servers :/

In case it is useful for anyone, I've updated my monkey patch to work with the latest version. Can be found here: https://gist.github.com/lenolib/e801737a949f810fdc2f1dc64926ebd8 (Update 16th April: fixed a missing return statement)

This is also a huge problem for us - we're not even working with giant lists of entities, just deeply nested, and a list of 300 entities can block the event loop of upwards of 10 seconds while it resolves.

We had a similar issue due to nested objects. Currently using v17.0.0-alpha.2 as there's no official 17.x release yet. I rewrote some of the code for readability from Ivan's comment above which solved it for us. Putting it here if anyone wants to try it out:

const MAX_EVENTLOOP_BLOCK_TIME_IN_MS = 50

const waitForNextEventCycle = () => new Promise(resolve => setImmediate(resolve))

function listDelayWrapper(resolver) {

return async function* (source, args, context, info) {

const startTime = new Date().getTime()

const resultArray = await resolver(source, args, context, info)

for (const [idx, item] of resultArray.entries()) {

const is20thElement = idx % 20 === 0 && idx > 0

const currentTime = new Date().getTime()

const deltaFromStartTime = currentTime - startTime

if (is20thElement && deltaFromStartTime > MAX_EVENTLOOP_BLOCK_TIME_IN_MS) {

await waitForNextEventCycle()

}

yield item

}

}

}

Then use it like this:

resolve: listDelayWrapper(whatever function was here before)

In case it is useful for anyone, I've updated my monkey patch to work with the latest version. Can be found here: https://gist.github.com/lenolib/e801737a949f810fdc2f1dc64926ebd8 (Update 16th April: fixed a missing return statement)

It is, given v17 is still in alpha 😊 I have a TS version of your gist, I will share it once I'm sure it works. I also updated it to the latest v16 (just minor changes re-using the helper method they now use).

// Use the rewired execute method in the actual server const rewiredExecute = rewiredExecuteModule.execute;

Hey @lenolib, where do you actually use this last line? For instance, we are using Apollo server, I'm not sure to understand where to set/use rewiredExecute.

I thought rewire would do the trick and that calling rewiredExecuteModule.__set__('completeListValue', completeListValueChunked) would ensure the new method would be executed instead of the original completeListValue.

Am I missing something here?

@philippemiguet I'm afraid we gave up using the patching above anymore after we moved to graphql-yoga. I remember the library we used before allowed us specify the execute function when setting up the server: https://github.com/graphql-community/koa-graphql

Thanks @lenolib - It seems our best shot is using GraphQL v17.alpha with an async generator as Torhgal suggests. Out of curiosity, how did you end up solving the problem on your side?

Closing as resolved in v17 alpha.