Add option to register IPv6 address

Is your feature request related to a problem? Please describe.

The compactor, distributor, ingester, querier, and query-frontend cannot start on IPv6-only clusters (e.g., EKS in full IPv6 mode).

This seems to be due to https://github.com/grafana/tempo/blob/main/pkg/util/net.go, which only retrieves the first IPv4 address and ignores IPv6 addresses.

Describe the solution you'd like

We should be able to specify which protocol the pods use (IPv4, IPv6, or both?), defaulting to IPv4.

That way, pods can start even if they have no IPv4 address.

Describe alternatives you've considered

Additional context

Steps to reproduce:

- Launch an EKS cluster with IPv6. My reference cluster was launched using https://github.com/aws-ia/terraform-aws-eks-blueprints/tree/main/examples/ipv6-eks-cluster

- Deploy the Tempo Helm chart: https://github.com/grafana/helm-charts/tree/main/charts/tempo-distributed

- View the errors from the pods

Logs from the distributor pods:

kubectl logs -n monitoring grafana-tempo-distributed-distributor-68dd6fc7bb-ddfz2

level=info ts=2022-07-06T08:12:20.776290206Z caller=main.go:191 msg="initialising OpenTracing tracer"

level=info ts=2022-07-06T08:12:20.794048062Z caller=main.go:106 msg="Starting Tempo" version="(version=, branch=HEAD, revision=d3880a979)"

level=info ts=2022-07-06T08:12:20.794518191Z caller=server.go:260 http=[::]:3100 grpc=[::]:9095 msg="server listening on addresses"

level=info ts=2022-07-06T08:12:20.795326576Z caller=memberlist_client.go:394 msg="Using memberlist cluster node name" name=grafana-tempo-distributed-distributor-68dd6fc7bb-ddfz2-b084e2d1

ts=2022-07-06T08:12:20.795392905Z caller=memberlist_logger.go:74 level=debug msg="configured Transport is not a NodeAwareTransport and some features may not work as desired"

level=debug ts=2022-07-06T08:12:20.795408427Z caller=tcp_transport.go:393 component="memberlist TCPTransport" msg=FinalAdvertiseAddr advertiseAddr=:: advertisePort=7946

level=debug ts=2022-07-06T08:12:20.795431802Z caller=tcp_transport.go:393 component="memberlist TCPTransport" msg=FinalAdvertiseAddr advertiseAddr=:: advertisePort=7946

level=error ts=2022-07-06T08:12:20.796419855Z caller=main.go:109 msg="error running Tempo" err="failed to init module services error initialising module: distributor: failed to create distributor No address found for [eth0]"

Available network configuration on the pods:

ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: eth0@if57: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 9001 qdisc noqueue state UP

link/ether 5a:d2:2f:40:89:dc brd ff:ff:ff:ff:ff:ff

inet6 2a05:d012:dcb:5e01:f127::2/128 scope global

valid_lft forever preferred_lft forever

inet6 fe80::58d2:2fff:fe40:89dc/64 scope link

valid_lft forever preferred_lft forever

5: v4if0@if58: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 9001 qdisc noqueue state UP

link/ether 1e:d3:c0:e7:d9:e6 brd ff:ff:ff:ff:ff:ff

inet 169.254.172.29/22 brd 169.254.175.255 scope global v4if0

valid_lft forever preferred_lft forever

Other

There seems to be a similar issue in Loki: https://github.com/grafana/loki/issues/6251

A pull request is also open on Cortex to register IPv6 addresses in the ring: https://github.com/cortexproject/cortex/pull/4340

Interesting, thank you for the report. I don't have a great way to reproduce this at the moment, but looking a little more, there may be some dskit work needed here too.

https://github.com/grafana/dskit/blob/main/ring/util.go#L53

This appears to be used by the compactor and the metrics generator.

I see some warning messages in that code. Do you get any errors or warnings when trying this on an IPv6-only cluster?

Also for completeness, could you include the output of ip addr from one of the containers on this cluster? I suspect there are at least two v6 addresses on the interface, but would like to confirm.

Hi @zalegrala, I have completed the issue description with more information. Let me know if that works for you.

Thanks @chubchubsancho, that confirms what I was after: one link-local v6 address and one public v6 address on the eth0 interface. I don't think I'll be able to get to it this week, but the change shouldn't be too difficult. Are you in a position to test a different Docker image if I can work up a PR? I'd like to confirm that it works as we expect before merging, but as mentioned, I don't have an IPv6-only environment to test with.

No problem, I can test a different Docker image.

zalegrala/canary-tempo:aa0d125ee has a change that might work. If you want to try it out, note that it was built from main, so check the CHANGELOG for any breaking changes since the last release that might require adjusting your configuration.

Hi @zalegrala,

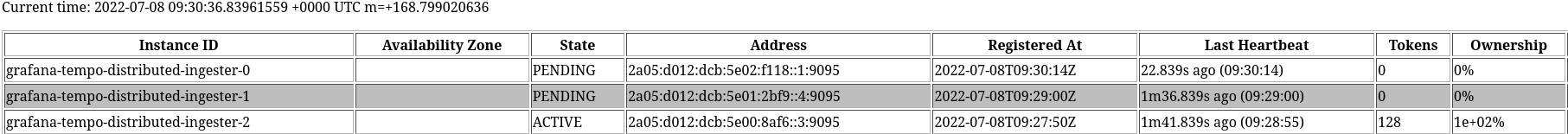

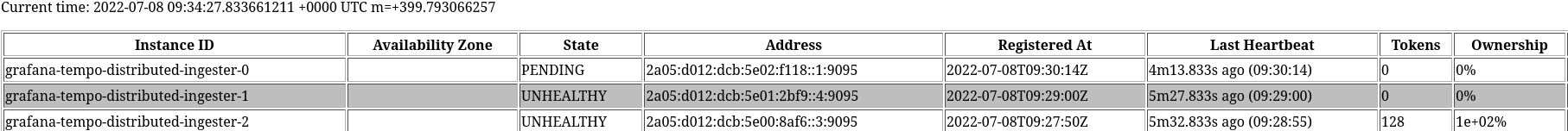

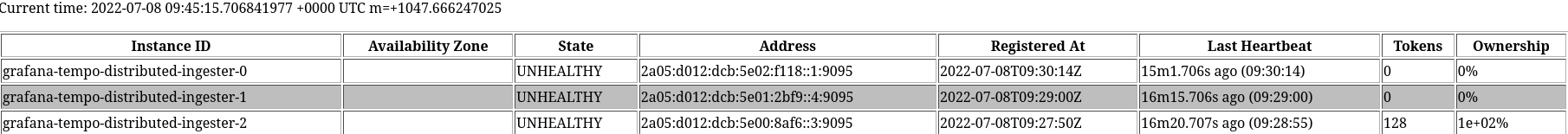

I tried zalegrala/canary-tempo:aa0d125ee. Pods start without any problem after adding bind: '::' to the memberlist config, but I was unable to make the ingesters stay ACTIVE in the ring :disappointed: The first ingester joins the ring without any problems. The two others stay in the pending state for some time, then become UNHEALTHY. Later, all ingesters become UNHEALTHY.

Ingester logs (ingester-0):

level=info ts=2022-07-08T09:02:54.731844705Z caller=main.go:191 msg="initialising OpenTracing tracer"

level=info ts=2022-07-08T09:02:54.749780182Z caller=main.go:106 msg="Starting Tempo" version="(version=inet6-aa0d125-WIP, branch=inet6, revision=aa0d125ee)"

level=info ts=2022-07-08T09:02:54.753147723Z caller=server.go:288 http=[::]:3100 grpc=[::]:9095 msg="server listening on addresses"

level=info ts=2022-07-08T09:02:54.754022451Z caller=memberlist_client.go:407 msg="Using memberlist cluster node name" name=grafana-tempo-distributed-ingester-0-e7f9d069

level=debug ts=2022-07-08T09:02:54.75403292Z caller=module_service.go:72 msg="module waiting for initialization" module=memberlist-kv waiting_for=server

level=debug ts=2022-07-08T09:02:54.754065105Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=memberlist-kv

level=info ts=2022-07-08T09:02:54.754081063Z caller=module_service.go:82 msg=initialising module=store

ts=2022-07-08T09:02:54.754086442Z caller=memberlist_logger.go:74 level=debug msg="configured Transport is not a NodeAwareTransport and some features may not work as desired"

level=debug ts=2022-07-08T09:02:54.754106598Z caller=tcp_transport.go:393 component="memberlist TCPTransport" msg=FinalAdvertiseAddr advertiseAddr=:: advertisePort=7946

level=debug ts=2022-07-08T09:02:54.754108118Z caller=module_service.go:72 msg="module waiting for initialization" module=overrides waiting_for=server

level=debug ts=2022-07-08T09:02:54.754139489Z caller=tcp_transport.go:393 component="memberlist TCPTransport" msg=FinalAdvertiseAddr advertiseAddr=:: advertisePort=7946

level=info ts=2022-07-08T09:02:54.754127488Z caller=module_service.go:82 msg=initialising module=server

level=info ts=2022-07-08T09:02:54.754244583Z caller=module_service.go:82 msg=initialising module=overrides

level=info ts=2022-07-08T09:02:54.754268268Z caller=module_service.go:82 msg=initialising module=memberlist-kv

level=debug ts=2022-07-08T09:02:54.754311341Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=overrides

level=debug ts=2022-07-08T09:02:54.754421035Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=server

level=debug ts=2022-07-08T09:02:54.754438384Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=store

level=info ts=2022-07-08T09:02:54.754445848Z caller=module_service.go:82 msg=initialising module=ingester

level=info ts=2022-07-08T09:02:54.754470272Z caller=ingester.go:328 msg="beginning wal replay"

level=warn ts=2022-07-08T09:02:54.754515958Z caller=rescan_blocks.go:24 msg="failed to open search wal directory" err="open /var/tempo/wal/search: no such file or directory"

level=info ts=2022-07-08T09:02:54.754540856Z caller=ingester.go:413 msg="wal replay complete"

level=info ts=2022-07-08T09:02:54.754559341Z caller=ingester.go:427 msg="reloading local blocks" tenants=0

level=info ts=2022-07-08T09:02:54.754597879Z caller=app.go:327 msg="Tempo started"

level=info ts=2022-07-08T09:02:54.75463536Z caller=lifecycler.go:576 msg="instance not found in ring, adding with no tokens" ring=ingester

level=debug ts=2022-07-08T09:02:54.754752081Z caller=lifecycler.go:412 msg="JoinAfter expired" ring=ingester

level=info ts=2022-07-08T09:02:54.754766395Z caller=lifecycler.go:416 msg="auto-joining cluster after timeout" ring=ingester

level=debug ts=2022-07-08T09:02:54.754954046Z caller=memberlist_client.go:833 msg="CAS attempt failed" err="no change detected" retry=true

ts=2022-07-08T09:02:54.755765062Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e02:f118::1]:7946"

ts=2022-07-08T09:02:54.757359268Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e00:8af6::2]:7946"

ts=2022-07-08T09:02:54.759755721Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e01:2bf9::4]:7946"

ts=2022-07-08T09:02:54.762188978Z caller=memberlist_logger.go:74 level=debug msg="Failed to join 2a05:d012:dcb:5e01:2bf9::6: dial tcp [2a05:d012:dcb:5e01:2bf9::6]:7946: connect: connection refused"

ts=2022-07-08T09:02:54.763281893Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e01:2bf9::5]:7946"

ts=2022-07-08T09:02:54.765603347Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e00:8af6::4]:7946"

level=info ts=2022-07-08T09:02:54.766870645Z caller=memberlist_client.go:525 msg="joined memberlist cluster" reached_nodes=5

level=debug ts=2022-07-08T09:02:55.755324437Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=5 oldVersion=1 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

ts=2022-07-08T09:02:59.754995661Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56' from=[::]:7946"

ts=2022-07-08T09:03:01.75578097Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 (timeout reached)"

ts=2022-07-08T09:03:01.756208016Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:35012"

ts=2022-07-08T09:03:01.756246774Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56' from=[::]:7946"

ts=2022-07-08T09:03:01.756275024Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 from=[::1]:35012"

ts=2022-07-08T09:03:01.756337437Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T09:03:04.755606553Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 has failed, no acks received"

ts=2022-07-08T09:03:04.755988005Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-distributor-7d9774d76d-dznbk-0cb018ea' from=[::]:7946"

ts=2022-07-08T09:03:06.756820194Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-distributor-7d9774d76d-dznbk-0cb018ea (timeout reached)"

ts=2022-07-08T09:03:06.757221312Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:52640"

ts=2022-07-08T09:03:06.757275105Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-distributor-7d9774d76d-dznbk-0cb018ea from=[::1]:52640"

ts=2022-07-08T09:03:06.757315657Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-distributor-7d9774d76d-dznbk-0cb018ea' from=[::]:7946"

ts=2022-07-08T09:03:06.757352432Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T09:03:09.755745315Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-distributor-7d9774d76d-dznbk-0cb018ea has failed, no acks received"

ts=2022-07-08T09:03:09.756074874Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-2-fb416a2d' from=[::]:7946"

ts=2022-07-08T09:03:11.756691044Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-2-fb416a2d (timeout reached)"

ts=2022-07-08T09:03:11.756918988Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:52662"

ts=2022-07-08T09:03:11.756980253Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-2-fb416a2d from=[::1]:52662"

ts=2022-07-08T09:03:11.757047672Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T09:03:14.756242621Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-2-fb416a2d has failed, no acks received"

ts=2022-07-08T09:03:14.75653177Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-querier-69699487d-w94th-37f736e8' from=[::]:7946"

ts=2022-07-08T09:03:16.757558577Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 (timeout reached)"

ts=2022-07-08T09:03:16.757804581Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:55008"

ts=2022-07-08T09:03:16.757857451Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 from=[::1]:55008"

ts=2022-07-08T09:03:16.757955613Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T09:03:24.756586402Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 has failed, no acks received"

ts=2022-07-08T09:03:24.756863025Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-1-64922bd4' from=[::]:7946"

ts=2022-07-08T09:03:26.757661095Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-1-64922bd4 (timeout reached)"

ts=2022-07-08T09:03:26.757951123Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:51496"

ts=2022-07-08T09:03:26.758020539Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-1-64922bd4 from=[::1]:51496"

ts=2022-07-08T09:03:26.75810625Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T09:03:31.850335244Z caller=logging.go:76 traceID=6c2df51ded92dbef msg="GET /ready (503) 94.523µs"

ts=2022-07-08T09:03:39.757353566Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-1-64922bd4 has failed, no acks received"

ts=2022-07-08T09:03:39.757696642Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-querier-69699487d-w94th-37f736e8' from=[::]:7946"

ts=2022-07-08T09:03:41.758458695Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 (timeout reached)"

ts=2022-07-08T09:03:41.758695741Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:47346"

ts=2022-07-08T09:03:41.758763761Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 from=[::1]:47346"

ts=2022-07-08T09:03:41.758849222Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T09:03:41.850257522Z caller=logging.go:76 traceID=07fbdf41a9c9e54b msg="GET /ready (503) 61.396µs"

level=debug ts=2022-07-08T09:03:51.850284726Z caller=logging.go:76 traceID=425fa3f27a8443ff msg="GET /ready (200) 73.811µs"

ts=2022-07-08T09:03:59.757696327Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 has failed, no acks received"

ts=2022-07-08T09:03:59.758051818Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-1-64922bd4' from=[::]:7946"

ts=2022-07-08T09:04:01.758888409Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-1-64922bd4 (timeout reached)"

ts=2022-07-08T09:04:01.759114871Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:43438"

ts=2022-07-08T09:04:01.759166312Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-1-64922bd4 from=[::1]:43438"

ts=2022-07-08T09:04:01.759243601Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T09:04:01.849737752Z caller=logging.go:76 traceID=6e42388534869814 msg="GET /ready (200) 35.193µs"

level=debug ts=2022-07-08T09:04:11.85015427Z caller=logging.go:76 traceID=461ed1980be31d67 msg="GET /ready (200) 41.717µs"

level=debug ts=2022-07-08T09:04:21.850270908Z caller=logging.go:76 traceID=70609e1680c996fe msg="GET /ready (200) 37.973µs"

ts=2022-07-08T09:04:24.758090473Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-1-64922bd4 has failed, no acks received"

ts=2022-07-08T09:04:24.758425073Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56' from=[::]:7946"

ts=2022-07-08T09:04:26.759274124Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 (timeout reached)"

ts=2022-07-08T09:04:26.759550175Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:39462"

ts=2022-07-08T09:04:26.75960903Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 from=[::1]:39462"

ts=2022-07-08T09:04:26.759694248Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T09:04:31.849741333Z caller=logging.go:76 traceID=076de476a72d662d msg="GET /ready (200) 43.96µs"

level=debug ts=2022-07-08T09:04:41.84989776Z caller=logging.go:76 traceID=74e1ada8e70a43c7 msg="GET /ready (200) 39.592µs"

level=debug ts=2022-07-08T09:04:51.849980261Z caller=logging.go:76 traceID=057e367d306d9d92 msg="GET /ready (200) 36.214µs"

ts=2022-07-08T09:04:54.758530496Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 has failed, no acks received"

ts=2022-07-08T09:04:54.758795534Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-2-fb416a2d' from=[::]:7946"

ts=2022-07-08T09:04:54.761919191Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-distributor-7d9774d76d-dznbk-0cb018ea as failed, suspect timeout reached (0 peer confirmations)"

ts=2022-07-08T09:04:54.761964776Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-querier-69699487d-w94th-37f736e8 as failed, suspect timeout reached (0 peer confirmations)"

ts=2022-07-08T09:04:54.767045252Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-ingester-1-64922bd4 as failed, suspect timeout reached (0 peer confirmations)"

ts=2022-07-08T09:04:54.767094654Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-compactor-c5879c655-zb8lc-b4c94d56 as failed, suspect timeout reached (0 peer confirmations)"

ts=2022-07-08T09:04:56.759064479Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-2-fb416a2d (timeout reached)"

ts=2022-07-08T09:04:56.759327377Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:54310"

ts=2022-07-08T09:04:56.75940327Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-2-fb416a2d from=[::1]:54310"

ts=2022-07-08T09:04:56.759480652Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T09:05:01.850413612Z caller=logging.go:76 traceID=266277171ea9dc0b msg="GET /ready (200) 38.066µs"

level=debug ts=2022-07-08T09:05:11.850134134Z caller=logging.go:76 traceID=780a1812a42cccb9 msg="GET /ready (200) 40.401µs"

ts=2022-07-08T09:05:14.756808813Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-ingester-2-fb416a2d as failed, suspect timeout reached (0 peer confirmations)"

level=debug ts=2022-07-08T09:05:21.850191027Z caller=logging.go:76 traceID=7e119eee1f09e301 msg="GET /ready (200) 44.281µs"

ts=2022-07-08T09:05:29.758676892Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-2-fb416a2d has failed, no acks received"

level=debug ts=2022-07-08T09:05:31.849934102Z caller=logging.go:76 traceID=61f14f401af9142a msg="GET /ready (200) 43.548µs"

level=debug ts=2022-07-08T09:05:41.850387486Z caller=logging.go:76 traceID=64d1ab63ccd66dd5 msg="GET /ready (200) 39.56µs"

level=debug ts=2022-07-08T09:05:49.75501964Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=40 oldVersion=39 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T09:05:51.849470457Z caller=logging.go:76 traceID=5b55ad20022db9a1 msg="GET /ready (200) 35.836µs"

level=debug ts=2022-07-08T09:05:54.755625805Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=41 oldVersion=40 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T09:05:59.755679149Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=42 oldVersion=41 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T09:06:01.849876181Z caller=logging.go:76 traceID=3e4575f2a80b0516 msg="GET /ready (200) 36.214µs"

level=debug ts=2022-07-08T09:06:04.755444518Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=43 oldVersion=42 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T09:06:09.755762024Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=44 oldVersion=43 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

Just for grins, try using bind ::0 in the memberlist config. Messages like "Got ping for unexpected node grafana-tempo-distributed-ingester-2-fb416a2d from=[::1]:54310" make me think that maybe :: isn't enough.

Also, can you confirm with netstat -luntp from within the container that the listen address is what we expect it to be?

I tried updating the memberlist bind address, but this does not seem to work.

At startup, all ingesters become ACTIVE in the ring, but after a few minutes they become UNHEALTHY.

tempo.yaml:

multitenancy_enabled: false
search_enabled: true
metrics_generator_enabled: false
compactor:
  compaction:
    block_retention: 48h
  ring:
    kvstore:
      store: memberlist
    instance_interface_names:
      - eth0
distributor:
  ring:
    kvstore:
      store: memberlist
    instance_interface_names:
      - eth0
  receivers:
    jaeger:
      protocols:
        thrift_compact:
          endpoint: 0.0.0.0:6831
        thrift_binary:
          endpoint: 0.0.0.0:6832
        thrift_http:
          endpoint: 0.0.0.0:14268
        grpc:
          endpoint: 0.0.0.0:14250
    otlp:
      protocols:
        http:
          endpoint: 0.0.0.0:55681
        grpc:
          endpoint: 0.0.0.0:4317
querier:
  frontend_worker:
    frontend_address: grafana-tempo-distributed-query-frontend-discovery:9095
ingester:
  lifecycler:
    ring:
      replication_factor: 3
      kvstore:
        store: memberlist
    interface_names:
      - eth0
    tokens_file_path: /var/tempo/tokens.json
memberlist:
  abort_if_cluster_join_fails: false
  join_members:
    - grafana-tempo-distributed-gossip-ring
  bind_addr:
    - '::0'
overrides:
  ingestion_rate_strategy: global
  per_tenant_override_config: /conf/overrides.yaml
server:
  http_listen_port: 3100
  log_level: debug
  log_format: logfmt
  grpc_server_max_recv_msg_size: 4.194304e+06
  grpc_server_max_send_msg_size: 4.194304e+06
storage:
  trace:
    backend: local
    blocklist_poll: 5m
    local:
      path: /var/tempo/traces
    wal:
      path: /var/tempo/wal
    cache: memcached
    memcached:
      consistent_hash: true
      host: grafana-tempo-distributed-memcached
      service: memcached-client
      timeout: 500ms

netstat on ingester:

/ # netstat -luntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 :::3100 :::* LISTEN 1/tempo

tcp 0 0 :::9095 :::* LISTEN 1/tempo

tcp 0 0 :::7946 :::* LISTEN 1/tempo

netstat on distributor:

/ # netstat -lptun

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 :::14268 :::* LISTEN 1/tempo

tcp 0 0 :::3100 :::* LISTEN 1/tempo

tcp 0 0 :::4317 :::* LISTEN 1/tempo

tcp 0 0 :::55681 :::* LISTEN 1/tempo

tcp 0 0 :::9095 :::* LISTEN 1/tempo

tcp 0 0 :::14250 :::* LISTEN 1/tempo

tcp 0 0 :::7946 :::* LISTEN 1/tempo

udp 0 0 :::6831 :::* 1/tempo

udp 0 0 :::6832 :::* 1/tempo

logs:

kubectl logs -n monitoring grafana-tempo-distributed-ingester-0 -f

level=info ts=2022-07-08T14:20:08.364135101Z caller=main.go:191 msg="initialising OpenTracing tracer"

level=info ts=2022-07-08T14:20:08.385235479Z caller=main.go:106 msg="Starting Tempo" version="(version=inet6-aa0d125-WIP, branch=inet6, revision=aa0d125ee)"

level=info ts=2022-07-08T14:20:08.388712996Z caller=server.go:288 http=[::]:3100 grpc=[::]:9095 msg="server listening on addresses"

level=info ts=2022-07-08T14:20:08.389620583Z caller=memberlist_client.go:407 msg="Using memberlist cluster node name" name=grafana-tempo-distributed-ingester-0-2a21ef8d

ts=2022-07-08T14:20:08.389682323Z caller=memberlist_logger.go:74 level=debug msg="configured Transport is not a NodeAwareTransport and some features may not work as desired"

level=debug ts=2022-07-08T14:20:08.389699361Z caller=tcp_transport.go:393 component="memberlist TCPTransport" msg=FinalAdvertiseAddr advertiseAddr=:: advertisePort=7946

level=debug ts=2022-07-08T14:20:08.389713209Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=memberlist-kv

level=debug ts=2022-07-08T14:20:08.389722011Z caller=tcp_transport.go:393 component="memberlist TCPTransport" msg=FinalAdvertiseAddr advertiseAddr=:: advertisePort=7946

level=debug ts=2022-07-08T14:20:08.389747413Z caller=module_service.go:72 msg="module waiting for initialization" module=memberlist-kv waiting_for=server

level=info ts=2022-07-08T14:20:08.389755813Z caller=module_service.go:82 msg=initialising module=server

level=debug ts=2022-07-08T14:20:08.389768528Z caller=module_service.go:72 msg="module waiting for initialization" module=overrides waiting_for=server

level=info ts=2022-07-08T14:20:08.389787172Z caller=module_service.go:82 msg=initialising module=store

level=info ts=2022-07-08T14:20:08.389865032Z caller=module_service.go:82 msg=initialising module=overrides

level=info ts=2022-07-08T14:20:08.390056084Z caller=module_service.go:82 msg=initialising module=memberlist-kv

level=debug ts=2022-07-08T14:20:08.390089664Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=overrides

level=debug ts=2022-07-08T14:20:08.390103597Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=server

level=debug ts=2022-07-08T14:20:08.39011469Z caller=module_service.go:72 msg="module waiting for initialization" module=ingester waiting_for=store

level=info ts=2022-07-08T14:20:08.390121118Z caller=module_service.go:82 msg=initialising module=ingester

level=info ts=2022-07-08T14:20:08.390132297Z caller=ingester.go:328 msg="beginning wal replay"

level=warn ts=2022-07-08T14:20:08.390163064Z caller=rescan_blocks.go:24 msg="failed to open search wal directory" err="open /var/tempo/wal/search: no such file or directory"

level=info ts=2022-07-08T14:20:08.390183332Z caller=ingester.go:413 msg="wal replay complete"

level=info ts=2022-07-08T14:20:08.390199772Z caller=ingester.go:427 msg="reloading local blocks" tenants=0

level=info ts=2022-07-08T14:20:08.390258851Z caller=lifecycler.go:576 msg="instance not found in ring, adding with no tokens" ring=ingester

level=info ts=2022-07-08T14:20:08.390307791Z caller=app.go:327 msg="Tempo started"

level=debug ts=2022-07-08T14:20:08.390364369Z caller=lifecycler.go:412 msg="JoinAfter expired" ring=ingester

level=info ts=2022-07-08T14:20:08.390375723Z caller=lifecycler.go:416 msg="auto-joining cluster after timeout" ring=ingester

level=debug ts=2022-07-08T14:20:08.390532008Z caller=memberlist_client.go:833 msg="CAS attempt failed" err="no change detected" retry=true

ts=2022-07-08T14:20:08.39562628Z caller=memberlist_logger.go:74 level=warn msg="Failed to resolve grafana-tempo-distributed-gossip-ring: lookup grafana-tempo-distributed-gossip-ring on [fd11:8308:43d1::a]:53: no such host"

level=debug ts=2022-07-08T14:20:08.395645101Z caller=memberlist_client.go:533 msg="attempt to join memberlist cluster failed" retries=0 err="1 error occurred:\n\t* Failed to resolve grafana-tempo-distributed-gossip-ring: lookup grafana-tempo-distributed-gossip-ring on [fd11:8308:43d1::a]:53: no such host\n\n"

level=debug ts=2022-07-08T14:20:09.391729891Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=2 oldVersion=1 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

ts=2022-07-08T14:20:09.406152033Z caller=memberlist_logger.go:74 level=warn msg="Failed to resolve grafana-tempo-distributed-gossip-ring: lookup grafana-tempo-distributed-gossip-ring on [fd11:8308:43d1::a]:53: no such host"

level=debug ts=2022-07-08T14:20:09.406174681Z caller=memberlist_client.go:550 msg="attempt to join memberlist cluster failed" retries=1 err="1 error occurred:\n\t* Failed to resolve grafana-tempo-distributed-gossip-ring: lookup grafana-tempo-distributed-gossip-ring on [fd11:8308:43d1::a]:53: no such host\n\n"

ts=2022-07-08T14:20:13.154930269Z caller=memberlist_logger.go:74 level=warn msg="Failed to resolve grafana-tempo-distributed-gossip-ring: lookup grafana-tempo-distributed-gossip-ring on [fd11:8308:43d1::a]:53: no such host"

level=debug ts=2022-07-08T14:20:13.154958033Z caller=memberlist_client.go:550 msg="attempt to join memberlist cluster failed" retries=2 err="1 error occurred:\n\t* Failed to resolve grafana-tempo-distributed-gossip-ring: lookup grafana-tempo-distributed-gossip-ring on [fd11:8308:43d1::a]:53: no such host\n\n"

level=debug ts=2022-07-08T14:20:13.390563476Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=3 oldVersion=2 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T14:20:18.390705549Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=4 oldVersion=3 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

ts=2022-07-08T14:20:18.492509362Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e00:4a5b::1]:7946"

ts=2022-07-08T14:20:18.493213329Z caller=memberlist_logger.go:74 level=debug msg="Failed to join 2a05:d012:dcb:5e00:4a5b::3: dial tcp [2a05:d012:dcb:5e00:4a5b::3]:7946: connect: connection refused"

ts=2022-07-08T14:20:18.494089535Z caller=memberlist_logger.go:74 level=debug msg="Initiating push/pull sync with: [2a05:d012:dcb:5e01:a32a::3]:7946"

level=info ts=2022-07-08T14:20:18.4951947Z caller=memberlist_client.go:554 msg="joined memberlist cluster" reached_nodes=2

ts=2022-07-08T14:20:23.390790057Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53' from=[::]:7946"

ts=2022-07-08T14:20:25.391593075Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 (timeout reached)"

ts=2022-07-08T14:20:25.392108143Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:45834"

ts=2022-07-08T14:20:25.392177585Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 from=[::1]:45834"

ts=2022-07-08T14:20:25.392237542Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53' from=[::]:7946"

ts=2022-07-08T14:20:25.392267839Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53' from=[::]:7946"

ts=2022-07-08T14:20:25.392345145Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T14:20:28.391376258Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 has failed, no acks received"

ts=2022-07-08T14:20:28.391750326Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db' from=[::]:7946"

ts=2022-07-08T14:20:30.392624626Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db (timeout reached)"

ts=2022-07-08T14:20:30.393076033Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:45880"

ts=2022-07-08T14:20:30.393115768Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db' from=[::]:7946"

ts=2022-07-08T14:20:30.393149305Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db from=[::1]:45880"

ts=2022-07-08T14:20:30.393236931Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T14:20:33.391804303Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db has failed, no acks received"

ts=2022-07-08T14:20:33.392118365Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-2-a1730743' from=[::]:7946"

ts=2022-07-08T14:20:35.392819637Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-2-a1730743 (timeout reached)"

ts=2022-07-08T14:20:35.393074723Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:37712"

ts=2022-07-08T14:20:35.393143581Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-2-a1730743 from=[::1]:37712"

ts=2022-07-08T14:20:35.393233389Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

ts=2022-07-08T14:20:38.392414648Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-2-a1730743 has failed, no acks received"

ts=2022-07-08T14:20:38.392739241Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-1-200fdd5f' from=[::]:7946"

ts=2022-07-08T14:20:40.393507726Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-1-200fdd5f (timeout reached)"

ts=2022-07-08T14:20:40.393790244Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:37744"

ts=2022-07-08T14:20:40.393855292Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-1-200fdd5f from=[::1]:37744"

ts=2022-07-08T14:20:40.39394172Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T14:20:44.066172442Z caller=logging.go:76 traceID=5cb4129d0e80e887 msg="GET /ready (503) 87.984µs"

ts=2022-07-08T14:20:48.392762486Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-1-200fdd5f has failed, no acks received"

ts=2022-07-08T14:20:48.393066608Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-1-200fdd5f' from=[::]:7946"

ts=2022-07-08T14:20:50.393938028Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-1-200fdd5f (timeout reached)"

ts=2022-07-08T14:20:50.394187229Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:42650"

ts=2022-07-08T14:20:50.394251268Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-1-200fdd5f from=[::1]:42650"

ts=2022-07-08T14:20:50.394340497Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T14:20:54.066064536Z caller=logging.go:76 traceID=7a12ea8d2ea0add8 msg="GET /ready (503) 65.677µs"

ts=2022-07-08T14:21:03.39380646Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-1-200fdd5f has failed, no acks received"

ts=2022-07-08T14:21:03.394154671Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db' from=[::]:7946"

level=debug ts=2022-07-08T14:21:04.065682342Z caller=logging.go:76 traceID=488e8813fd09e617 msg="GET /ready (200) 76.978µs"

ts=2022-07-08T14:21:05.394767831Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db (timeout reached)"

ts=2022-07-08T14:21:05.395001378Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:48458"

ts=2022-07-08T14:21:05.395068442Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db from=[::1]:48458"

ts=2022-07-08T14:21:05.395149221Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T14:21:14.066158479Z caller=logging.go:76 traceID=48ba80491d3516bc msg="GET /ready (200) 36.155µs"

ts=2022-07-08T14:21:23.394430013Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db has failed, no acks received"

ts=2022-07-08T14:21:23.394760902Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-2-a1730743' from=[::]:7946"

level=debug ts=2022-07-08T14:21:24.066169226Z caller=logging.go:76 traceID=26b66dc170c88374 msg="GET /ready (200) 40.443µs"

ts=2022-07-08T14:21:25.395280094Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-2-a1730743 (timeout reached)"

ts=2022-07-08T14:21:25.395567765Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:53252"

ts=2022-07-08T14:21:25.395629639Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-2-a1730743 from=[::1]:53252"

ts=2022-07-08T14:21:25.395719174Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T14:21:34.065310985Z caller=logging.go:76 traceID=672a61e377fece3e msg="GET /ready (200) 33.791µs"

level=debug ts=2022-07-08T14:21:44.065484296Z caller=logging.go:76 traceID=69eb9d2f30a846a6 msg="GET /ready (200) 42.176µs"

ts=2022-07-08T14:21:48.395397491Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-2-a1730743 has failed, no acks received"

ts=2022-07-08T14:21:48.395744355Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53' from=[::]:7946"

ts=2022-07-08T14:21:50.396582938Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 (timeout reached)"

ts=2022-07-08T14:21:50.396851734Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:35456"

ts=2022-07-08T14:21:50.39692176Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 from=[::1]:35456"

ts=2022-07-08T14:21:50.39699893Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T14:21:54.065317846Z caller=logging.go:76 traceID=5efc8931f2c4a175 msg="GET /ready (200) 39.829µs"

level=debug ts=2022-07-08T14:22:04.065500165Z caller=logging.go:76 traceID=3ea6d7b45f2a3b53 msg="GET /ready (200) 38.656µs"

level=debug ts=2022-07-08T14:22:14.066100867Z caller=logging.go:76 traceID=2342c5560f31258b msg="GET /ready (200) 33.596µs"

ts=2022-07-08T14:22:18.395813128Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 has failed, no acks received"

ts=2022-07-08T14:22:18.396122079Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node 'grafana-tempo-distributed-ingester-2-a1730743' from=[::]:7946"

ts=2022-07-08T14:22:18.495215243Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-ingester-1-200fdd5f as failed, suspect timeout reached (0 peer confirmations)"

ts=2022-07-08T14:22:20.396927348Z caller=memberlist_logger.go:74 level=debug msg="Failed ping: grafana-tempo-distributed-ingester-2-a1730743 (timeout reached)"

ts=2022-07-08T14:22:20.397187101Z caller=memberlist_logger.go:74 level=debug msg="Stream connection from=[::1]:38164"

ts=2022-07-08T14:22:20.397249755Z caller=memberlist_logger.go:74 level=warn msg="Got ping for unexpected node grafana-tempo-distributed-ingester-2-a1730743 from=[::1]:38164"

ts=2022-07-08T14:22:20.397379079Z caller=memberlist_logger.go:74 level=error msg="Failed fallback ping: EOF"

level=debug ts=2022-07-08T14:22:24.065351772Z caller=logging.go:76 traceID=2ad32f7b2b011f67 msg="GET /ready (200) 35.084µs"

ts=2022-07-08T14:22:28.392327572Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-querier-849f899dbb-qtzg2-f6a2eb53 as failed, suspect timeout reached (0 peer confirmations)"

ts=2022-07-08T14:22:33.392748512Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-compactor-886479d9f-s98pj-f90c21db as failed, suspect timeout reached (0 peer confirmations)"

level=debug ts=2022-07-08T14:22:34.066171563Z caller=logging.go:76 traceID=6009de54512365a8 msg="GET /ready (200) 38.831µs"

ts=2022-07-08T14:22:38.39282936Z caller=memberlist_logger.go:74 level=info msg="Marking grafana-tempo-distributed-ingester-2-a1730743 as failed, suspect timeout reached (0 peer confirmations)"

level=debug ts=2022-07-08T14:22:44.065783242Z caller=logging.go:76 traceID=4c93e946b6348403 msg="GET /ready (200) 44.376µs"

ts=2022-07-08T14:22:53.396682003Z caller=memberlist_logger.go:74 level=info msg="Suspect grafana-tempo-distributed-ingester-2-a1730743 has failed, no acks received"

level=debug ts=2022-07-08T14:22:54.065832807Z caller=logging.go:76 traceID=356cb616ca2a1ae1 msg="GET /ready (200) 33.153µs"

level=debug ts=2022-07-08T14:23:04.065399621Z caller=logging.go:76 traceID=76656da73306d58f msg="GET /ready (200) 35.055µs"

level=debug ts=2022-07-08T14:23:13.390631858Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=40 oldVersion=39 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T14:23:14.066172601Z caller=logging.go:76 traceID=50e9b39d58245121 msg="GET /ready (200) 48.15µs"

level=debug ts=2022-07-08T14:23:18.390468818Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=41 oldVersion=40 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

level=debug ts=2022-07-08T14:23:23.390512234Z caller=broadcast.go:48 msg="Invalidating forwarded broadcast" key=collectors/ring version=42 oldVersion=41 content=[grafana-tempo-distributed-ingester-0] oldContent=[grafana-tempo-distributed-ingester-0]

I tried a couple of other changes last week but wasn't able to get something working just yet. I think I'll need to drag in some memberlist expertise. It seemed like the addresses used for memberlist's initial push/pull were correct, but the addresses advertised after that did not reflect the reachable addresses.

Also, it occurs to me that the change I made in dskit will only ever use IPv6 if IPv4 is unavailable. I think it might be better to support something like the "prefer v6" option you mentioned initially, which would pick IPv6 even when IPv4 is present. If we can prove that out in code, handling the IPv6-only case should be simple, and folks with a dual-stack environment (like me) could also test the code.
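To make the "prefer v6" idea concrete, here is a minimal Go sketch of how a component might choose the address to advertise from a list of candidate interface addresses. The function name pickAddress, its inputs, and the filtering rules (skipping loopback, link-local, and unspecified addresses) are assumptions for illustration; this is not the dskit implementation.

```go
package main

import (
	"errors"
	"fmt"
	"net"
)

// pickAddress is a hypothetical helper: it returns the first usable IPv6
// address when preferInet6 is true, otherwise the first usable IPv4 address.
// If the preferred family is absent it falls back to the other family, so an
// IPv6-only pod still gets an address.
func pickAddress(candidates []string, preferInet6 bool) (string, error) {
	var firstV4, firstV6 net.IP
	for _, c := range candidates {
		ip := net.ParseIP(c)
		// Skip unparsable, loopback, link-local and wildcard addresses:
		// none of them is reachable from other pods.
		if ip == nil || ip.IsLoopback() || ip.IsLinkLocalUnicast() || ip.IsUnspecified() {
			continue
		}
		if ip.To4() != nil {
			if firstV4 == nil {
				firstV4 = ip
			}
		} else if firstV6 == nil {
			firstV6 = ip
		}
	}
	preferred, fallback := firstV4, firstV6
	if preferInet6 {
		preferred, fallback = firstV6, firstV4
	}
	if preferred != nil {
		return preferred.String(), nil
	}
	if fallback != nil {
		return fallback.String(), nil
	}
	return "", errors.New("no usable address found")
}

func main() {
	dualStack := []string{"127.0.0.1", "10.0.1.7", "fe80::1", "2a05:d012:dcb:5e00:4a5b::3"}
	v4, _ := pickAddress(dualStack, false)
	v6, _ := pickAddress(dualStack, true)
	fmt.Println(v4, v6)
}
```

With preferInet6 set, a dual-stack pod advertises its IPv6 address, and an IPv6-only pod gets its IPv6 address regardless of the flag.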

I've got some priorities, so I'll need to come back to this after I ask around about memberlist.

I believe the zalegrala/canary-tempo:inet6-59f2d71d5z image has enough changes to get everything communicating over IPv6. I'm running it in a dual-stack environment, but I think the address detection may also work in an IPv6-only environment. I still have some upstream work to do, so this will take a little time; for now this is just a proof of concept.

I had to make the following configuration adjustments. You probably won't need to include the service updates, since you only have IPv6 available.

https://github.com/zalegrala/tempo/blob/inet6/operations/jsonnet/microservices/common.libsonnet#L17

If any of that is unclear, I'll come back and try to give you a better config for testing.

I'll try to test this this week :pray:

Okay sounds good. I've updated the branches in question but didn't build a new image for it yet.

I've pushed a zalegrala/tempo:inet6-dirty image based on main as of today. I'm using the following config additions successfully in a dual-stack environment. I expect this would also work in an IPv6-only environment.

    memberlist:
      bind_addr:
        - '[::0]'
      bind_port: 7946
    compactor:
      ring:
        kvstore:
          store: memberlist
        prefer_inet6: true
    metrics_generator:
      ring:
        prefer_inet6: true
    ingester:
      lifecycler:
        address: '[::0]'
        prefer_inet6: true
    server:
      grpc_listen_address: '[::0]'
      grpc_listen_port: 9095
      http_listen_address: '[::0]'
      http_listen_port: 3200

If anyone would like to test and report feedback, I'd appreciate it.

Hi @zalegrala, I hope I can find time to test it soon.

This issue has been automatically marked as stale because it has not had any activity in the past 60 days. The next time this stale check runs, the stale label will be removed if there is new activity. The issue will be closed after 15 days if there is no new activity. Please apply keepalive label to exempt this Issue.

Hello

I tested your image, @zalegrala, and it looks almost good: the pods start correctly, but queries fail.

upstream: (500) error querying ingesters in Querier.Search: rpc error: code = Unimplemented desc = unknown service tempopb.Querier

Resulting config:

    compactor:
      compaction:
        block_retention: 720h
      ring:
        kvstore:
          store: memberlist
        prefer_inet6: true
    distributor:
      receivers:
        jaeger:
          protocols:
            grpc:
              endpoint: '[::0]:14250'
        otlp:
          protocols:
            grpc:
              endpoint: '[::0]:4317'
      ring:
        kvstore:
          store: memberlist
    ingester:
      lifecycler:
        address: '[::0]'
        prefer_inet6: true
        ring:
          kvstore:
            store: memberlist
          replication_factor: 3
        tokens_file_path: /var/tempo/tokens.json
    memberlist:
      abort_if_cluster_join_fails: false
      bind_addr:
        - '[::0]'
      join_members:
        - tempodb-gossip-ring
    metrics_generator:
      ring:
        prefer_inet6: true
    metrics_generator_enabled: false
    multitenancy_enabled: false
    overrides:
      per_tenant_override_config: /conf/overrides.yaml
    querier:
      frontend_worker:
        frontend_address: tempodb-query-frontend-discovery:9095
    query_frontend:
      search:
        max_duration: 24h
    search_enabled: true
    server:
      grpc_listen_address: '[::0]'
      grpc_server_max_recv_msg_size: 4194304
      grpc_server_max_send_msg_size: 4194304
      http_listen_address: '[::0]'
      http_listen_port: 3100
      log_format: logfmt
      log_level: info
    storage:
      trace:
        backend: s3
        blocklist_poll: 5m
        cache: memcached
        local:
          path: /var/tempo/traces
        memcached:
          consistent_hash: true
          host: tempodb-memcached
          service: memcached-client
          timeout: 500ms
    usage_report:
      reporting_enabled: false

Any idea what is wrong?

One idea: the distributor seems unable to contact the ingesters or to build its own distributor ring (the error is not clear).

I also see that no distributor.ring.prefer_inet6 option exists, so that could be the issue.

level=info ts=2023-01-20T13:58:09.083848382Z caller=main.go:200 msg="initialising OpenTracing tracer"

level=info ts=2023-01-20T13:58:09.924863397Z caller=main.go:115 msg="Starting Tempo" version="(version=inet6-616d5cf-WIP, branch=inet6, revision=616d5cf74)"

level=info ts=2023-01-20T13:58:09.925896904Z caller=server.go:323 http=[::]:3100 grpc=[::]:9095 msg="server listening on addresses"

ts=2023-01-20T13:58:09Z level=info msg="OTel Shim Logger Initialized" component=tempo

level=info ts=2023-01-20T13:58:09.92825036Z caller=memberlist_client.go:436 msg="Using memberlist cluster label and node name" cluster_label= node=tempodb-distributor-858569bdc4-5hsn8-9ec044d5

ts=2023-01-20T13:58:09.929507347Z caller=memberlist_logger.go:74 level=warn msg="Binding to public address without encryption!"

level=info ts=2023-01-20T13:58:09.929718531Z caller=memberlist_client.go:543 msg="memberlist fast-join starting" nodes_found=1 to_join=2

level=info ts=2023-01-20T13:58:09.928703338Z caller=module_service.go:82 msg=initialising module=server

level=info ts=2023-01-20T13:58:09.930410483Z caller=module_service.go:82 msg=initialising module=memberlist-kv

level=info ts=2023-01-20T13:58:09.930510525Z caller=module_service.go:82 msg=initialising module=overrides

level=info ts=2023-01-20T13:58:09.930851764Z caller=module_service.go:82 msg=initialising module=ring

level=info ts=2023-01-20T13:58:09.942638588Z caller=memberlist_client.go:563 msg="memberlist fast-join finished" joined_nodes=7 elapsed_time=12.926977ms

level=info ts=2023-01-20T13:58:09.942787419Z caller=memberlist_client.go:576 msg="joining memberlist cluster" join_members=tempodb-gossip-ring

level=info ts=2023-01-20T13:58:09.943420872Z caller=module_service.go:82 msg=initialising module=distributor

ts=2023-01-20T13:58:09Z level=info msg="Starting GRPC server on endpoint [::0]:4317" component=tempo

ts=2023-01-20T13:58:09Z level=info msg="No sampling strategies provided or URL is unavailable, using defaults" component=tempo

level=info ts=2023-01-20T13:58:09.944259819Z caller=app.go:195 msg="Tempo started"

level=info ts=2023-01-20T13:58:09.949394979Z caller=memberlist_client.go:595 msg="joining memberlist cluster succeeded" reached_nodes=7 elapsed_time=6.60874ms

level=warn ts=2023-01-20T13:58:42.930930626Z caller=tcp_transport.go:453 component="memberlist TCPTransport" msg="WriteTo failed" addr=[2600:1f10:4444:9d02:e6c9::3]:7946 err="dial tcp [2600:1f10:4444:9d02:e6c9::3]:7946: i/o timeout"

[...]

level=error ts=2023-01-20T13:59:04.362852385Z caller=rate_limited_logger.go:27 msg="pusher failed to consume trace data" err="rpc error: code = Unimplemented desc = unknown service tempopb.Pusher"

[...]

ts=2023-01-20T13:59:14.930653575Z caller=memberlist_logger.go:74 level=info msg="Suspect tempodb-distributor-858569bdc4-f9bz9-bc20dabc has failed, no acks received"

Moreover, the memberlist is correct:

tempodb-compactor-855b7864d6-6zrv7-34cc3fee | [2600:1f10:4444:9d02:e6c9::8]:7946 | 0

tempodb-distributor-858569bdc4-5hsn8-9ec044d5 | [2600:1f10:4444:9d02:8568::8]:7946 | 0

tempodb-ingester-0-0c3c2c83 | [2600:1f10:4444:9d02:e6c9::7]:7946 | 0

tempodb-ingester-1-3c6eaa93 | [2600:1f10:4444:9d02:8568::6]:7946 | 0

tempodb-ingester-2-7670a62f | [2600:1f10:4444:9d02:e6c9::6]:7946 | 0

tempodb-querier-b46d889dc-v7ktr-d97621a3 | [2600:1f10:4444:9d02:e6c9::2]:7946 | 0

But the IP 2600:1f10:4444:9d02:e6c9::3 in the logs above exists nowhere in my infrastructure. I have no idea where the distributor is getting this IP from...

EDIT: This behaviour was probably a one-off at the beginning of my tests; I haven't reproduced it since. But I still get unknown service tempopb.Pusher on the distributor and unknown service tempopb.Querier on the querier.

The /ingester/ring page is correct, but I can't reach /distributor/ring: I get a 404 on every component I try.

Additional notes:

# HELP tempo_distributor_ingester_append_failures_total The total number of failed batch appends sent to ingesters.

# TYPE tempo_distributor_ingester_append_failures_total counter

tempo_distributor_ingester_append_failures_total{ingester="[::0]:9095"} 1149
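The ingester="[::0]:9095" label suggests the ingesters registered the wildcard address [::0] in the ring. Go's net.Dial documentation states that when the host is a literal unspecified IP address such as "[::]:80", the local system is assumed, so a distributor dialing [::0]:9095 plausibly reaches its own gRPC server, which does not serve tempopb.Pusher. That would be consistent with the Unimplemented error, although it is an inference, not something confirmed in this thread. The sketch below shows a hypothetical pre-registration check (checkAdvertiseAddr is not a Tempo or dskit function) that rejects such addresses.

```go
package main

import (
	"fmt"
	"net"
)

// checkAdvertiseAddr is a hypothetical pre-registration check. It rejects
// host:port strings that cannot be split, and literal IPs that are the
// wildcard (unspecified) or loopback address, because a peer dialing either
// one only reaches its own host. Hostnames are accepted as-is, since they
// are resolved later.
func checkAdvertiseAddr(hostport string) error {
	host, _, err := net.SplitHostPort(hostport)
	if err != nil {
		return err
	}
	ip := net.ParseIP(host)
	if ip == nil {
		return nil // a hostname, such as a Kubernetes service name
	}
	if ip.IsUnspecified() || ip.IsLoopback() {
		return fmt.Errorf("%s is not reachable from other pods", hostport)
	}
	return nil
}

func main() {
	for _, addr := range []string{
		"[::0]:9095",
		"[2600:1f10:4444:9d02:e6c9::7]:9095",
		"tempodb-ingester-discovery:9095",
	} {
		fmt.Println(addr, "->", checkAdvertiseAddr(addr))
	}
}
```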

Solution found!!!

    ingester:
      lifecycler:
        address: tempodb-ingester-discovery # Replace '[::0]' from your guidelines

I'm not really sure it's "correct", because all the ingesters share the same DNS name (a Kubernetes service), but it works. Any advice on this?

EDIT: In fact, just removing address from the config seems to be the best solution!

I spent my whole day on it 😭

A side effect of removing address: the querier gets a very unexpected error when it calls the ingesters ^^

transport: Error while dialing dial tcp: address 2600:1f10:4f40:9d02:e6c9::8:9095: too many colons in address

@gillg Thank you for testing. Much appreciated. Looks like you bumped into the same issue reported on the dskit pr. https://github.com/grafana/dskit/pull/185#discussion_r1072704469


Indeed! It's related to the way the RFCs represent an IPv6 address as a string: when a port is attached, the address has to be wrapped in brackets. I added a comment in the related PR.

My current workaround is to use the service name as the address; it resolves to an IPv6 address behind the scenes and works like a charm. I would be happy to validate your latest fixes and use an official image soon :) By the way, my next candidate is Loki, because I have an old standalone version to migrate into my IPv6 cluster.

I had a chance to test this again yesterday and published a zalegrala/tempo:inet6-dirty image based on main, so testers will want to make the updates mentioned in the 2.0 upgrade documentation. Note that users probably want to avoid flipping from 1.5 to 2.0 and then back; once migrated to 2.x, it's probably best to stay there.

A config similar to the one above, with a few keys changed, is required to test this. Note that prefer_inet6 is now enable_inet6, and that some address specifications (but not all) change from [::0] to ::.
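One plausible reason the two spellings are not interchangeable: a setting parsed as a bare IP address needs the unbracketed form, because Go's net.ParseIP does not accept brackets, while a setting that is later joined to a port with plain string formatting needs the bracketed form. Which Tempo settings fall into which group is an assumption here, not something stated in this thread; the sketch only demonstrates the two parsing behaviours, and both helper names are hypothetical.

```go
package main

import (
	"fmt"
	"net"
)

// parsesAsIP reports whether s is accepted as a bare IP literal.
func parsesAsIP(s string) bool {
	return net.ParseIP(s) != nil
}

// survivesConcat reports whether addr still yields a valid host:port after
// being joined to a port with plain string formatting.
func survivesConcat(addr string, port int) bool {
	_, _, err := net.SplitHostPort(fmt.Sprintf("%s:%d", addr, port))
	return err == nil
}

func main() {
	for _, addr := range []string{"::", "[::0]"} {
		fmt.Printf("%-6s bare IP: %v, concatenated with port: %v\n",
			addr, parsesAsIP(addr), survivesConcat(addr, 3200))
	}
}
```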

memberlist:

bind_addr:

- '::'

bind_port: 7946

compactor:

ring:

kvstore:

store: memberlist

enable_inet6: true

metrics_generator:

ring:

enable_inet6: true

ingester:

lifecycler:

address: '::'

enable_inet6: true

server:

grpc_listen_address: '[::0]'

grpc_listen_port: 9095

http_listen_address: '[::0]'

http_listen_port: 3200

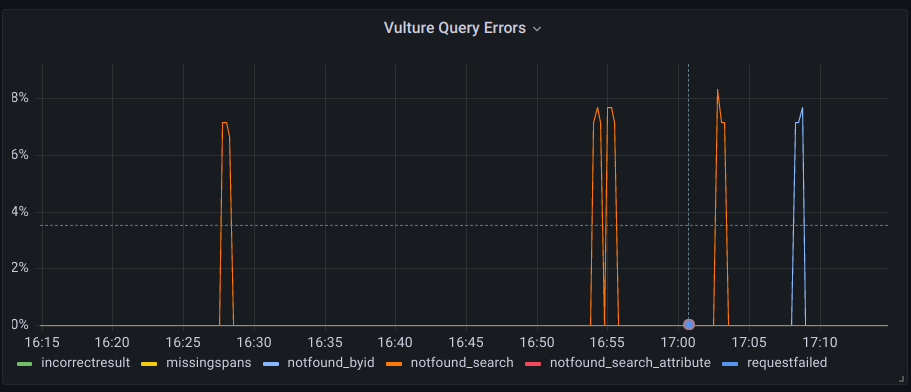

In my tests, writes worked fine, but reads were failing most of the time. I'll spend a little more time with this soon, but if other testers want to dig in and see if we can identify additional details it would be helpful.


@zalegrala On my side reads are working, and the cluster is fully functional: searches, queries, ingest, etc. What exactly is your problem?

Nice, that's good to hear things are working for you. I'm using the vulture, and just seeing that some searches are failing. That graph is pretty much flat in normal circumstances. Can you hammer both read and write and see how it goes?

Ah... I'm at an early deployment stage of tempodb, so I don't really have a baseline to compare against, and no dashboards yet. Is there a query I can run directly in Prometheus to check?

Upstream was merged a couple of weeks ago. I've updated the PR and included the docs mentioned in this issue. Thanks for the review here.

For dashboards, we have the json output from our jsonnet in the repo at operations/tempo-mixin/dashboards/tempo-operational.json, which you can import into grafana.