Add Agent Benchmarking

Context

A lot of people are asking about various performance characteristics of Pyroscope. Common questions include:

- how many individual clients can one server support?

- how much does the Pyroscope agent affect performance? How much extra memory do I need to provision per pod for the Pyroscope agent?

We did some of this testing about 6 months ago and came up with the 1-2% figure that we quote for the agent's performance overhead. The problem is that:

- the number is pretty much anecdotal

- it's not reproducible or verifiable by users

- we did this testing once, a long time ago, and have made many changes since then, so performance could have improved or gotten worse; we don't really know.

We have already started on some benchmarking; the code is in /benchmark. However, this suite needs more polishing, and it currently only benchmarks the server. We should probably use it as a base for this project.

Proposed Solution

We need to come up with a suite of benchmarks for both the server and all the client types we support (.NET, Ruby, Go, etc.).

Other Considerations

- This benchmark suite has to be reproducible by users and developers

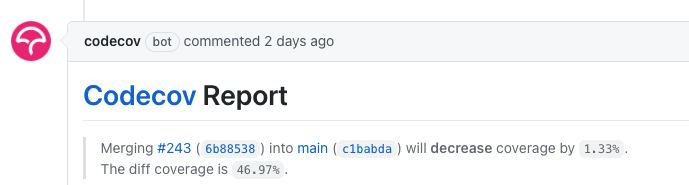

- We need to have some automation around this and have benchmarking results stored somewhere for each release, and ideally for each commit. This way we can track progress over time. It would be the best if we could have some job run on jenkins for each PR and generate a diff of performance metrics for main branch and given PR, e.g something similar to what codecov does:

- I expect some of these metrics to vary significantly depending on the configuration parameters provided. For example, server throughput would likely differ a lot between (a) 30 clients reporting data for the same app and (b) 30 clients reporting data for 30 different apps. I propose that when we pick configuration parameters, we make our best guess at what an average user's app configuration looks like. I'd love to hear other suggestions, though.
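One way to keep these configurations explicit and reproducible is to describe each run as a named scenario. A minimal Python sketch; the `Scenario` type, its fields and the `bench-app-N` naming are hypothetical and not part of the existing /benchmark code:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """One server-benchmark configuration (hypothetical schema)."""

    name: str
    clients: int  # number of simulated agents reporting to the server
    apps: int     # number of distinct application names they report under

    def app_names(self) -> list[str]:
        """Application name each client reports under, assigned round-robin."""
        if not 1 <= self.apps <= self.clients:
            raise ValueError("apps must be between 1 and clients")
        return [f"bench-app-{i % self.apps}" for i in range(self.clients)]


# The two extremes from the discussion: one shared app vs. one app per client.
SCENARIOS = [
    Scenario("30-clients-1-app", clients=30, apps=1),
    Scenario("30-clients-30-apps", clients=30, apps=30),
]

if __name__ == "__main__":
    for s in SCENARIOS:
        names = s.app_names()
        print(s.name, len(names), len(set(names)))  # clients, distinct apps
```

Intermediate points (e.g. 30 clients across 5 apps) are just more entries in the list, which makes the "average user" guess easy to revise later.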

Metrics For Server

- [ ] throughput, e.g. in profiles per second

- [ ] how many clients can one server support

- [ ] amount of storage required per day of profiling data

- [ ] TODO: come up with more metrics

Metrics For Agents

- [ ] CPU overhead of adding the Pyroscope integration

- [ ] #361

- [ ] network overhead (e.g. what's the size of one 10-second profile chunk?)

- [ ] TODO: come up with more metrics
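For the storage and network metrics, it helps to turn the size of one chunk into a daily figure. A back-of-the-envelope Python sketch, assuming the 10-second upload interval mentioned above and ignoring retries and HTTP overhead; the function names and the 20 KiB chunk size in the example are hypothetical, not measured values:

```python
SECONDS_PER_DAY = 24 * 60 * 60


def chunks_per_day(upload_interval_s: int = 10) -> int:
    """Number of profile chunks a single agent uploads per day."""
    return SECONDS_PER_DAY // upload_interval_s


def raw_bytes_per_day(chunk_size_bytes: int, clients: int = 1, upload_interval_s: int = 10) -> int:
    """Raw upload volume per day for `clients` agents.

    This is the network cost. On-disk storage may differ, depending on how
    the server encodes and indexes the data it ingests.
    """
    return chunk_size_bytes * chunks_per_day(upload_interval_s) * clients


if __name__ == "__main__":
    per_client = raw_bytes_per_day(20 * 1024)  # hypothetical 20 KiB chunk
    print(chunks_per_day(), per_client / 2**20)  # 8640 chunks, 168.75 MiB per day
```

Measuring the real chunk size is the benchmark's job; the arithmetic only shows how that one number scales to per-day and per-server figures.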

Proposed Milestones

- [x] finish the list of metrics we want to track (see a few paragraphs above)

- [x] have a good server benchmark suite

- [ ] have a good client benchmark suite for each integration

- [ ] have Jenkins track performance over time

- [ ] have Jenkins also generate diffs / summaries for each PR

We could also look at https://github.com/grafana/k6 for benchmarking.

Also, this might be overkill, but there may be something useful in Timescale's benchmarking suite, which compares TimescaleDB to other databases: https://github.com/timescale/tsbs

https://blog.timescale.com/blog/what-is-high-cardinality-how-do-time-series-databases-influxdb-timescaledb-compare/

To scope this issue more tightly, here are the goals and clear next steps for the project:

Goals:

- The benchmark suite should help us debug performance issues in both the server and the integrations (Go, Ruby and Python are the most important ones for now)

- We should have key performance metrics stored for each commit:

  - for the server:

    - average throughput per run

  - for clients (for each integration):

    - CPU utilization overhead

    - memory usage overhead

- This benchmark suite should be reproducible by end users (e.g. this is why we're using `docker-compose` for this)
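Both overhead metrics come down to running the same app with and without the agent and comparing the two. A minimal Python sketch, assuming CPU is sampled from the Docker Engine API's container stats JSON (the data behind `docker stats`); the helper names are hypothetical, and reporting relative overhead rather than a difference in percentage points is a choice, not something the suite prescribes:

```python
from statistics import mean


def cpu_percent(stats: dict) -> float:
    """CPU usage (in % of one core) from one Docker container-stats sample.

    Follows the formula the docker CLI uses for `docker stats`: the container's
    CPU-time delta divided by the host's CPU-time delta, times the number of
    online CPUs. Returns 0.0 when either delta is not positive.
    """
    cpu, pre = stats["cpu_stats"], stats["precpu_stats"]
    cpu_delta = cpu["cpu_usage"]["total_usage"] - pre.get("cpu_usage", {}).get("total_usage", 0)
    system_delta = cpu["system_cpu_usage"] - pre.get("system_cpu_usage", 0)
    online_cpus = cpu.get("online_cpus") or len(cpu["cpu_usage"].get("percpu_usage") or []) or 1
    if cpu_delta <= 0 or system_delta <= 0:
        return 0.0
    return cpu_delta / system_delta * online_cpus * 100.0


def relative_overhead(baseline: list[float], profiled: list[float]) -> float:
    """Extra usage of the profiled run, as a percentage of the baseline mean.

    Works for any metric sampled from both variants, e.g. CPU % or memory bytes.
    """
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return (mean(profiled) - base) / base * 100.0
```

With baseline CPU samples averaging 50% and profiled samples averaging 51%, `relative_overhead` reports 2%, which is the kind of figure start.sh could print after each run.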

Next Steps:

- [ ] add Go apps with and without Pyroscope to the benchmark docker-compose.yml

- [ ] the start.sh file in benchmark should print the key metrics (described above) after each run

- [ ] we should set up a Jenkins job to run for each commit (on all branches)

- [ ] we should set up this Jenkins job to report these key metrics to our main Prometheus server

- [ ] we should set up this Jenkins job to post summaries of the changes to each PR as PR comments (see the codecov integration for an example)
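The PR summary could be as simple as a markdown table comparing the metrics from main and from the PR. A Python sketch; the metric names, the 5% flag threshold and `format_diff` itself are hypothetical, and posting the result as a comment is left to the Jenkins job (no specific GitHub API call is assumed here):

```python
def format_diff(main: dict[str, float], pr: dict[str, float], threshold_pct: float = 5.0) -> str:
    """Render a markdown table comparing benchmark metrics from main and a PR.

    Only metrics present in both runs are compared; changes whose magnitude is
    at least `threshold_pct` percent are flagged.
    """
    rows = ["| metric | main | PR | change |", "|---|---:|---:|---:|"]
    for name in sorted(main.keys() & pr.keys()):
        old, new = main[name], pr[name]
        if old == 0:
            change = "n/a"  # relative change is undefined for a zero baseline
        else:
            pct = (new - old) / old * 100.0
            change = f"{pct:+.1f}%" + (" :warning:" if abs(pct) >= threshold_pct else "")
        rows.append(f"| {name} | {old:g} | {new:g} | {change} |")
    return "\n".join(rows)


if __name__ == "__main__":
    print(format_diff(
        {"agent_cpu_overhead_pct": 2.0, "server_throughput": 1000.0},
        {"agent_cpu_overhead_pct": 2.5, "server_throughput": 990.0},
    ))
```

Flagging both regressions and improvements above the threshold keeps the comment short while still surfacing changes worth a second look.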

^ This will give us a good foundation to build other things on in the future, e.g.:

- benchmarks for the Ruby and Python integrations

- more key metrics

Curious about this step:

> add go apps with and without pyroscope to benchmark docker-compose.yml

@petethepig, do you have any thoughts on what the characteristics of that app should be? For example, could the app be just a while loop? Could it be a medium-sized app (e.g. Jaeger's HotROD demo)? Should it be a large app (e.g. Google's Microservices Demo)?

@Rperry2174 Good question. I think there are two dimensions for picking an optimal app:

- how complex the app is (i.e. how big the call tree is, or how many individual nodes are in the resulting profile)

- how much work the app does (e.g. what the app's baseline CPU utilization is)

- If the app is not very complex and the profile has only a few nodes (e.g. a while loop), then there won't be much overhead. That's also not what most users run, so it's not a very good candidate.

- If the baseline CPU utilization is 100%, it's going to be very tricky to measure the Pyroscope agent's overhead.

- If the baseline CPU utilization is close to 0%, that might be fine, but then it's going to be hard to have a complex call tree. Plus, that's not the environment most users run their apps in.

Given all of this, I think we should aim for about the 75th percentile on both dimensions. That means:

- if you take 100 Go apps running in production and sort them by how complex their call trees are, we should pick the 75th one. I understand this is hard to do in practice, so for now let's not overthink it and just use our best judgement.

- we should aim for 75% baseline CPU utilization. 75% is also a commonly cited industry target for optimal CPU utilization, so we can assume most users run close to that number.
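For the CPU dimension, the 75% target can be approximated with a duty-cycle load: spin for 75% of each short period and sleep for the rest. A Python sketch, assuming a single-threaded workload (so "75%" means 75% of one core); `burn_cpu` and its parameters are hypothetical. It only controls the amount of work: its call tree is trivial, so a real benchmark app still needs a realistically complex code path on top of it.

```python
import time


def burn_cpu(target_utilization: float = 0.75, period_s: float = 0.1, duration_s: float = 10.0) -> int:
    """Keep one core busy for roughly `target_utilization` of each period.

    Busy-spins for target * period seconds, then sleeps for the rest of the
    period. Returns the number of periods completed.
    """
    if not 0.0 < target_utilization <= 1.0:
        raise ValueError("target_utilization must be in (0, 1]")
    busy_s = target_utilization * period_s
    periods = 0
    end = time.perf_counter() + duration_s
    while time.perf_counter() < end:
        start = time.perf_counter()
        while time.perf_counter() - start < busy_s:
            pass  # busy loop: this is the CPU being consumed
        # Sleep for whatever is left of the period (zero if we overran it).
        time.sleep(max(0.0, period_s - (time.perf_counter() - start)))
        periods += 1
    return periods
```

Short periods (tens of milliseconds) keep the load smooth at the granularity a sampling profiler sees, while still leaving the scheduler idle time to hand to the agent.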

Closing #398, but that PR should be revisited when we get around to this.

Following the ideas of https://github.com/pyroscope-io/pyroscope/issues/249#issuecomment-905851308, I started working on agent benchmarking in a new repo, https://github.com/pyroscope-io/agent-benchmarks. There are some differences from the original proposal:

- The Pyroscope server is left out of the agent benchmarks and replaced by an ingestor server whose behaviour we control. This lets us benchmark the agents under different server conditions, such as downtime, congestion, etc.

- The Docker SDK is used instead of docker-compose, which gives more flexibility: control over container start/stop, more precise time measurements, etc.

- Benchmarks are container-based and easy to create. This gives further flexibility: instead of trying to find a one-size-fits-all approach, we can validate the different scenarios individually.
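To illustrate the ingestor idea, a stub server only needs to accept uploads and misbehave on demand. A standard-library Python sketch; it is not the agent-benchmarks implementation, and the `mode` values, `delay_s`, `received_bytes` and `serve_in_background` are hypothetical. It accepts POSTs on any path and assumes each request carries a `Content-Length` header:

```python
import threading
import time
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class StubIngestHandler(BaseHTTPRequestHandler):
    """Stand-in for the ingestion server with a switchable behaviour."""

    mode = "ok"      # "ok", "down" (always 503) or "slow" (adds latency)
    delay_s = 0.5    # latency injected in "slow" mode
    received_bytes = 0
    _lock = threading.Lock()

    def do_POST(self) -> None:
        # Always drain the body so the connection stays in a sane state.
        body = self.rfile.read(int(self.headers.get("Content-Length", 0)))
        cls = type(self)
        if cls.mode == "down":
            self._reply(503)
            return
        if cls.mode == "slow":
            time.sleep(cls.delay_s)
        with cls._lock:
            cls.received_bytes += len(body)  # feeds a network-overhead metric
        self._reply(200)

    def _reply(self, status: int) -> None:
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format: str, *args) -> None:
        pass  # keep benchmark output quiet


def serve_in_background(port: int = 0) -> ThreadingHTTPServer:
    """Start the stub on localhost (port 0 = pick a free port) in a daemon thread."""
    server = ThreadingHTTPServer(("127.0.0.1", port), StubIngestHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Switching `StubIngestHandler.mode` mid-run lets one benchmark measure how an agent behaves while the server is down or slow, e.g. whether it buffers, retries or drops data, and what that costs in CPU and memory.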

Since agents may have a fixed start/stop cost, I think each benchmark should run for some minimum duration to avoid overestimating the profiling cost. That may make the benchmarks unsuitable for running per commit; a nightly run might make more sense. It would also be useful to store the results of each benchmark suite run so they can be compared, with something like https://perf.golang.org/ (e.g. https://perf.golang.org/search?q=upload:20220222.10).
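The minimum-duration argument can be made concrete with a simple model (an assumption, not a measurement): the agent costs a fixed amount of CPU time F to start and stop, plus a steady-state overhead r proportional to the app's baseline CPU use b. A run of length T then reports r + F / (b * T), so the bias from the fixed cost shrinks as 1/T. A Python sketch; all names and example numbers are hypothetical:

```python
def measured_overhead(steady: float, fixed_cpu_s: float, baseline_cpu: float, duration_s: float) -> float:
    """Overhead fraction a run of `duration_s` seconds would report under the model.

    steady:       true steady-state overhead, as a fraction of baseline CPU (0.02 = 2%)
    fixed_cpu_s:  one-off CPU seconds spent on agent start/stop
    baseline_cpu: baseline CPU use of the app, in cores (0.75 = 75% of one core)
    """
    return steady + fixed_cpu_s / (baseline_cpu * duration_s)


def min_duration(fixed_cpu_s: float, baseline_cpu: float, max_bias: float) -> float:
    """Shortest run for which the start/stop cost inflates the result by at most `max_bias`."""
    return fixed_cpu_s / (baseline_cpu * max_bias)
```

With F = 0.3 CPU-seconds and b = 0.75 cores, a 60-second run would report about 2.7% for a true 2% overhead, while keeping the bias under 0.1 percentage points (`max_bias=0.001`) needs roughly 400 seconds per benchmark, which supports running the suite nightly rather than per commit.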

For agent benchmarks, I think the https://github.com/pyroscope-io/agent-benchmarks repo and its issues should replace this one. There's also some discussion of server-related benchmarks in this issue; I'm not sure whether we should keep using this issue for those, or open a more specific one and close this one.