[helm/loki-simple-scalable] Are PVCs really required? / Retention not working?

My Goal

- Run Loki only with Object Storage from MinIO, if it is possible.

- I want to run Loki in a scalable way that is as easy as possible, which is why I decided to use loki-simple-scalable (or rather, its new location).

My Question

- Is it possible to run loki-simple-scalable without PVCs (only S3 from MinIO)?

- If yes, what does the configuration look like?

- If no, why are PVCs required? Which contents are saved in my PVCs? Why are my PVCs filling up?

- Are the retentions configured in the compactor and limits_config working with S3, or do I need to configure retention on my MinIO bucket? As I understand the release notes of Loki 2.3, there is no need for external (non-Loki) retention: "Loki now has the ability to apply custom retention based on stream selectors! This will allow much finer control over log retention all of which is now handled by Loki, no longer requiring the use of object store configs for retention." -> If yes, what does the configuration look like?

- Just out of interest: what is the difference between configuring retention in the compactor component and configuring retention in the table manager? The documentation says: "Retention through the Table Manager is achieved by relying on the object store TTL feature, and will work for both boltdb-shipper store and chunk/index store. However retention through the Compactor is supported only with the boltdb-shipper store." But the Table Manager section seems to contradict this: "When using S3 or GCS, the bucket storing the chunks needs to have the expiry policy set correctly. For more details check S3’s documentation or GCS’s documentation."

My current configuration

    gateway:
      replicas: 2
      autoscaling:
        enabled: true
        minReplicas: 2
        maxReplicas: 3
        targetCPUUtilizationPercentage: 60
        targetMemoryUtilizationPercentage: 80
      ingress:
        enabled: true
      resources:
        limits:
          #cpu: 50m
          memory: 30Mi
        requests:
          cpu: 5m
          memory: 15Mi
    loki:
      # The value below "config" must be a string instead of a map
      config: |
        auth_enabled: false
        common:
          path_prefix: /var/loki
          storage:
            filesystem: null
            s3:
              insecure: true
              s3: http://USERNAME:[email protected]:9000/loki
              s3forcepathstyle: true
        storage_config:
          boltdb_shipper:
            active_index_directory: /var/loki/index
            cache_location: /var/loki/cache
            cache_ttl: 24h # Can be increased for faster performance over longer query periods, uses more disk space
            shared_store: s3
        schema_config:
          configs:
            - from: "2020-09-07"
              store: boltdb-shipper
              object_store: s3
              schema: v12
              index:
                period: 24h
                prefix: index_
        limits_config:
          retention_period: 1h
          enforce_metric_name: false
          max_cache_freshness_per_query: 10m
          reject_old_samples: true
          reject_old_samples_max_age: 168h
          split_queries_by_interval: 15m
        compactor:
          compaction_interval: 10m
          retention_enabled: true
          retention_delete_delay: 2h
          retention_delete_worker_count: 150
        table_manager:
          retention_deletes_enabled: true
          retention_period: 1h
        chunk_store_config:
          max_look_back_period: 1h
        query_scheduler:
          max_outstanding_requests_per_tenant: 1000
        memberlist:
          join_members:
            - loki-memberlist
        server:
          http_listen_port: 3100
          grpc_listen_port: 9095
    write:
      replicas: 2
      autoscaling:
        enabled: true
        minReplicas: 2
        maxReplicas: 3
        targetCPUUtilizationPercentage: 60
        targetMemoryUtilizationPercentage: 80
      persistence:
        size: 5Gi
        storageClass: longhorn
      resources:
        limits:
          #cpu: 400m
          memory: 1200Mi # Don't decrease this limit. Loki uses enough memory to prevent dataloss (when storage backend unavailable)
        requests:
          cpu: 35m
          memory: 300Mi # Don't decrease this limit. Loki can fastly grow up in memory and this can cause OOM-Killing of other pods
    read:
      replicas: 2
      autoscaling:
        enabled: true
        minReplicas: 2
        maxReplicas: 3
        targetCPUUtilizationPercentage: 60
        targetMemoryUtilizationPercentage: 80
      persistence:
        size: 1Gi
        storageClass: longhorn
      resources:
        limits:
          #cpu: 400m
          memory: 150Mi
        requests:
          cpu: 50m
          memory: 75Mi
    serviceMonitor:
      enabled: true
    monitoring:
      selfMonitoring:
        enabled: false
        grafanaAgent:
          installOperator: false

Environment-specific Helm values:

    gateway:
      ingress:
        hosts:
          - host: loki.apps-test-1....
            paths:
              - path: /
                pathType: ImplementationSpecific
        tls:
          - hosts:
              - loki.apps-test-1....
            secretName: ingress-wildcard-cert

-> I already opened this issue in the previous location of the Loki-Simple-Scalable Helm Chart, but because the Helm chart moved (thanks to @zanhsieh), I opened a new issue here.

There are other users with the same issue.

This problem has been bothering me

I'm having the same issue, but after reading the docs over and over again I think the retention configured here for the compactor applies only to the index (boltdb_shipper store). So no logs older than the retention period are displayed in Grafana, but the chunks aren't deleted by the compactor.

From https://grafana.com/docs/loki/latest/operations/storage/retention/: "When using S3 or GCS, the bucket storing the chunks needs to have the expiry policy set correctly. For more details check S3’s documentation or GCS’s documentation."

Although, @R-Studio, you are right: the 2.3 release notes sound a bit different.

@atze234 thanks for your reply. It is a little bit confusing, because the docs for the compactor say the following:

The Compactor can deduplicate index entries. It can also apply granular retention. When applying retention with the Compactor, the Table Manager is unnecessary.

-> It would be helpful if the compactor docs also included the following sentence: "When using S3 or GCS, the bucket storing the chunks needs to have the expiry policy set correctly."

Anyway, do you know how to configure Loki in "scalable mode" without persistent volumes (so that Loki only uses S3)?

@atze234 @R-Studio The compactor docs also say: "The chunks will be deleted by the compactor asynchronously when swept." and "Marked chunks will only be deleted after retention_delete_delay configured is expired" (plus an explanation of why that is). So I'd say no expiry policy on the object store is necessary with the current Loki version. (Purely based on reading the docs, I hope to have time soon to try it out)
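For reference, the compactor-side retention settings being discussed can be sketched as follows. This is only a minimal sketch that reuses keys already posted in this thread; the 168h period is an illustrative value, and whether this alone makes chunks disappear from the object store is exactly what is being debated here.

```yaml
compactor:
  working_directory: /var/loki/compactor  # path taken from a config posted later in this thread
  shared_store: s3
  compaction_interval: 10m
  retention_enabled: true                 # without this the compactor only compacts the index
  retention_delete_delay: 2h              # marked chunks are deleted only after this delay
  retention_delete_worker_count: 150
limits_config:
  retention_period: 168h                  # illustrative global retention (7 days)
```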

@R-Studio With the current chart it's not possible to configure the PVC away. You could maybe use an ephemeral storage class, although I think there would be data loss on restarts, so I won't do that.

@davdr I tried with just the compactor and no chunks were deleted from S3. I tried with 7 days of retention, and even after 4 weeks there were old chunks in S3. That's why I came here :)

@R-Studio

The table_manager docs say: "The retention period must be a multiple of the index / chunks", the compactor docs say "The minimum retention period is 24h.", and the schema_config docs mention that the default period of index and chunks is 168h.

Can you check your configuration and try again?

@atze234 Thanks for your reply, but why is it possible with the "Single Binary" mode?

@guoew If I have understood you correctly, then the following configuration should work with the retention of 4 days:

    loki:
      # The value below "config" must be a string instead of a map
      config: |
        auth_enabled: false
        common:
          path_prefix: /var/loki
          replication_factor: 3
          storage:
            filesystem: null
            s3:
              insecure: true
              s3: http://<USER:PASSWORD>@minio.minio.svc.cluster.local:9000/loki
              s3forcepathstyle: true
        storage_config:
          boltdb_shipper:
            active_index_directory: /var/loki/index
            cache_location: /var/loki/cache
            cache_ttl: 24h # Can be increased for faster performance over longer query periods, uses more disk space
            shared_store: s3
        schema_config:
          configs:
            - from: "2020-09-07"
              store: boltdb-shipper
              object_store: s3
              schema: v12
              index:
                period: 24h
                prefix: index_
        limits_config:
          retention_period: 4d
          enforce_metric_name: false
          max_cache_freshness_per_query: 10m
          reject_old_samples: true
          reject_old_samples_max_age: 168h
          split_queries_by_interval: 15m
        compactor:
          compaction_interval: 10m
          retention_enabled: true
          retention_delete_delay: 2h
          retention_delete_worker_count: 150
        table_manager:
          retention_deletes_enabled: true
          retention_period: 4d
        query_scheduler:
          max_outstanding_requests_per_tenant: 1000
        memberlist:
          join_members:
            - loki-memberlist
        server:
          http_listen_port: 3100
          grpc_listen_port: 9095
          grpc_server_max_recv_msg_size: 104857600
          grpc_server_max_send_msg_size: 104857600
        ingester_client:
          grpc_client_config:
            max_recv_msg_size: 104857600
        ruler:
          alertmanager_url: http://alertmanager-operated.cattle-monitoring-system.svc.cluster.local:9093
          enable_alertmanager_v2: true
          enable_api: true
          enable_sharding: true

-> Until now the retention is not working, but maybe I have to wait. (I will get back to you later)

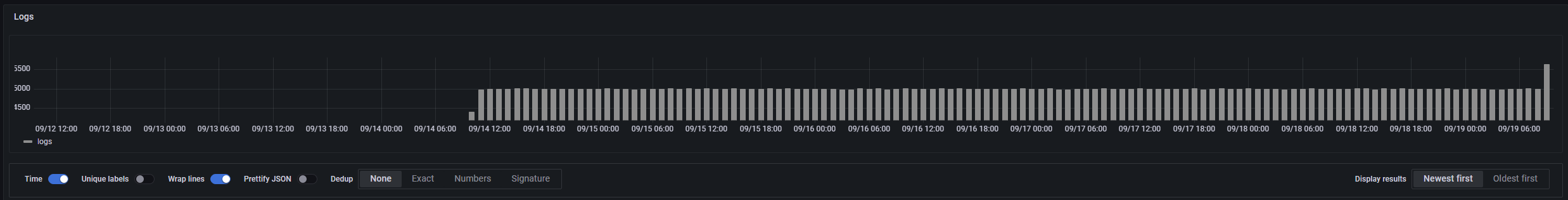

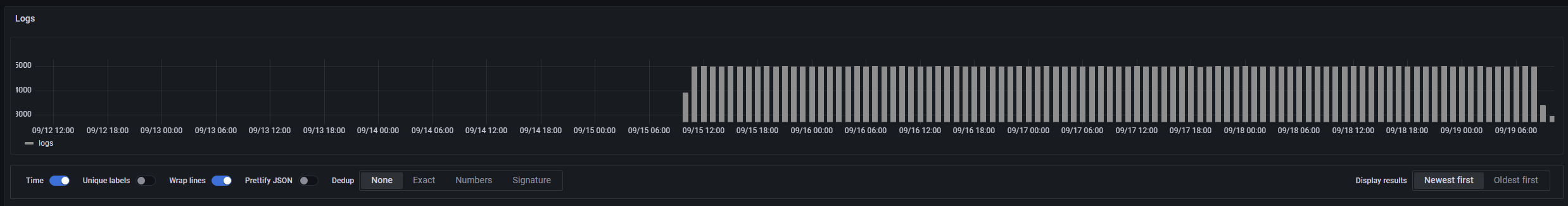

@guoew It looks like the retention is working now:

Before changing the retention to 4 days:

After changing the retention to 4 days:

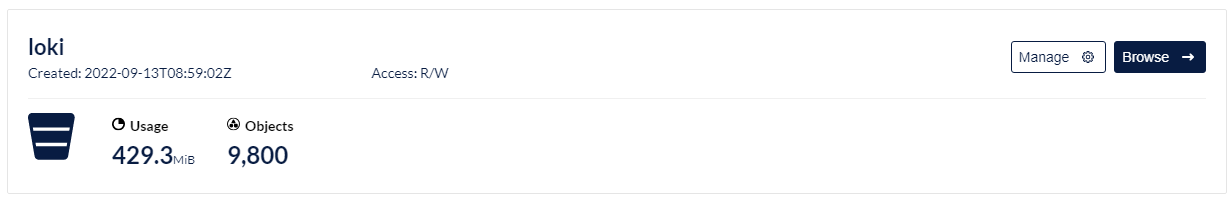

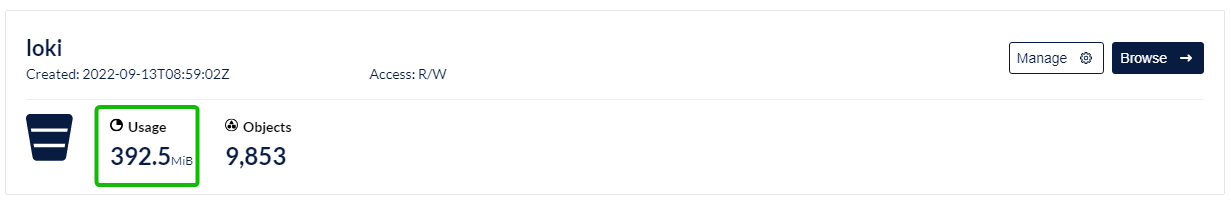

But on S3 (MinIO) I don't see that Loki has deleted anything. Maybe it takes some time for MinIO to really delete the files.

Before changing the retention to 4 days:

After changing the retention to 4 days:

@R-Studio You can change schema_config to the following configuration and try again.

    schema_config:
      configs:
        - from: "2020-09-07"
          store: boltdb-shipper
          object_store: s3
          schema: v12
          index:
            period: 24h
            prefix: index_
          chunks:
            period: 24h

@guoew what does this mean/change? And does it work if I set it only now?

I changed it, but no difference:

Now it works, thanks! 👍😊😉

If I have understood Loki retention correctly, you have to set the following configuration to make retention work, correct? Example for a retention of 7 days (only the retention-relevant configuration):

    schema_config:
      configs:
        - index:
            period: 24h
          chunks:
            period: 24h
    limits_config:
      retention_period: 7d
    compactor:
      retention_enabled: true
    table_manager:
      retention_deletes_enabled: true
      retention_period: 7d

-> @atze234 maybe this also helps you.

For me it's not deleting anything from chunks :-1: aws-cli easily finds files older than 7 days. My config:

    auth_enabled: true
    common:
      path_prefix: /var/loki
      replication_factor: 3
      storage:
        s3:
          bucketnames: <redacted>
          endpoint: s3.eu-central-1.amazonaws.com
          insecure: false
          region: eu-central-1
          s3: <redacted>
          s3forcepathstyle: false
    compactor:
      compaction_interval: 10m
      retention_delete_delay: 2h
      retention_delete_worker_count: 150
      retention_enabled: true
      shared_store: s3
      working_directory: /var/loki/compactor
    limits_config:
      enforce_metric_name: false
      max_cache_freshness_per_query: 10m
      reject_old_samples: true
      reject_old_samples_max_age: 168h
      retention_period: 7d
      split_queries_by_interval: 15m
    memberlist:
      join_members:
        - loki-memberlist
    query_range:
      align_queries_with_step: true
    query_scheduler:
      max_outstanding_requests_per_tenant: 2048
    ruler:
      storage:
        s3:
          bucketnames: <redacted>
          endpoint: s3.eu-central-1.amazonaws.com
          insecure: false
          region: eu-central-1
          s3: <redacted>
          s3forcepathstyle: false
    schema_config:
      configs:
        - chunks:
            period: 24h
          from: "2022-01-11"
          index:
            period: 24h
            prefix: loki_index_
          object_store: s3
          schema: v12
          store: boltdb-shipper
    server:
      grpc_listen_port: 9095
      http_listen_port: 3100
    storage_config:
      aws:
        s3: s3://eu-central-1/<redacted>
      boltdb_shipper:
        active_index_directory: /var/loki/index
        cache_location: /var/loki/boltdb-cache
        resync_interval: 5s
        shared_store: s3
      hedging:
        at: 250ms
        max_per_second: 20
        up_to: 3
    table_manager:
      retention_deletes_enabled: true
      retention_period: 7d

Increase every day


Given the conversation in #7210 is it ok to close this issue?

@trevorwhitney

Hi, there is still no clear answer about what Loki supports and what it can do. I have the same issue with Loki's S3 integration: everything works well except retention. I set it to 168h using the compactor, but nothing happens and the logs stay there forever.

- Does Loki support automatic deletion of chunks from an S3-like API (in my case Ceph object storage)?

- Does the simple scalable chart include the compactor, or do we need to add it manually somehow? How?

- If Loki doesn't support retention on S3, then I believe I need to set retention policies on the bucket?

- How can I find the compactor logs? I examined all pods of the simple-scalable deployment and can't find any pod or container for the compactor. Is it part of some other container/pod, or is it just missing?

Looking at the documentation, it is confusing, as mentioned above. Part of the configuration at https://grafana.com/docs/loki/latest/storage/ looks like an answer that it is not supported for S3, but it also says "For more information, see the retention configuration documentation."

When I go there, I find nothing more about the S3 case.

...and when https://grafana.com/docs/loki/latest/operations/storage/retention/ says "The chunks will be deleted by the compactor asynchronously when swept", I believe it means they will be deleted only from boltdb-shipper, but not from S3. My suggestion would be to add a note to https://grafana.com/docs/loki/latest/operations/storage/retention/ that clearly says deletion of chunks from S3 (and maybe other stores) is not supported, and that the compactor/table-manager only removes chunks from the cache/index (boltdb-shipper or similar).

@bbroniewski the compactor is in the read component of the simple scalable deployment. The compactor's logs will be part of the read pod. If you just want to make sure it's running, you could look for caller=compactor.go. You should set retention policies in your bucket (i.e. TTL), and the compactor will handle removing old chunks from the index so they no longer return in queries.

I agree the docs could probably use a bit of clarification regarding retention. Would you mind submitting a PR?

@trevorwhitney Thank you for the answer! I can prepare an enhancement of the docs, but I will need your support to understand it fully. Is there a channel where I can ask you questions and get answers more quickly while preparing the documentation change?

I am in the community Grafana Slack, but I would encourage you to open a PR (even if it's not perfect) and we can collaborate through the PR comment/review process.

Can I somehow select which logs will be kept and which logs will have a retention policy, or is it a "catch-all" thing?

@SnoozeFreddo you can use retention per stream selector: https://grafana.com/docs/loki/latest/operations/storage/retention/#configuring-the-retention-period
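A minimal sketch of what per-stream retention could look like, based on the retention_stream option described on the linked page. The selector, priority, and both periods are hypothetical example values, not settings from this thread, and it assumes retention_enabled: true is set on the compactor.

```yaml
limits_config:
  retention_period: 744h               # example global default for all other streams
  retention_stream:
    - selector: '{namespace="dev"}'    # hypothetical stream selector
      priority: 1                      # used to pick a rule when several selectors match
      period: 24h                      # matching streams are kept for 24h only
```

Streams that match no selector fall back to the global retention_period.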

@atze234

try removing storage_config.aws configuration
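Applied to the config posted above, that suggestion would presumably leave storage_config looking like the sketch below. Only the aws block is dropped; everything else is copied from that config. The assumption here (the comment gives no reason) is that the S3 settings under common.storage then remain the only object store definition.

```yaml
storage_config:
  # aws block removed; S3 is assumed to come from common.storage.s3 instead
  boltdb_shipper:
    active_index_directory: /var/loki/index
    cache_location: /var/loki/boltdb-cache
    resync_interval: 5s
    shared_store: s3
  hedging:
    at: 250ms
    max_per_second: 20
    up_to: 3
```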

"the compactor is in the read component of simple scalable deployment. The compators logs will be part of the read pod. If you just want to make sure it's running, you could look for caller=compactor.go."

As of grafana/loki:3.0.0 I was able to find caller=compactor.go only in component=backend of simple scalable deployment.