ferry

ferry copied to clipboard

ferry copied to clipboard

The cache causes memory leak

Setting a FetchPolicy that read data in cache cause memory leak

Each time, a widget start to read the cache, memory usage increase and never go down.

Here are the result of the leak by FetchPolicy and type of Store:

| CacheOnly | CacheFirst | CacheAndNetwork | NetworkOnly | NoCache | |

|---|---|---|---|---|---|

| HiveStore | Leak | Leak | Leak | No Leak | No Leak |

| Memory Store | Leak | Leak | Leak | No Leak | No Leak |

By elimination, the top method that causes the leak should be CacheTypedLink.request.

Minimal step to reproduce the bug:

https://github.com/T-moz/ferry_memory_leak

Pubspec:

name: ferry_memory_leak

description: A new Flutter project.

publish_to: 'none' # Remove this line if you wish to publish to pub.dev

version: 1.0.0+1

environment:

sdk: ">=2.16.1 <3.0.0"

dependencies:

flutter:

sdk: flutter

cupertino_icons: ^1.0.2

ferry: ^0.10.5-dev.3

gql_http_link: ^0.4.0

hive: ^2.0.5

hive_flutter: ^1.1.0

ferry_hive_store: ^0.4.4

ferry_flutter: ^0.5.5-dev.3

dev_dependencies:

flutter_test:

sdk: flutter

ferry_generator: ^0.5.0-dev.10

build_runner: ^2.1.7

gql_exec: ^0.4.0

gql_build: ^0.4.0

flutter_lints: ^1.0.0

flutter:

uses-material-design: true

This way to reproduce the bug can look kind of dumb. But the real life problem is, the more a user use the app, the more the memory usage will go up.

Thanks for your work. I'm available to help fix this if needed

Are you sending the exact same request every time (same parameters)? Because if you do, it shouldn't increase the cache size, right?

Yes that's the same request. I the demo app, I set up a timer that will display then hide a QueryBuilder.

But the experience shows that the cache size increases when it should not.

Hey, so I did a bit of investigation and it may not be as bad as we thought.

In your sample repo, you're not creating any Store. Therefore, ferry will default to using the MemoryStore. Now if you look at the implementation, it's basically a stream where we're always adding elements that's why it keeps growing.

I then created my own store which only prints method calls to understand how the store is being used by ferry. By running your code with it, I could see in the console that we only call get and put, never delete(All) or clear.

My Store

import 'package:ferry/ferry.dart';

class MyStore extends Store {

@override

void clear() {

print('clear');

}

@override

void delete(String dataId) {

print('delete ' + dataId);

}

@override

void deleteAll(Iterable<String> dataIds) {

print('delete all');

}

@override

Map<String, dynamic>? get(String dataId) {

print('get ' + dataId);

return null;

}

@override

Iterable<String> get keys {

print('get keys');

return [];

}

@override

void put(String dataId, Map<String, dynamic>? value) {

print('put ' + dataId);

}

@override

void putAll(Map<String, Map<String, dynamic>?> data) {

print('putAll ' + data.keys.join(', '));

}

@override

Stream<Map<String, dynamic>?> watch(String dataId) {

print('watch ' + dataId);

return const Stream.empty();

}

}

Finally, I used the HiveStore and by running the code on the web, I could see that the number of entries remain constant (around 418 I think).

Since it doesn't seem that we can deactivate the cache completely, my recommendation would be to use the HiveStore...

Thanks for your response ! Hover, I did try to use HiveStore in a variation of the sample repo. The problem wasn't fixed. Also if I remember correctly, I did look the number of entries in the store, and it wasn't growing. A better indicator to measure the problem is the memory tab of the debugger.

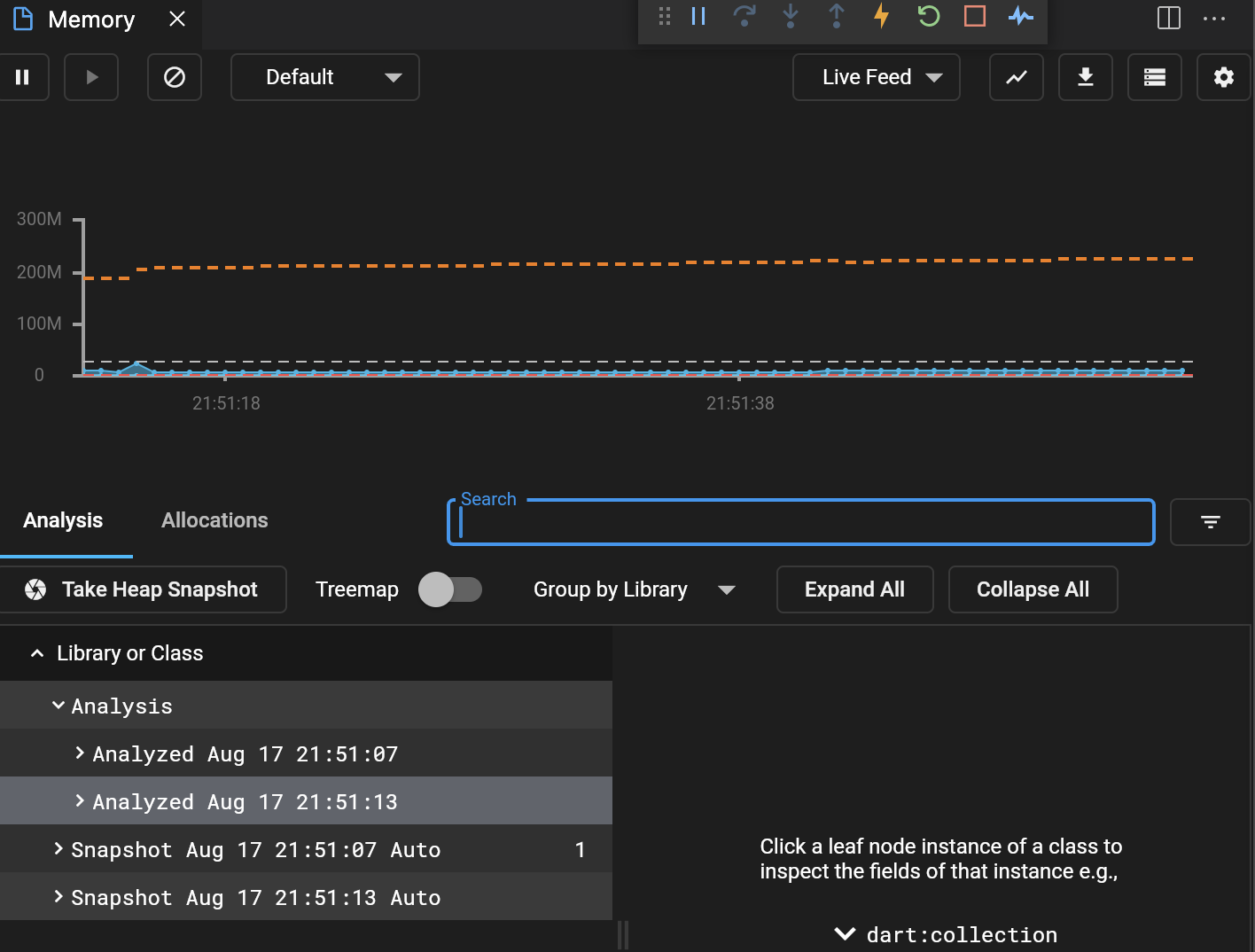

I see what you mean. I re-run the app in profile mode on an Android emulator and this _Closure from dart:core keeps growing. But I don't know where it comes from...

Memory screenshot

Thank you. It really seems that there is a memory leak somewhere. I don't yet know where exactly and I probably won't have time to dig deeper into this in the next weeks.

If someone wants to spend some time to research, some suggestions:

-

find out if a leak occurs if when using the Cache instance directly (calling e.g. watchQuery on the cache) or if it is the FetchPolicyTypedLink or the CacheTypedLink

-

see if you can trigger similar behavior using the cache implementation of the

graphqlpackage (which is similar on also based onnormalize, but a different implementation

Actually, the issue is also with Hive. I looked at the implementation and it seems that the HiveStore keeps data both in memory and in the indexedDB. There is also this section of the docs that seems to indicate that this could be an issue.

If I replace the store implementation with my own above (which only prints), then the memory stays constant:

Memory screenshot

I'm going to work on making the Store async so that we could use the LazyBox instead of Isar but that will be a breaking change.

The EmptyStore probably does not cause a leak because you return an empty Stream on watch(). You can try creating a StreamController and return its .stream and see if that leaks. (a subscription to an empty is cancelled immediately so there is less chance of leaked subscriptions, which are probably the cause)

I tried replacing MyStore.watch() with this and the leak didn't happen:

@override

Stream<Map<String, dynamic>?> watch(String dataId) {

final controller = StreamController<Map<String, dynamic>?>();

return controller.stream;

}

MyStore full implementation

import 'dart:async';

import 'package:ferry/ferry.dart';

class MyStore extends Store {

@override

void clear() {

print('clear');

}

@override

void delete(String dataId) {

print('delete ' + dataId);

}

@override

void deleteAll(Iterable<String> dataIds) {

print('delete all');

}

@override

Map<String, dynamic>? get(String dataId) {

print('get ' + dataId);

return null;

}

@override

Iterable<String> get keys {

print('get keys');

return [];

}

@override

void put(String dataId, Map<String, dynamic>? value) {

print('put ' + dataId);

}

@override

void putAll(Map<String, Map<String, dynamic>?> data) {

print('putAll ' + data.keys.join(', '));

}

@override

Stream<Map<String, dynamic>?> watch(String dataId) {

print('watch ' + dataId);

final controller = StreamController<Map<String, dynamic>?>();

return controller.stream;

// return const Stream.empty();

}

}

Then I change the strategy to test using the Cache instance directly: instead of toggle the display of EpisodesList which makes the request:

- I display it all the time

- I extracted the request in a global variable for step 3

- When you click on the bottom, I launch a periodic timer which does cache.write(request, data) with some generated data

The leak didn't happen (tested with MyStore, InMemoryStore, HiveStore).

Full code

import 'dart:async';

import 'package:ferry/ferry.dart';

import 'package:ferry_flutter/ferry_flutter.dart';

import 'package:ferry_hive_store/ferry_hive_store.dart';

import 'package:ferry_memory_leak/__generated__/episodes.data.gql.dart';

import 'package:ferry_memory_leak/__generated__/episodes.req.gql.dart';

import 'package:ferry_memory_leak/__generated__/episodes.var.gql.dart';

import 'package:flutter/material.dart';

import 'package:gql_http_link/gql_http_link.dart';

import 'package:hive_flutter/hive_flutter.dart';

void main() async {

await Hive.initFlutter('hive_data');

final box = await Hive.openBox("graphql", compactionStrategy: (total, deleted) => total > 100 || deleted > 100);

final store = HiveStore(box);

// final cache = Cache(store: MyStore());

final cache = Cache(store: store);

final client = Client(

link: link,

cache: cache,

defaultFetchPolicies: defaultFetchPolicies,

);

runApp(MyApp(client));

}

final defaultFetchPolicies = {

OperationType.query: FetchPolicy.CacheFirst,

};

final link = HttpLink("https://rickandmortyapi.com/graphql");

class MyApp extends StatelessWidget {

const MyApp(this.client, {Key? key}) : super(key: key);

final Client client;

@override

Widget build(BuildContext context) {

return MaterialApp(

title: 'Ferry memory leak',

theme: ThemeData(

primarySwatch: Colors.blue,

),

home: MyHomePage(client, title: 'Ferry memory leak'),

);

}

}

class MyHomePage extends StatefulWidget {

const MyHomePage(this.client, {Key? key, required this.title})

: super(key: key);

final String title;

final Client client;

@override

State<MyHomePage> createState() => _MyHomePageState();

}

final req = GgetEpisodesReq((b) => b.vars.page = 1);

class _MyHomePageState extends State<MyHomePage> {

bool _isLooping = false;

Timer? _timer;

void _writeCache() {

widget.client.cache.writeQuery(req, GgetEpisodesData((data) {

final episodes = List.generate(

100,

(index) => GgetEpisodesData_episodes_results((episode) {

episode

..id = '$index'

..name = 'Episode $index'

..episode = '$index'

..air_date = '$index'

..created = '$index';

episode.characters.addAll([]);

}));

data.episodes.info.count = episodes.length;

data.episodes.results.addAll(episodes);

}));

}

void _loopToggleDisplayEpisodes() {

if (!_isLooping) {

_timer = Timer.periodic(const Duration(milliseconds: 250), (_) {

_writeCache();

});

} else {

_timer?.cancel();

}

setState(() {

_isLooping = !_isLooping;

});

}

@override

Widget build(BuildContext context) {

return Scaffold(

appBar: AppBar(

title: Text(widget.title),

),

body: Column(

mainAxisSize: MainAxisSize.min,

mainAxisAlignment: MainAxisAlignment.center,

children: [

Row(

mainAxisAlignment: MainAxisAlignment.spaceAround,

children: [

ElevatedButton(

onPressed: _writeCache,

child: const Text("Toggle episodes"),

),

ElevatedButton(

onPressed: _loopToggleDisplayEpisodes,

child: Text(_isLooping ? "Stop" : "Trigger memory leak"),

),

],

),

Expanded(child: EpisodesList(widget.client)),

],

),

);

}

}

class EpisodesList extends StatelessWidget {

const EpisodesList(this.client, {Key? key}) : super(key: key);

final Client client;

Widget builder(

BuildContext context,

OperationResponse<GgetEpisodesData, GgetEpisodesVars>? response,

Object? error,

) {

final data = response?.data;

if (data == null || (response?.loading ?? true)) {

return const Center(child: CircularProgressIndicator());

}

return ListView.builder(

itemCount: data.episodes?.results?.length,

itemBuilder: ((context, index) => ListTile(

title: Text(data.episodes?.results?[index].name ?? ""),

)),

);

}

@override

Widget build(BuildContext context) {

return Operation(

client: client,

operationRequest: req,

builder: builder,

);

}

}