Reimplementing a lighter sdk-server

Is your feature request related to a problem? Please describe.

As far as I know, each sdk-server currently connects to the apiserver and resyncs every 30 seconds, so with a large number of game servers the resyncs generate a lot of requests and affect the whole cluster.

Describe the solution you'd like

Describe alternatives you've considered

One idea is to pull what the sdk-server does out into a new service that is responsible for managing the state of game servers across the cluster. This would be more complex, but it would make the sdk-server lighter and faster to start.

Additional context

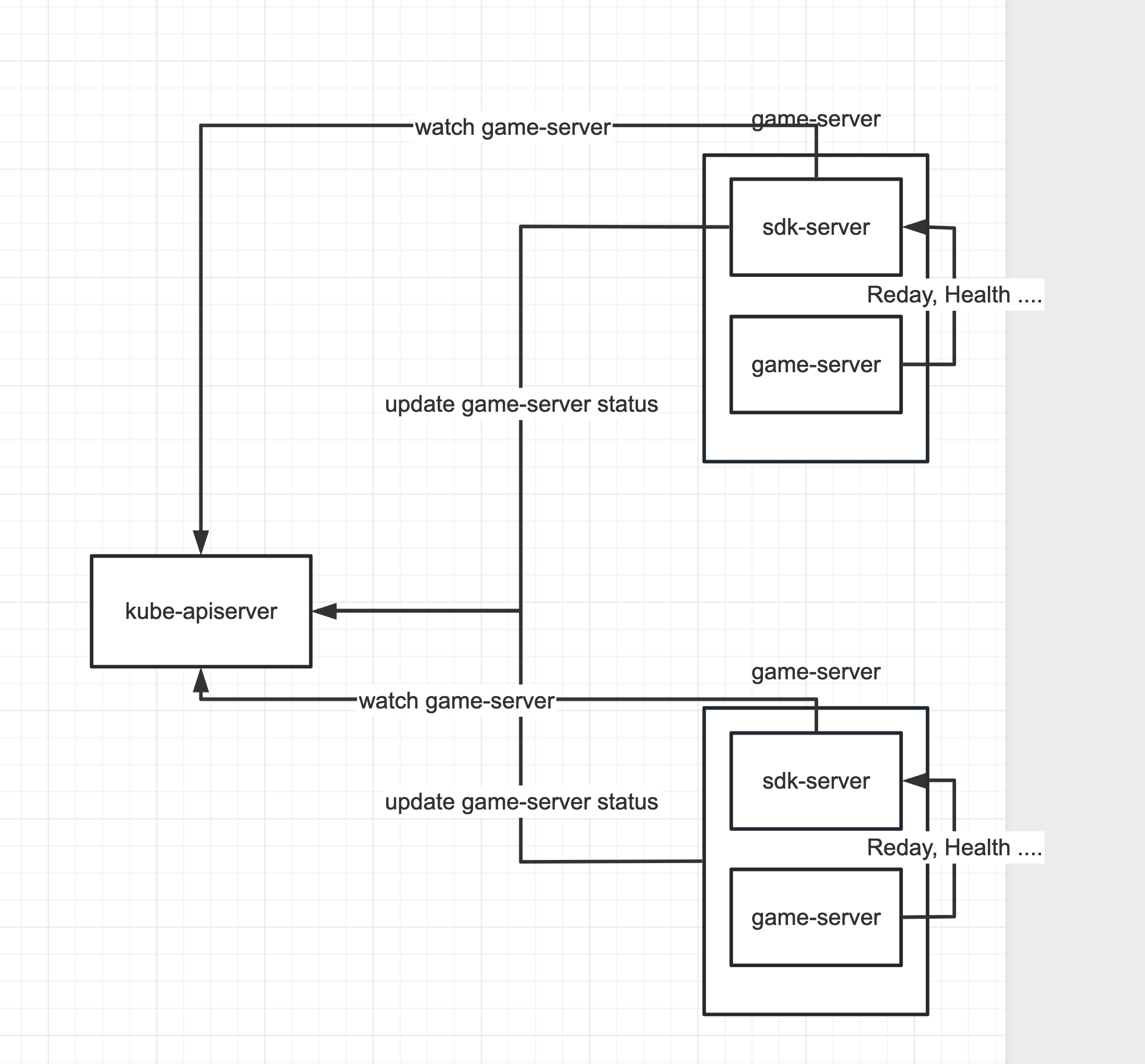

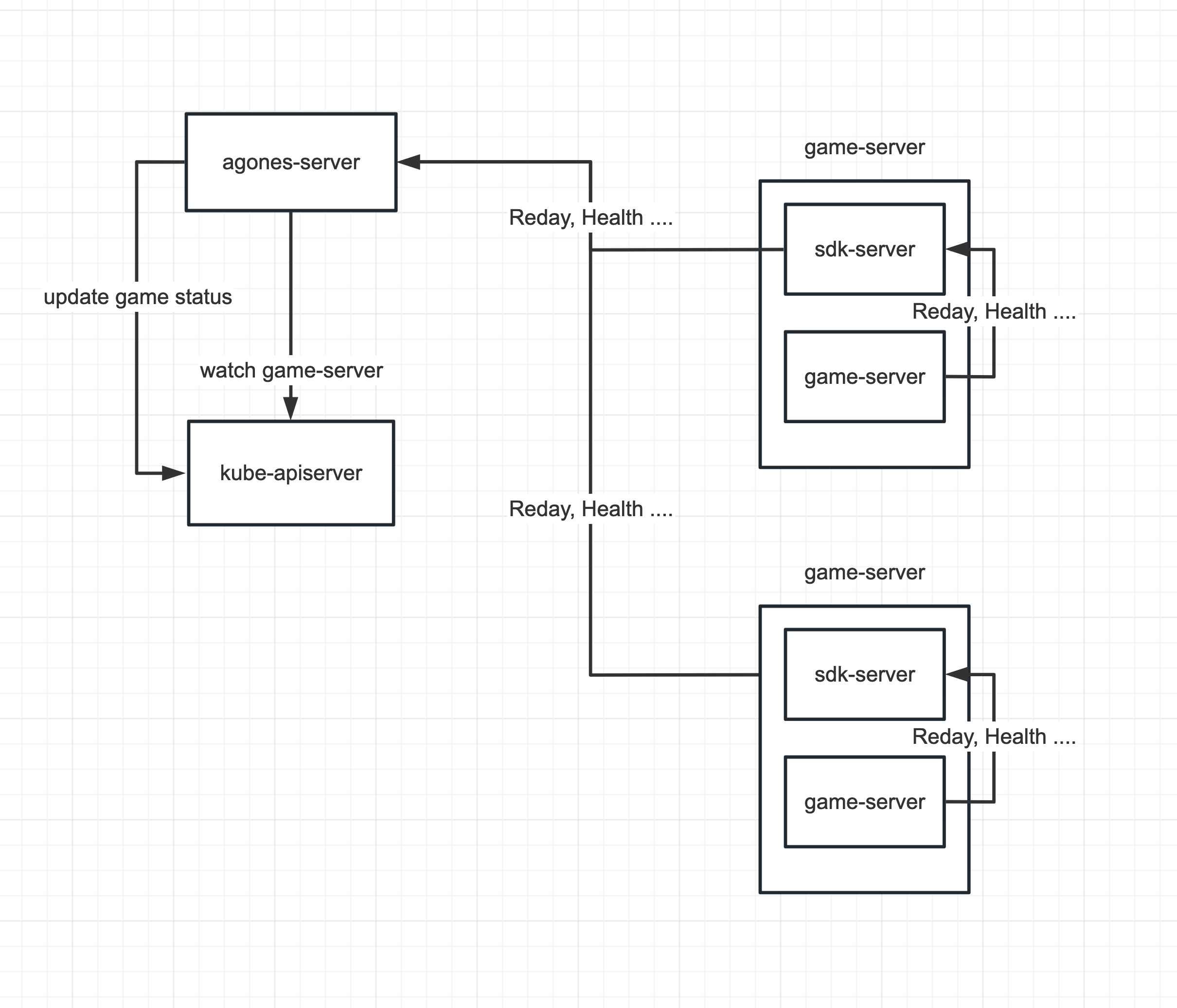

Here is a diagram I drew

This is the flow chart of the current design as I understand it; I don't know if my understanding is completely correct.

This is the design I want to change to.

We've had this conversation pretty much since day 1 with Agones. The biggest question is always: is the overhead of the watches on the K8s API surface large enough to justify building out and maintaining all this extra infrastructure, when tying to the K8s API solves so many problems for us, gives us libraries for testing and integration, and means we don't have to maintain any of it?

So... I guess this is a way of saying: we'd need a lot of data to consider this, I think.

I have an idea

Is it possible to implement a proxy that provides the same style as apiserver, so that the sdk-server has very few or no changes?

The proxy would handle all requests from the sdk-server via client-go, and would call the apiserver directly for calls that are not cacheable, and would always return to the sdk-server from memory for list and watch and get.
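A rough sketch of that read-through idea, using only the Go standard library. `Backend`, `fakeAPI`, and `CachingProxy` are hypothetical names for illustration, not Agones or client-go types, and a real proxy would also need a watch to keep the cache fresh, which is left out here.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// GameServer is a simplified stand-in for the real resource (hypothetical).
type GameServer struct {
	Name  string
	State string
}

// Backend is a hypothetical interface standing in for the kube-apiserver.
type Backend interface {
	Get(name string) (GameServer, error)
	Update(gs GameServer) error
}

// fakeAPI counts calls so the effect of caching is visible.
type fakeAPI struct {
	mu     sync.Mutex
	data   map[string]GameServer
	reads  int
	writes int
}

func (f *fakeAPI) Get(name string) (GameServer, error) {
	f.mu.Lock()
	defer f.mu.Unlock()
	f.reads++
	gs, ok := f.data[name]
	if !ok {
		return GameServer{}, errors.New("not found")
	}
	return gs, nil
}

func (f *fakeAPI) Update(gs GameServer) error {
	f.mu.Lock()
	defer f.mu.Unlock()
	f.writes++
	f.data[gs.Name] = gs
	return nil
}

// CachingProxy serves reads from memory and forwards writes upstream.
type CachingProxy struct {
	mu       sync.RWMutex
	upstream Backend
	cache    map[string]GameServer
}

func NewCachingProxy(b Backend) *CachingProxy {
	return &CachingProxy{upstream: b, cache: map[string]GameServer{}}
}

// Get returns the cached copy, falling back to the upstream on a miss.
func (p *CachingProxy) Get(name string) (GameServer, error) {
	p.mu.RLock()
	gs, ok := p.cache[name]
	p.mu.RUnlock()
	if ok {
		return gs, nil
	}
	gs, err := p.upstream.Get(name)
	if err != nil {
		return GameServer{}, err
	}
	p.mu.Lock()
	p.cache[name] = gs
	p.mu.Unlock()
	return gs, nil
}

// Update is not cacheable: it always goes upstream, then refreshes the cache.
func (p *CachingProxy) Update(gs GameServer) error {
	if err := p.upstream.Update(gs); err != nil {
		return err
	}
	p.mu.Lock()
	p.cache[gs.Name] = gs
	p.mu.Unlock()
	return nil
}

func main() {
	api := &fakeAPI{data: map[string]GameServer{"gs-1": {Name: "gs-1", State: "Ready"}}}
	proxy := NewCachingProxy(api)
	for i := 0; i < 100; i++ {
		_, _ = proxy.Get("gs-1")
	}
	_ = proxy.Update(GameServer{Name: "gs-1", State: "Allocated"})
	gs, _ := proxy.Get("gs-1")
	fmt.Println(api.reads, api.writes, gs.State) // prints "1 1 Allocated"
}
```

In this toy run, 100 reads from the sdk-server side turn into a single upstream read, while the write still reaches the upstream directly.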

In my cluster, I had problems with a large number of game servers that made the apiserver particularly slow to respond; when I removed some of the game servers, the cluster returned to normal. I didn't confirm whether the problem was caused by the sdk-server. The agones-controller also restarted repeatedly due to request timeouts.

That's still a tonne of work to do and maintain. Unless there is some kind of existing "K8s API proxy" project that we could use?

But even then, there's a lot of new complexity there. So it still comes back to having real data.

This came up again in an external conversation about running lots of game servers on a node, and the resources the sdk sidecar takes up (which is totally valid!).

The other thing that always worried me about the daemonset approach is that, with the sidecar approach, we can lock the sidecar API to only access the GameServer it is hosted with.

On a daemonset, it would have to be able to access the data of any GameServer on that node -- so there is a bunch of extra security work we would have to do to mitigate this in the case of a compromised GameServer binary.

Not insurmountable, but potentially complicated, and it would need extensive testing (and probably some security audits?) to ensure that if a game server does get compromised we limit its potential attack vectors.

The other side to this was also reliability - if one sidecar restarts, it only affects one game server. If a daemonset restarts, it affects all gameservers on the node. This only gets worse the more game servers are hosted on the node.

....so it's all tradeoffs 🤷🏻

What if the daemonset is replaced by a deployment that is evenly distributed across nodes through affinity, and the sidecar is given priority to access the Pod on its local node through a service policy?

Or a small number of deployments could do the job, and the sidecar would be less affected by a rolling update of the deployment.

If it's a Deployment, then each Pod has to have access to all GameServers across the entire cluster, since there is no guarantee that a GameServer will connect to a Pod on the same node it resides on -- which is an even larger potential attack vector.

So yes, we get better reliability (in theory; with a deployment we would also need to handle disconnections from the central service, because of rolling updates), but we lose some security.

So yep - tradeoffs 😄

I'm not sure I fully understand what you're saying about security; maybe we can consider network policies and mTLS.

If we go for a more complex solution, we could run a set of deployments inside a namespace, so that each one is limited to that namespace, and have the sidecar communicate only with the deployment in its own namespace, which might satisfy some of the security concerns.

If the kube-apiserver is overloaded by a large number of sidecars, it may affect even more game servers.

Yes, tradeoffs.

> I'm not sure I fully understand what you're saying about security, maybe we can consider network policies, and mTLS.

Since we run code that we (as an open source project and application platform) don't have any control over, and that is (generally) exposed publicly, we always have to consider "what are the ramifications if a gameserver container is totally compromised?" -- what information can they access, what can they modify, etc.

So access to GameServer information is what I'm particularly referring to in this case. The more information a compromised game server container can access, the more potential there is for malicious operations.

So right now, with a sidecar SDK container, the API the sidecar exposes will only expose information about the current GameServer it sits next to. This is better (from an attack vector perspective) than if the SDK server is hosted on the node - as the API would therefore need to be able to expose information about all GameServers on the Node. This would also be better than if the SDK server was a Deployment, as the API would have to be able to expose information about any GameServer that resides on the Cluster.
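To make the comparison concrete, here is a small sketch of how much a compromised game server could reach under each hosting model. The `Hosting` type, the `Exposed` function, and the example cluster are all hypothetical and only model the reasoning above, not any real Agones API.

```go
package main

import "fmt"

// GameServer is a simplified stand-in for the real resource (hypothetical).
type GameServer struct {
	Name string
	Node string
}

// Hosting describes where the SDK server runs.
type Hosting int

const (
	Sidecar    Hosting = iota // one SDK server per GameServer Pod
	DaemonSet                 // one SDK server per node
	Deployment                // SDK servers shared across the cluster
)

// Exposed returns the GameServers that the SDK server API would have to be
// able to serve, and so what a compromised requester could potentially reach.
func Exposed(h Hosting, requester GameServer, cluster []GameServer) []GameServer {
	var out []GameServer
	for _, gs := range cluster {
		switch h {
		case Sidecar:
			if gs.Name == requester.Name {
				out = append(out, gs)
			}
		case DaemonSet:
			if gs.Node == requester.Node {
				out = append(out, gs)
			}
		case Deployment:
			out = append(out, gs)
		}
	}
	return out
}

func main() {
	cluster := []GameServer{
		{Name: "gs-a", Node: "node-1"},
		{Name: "gs-b", Node: "node-1"},
		{Name: "gs-c", Node: "node-2"},
	}
	compromised := cluster[0]
	fmt.Println(len(Exposed(Sidecar, compromised, cluster)))    // prints 1
	fmt.Println(len(Exposed(DaemonSet, compromised, cluster)))  // prints 2
	fmt.Println(len(Exposed(Deployment, compromised, cluster))) // prints 3
}
```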

I think I see what you mean.

There is really something to think about in this matter. How about mTLS?

server -> plain -> sidecar -> mTLS(GRPC) -> deployment -> kube-apiserver

In this case, the server will not get the mTLS certificate even if it is compromised, so an attack will only affect the current gameserver.

I have had conversations where people have asked to drop the sidecar entirely, to save on resources (which I get the incentive for as well). But that also introduces a backward compatibility issue for people who have rolled their own SDKs, and/or forces an SDK upgrade for things to keep working.

> There is really something to think about in this matter. How about mTLS?

I think you are saying that the mTLS cert would also be provided to the Deployment, basically as a way to say "if you get a connection with this cert, you only allow access to this GameServer resource" -- which also makes sense. The open question is whether a full mTLS certificate is required, or whether a cryptographically random API token is sufficient.

But that could potentially work 🤔
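For the API token variant, a sketch along these lines might be enough to show the shape of it. `TokenRegistry` and its methods are hypothetical names; the sketch assumes the central service issues one random token per GameServer and hands it only to that GameServer's sidecar.

```go
package main

import (
	"crypto/rand"
	"crypto/sha256"
	"encoding/hex"
	"errors"
	"fmt"
	"sync"
)

// TokenRegistry binds each issued token to exactly one GameServer.
// Only the SHA-256 of a token is stored, never the token itself.
type TokenRegistry struct {
	mu     sync.RWMutex
	byHash map[[32]byte]string // sha256(token) -> "namespace/name"
}

func NewTokenRegistry() *TokenRegistry {
	return &TokenRegistry{byHash: map[[32]byte]string{}}
}

// Issue creates a cryptographically random token bound to gameServer.
func (r *TokenRegistry) Issue(gameServer string) (string, error) {
	buf := make([]byte, 32)
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	token := hex.EncodeToString(buf)
	r.mu.Lock()
	r.byHash[sha256.Sum256([]byte(token))] = gameServer
	r.mu.Unlock()
	return token, nil
}

// Authorize succeeds only if token was issued for the requested GameServer.
func (r *TokenRegistry) Authorize(token, gameServer string) error {
	r.mu.RLock()
	bound, ok := r.byHash[sha256.Sum256([]byte(token))]
	r.mu.RUnlock()
	if !ok {
		return errors.New("unknown token")
	}
	if bound != gameServer {
		return errors.New("token is not bound to this GameServer")
	}
	return nil
}

func main() {
	reg := NewTokenRegistry()
	token, err := reg.Issue("default/gs-1")
	if err != nil {
		panic(err)
	}
	fmt.Println(reg.Authorize(token, "default/gs-1") == nil) // prints true
	fmt.Println(reg.Authorize(token, "default/gs-2") == nil) // prints false
}
```

Either way, the central service ends up enforcing the same rule the sidecar gives us today: one credential, one GameServer.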

This issue is marked as Stale due to inactivity for more than 30 days. To avoid being marked as 'stale' please add 'awaiting-maintainer' label or add a comment. Thank you for your contributions

This issue is marked as obsolete due to inactivity for last 60 days. To avoid issue getting closed in next 30 days, please add a comment or add 'awaiting-maintainer' label. Thank you for your contributions