New Fuzzer: MCCE

Thanks for your pull request! It looks like this may be your first contribution to a Google open source project. Before we can look at your pull request, you'll need to sign a Contributor License Agreement (CLA).

Is there a paper associated with this? Why does this modify so many things outside of fuzzers/ ? @Alan32Liu can you please run an experiment with this but not merge it.

Hey Jonathan! Yes, we are hoping to submit the paper to CCS in a few weeks. The modifications outside of fuzzers fall into the following categories:

- speeding up local testing by installing common dependencies in the base image instead of in each container

- our fork of SymCC requires a mandatory static library to be linked in with additional instrumented code. sqlite3 builds some binaries without passing LDFLAGS and LIBS to the compiler, so that was changed. In hindsight, it would have been better to simply integrate that library directly into the compiler wrapper, but with the deadline relatively close I'd prefer to keep it as-is for now.

- helper scripts

I wasn't aware that they were all intended to be merged. Once the deadline has passed, I'm happy to remove and refactor them so they can be merged. Most of the changes outside fuzzers/ should be easy to undo without causing too many issues.
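Folding the library into the compiler wrapper, as mentioned above, could look roughly like the following. This is a minimal Python illustration, not the SymCTS wrapper: the library path RUNTIME_LIB and the helper add_runtime_lib are hypothetical. The idea is that the wrapper itself appends the static library whenever an invocation links, so a build system that drops LDFLAGS or LIBS (as sqlite3 does for some binaries) can no longer leave it out.

```python
# Hypothetical path to the mandatory static runtime library; the real
# library name and location used by the SymCC fork are not given here.
RUNTIME_LIB = "/out/libsymcts_runtime.a"

# Flags that make the compiler stop before the link step.
NO_LINK_FLAGS = {"-c", "-E", "-S", "-M", "-MM", "-fsyntax-only"}


def add_runtime_lib(args, lib=RUNTIME_LIB):
    """Return a copy of the compiler args with `lib` appended on link steps.

    Compile-only, preprocess-only and syntax-check invocations are passed
    through unchanged. Appending at the end keeps the static library after
    the object files, which is where the linker expects it.
    """
    if any(flag in NO_LINK_FLAGS for flag in args):
        return list(args)
    if lib in args:
        return list(args)  # already present, avoid linking it twice
    return list(args) + [lib]


# Compiling an object file: arguments are unchanged.
print(add_runtime_lib(["-c", "sqlite3.c", "-o", "sqlite3.o"]))
# Linking a binary: the runtime library is appended.
print(add_runtime_lib(["shell.o", "sqlite3.o", "-o", "sqlite3"]))
```

In a real wrapper, the returned list would then be handed to the underlying compiler (for example via os.execvp).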

@jonathanmetzman @Alan32Liu Would it be possible to request an experiment of this with no seed corpus? Looking through the service code, there seems to be no way to pass that option through the service requests YAML.

Hi @Lukas-Dresel!

> @jonathanmetzman @Alan32Liu Would it be possible to request an experiment of this with no seed corpus?

Yep, we have an option --no-seeds (or -ns) that conducts all trials without seed corpora.

We can also disable dictionaries via -nd if needed.

I will include it when launching experiments from this PR.

@jonathanmetzman, please comment if they cease to work : )

Meanwhile, would you please make three changes to the code if it is not too much trouble?

- Decide on the experiment configuration. I noticed that symcts_experiment_config.yaml specifies max_total_time: 172800 and trials: 2, while your service/experiment-requests.yaml defines max_total_time: 28800 and trials: 10. Could you please place the intended config in service/experiment-config.yaml? I will use it as your main config to run experiments. Thanks!

  BTW, @jonathanmetzman, would we expect many trials to fail if it takes 2 days (172800 seconds)?

- Make a trivial modification to service/gcbrun_experiment.py. This will allow me to launch experiments in this PR without merging. Here is an example to add a dummy comment.

- Resolve the conflict in this PR. It may block experiments, IIUC.
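To compare the two configurations at a glance, the total fuzzing time per fuzzer/benchmark pair is max_total_time multiplied by trials. A small illustrative calculation (trial_hours is a throwaway helper, not a FuzzBench function):

```python
SECONDS_PER_HOUR = 3600

# The two configurations quoted above (max_total_time is in seconds).
configs = {
    "symcts_experiment_config.yaml": {"max_total_time": 172800, "trials": 2},
    "service/experiment-requests.yaml": {"max_total_time": 28800, "trials": 10},
}


def trial_hours(max_total_time, trials):
    """Total fuzzing time per fuzzer/benchmark pair, in hours."""
    return max_total_time * trials / SECONDS_PER_HOUR


for name, cfg in configs.items():
    hours = cfg["max_total_time"] / SECONDS_PER_HOUR
    total = trial_hours(cfg["max_total_time"], cfg["trials"])
    print(f"{name}: {hours:g} h x {cfg['trials']} trials = {total:g} trial-hours")
```

This prints 48 h x 2 = 96 trial-hours versus 8 h x 10 = 80 trial-hours. The 2-day setup costs somewhat more in total but yields only 2 samples per pair, which gives much less statistical power for comparing fuzzers than 10 trials.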

In addition, just to double-check that I understand you correctly: the experiment is intended to evaluate the code coverage performance of the following fuzzers:

- symcts

- symcts_afl

- symcts_symqemu

- symctf_symqemu-afl

- symsan

- symcc_aflplusplus

- aflplusplus

- afl_companion

on the following benchmarks:

- curl_curl_fuzzer_http

- harfbuzz_hb-shape-fuzzer

- jsoncpp_jsoncpp_fuzzer

- libpng_libpng_read_fuzzer

- stb_stbi_read_fuzzer

- libpcap_fuzz_both

- libxml2_xml

- libxslt_xpath

- re2_fuzzer

- vorbis_decode_fuzzer

- woff2_convert_woff2ttf_fuzzer

- zlib_zlib_uncompress_fuzzer

The experiment name 2023-04-20-symcts-the-first-act is too long (it has 31 characters, and names must be at most 30). I hope you don't mind if I rename it to 2023-04-20-symcts-1st-act?

Thanks!
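The length limit is easy to check before launching. A sketch, assuming the rule is "lowercase letters, digits and hyphens, at most 30 characters"; only the 30-character limit comes from this thread, the character set is an assumption, and is_valid_experiment_name is a hypothetical helper rather than FuzzBench's own check:

```python
import re

# Assumed naming rule: lowercase letters, digits and hyphens, 1-30 characters.
# The 30-character limit is from the discussion; the character set is assumed.
EXPERIMENT_NAME_RE = re.compile(r"[a-z0-9-]{1,30}")


def is_valid_experiment_name(name):
    return EXPERIMENT_NAME_RE.fullmatch(name) is not None


for name in ["2023-04-20-symcts-the-first-act", "2023-04-20-symcts-1st-act"]:
    print(f"{name}: {len(name)} chars, valid={is_valid_experiment_name(name)}")
```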

@Alan32Liu I'm happy to make those modifications. I will note that the reason I specified a lower timeout of 8 hours was that I wanted a first test run to ensure everything is working. I can dial that down to even just one hour to simply get a first assurance that everything works as it does locally.

Ideally, I think a 2-day experiment would be good; however, if it's too much trouble, we can also go with the default of 23 hours.

I'm currently using the --no-seeds and --no-dictionaries options in my local tests; I just didn't see a way to request them through the service-requests.yaml :)

I also wanted to check: how do the no-seeds and no-dictionary options affect the ability to use the non-private experiment results? I'd imagine those were run with seed corpora.

> I can dial that down to even just one hour to simply get a first assurance that everything works as it does locally.

Sure, we can start with that too! If time is tight (I recall the paper is due in a few weeks), I am also happy to skip the one-hour test run (which takes a few hours in total to build and run).

> Ideally, I think a 2-day experiment would be good; however, if it's too much trouble, we can also go with the default of 23 hours.

Let's wait for @jonathanmetzman's confirmation : )

> I'm currently using the --no-seeds and --no-dictionaries options in my local tests; I just didn't see a way to request them through the service-requests.yaml :)

OK, I will use both when I run the experiment here. I am not sure whether they can be specified in the YAML file either.

> I also wanted to check: how do the no-seeds and no-dictionary options affect the ability to use the non-private experiment results? I'd imagine those were run with seed corpora.

IIUC, the results of this experiment will not be merged with the non-private results? @jonathanmetzman Some benchmarks were updated recently, so their old results are not usable anyway. If you'd like to compare your fuzzers with other baseline fuzzers, I can include them in my command when launching the experiment so that all fuzzers run fairly under the same conditions.

A quick reminder for myself on the command line flags to use later when the three modifications above are ready:

--no-seeds

--no-dictionaries

--experiment-config /opt/fuzzbench/service/experiment-config.yaml

--experiment-name 2023-04-20-symcts

--fuzzers symcts symcts_afl symcts_symqemu symcts_symqemu_afl symsan symcc_aflplusplus aflplusplus honggfuzz libfuzzer afl_companion

--benchmarks curl_curl_fuzzer_http harfbuzz_hb-shape-fuzzer jsoncpp_jsoncpp_fuzzer libpng_libpng_read_fuzzer stb_stbi_read_fuzzer libpcap_fuzz_both libxml2_xml libxslt_xpath re2_fuzzer vorbis_decode_fuzzer woff2_convert_woff2ttf_fuzzer zlib_zlib_uncompress_fuzzer
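Since these lists are long and get edited by hand in several places, one option is to generate the /gcbrun comment from the same Python lists each time. A minimal sketch: build_gcbrun_command is a hypothetical helper, while the flags and config path are the ones listed above; shlex.join requires Python 3.8 or later.

```python
import shlex

EXPERIMENT_CONFIG = "/opt/fuzzbench/service/experiment-config.yaml"


def build_gcbrun_command(experiment_name, fuzzers, benchmarks,
                         no_seeds=True, no_dictionaries=True):
    """Assemble a `/gcbrun run_experiment.py ...` comment from Python lists."""
    args = ["run_experiment.py"]
    if no_seeds:
        args.append("--no-seeds")
    if no_dictionaries:
        args.append("--no-dictionaries")
    args += ["--experiment-config", EXPERIMENT_CONFIG,
             "--experiment-name", experiment_name,
             "--fuzzers", *fuzzers,
             "--benchmarks", *benchmarks]
    # shlex.join quotes any argument containing shell-special characters.
    return "/gcbrun " + shlex.join(args)


cmd = build_gcbrun_command(
    "2023-04-20-symcts",
    fuzzers=["symcts", "symcts_afl", "aflplusplus"],
    benchmarks=["libpng_libpng_read_fuzzer", "zlib_zlib_uncompress_fuzzer"],
)
print(cmd)
```

Generating the comment from a single source list makes it harder to silently drop a fuzzer or benchmark while copying it by hand.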

Btw, I noticed a typo with underscores in my fuzzer names in the request, and I'm making sure to get an up-to-date list of buildable targets. Please make sure to update the command with the list from experiment-requests.yaml :)
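A typo like this can be caught by comparing the requested names against the directory names under fuzzers/. A minimal sketch using the standard library's difflib to suggest the closest valid name; the available list below is hypothetical and in practice might come from os.listdir("fuzzers"):

```python
import difflib


def check_fuzzer_names(requested, available):
    """Return {bad_name: [closest_match]} for names not in `available`."""
    problems = {}
    for name in requested:
        if name not in available:
            problems[name] = difflib.get_close_matches(name, available, n=1)
    return problems


# Hypothetical set of fuzzer directory names under fuzzers/.
available = ["symcts", "symcts_afl", "symcts_symqemu", "symcts_symqemu_afl",
             "symsan", "symcc_aflplusplus", "aflplusplus"]
requested = ["symcts", "symcts_symqemu-afl", "aflplusplus"]
print(check_fuzzer_names(requested, available))
```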

Will I be able to see the in-progress report data for the running experiment? If so, we can start with the 2-day run, and if I notice anything obviously wrong I can let you know.

Similarly, I'd like to perform some other analysis on the resulting corpora for the paper. Is there a way I could get access to the experiment-data after the fact as well?

After fixing the Symsan integration with Ju Chen's help, I've rebuilt the targets. With the exception of mbedtls, they all build, so the final target list should be:

- bloaty_fuzz_target

- curl_curl_fuzzer_http

- freetype2_ftfuzzer

- harfbuzz_hb-shape-fuzzer

- jsoncpp_jsoncpp_fuzzer

- lcms_cms_transform_fuzzer

- libjpeg-turbo_libjpeg_turbo_fuzzer

- libpcap_fuzz_both

- libpng_libpng_read_fuzzer

- libxml2_xml

- libxslt_xpath

- openh264_decoder_fuzzer

- openssl_x509

- openthread_ot-ip6-send-fuzzer

- proj4_proj_crs_to_crs_fuzzer

- re2_fuzzer

- sqlite3_ossfuzz

- stb_stbi_read_fuzzer

- systemd_fuzz-link-parser

- vorbis_decode_fuzzer

- woff2_convert_woff2ttf_fuzzer

- zlib_zlib_uncompress_fuzzer

Fuzzers I've verified to build correctly so far:

- [x] symcts

- [x] symcts_afl

- [ ] symcts_symqemu

- [ ] symcts_symqemu_afl

- [x] symsan

- [ ] symcc_aflplusplus

- [ ] aflplusplus

- [x] honggfuzz

- [x] libfuzzer

- [x] centipede

@Alan32Liu I think I've addressed all three points mentioned above; please refer to my previous comment or the updated experiment-requests.yaml for the targets and benchmark list. Thanks! :)

@Alan32Liu @jonathanmetzman Now that everything is finalized, it would be great if you could start a CI run to make sure it works on your end too!

> Btw, I noticed a typo with underscores in my fuzzer names in the request, and I'm making sure to get an up-to-date list of buildable targets. Please make sure to update the command with the list from experiment-requests.yaml :)

Yep, I noticed that change on symcts_symqemu_afl yesterday and updated my note accordingly : )

> Will I be able to see the in-progress report data for the running experiment? If so, we can start with the 2-day run, and if I notice anything obviously wrong I can let you know. Similarly, I'd like to perform some other analysis on the resulting corpora for the paper. Is there a way I could get access to the experiment-data after the fact as well?

Yep, I can post the links to experiment data and report here, once they are created.

/gcbrun run_experiment.py --no-seeds --no-dictionaries --experiment-config /opt/fuzzbench/service/experiment-config.yaml --experiment-name 2023-04-20-symcts --fuzzers symcts symcts_afl symcts_symqemu symcts_symqemu_afl symsan symcc_aflplusplus aflplusplus honggfuzz libfuzzer centipede --benchmarks bloaty_fuzz_target curl_curl_fuzzer_http freetype2_ftfuzzer harfbuzz_hb-shape-fuzzer jsoncpp_jsoncpp_fuzzer lcms_cms_transform_fuzzer libjpeg-turbo_libjpeg_turbo_fuzzer libpcap_fuzz_both libpng_libpng_read_fuzzer libxml2_xml libxslt_xpath openh264_decoder_fuzzer openssl_x509 openthread_ot-ip6-send-fuzzer proj4_proj_crs_to_crs_fuzzer re2_fuzzer sqlite3_ossfuzz stb_stbi_read_fuzzer systemd_fuzz-link-parser vorbis_decode_fuzzer woff2_convert_woff2ttf_fuzzer

/gcbrun run_experiment.py --no-seeds --no-dictionaries --experiment-config /opt/fuzzbench/service/experiment-config.yaml --experiment-name 2023-04-20-symcts --fuzzers symcts symcts_afl symcts_symqemu symcts_symqemu_afl symsan symcc_aflplusplus aflplusplus honggfuzz libfuzzer centipede --benchmarks bloaty_fuzz_target curl_curl_fuzzer_http freetype2_ftfuzzer harfbuzz_hb-shape-fuzzer jsoncpp_jsoncpp_fuzzer lcms_cms_transform_fuzzer libjpeg-turbo_libjpeg_turbo_fuzzer libpcap_fuzz_both libpng_libpng_read_fuzzer libxml2_xml libxslt_xpath openh264_decoder_fuzzer openssl_x509 openthread_ot-ip6-send-fuzzer proj4_proj_crs_to_crs_fuzzer re2_fuzzer sqlite3_ossfuzz stb_stbi_read_fuzzer systemd_fuzz-link-parser vorbis_decode_fuzzer woff2_convert_woff2ttf_fuzzer -a

Everything seems to be fine now, but I will come back after a while to double-check and update the report directory link.

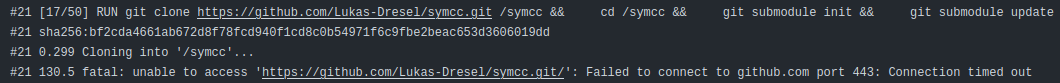

@Alan32Liu I think the CI run is having some networking issues?

Awesome, thanks!

> @Alan32Liu I think the CI run is having some networking issues?

That should be fine: those CI runs are separate from our experiments.

Our experiment's build logs look OK.

Report link updated above : )

> We can also disable dictionaries via -nd if needed.

FYI, afl++ creates its own dictionaries while building the target (the dict2file feature), so if that is not wanted in an experiment, an aflplusplus variant should be created with that build keyword removed.
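To illustrate what removing that keyword changes, here is a hypothetical Python sketch; it is not FuzzBench's aflplusplus fuzzer.py. Only the keyword dict2file comes from the comment above; DEFAULT_BUILD_MODES, the dictionary file name and both helpers are assumptions. The one real interface used is AFL++'s AFL_LLVM_DICT2FILE environment variable, which makes the LLVM instrumentation write constants from string comparisons into a dictionary file at compile time.

```python
# Hypothetical list of build keywords for an AFL++ integration; only
# "dict2file" is taken from the discussion, the others are placeholders.
DEFAULT_BUILD_MODES = ["tracepc", "cmplog", "dict2file"]


def build_env(build_modes, build_dir):
    """Return extra environment variables for compiling with AFL++."""
    env = {}
    if "dict2file" in build_modes:
        # AFL_LLVM_DICT2FILE asks the AFL++ LLVM pass to write constants from
        # string comparisons found at compile time to this (absolute) path.
        env["AFL_LLVM_DICT2FILE"] = f"{build_dir}/afl++.dict"
    return env


def modes_without_dict2file(modes=DEFAULT_BUILD_MODES):
    """Build modes for a hypothetical aflplusplus variant without dict2file."""
    return [mode for mode in modes if mode != "dict2file"]


print(build_env(DEFAULT_BUILD_MODES, "/out"))
print(build_env(modes_without_dict2file(), "/out"))
```

If such a variant's fuzz step also passes a dictionary to afl-fuzz via its -x option, that would need to be dropped too for a true no-dictionary run.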

@Alan32Liu Do you know why we would have so few samples sometimes? Quite a few of the plots say "at least 2 trials per fuzzer" or some low number like that. Looking at the tables for them, it's usually one or two fuzzers that have almost no samples.

Is it concerning that the libxml example seems to be frozen at the 8h45m mark when the rest of the experiment is two hours ahead? Also, some benchmark plots seem to have disappeared completely; see openh264_decoder_fuzzer.

Lastly, how come there are cases where the coverage metric goes down over time more permanently? I've gotten used to it spuriously going down as fuzzer instances drop and reappear; however, e.g., symsan in the openthread example seems to have lost some better-performing instances for longer periods of time.

@vanhauser-thc could that be the reason why aflplusplus is the only one (other than centipede) making progress on sqlite3?

> @Alan32Liu Do you know why we would have so few samples sometimes?

Generally, there are two reasons:

- Fuzzer crashed. If a fuzzer crashes during a trial (say, after 10 hours), the report will only record its data in the first 10 hours and won't plot the rest. Hence those data are 'missing'. Fuzzer logs are available in the experiment folders in the experiment data directory.

- Virtual machine preempted. Each experiment is conducted in a preemptible VM in the cloud, meaning Google Cloud may reclaim and reallocate those VMs to other tasks. When that happens, the data will appear 'missing' until the experiment trial automatically restarts and the new data are ready.
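To see which fuzzers are affected at a given time, one could count how many trials actually report data at each snapshot. A sketch in Python, assuming the report data has been flattened into (fuzzer, trial_id, time_s, edges) tuples; that layout and both helpers are hypothetical, not FuzzBench's schema:

```python
from collections import defaultdict


def trials_per_snapshot(rows):
    """Count distinct trials with data at each (fuzzer, time) snapshot.

    `rows` holds (fuzzer, trial_id, time_s, edges) tuples: a hypothetical,
    flattened view of the report data.
    """
    seen = defaultdict(set)
    for fuzzer, trial_id, time_s, _edges in rows:
        seen[(fuzzer, time_s)].add(trial_id)
    return {key: len(ids) for key, ids in seen.items()}


def sparse_snapshots(counts, expected):
    """Snapshots where fewer than `expected` trials reported data."""
    return sorted(key for key, n in counts.items() if n < expected)


# Made-up data: trial 3 stops reporting after 10 hours (36000 s),
# e.g. because the fuzzer crashed or its VM was preempted.
rows = [
    ("symsan", 1, 36000, 100), ("symsan", 2, 36000, 110), ("symsan", 3, 36000, 120),
    ("symsan", 1, 54000, 130), ("symsan", 2, 54000, 140),
]
counts = trials_per_snapshot(rows)
print(counts)
print(sparse_snapshots(counts, expected=3))
```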

> Is it concerning that the libxml example seems to be frozen at the 8h45m mark when the rest of the experiment is two hours ahead? Also, some benchmark plots seem to have disappeared completely; see openh264_decoder_fuzzer.

The libxml2 plot is up to 18h30m now, so I reckon it's not frozen. I noticed that symcts_afl only has 4 trials, so I randomly picked 5 of its fuzzer logs (from the link above), and they all seem OK so far. BTW, I noticed that its logs tend to be huge (over 100 MB); is that intended?

Sometimes a plot may appear frozen, particularly when there are many benchmarks or inputs to execute and measure, because measurement is currently a bottleneck. We are working toward optimizing that.

I am unsure why symcts_symqemu_afl is missing on openh264 at the moment.

I looked at two of its fuzzer logs and at the gcloud logs but did not notice any failures.

Unfortunately, the cloud log is not publicly available yet. That's another improvement we are working on.

The result data seem to include some coverage information for it. Shall we leave it running for a while and come back to it later?

> Lastly, how come there are cases where the coverage metric goes down over time more permanently?

Yeah, this can have the same cause as the missing data: when the data of a well-performing instance are lost, the median/mean values drop.
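A small numeric illustration of this effect, using made-up coverage numbers for five trials at a single snapshot and Python's statistics module:

```python
from statistics import mean, median

# Made-up edge coverage of five trials at one snapshot time.
coverage = {1: 900, 2: 950, 3: 1000, 4: 1400, 5: 1500}
print(median(coverage.values()), mean(coverage.values()))  # 1000 1150

# Suppose the two best trials have no data at this time (crashed or
# preempted): the statistics are computed over the remaining trials only.
missing = {4, 5}
remaining = [edges for trial, edges in coverage.items() if trial not in missing]
print(median(remaining), mean(remaining))  # 950 950
```

No trial lost coverage here, yet both the median and the mean drop, simply because the best-performing trials are absent from that snapshot.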

@Alan32Liu did you restart them?? The start has fully reset from scratch.

> @Alan32Liu did you restart them?? The start has fully reset from scratch.

I did not. Strange, I don't think this has ever happened before.

If you check the corpora in the data directory, they are still from yesterday, so I don't think anyone restarted the experiment. It's likely re-running the measurement for some reason. @jonathanmetzman, have you seen a similar case in the past where FB re-ran the measurement or re-generated the report?