[ALBERT]: In run_squad_sp, convert_examples_to_features raises an error when no SentencePiece model is provided.

I am trying to run the ALBERT model on the SQuAD dataset. If no SentencePiece (SP) model is supplied, convert_examples_to_features fails. Where can I find the SP model?

Download the model from TensorFlow Hub. The downloaded module has an assets folder that contains a .vocab file and a .model file. The .model file is the SentencePiece (SPM) model.

With no SPM model:

    vocab_file = '/albert_base/assets/30k-clean.vocab'
    spm_model_file = None
    tokenizer = tokenization.FullTokenizer(
        vocab_file=vocab_file, do_lower_case=True,
        spm_model_file=spm_model_file)
    text_a = "Hello how are you"
    tokens_a = tokenizer.tokenize(text_a)

Output:

    ['hello', 'how', 'are', 'you']

With SPM model:

    vocab_file = '/albert_base/assets/30k-clean.vocab'
    spm_model_file = '/albert_base/assets/30k-clean.model'
    tokenizer = tokenization.FullTokenizer(
        vocab_file=vocab_file, do_lower_case=True,
        spm_model_file=spm_model_file)
    text_a = "Hello how are you"
    tokens_a = tokenizer.tokenize(text_a)

Output:

    ['▁', 'H', 'ello', '▁how', '▁are', '▁you']
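
To locate the two files inside a downloaded module, a small lookup along these lines may help. This is only a sketch: `find_spm_assets` is a hypothetical helper, not part of the ALBERT code, and it assumes the module directory contains an `assets` folder with exactly one `.vocab` file and one `.model` file, as described above.

```python
from pathlib import Path


def find_spm_assets(module_dir):
    """Return (vocab_file, spm_model_file) found under <module_dir>/assets.

    Hypothetical helper: assumes exactly one .vocab and one .model file exist.
    """
    assets = Path(module_dir) / "assets"
    vocab_files = sorted(assets.glob("*.vocab"))
    model_files = sorted(assets.glob("*.model"))
    if len(vocab_files) != 1 or len(model_files) != 1:
        raise FileNotFoundError(
            "expected exactly one .vocab and one .model file in %s" % assets)
    return str(vocab_files[0]), str(model_files[0])
```

The two returned paths can then be passed as vocab_file and spm_model_file to tokenization.FullTokenizer, as in the examples above.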

I had a similar issue. My workaround was to use convert_examples_to_features from XLNet's run_squad.py together with prepare_utils, and to make the necessary changes. This let me bypass the problem.

Thanks @s4sarath and @np-2019, I am now able to process the data with 30k-clean.model. I also incorporated convert_examples_to_features from XLNet, with other changes. I am not bypassing the SP model.

The trained model is uncased, so `create_tokenizer_from_hub_module()` returns `do_lower_case` as True.

However, in `class FullTokenizer`, when `spm_model_file` is not None, the current code ignores the value of `do_lower_case`. To fix this, first add the line `self.do_lower_case = do_lower_case` to the constructor of `FullTokenizer`. Then, in `def tokenize(self, text)`, lowercase the text when the SentencePiece model is in use, i.e.

    if self.sp_model:
        if self.do_lower_case:
            text = text.lower()

Hope this works.
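
The effect of this fix can be illustrated in isolation with a minimal sketch. It is not the real `FullTokenizer`: the class name `LowercasingSpmTokenizer` and the toy `fake_encode_pieces` function are assumptions made for illustration, and the toy function only mimics the "▁" word-boundary marker rather than running an actual SentencePiece model.

```python
class LowercasingSpmTokenizer:
    """Illustrative stand-in for the SentencePiece branch of FullTokenizer.

    `encode_pieces` is an assumed callable (str -> list of pieces); in the
    real code the loaded SentencePiece model would play this role.
    """

    def __init__(self, encode_pieces, do_lower_case=True):
        self.encode_pieces = encode_pieces
        self.do_lower_case = do_lower_case  # the attribute the fix stores

    def tokenize(self, text):
        if self.do_lower_case:
            text = text.lower()  # lowercase before the text is split into pieces
        return self.encode_pieces(text)


def fake_encode_pieces(text):
    # Toy whitespace splitter that prefixes each word with "▁".
    # It is NOT a real SentencePiece model.
    return ["▁" + word for word in text.split()]


tokenizer = LowercasingSpmTokenizer(fake_encode_pieces, do_lower_case=True)
print(tokenizer.tokenize("Hello how are you"))
# prints ['▁hello', '▁how', '▁are', '▁you']
```

With `do_lower_case=False` the same call keeps the capital "H", which is the situation that produces the split 'H', 'ello' pieces with an uncased vocabulary.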

@np-2019 - It is better not to use XLNet preprocessing. Things are a bit different here. The provided code runs without any error. If you are familiar with BERT preprocessing, it is very close, except for the use of the SentencePiece model.

Thanks @wxp16 it helped.

Sharing what I learned: using XLNet preprocessing will not help, because the sequence of tokens differs between XLNet and ALBERT. SQuAD 2.0 will be preprocessed, but training will not converge. It is better to make selective changes in the ALBERT code only.

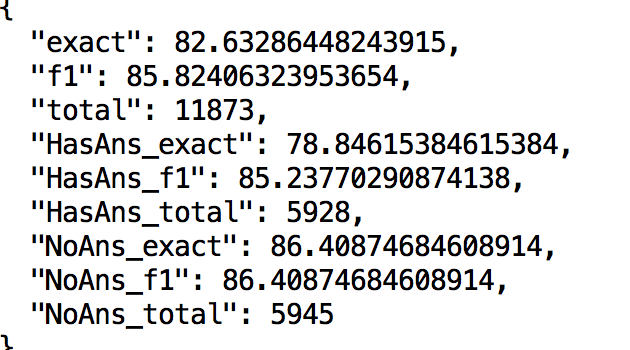

FYI, @Rachnas and @s4sarath , using Xlnet preprocessing I could achieve following results on squad-2.0

@np-2019 - Those are pretty good results. Which ALBERT model (large or xlarge) and version (v1 or v2) did you use?

@np-2019, it's very nice that you were able to reproduce the results successfully.

According to the XLNet paper, section 2.5: "We only reuse the memory that belongs to the same context. Specifically, the input to our model is similar to BERT: [A, SEP, B, SEP, CLS]."

According to the ALBERT paper, section 4.1: "We format our inputs as “[CLS] x1 [SEP] x2 [SEP]”."

As we can see, the CLS token sits in a different position in the two formats. Won't it cause problems if we format the data according to XLNet?
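
The difference between the two layouts quoted above can be made concrete with a short sketch. The function names are hypothetical, and the "[CLS]" and "[SEP]" strings simply follow the notation of the quoted passages; the actual special-token strings and segment IDs used by each implementation may differ.

```python
def albert_style_input(tokens_a, tokens_b):
    # Layout quoted from the ALBERT paper: [CLS] x1 [SEP] x2 [SEP]
    return ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]


def xlnet_style_input(tokens_a, tokens_b):
    # Layout quoted from the XLNet paper: A, SEP, B, SEP, CLS
    return tokens_a + ["[SEP]"] + tokens_b + ["[SEP]", "[CLS]"]


question = ["▁who", "▁wrote", "▁it"]
context = ["▁she", "▁wrote", "▁it"]

albert_tokens = albert_style_input(question, context)
xlnet_tokens = xlnet_style_input(question, context)

# CLS is the first token in one layout and the last token in the other.
print(albert_tokens.index("[CLS]"))  # prints 0
print(xlnet_tokens.index("[CLS]"))   # prints 8
```

Any code that reads the CLS position (for example, an answerability classifier for SQuAD 2.0) would need to use the index that matches the layout the data was formatted with.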