mujoco

mujoco copied to clipboard

mujoco copied to clipboard

Python bindings for simulate

This PR is to get initial feedback about my Python bindings for simulate in order to determine if they are worth polishing until they are mergable. Right now they are at a "look it works" level of quality. I have been using the bindings for a few months on Linux, however some careful consideration of how glfw linkage should work is needed before they will work on Windows or Mac.

Here are some questions:

- Is the approach taken to write the bindings acceptable and do they fit into the future plans for MuJoCo/Simulate? They are most similar to the

rolloutexample in the python package and so are only loosely integrated with the rest of the MuJoCo Python bindings. - If so, would it be good to add the examples for simulate shown below to this PR?

If the answer to the first question is no, then we can close this and I will instead make a PR to add a CMake option that installs the mjsimulate library as discussed in #201. Then these bindings can be easily maintained in a fork.

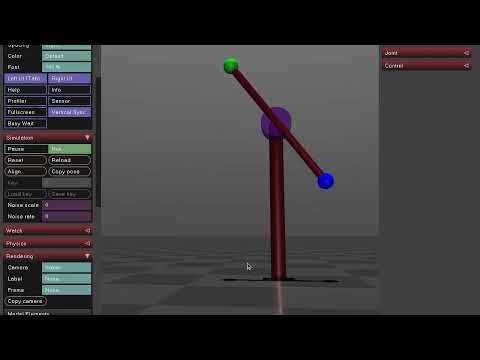

Here are two examples of what the bindings make easy:

Thanks for doing this! We're just starting to look at your pybind11 code and will have more detailed comments in due course. I do have a few comments after a cursory glance at your code, though.

- The API exposed by the bindings aren't super Pythonic. I expect that we're going to need to iterate a few times on it.

- I don't like the fact that the Python bindings now depend on GLFW. I'm thinking about abstracting away GLFW-specific logic into a mini library and have simulate talk to that abstraction layer. This is partly to do with our own technical requirements at DeepMind as well, where we think that having an SDL2 implementation of Simulate would be useful, and obviously we don't want to end up with two separate copies of simulate floating about.

I agree on both points.

Linking to GLFW from the bindings isn't desirable to begin with, however it gets even worse when you consider that the Python glfw package brings in yet another build of GLFW as a shared library and so there are then two copies of GFLW interacting runtime. Danger zone!

I like the idea of abstracting GLFW so it can be swapped with SDL, that would fix both problems.

Another way, which I think is compatible with the abstraction layer idea, requires two steps:

- Move the physics loop out of

main.cppand into eitherSimulate.cppor a new file. Bindings for the physics loop could then replace the physics loop that is currently re-implemented insimulate.py(code duplication). Then Python side should no longer need the Pythonglfwpackage. - To make the Python bindings stop needing to link to "glfw" the

Simulate.hheader needs to drop the dependency onglfw3.hwhich can by done by replacingSimulate'sGLFWvidmode vmodemember with something akin tovoid* vmode(unfortunately IIRCGLFWvidmode* vmodewill not work because of how GLFW declares it's types, I believe I tried that already). Then MuJoCo's Python bindings can just statically link tomjsimulateandmujocoand all should be well.

Do those sound like good options? If a windowing abstraction layer appears later, I think it should be easy to switch over and the Python bindings won't be impacted.

Hi,

I have updated the bindings so that the Python bindings no longer link to glfw and the C++ physics loop is reused. The bindings are very different from the first version and several tricky things were done to make this work, so some more at-a-glance feedback would be helpful.

Instead of needing bindings for numerous members of the Simulate class, just one member is bound for the render loop. A few methods are moved from main.cc to Simulate.c/h so that they can be reused in Python, these methods are bound.

In order to create MjModel and MjData objects that are wrapped by MjModelWrapper and MjDataWrapper instances a system of 3 callbacks is used by the C++ physics loops to call Python code that creates the objects. This has to be done in Python because _simulate cannot be linked with _structs and so the constructors and static methods are unavailable to _simulate. Wrapped MjModel and MjData objects are needed so that MjWrapperLookup succeeds when calling user registered callbacks.

Setting up callbacks from C++ to Python outside of the _callbacks module required moving about 50% of callbacks.cc to a new header callbacks.h.

There is one methodological hurdle still. Python creates the MjModelWrapper and MjDataWrapper objects and returns just the pointer to C++. Thus, Python wants to immediately destroy these objects. Currently I've worked around this by storing global reference to the current MjModelWrapper/MjDataWrapper objects, but there has to be a better way.

I've only tested on Ubuntu still, but I will work through any issues with Mac/Windows soon.

I've literally just pushed out 3240596c3ab17f7bcb47c3caa0d273a8623ccc48 to help you manage GLFW dependency 😅

Haven't had a chance to look into your latest round of code changes yet, but my main concern is to make sure that the simulate Python bindings work correctly with import glfw, and any other Python code that maybe depending on GLFW via that route. The commit above modifies Simulate to call GLFW functions through a dispatch table that is accessed via the mujoco::Glfw() function in C++. By default the dispatch table resolves GLFW function pointers statically at build time, and expects you to link the binary against GLFW as we do now. However, if you set the mjGLFW_DYNAMIC_SYMBOLS macro then the symbol lookup is performed the first time mujoco::Glfw() is called, and therefore you no longer need to link the binary against GLFW.

My idea was to ask you to do import glfw via pybind11 code, then grab the integer glfw._glfw.handle, then reinterpret_cast it to void* and pass it to mujoco::Glfw. After that point libsimulate should just automagically resolve GLFW functions through the same GLFW library as the Python package. Does that make sense?

What timing! I think it all works out in the end.

The latest bindings do not need to import glfw nor know anything about glfw (they don't even need the headers). Everything is hidden by privately, statically linking glfw to libmjsimulate and using the C++ PhysicsThread instead of a Python rewrite. GLFW's Init and Terminate functions needed to be wrapped, and there are some interesting C++ to Python callbacks, but it all worked out.

If a future user wanted to import glfw while using the Simulate Python bindings (which seems like a reasonable thing to do, especially if they want to launch more windows of something) they will need the dispatch table. I will rebase soon and try what you suggested.

Incidentally, I think that suggests that Simulate's render loop should be modified to be a render function that can be called from a super loop responsible for multiple glfw windows (since all glfw calls need to be on the main thread in MacOS).

I think leaving open the possibility of Python users ending up with two copies of GLFW is potentially asking for trouble. If we make Python simulate bindings depend on PyGLFW, and only have PyGLFW bring in GLFW library that would be the safest solution. WDYT?

I agree. I did not mean to imply that the dispatch table wasn't needed, it definitely is for the reason you said. I just mean that the 2nd version of bindings is no longer using two copies of GLFW like the 1st version did.

Rebasing to get the new dispatch table and then going through the process with glfw._glfw.handle and mujoco::Glfw will ensure the Python simulate bindings depend on PyGLFW right? (not sure if I missed something)

Yes, I think we're on the same page :)

BTW if you don't agree with my idea please do let me know!

Ok the dynamic dispatch table works, the latest commit is sets the handle and mixes Python and C++ glfw calls. Very cool! :)

However, I had to remove the dlclose call in dynamic_dispatch.cc to get it to work, else there was an immediate segfault once a GLFW function was called from Python or C++. I think the dlclose was actually unloading the shared library being used everywhere.

There is an issue, and I think it might be related to running dlopen over and over. the physics loop in the Python version is now running as fast as possible (which is apparent because gravity is obviously too strong). The Physics thread measures time using glfw, and the only difference is glfw calls are going through the dispatch table, so I think it likely has something with the dispatch table. Any ideas?

Still need to clean up the build system (right now I hardcoded dynamic dispatch to be on for now), get this to work on other systems, and fix some memory management bugs/bad practices.

Hmm, where is the repeated dlopening?

I only see it in the initialiser?

Could it be that the glfw timers are stateful and their state is reset at dlopen?

Sorry just found the issue, it's something very basic, as usual :). The Glfw() prefixes were missing in the physics loop. In this PR I move code from main.cc to simulate.cc and so the Glfw() prefixes didn't get copied over while rebasing.

Also yes dlopen is called once, I misunderstood the code slightly. But when I thought it was being called over and over I suspected the timers were being reset like you said.

Should libsimulate remain a static library?

Previous versions of this PR built libsimulate as shared so that GLFW/lodepng symbols were present in liblibsimulate.so which was necessary because CMake won't copy a static libraries dependencies into the final .a file. However, it looks like this approach could be used add the symbols manually. The latest commit switches back to static, but comments out lodepng calls, GLFW symbols are no longer needed because of the dispatch table.

Additionally, mjGLFW_DYNAMIC_SYMBOLS needs to be off when building libsimulate for C++ simulate and on when building for libsimulate for Python simulate. To resolve this two versions of the library could be built or the dispatch mechanism could be adjusted to allow selecting statically linked glfw or dynamically linked glfw at runtime (this would require more symbols to be copied into the final .a).

Also, to build the python bindings the library has to be installed to the binary release. It feels like installing a shared library is "nicer" than installing a static one.

I don't have a strong opinion either way (and I have very little experience with these things), it just seems like a shared library is easier than combining a bunch of static libraries with ar in custom CMake targets and building two versions of libsimulate.

My thinking is:

- For our binary releases on GitHub, we don't have libsimulate at all. Instead we continue to ship simulate as a binary, with libsimulate (and also GLFW) statically linked in, exactly as we do now.

- For the Python bindings (specifically, bdist wheels), we ship libsimulate.so along with libmujoco.so. (We should statically link lodepng into libsimulate.so).

Would that work?

That will work! Thanks for the quick response.

Hi,

This should be ready for a detailed review.

I've been able to test Ubuntu and MacOS but not Windows. For some reason Windows is taking excessively long to compile the Python module (I think it might take several hours on my machine). Is this a known issue? I noticed that the Python bindings are not built for Windows in the CI either. Either way, it would be great to get to the bottom of this because I'm not sure I got the dynamic library names in setup.py right.

On MacOS the main.py (drop in for C++ simulate main.cc) and double_pendulum.py example work. The fixation.py example does not work, it segfaults when opening a GLContext in the load_callback. However, I believe that limitation not related to this PR and more to do with the particulars of multithreaded rendering on MacOS.

Yes, MSVC seems to have trouble compiling a couple of files in the python/ package. If you're able to set up LLVM/Clang for Windows, that'll build the bindings for you just fine (it's what we use to build our Windows releases).

I've tested on Windows. main.py, double_pendulum.py, and fixation.py all work.

Could you please rebase to HEAD?

No problem! It is rebased now.

Hi, sorry, just pushed a small change to simulate.cc, could you rebase again? Cheers

Re-rebasing, nevermind, all sorted.

I can confirm that double_pendulum.py works on windows, python 3.10.0

After offline discussion the decision was made to reimplement the physics loop in Python as in the first commit of this PR instead of reusing the C++ version of the physics loop as in the subsequent commits. The reasoning was that the callbacks module was too difficult to use across translation units and that having the physics loop in Python would allow for more powerful features in the future without introducing too much code duplication.

Those commits that re-used the C++ physics loop have been force pushed away, and all that is left is the fixes to glfw usage, build system, and updating the Python physics loop to use the new timing scheme introduced on main since the beginning of this PR.

I will go through this a few more times to check for small issues. There were comments before that the interface needs to be more Pythonic. I'm happy to make any requested changes towards that. I did not consider changing any part of the interface when going from C++ to Python, I just bound the various methods in the most straightforward way.

Sorry for the delay in getting this merged. I've made some modifications to the API and added REPL functionality. Also removed some stuff that I don't see being used in this PR. If I removed anything that you are depending on externally please send a followup PR. Thanks again for your help on this!

Fantastic! Glad we were able to get this through. REPL functionality looks very interesting.

It looks like the on control callback registering/deregistering was what was removed (which was required for the double pendulum and fixation examples to work properly when the reload button was used). I'm not sure I was doing that in the best way anyway. I'll circle back soon.

Hi, I have an error when using the double-pendulum.py. See the below message, I have mujoco 2.3.7 installed with pip.

mjParseXML: could not open file './double_pendulum.xml'

Try using the full path instead like, /a/b/c/d/double_pendulum.xml. There is a bug with relative paths on MacOS, see #745 .

Is built-in 'simulate' viewer working only for Python? Can I use it with C?

Thanks @aftersomemath the double pendulum is working now with Python.

You can use it with C++. The main.cc which is compiled into the bin/simulate executable in the binary distribution could be used as a starting point.