emergent_in_context_learning

Question about your work

Hi! "Data Distributional Properties Drive Emergent In-Context Learning in Transformers" is very interesting work, and I am trying to reproduce the results in your paper. However, when I ran the code from the README with the default config, namely images_all_exemplars.py, I got some unexpected results, detailed in https://github.com/deepmind/emergent_in_context_learning/issues/2#issue-1452546848.

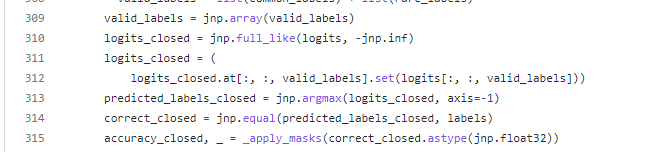

Besides, I think there may be a mistake in the repo's evaluation of in-context learning. The evaluation just sets the logits of every class other than 0 and 1 to -inf. However, a large value of logits[:,:,0] doesn't mean that the query is similar to the class labeled 0 in the context; it only means that the query is more similar to class 0 in the training set. Is that right? Maybe this bug is what leads to the wrong results I mentioned above.
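To make the concern concrete, here is a minimal sketch of the masking step as I understand it. It is not copied from the repo: the function names, the number of classes, and the logit values are all made up for illustration, and it handles a single query position rather than the full logits[:,:,k] tensor.

```python
import math


def mask_to_fewshot_labels(logits, num_fewshot_labels=2):
    """Copy `logits`, setting every class index >= num_fewshot_labels to -inf.

    Hypothetical helper: it mimics the masking described above and is not
    the repo's actual implementation.
    """
    return [
        value if index < num_fewshot_labels else -math.inf
        for index, value in enumerate(logits)
    ]


def predict(logits):
    """Return the index of the largest logit (argmax)."""
    return max(range(len(logits)), key=lambda index: logits[index])


# Made-up logits at the query position over 5 output classes.
query_logits = [1.2, 0.3, 4.0, -0.5, 2.1]

# Without masking, the argmax is taken over all output classes.
unmasked_prediction = predict(query_logits)

# With masking, only output indices 0 and 1 compete, so the prediction
# depends solely on which of those two logits is larger.
masked_prediction = predict(mask_to_fewshot_labels(query_logits))
```

In this toy case the masked prediction is decided only by comparing the logits at output indices 0 and 1. Whether those two indices correspond to the labels shown in the context, or to classes 0 and 1 from training, is exactly what I am asking about.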