Allow depth-writing shaders to work with shadow maps

Solution to Proposal-1942

- Allowed objects with a DEPTH setting fragment shader to receive shadows and cast shadow on themselves.

- The change allows fragment shaders to modify the VERTEX variable, so they can be update it consistently to DEPTH change.

- There is still a FIXME comment in the code change. I need a hint there how to add a shader compilation time condition.

Tested only on Windows with the Vulkan renderer. Please see the test results in my comment on the proposal above.

You can add the conditional by adding a usage_define to the shader, here is how it is added for NORMAL in the clustered renderer:

https://github.com/godotengine/godot/blob/b6d102c7c2d5e9f39f368bf1ebc91a48bf762c42/servers/rendering/renderer_rd/forward_clustered/scene_shader_forward_clustered.cpp#L717

You would just have to add a similar line for the Vulkan Mobile renderer and the GLES3 renderer as well.

@clayjohn

vertex.z = in_view_space(-gl_FragDepth);

It would indeed solve the problem in a compatible way. But performance and precision may not be optimal. Such a fragment shader would likely have the vertex.z already available, so calculating it again from gl_FragDepth is not optimal.

Relevant part of such a user fragment shader:

vec3 vertex;

// Comes up with view space vertex position based on the carved out geometry (changes only the depth)

vec4 ndc = PROJECTION_MATRIX * vec4(vertex, 1.0);

DEPTH = ndc.z / ndc.w;

We could introduce a new out float LINEAR_DEPTH variable to allow overriding the Z component of the vertex and also to offload the projected (nonlinear) DEPTH calculation to Godot's shader code. Then the user fragment shader could be simplified to just setting LINEAR_DEPTH and not having to deal with DEPTH at all.

- If the user fragment shader sets

DEPTH, we override thevertex.zin Godot's shader (your suggestion) for compatibility. - If the user fragment shader sets

LINEAR_DEPTH, thenvertex.zis overridden andDEPTHis calculated by Godot's shader. - If both are set, then

LINEAR_DEPTHtakes precedence.

We would encourage the use of LINEAR_DEPTH for better performance and simpler user fragment shader code whenever the updated vertex Z coordinate is available.

It would also reduce any confusion about how to set DEPTH, since the use of PROJECTION_MATRIX is non-trivial for new shader developers.

The value is LINEAR_DEPTH is usually negative, since it is just a replacement Z component of the VERTEX vector which is in the view space. I could have added a few negations to make it positive (as a depth), but that would just complicate the arithmetic involved. Whoever develops such advanced depth writing shaders should easily accommodate to the negative value anyway.

Any problems with this approach? Any possible performance issues on mobile?

@clayjohn Implement your suggestion:

In scene_shader_forward_clustered.cpp defined:

actions.usage_defines["DEPTH"] = "#define DEPTH_USED\n";

In scene_forward_clustered.glsl at the beginning of LIGHTING section:

#ifdef DEPTH_USED

vec3 ndc = vec3(screen_uv * 2.0 - 1.0, gl_FragDepth);

vec4 view_pos = inv_projection_matrix * vec4(ndc, 1.0);

vertex.z = view_pos.z / view_pos.w;

#ifdef USE_MULTIVIEW

view = -normalize(vertex - scene_data.eye_offset[ViewIndex].xyz);

#else

view = -normalize(vertex);

#endif

#endif

The depth calculation is based on the advanced post-processing documentation.

Tested with the same good result, but without having to set VERTEX from the user fragment shader.

Also reverted the VERTEX variable to be read-only.

As you can see it takes a lot of work to calculate the vertex.z from the depth. Better performance could be achieved if the user fragment shader already have the Z component available.

Now the PR has both a compatible shadow fix (requires writing the DEPTH only) and LINEAR_DEPTH as an alternative (see my comment above).

It has not been ported to the mobile Vulkan renderer and the GLES3 driver yet. I will do the porting and the documentation update once we agree about this solution in general.

Thank you for reviewing it.

Preliminary fragment shader documentation update:

+----------------------------------------+--------------------------------------------------------------------------------------------------+

| out float **DEPTH** | Custom depth value (0..1). Consider setting LINEAR_DEPTH instead if that is easier to produce. |

+----------------------------------------+--------------------------------------------------------------------------------------------------+

| out float **LINEAR_DEPTH** | Custom linear depth value. Replaces the Z coordinate in view space for lighting calculations. |

| | Sets DEPTH as well by projecting the updated surface coordinate. Usually has a negative value. |

+----------------------------------------+--------------------------------------------------------------------------------------------------+

Do you agree with the above solution? (Compatible fix if only DEPTH is set. Providing LINEAR_DEPTH as an alternative.)

If you do agree, then I would go ahead and complete the PR by porting the change to GLES3 and mobile Vulkan.

I'm not sure I agree, exposing both DEPTH and LINEAR_DEPTH seems like it will be confusing to users (especially since both can be set, but then DEPTH will silently be ignored.

Ultimately, the only benefit of using LINEAR_DEPTH is saving a few instructions in the case where the user manipulates VERTEX in view space. In my opinion its not worth adding a new built-in just to save a single matrix multiplication.

Also, there is no point in doing a full matrix multiplication and then immediately throwing out the xy and w components. It is likely more efficient to only do the calculations necessary to calculate the z-channel. That way you can reduce the overhead to as few instructions as possible and maintain a simple API for users.

I'm a bit unsure if clayjohn's approach might cause floating point precision errors. I'd only be worried about z-fighting between two shaders which had different view matrices but tried to write the same depth value.

But I'd rather try @clayjohn's approach of only using DEPTH, until a need for the more complicated LINEAR_DEPTH or other system is deemed necessary. Godot strives to keep systems as simple as possible until a need for a more complex system is demonstrated. A performance bottleneck has not been demonstrated here. Depth-writing shader performance in my experience will be dominated by overdraw and loss of early depth test optimization.

(If we're going to bring up performance, the first thing to implement would be this: https://registry.khronos.org/OpenGL/extensions/ARB/ARB_conservative_depth.txt with a render_mode depth_less or DEPTH_LESS = variable - but honestly IMHO we have bigger fish to fry in 4.0, so I wouldn't really push for conservative depth until a later 4 version once people have had time to try things enough to demonstrate a bottleneck.)

I'm not sure I agree, exposing both

DEPTHandLINEAR_DEPTHseems like it will be confusing to users (especially since both can be set, but thenDEPTHwill silently be ignored.Ultimately, the only benefit of using

LINEAR_DEPTHis saving a few instructions in the case where the user manipulatesVERTEXin view space. In my opinion its not worth adding a new built-in just to save a single matrix multiplication.Also, there is no point in doing a full matrix multiplication and then immediately throwing out the xy and w components. It is likely more efficient to only do the calculations necessary to calculate the z-channel. That way you can reduce the overhead to as few instructions as possible and maintain a simple API for users.

I have to disagree here. My last VisualShader PR added the Linear Depth node anyway. (I would have added it in my other PR before as well in as a built-in if I knew this workaround.) So currently we have Depth Texture and Linear Depth in Visual Shaders. I do not see why having both DEPTH and Linear Depth can't be there (as long as both are useful). I asked myself before already why this wasn't simplified since I have never encountered a usecase for using a different depth value scale (except for internal uses perhaps).

Do you agree that not receiving shadow (correctly) in case of depth writing shaders is actually a bug?

I think fixing this at least for the DEPTH case should go into Godot 4.0.

That would not need the LINEAR_DEPTH, although I would really like to have it for my case to avoid the extra calculations.

As a compromise, I suggest restricting this PR to fix the shadow with no change to the shader variables.

ARB_conservative_depth

Later we can have another PR to rework the depth as mentioned by @lyuma above. That could include a linear depth option.

Also, there is no point in doing a full matrix multiplication and then immediately throwing out the xy and w components.

The compiler's optimizer is expected to take care of such cases, so we can have more readable code closer to the math involved. Sorry if I have been naïve in this regard. I need to read-up on how good GLSL optimizers are these days.

Do you agree that not receiving shadow (correctly) in case of depth writing shaders is actually a bug? I think fixing this at least for the DEPTH case should go into Godot 4.0.

Yes, I do agree this is a bug and should be fixed for the DEPTH case for 4.0 without a change to the API.

After that, we can certainly discuss adding LINEAR_DEPTH in a proposal. With things like this it is always a tradeoff between complexity/usability/performance. So having input from more users can definitely sway the decision one way or the other. My gut tells me that the tradeoff is not worth it, but I am happy to hear from other users and contributors who would benefit from the change.

Fixed the shadow issue of depth writing shaders for rendering backends:

- Vulkan desktop, tested OK

- Vulkan mobile, tested OK on desktop, not tested on actual mobile device

- GLES3: My project does not have any shadows on OpenGL, so could not test it. It does not crash at least.

Should we keep the fix only for Vulkan? Does it worth the effort to fix it for GLES3?

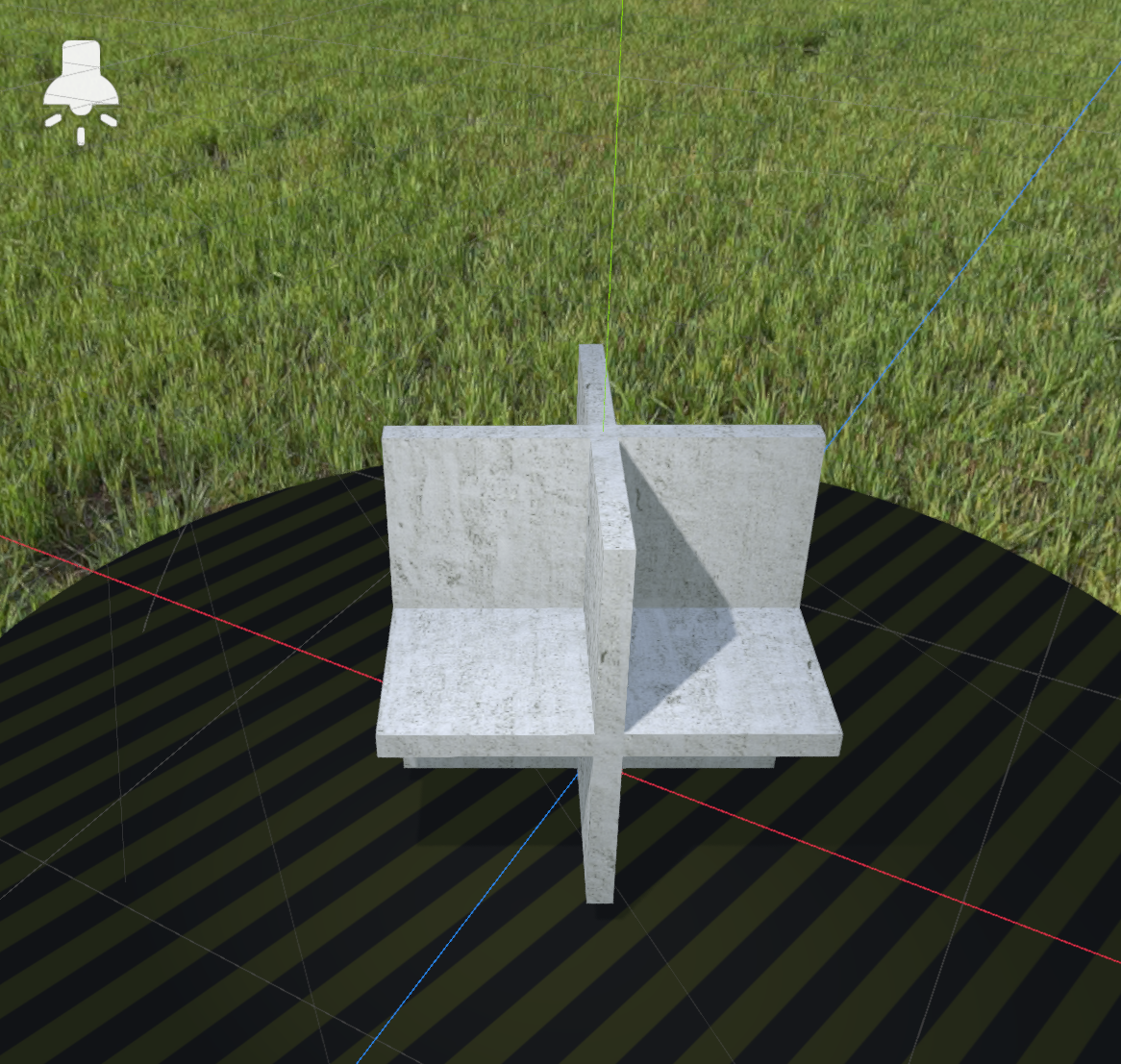

Test project for the depth setting shader + shadow case: UPDATE: Outdated, please use DepthSettingShaderTest.zip from my later comment. ~~DepthSettingShaderTest.zip~~

Alpha 16:

Fixed editor built from the PR's branch:

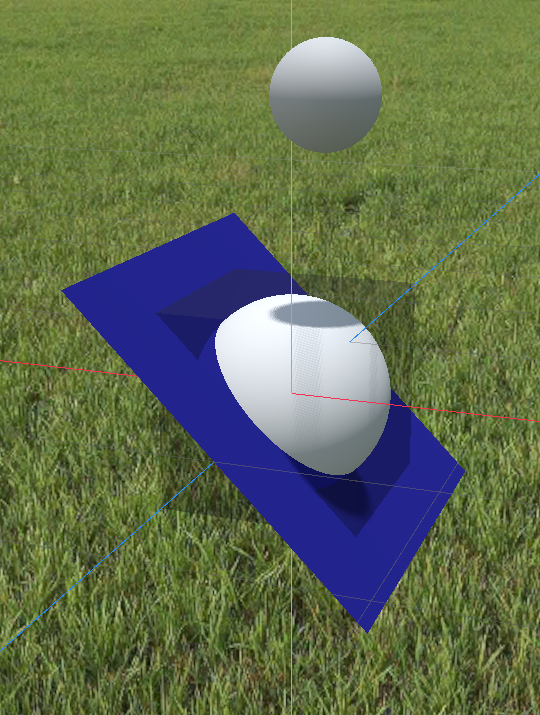

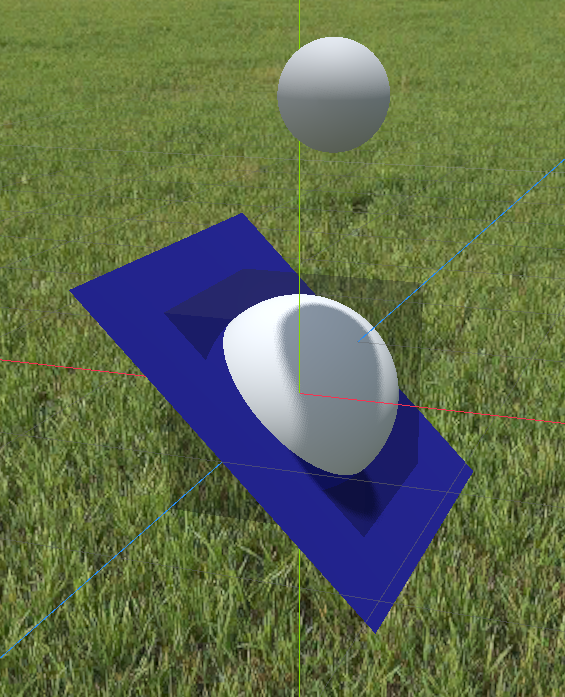

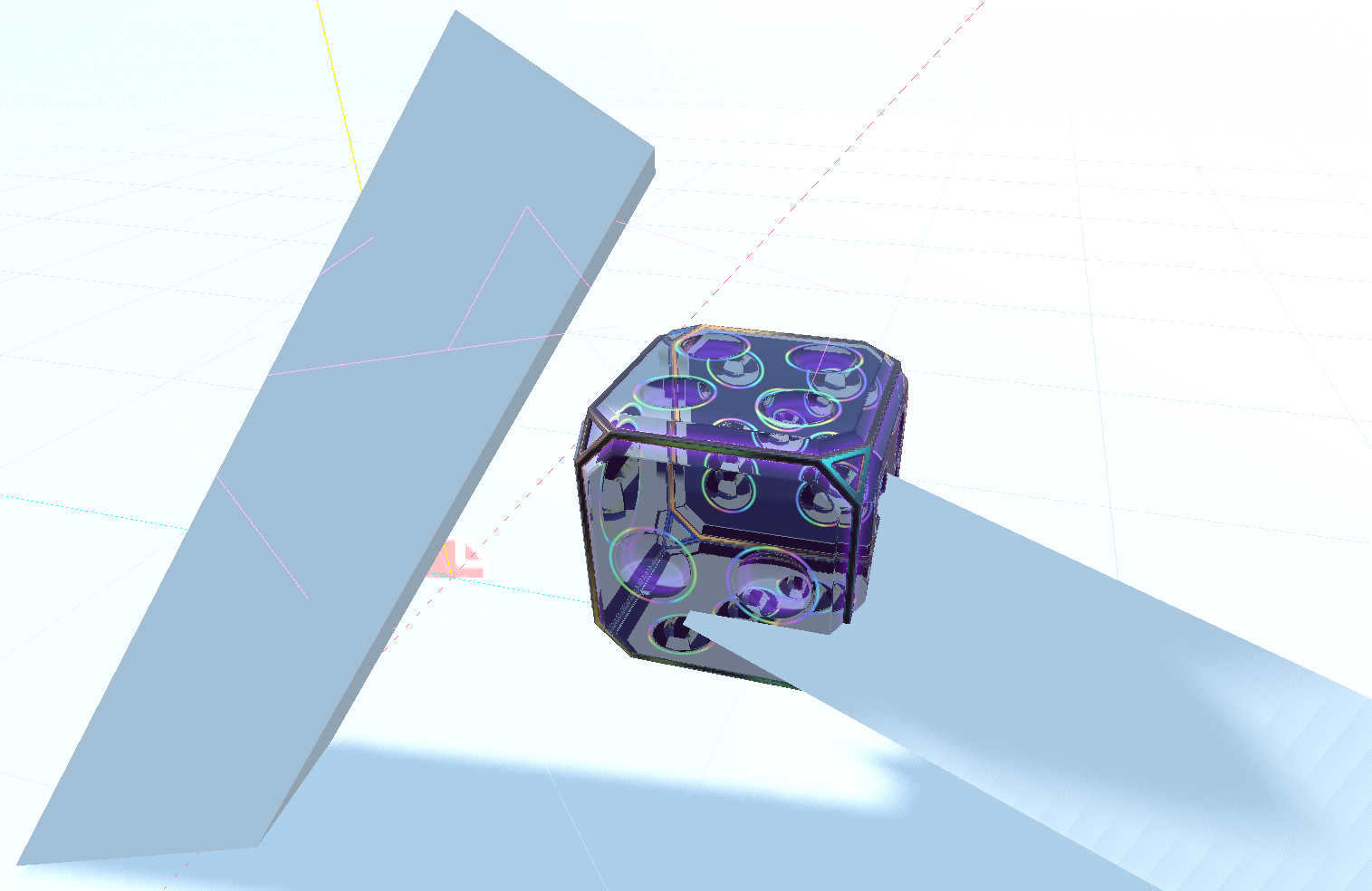

The shader carves out a sphere from a cube. A directional light points directly downwards.

The intersecting blue plane is to prove that the DEPTH is correct.

The shader has a bool uniform to enable/disable showing the enclosing cube with transparency for reference.

- Vulkan desktop and mobile works.

- GLES3 does not show shadow and the plane intersection is not there, I don't know why...

Enjoy!

Better version of the test scene with correct DEPTH on GLES3. Had to special case it, because the DEPTH range is different from vulkan. See here

With the current PR code ae46dc67 it works well on Vulkan. I'm done working on this, have spent days of free time on it. Can still revert the GLES3 part if we cannot test it.

I think we should take the fix for Vulkan as is, because it works.

In the future I suggest adding a render mode to reinterpret FRAGCOORD.z and DEPTH on the linear scale. The non-linear depth buffer value is implementation dependent, so we should not force game devs to deal with that. There is a big confusion about it on the forums and very little reliable documentation.

A related observation:

Included render_mode cull_front in the shader of the test scene attached above. It allows the camera to go closer to the sphere without unwanted culling at the corners of the enclosing cube, so it has practical use.

It renders the same sphere as expected, but the shadow becomes broken: Smaller and moving on the sphere as the camera moves around, which it should not do at all. It happens despite the shader sets the exact same DEPTH and NORMAL for each pixel.

I tried to flip the mesh faces to achieve the same effect while using the default culling mode in the shader. This gives the exact same broken shadow. Apparently the face orientation on the mesh has nothing to do with the problem, only the rendered face orientation.

As far as I understand this is a problem or limitation with the shadow mapping or the directional light's shadow calculation. I tried to tweak the shadow parameters both in the light and in project settings, but they had no effect at all.

So there is still some other limitation here with the lighting model which makes these depth writing shaders casting wrong shadows.

I will add a separate ticket for this issue once this PR is merged. Until then even the problem reproduction would not work on master.

Okay, I will resolve, rebase and squash it tomorrow, so this will be ready. The GLES3 addition will be commented out, since I could not test it (there was no shadow rendered).

Okay, I will resolve, rebase and squash it tomorrow, so this will be ready. The GLES3 addition will be commented out, since I could not test it (there was no shadow rendered).

Please leave it in. The code is fine, I will be adding shadows to GLES3 soon and I know I will forget to add this in when I do.

Squashed, rebased, verified.

Kept the GLES3 fix as requested.

I did not know shadows are not there yet on GLES3.

Found more problems related to this fix. Lights and shadow are using vertex_ddx and vertex_ddy. These are not updated properly if DEPTH changes, which would affect projectors (currently not testable) and decals.

Vulkan: Fixed the issue with vertex_ddx and vertex_ddy by fixing vertex and view right after the inclusion of the user FRAGMENT code (moved the newly added code up from the LIGHTING section). This way vertex is updated before the derivatives are taken.

GLES3: Same code reordering for consistency, but found not such derivatives there.

@clayjohn This PR is incomplete, please do not merge it yet.

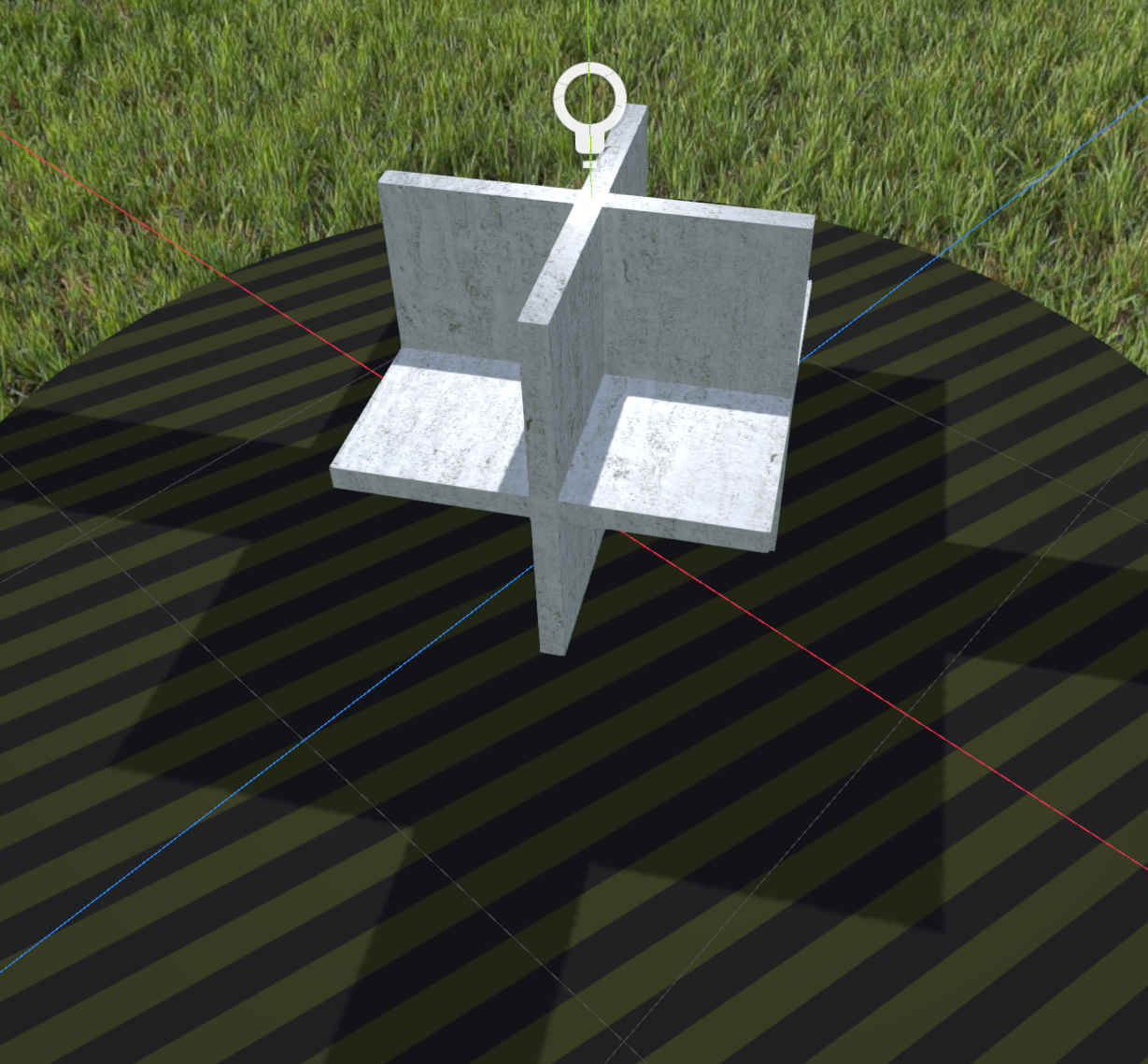

Without the PR a decal properly renders on a back-face rendered cube with a DEPTH setting shader, altough the depth is obviously not what the shader wants:

With the PR enable the depth is good (the cube appears to be filled, the surface texture is correct), but the decal renders incorrectly:

Same applies to all received shadows and lights.

I'm working on extending the PR to fix these cases as well.

Works well now.

Problem was that the whole vertex needs to be set, since view rays are not parallel in view space. Also optimized out a few redundant normalizations of normal and view vectors. Also made sure normal is used after FRAGMENT, not normal_interp which cannot be changed in the user fragment shader code.

Examples:

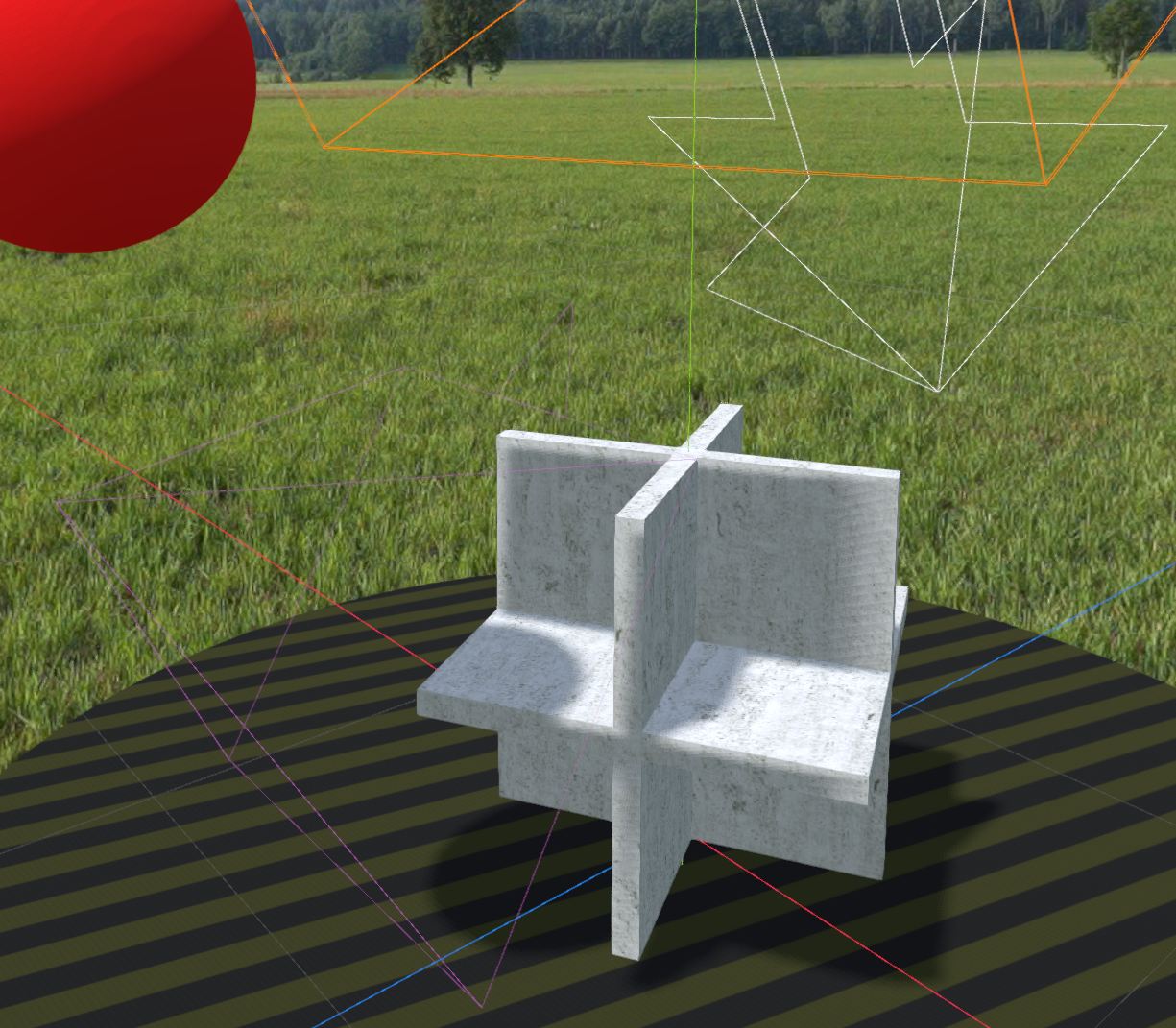

Directional light, received shadow, self-shadow, drop shadow:

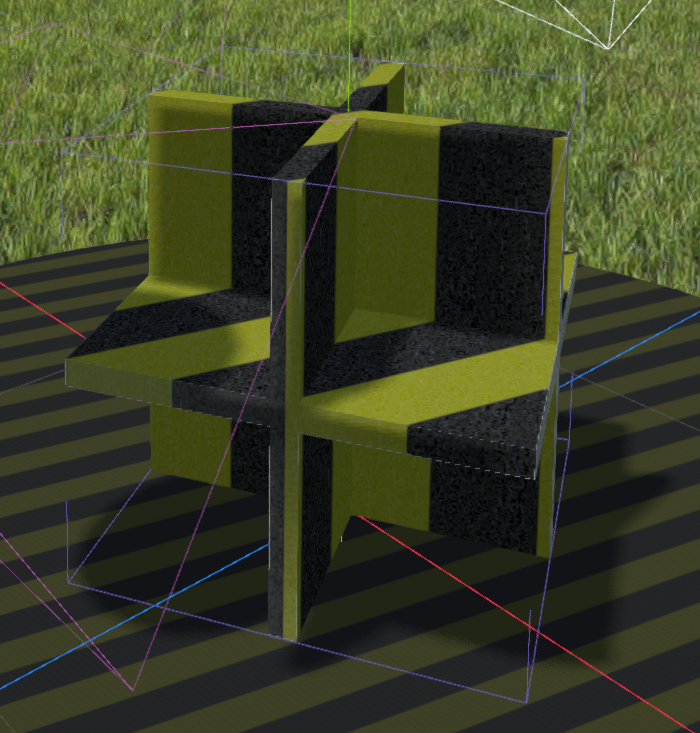

Decal:

Spotlight:

Omni light 20cm above (cube has 1m side):

Could not test with projector lights, but since decals work that should work as well once they are fixed by another PR.

IMO this is a good case for supplying custom base shaders and not add the feature in core, so I would wait on this.

I disagree. It is a bug in the existing GLSL templates, not a new feature. User fragment shaders are allowed to write DEPTH, which is expected to work correctly. This PR fixes just that without adding any new variables or semantics. Also, it does not decrease the performance of shaders not writing DEPTH.

IMO this is a good case for supplying custom base shaders and not add the feature in core, so I would wait on this.

If performance is a problem for some shaders, can we make this toggleable in the project settings? After doing this, we may want to consider disabling this by default on mobile.

I agree that a system for customizing base shaders should be added in a future 4.x release, but this PR seems logical to have in core on its own.

Regarding the use of geometric normals (normalize(normal_interp)) in the fragment shader template:

- Introduced a new

geometric_normalvariable to make it clear whenever it is used. - The

geometric_normalis set fromnormalright before applying the normal map ifNORMAL_MAP_USEDis defined. - If no normal map is used, then

#define geometric_normal normalfor best performance. (Don't rely on optimizers...) - After that point replaced all

normalize(normal_interp)withgeometric_normal. - Both

normalandgeometric_normalare guaranteed to be normal vectors, so no need for the redundantnormalizecalls.

The above guarantees that no backwards incompatible changes made to lighting which would affect the normal without the influence of normal maps.

Thanks goes to @lyuma for pointing out this issue.

IMO this is a good case for supplying custom base shaders and not add the feature in core, so I would wait on this.

I respectfully disagree: this is a bugfix, not a feature.

If DEPTH is supported in Godot, it should be possible to use it without sacrificing core rendering features such as shadows.

By the way, I didn't test your patch or anything yet, but I was able to use a depth writing shader with a directional light on Godot 4.0 Beta1. I'd be curious if you could try this with your patch as well.

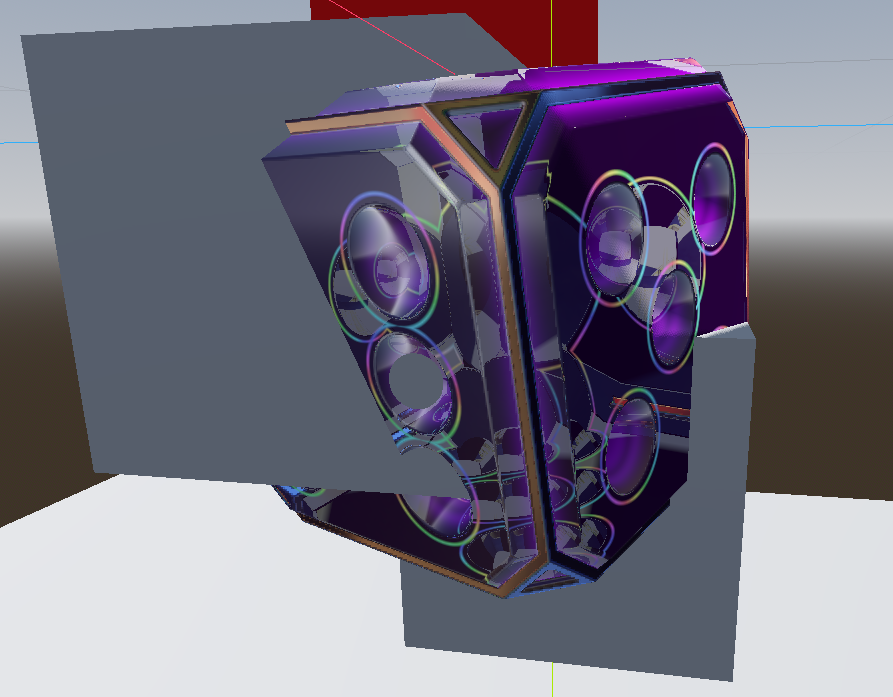

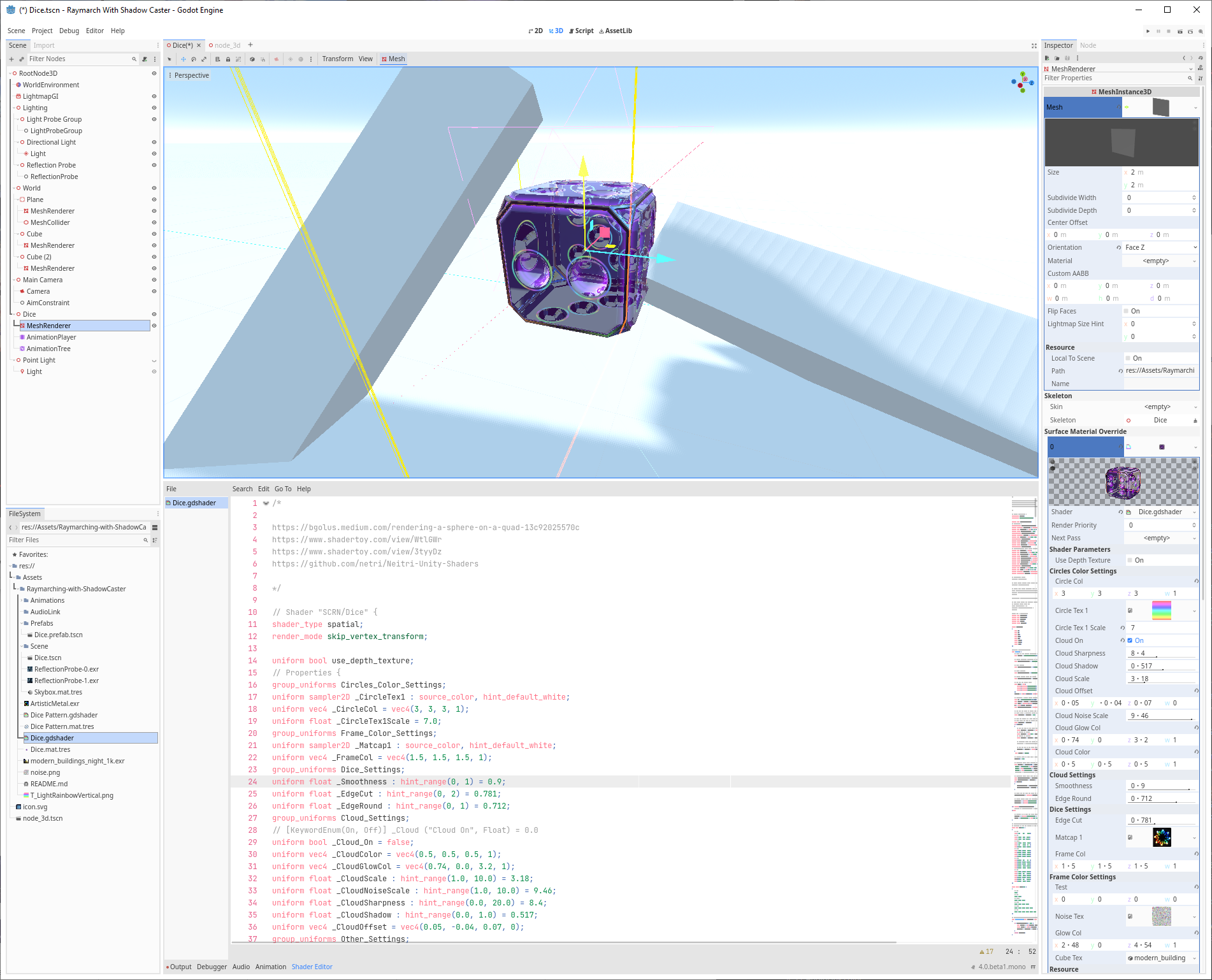

The original is for Unity by SCRN, and it is supposed to support lighting as well. I haven't implemented lighting yet. Here is the original code: https://github.com/SCRN-VRC/Raymarching-with-ShadowCaster

And here is my Godot port of the project with material if you want to test. RaymarchWithShadowCaster.zip

Here's just the shader code uploaded as a gist: https://gist.github.com/lyuma/e755620e2abe2593de94aed5021cdd3f

If you want to see the difference with / without depth & discards, uncomment //#define DEBUG_VERTEX_SHADER right above the vertex function.

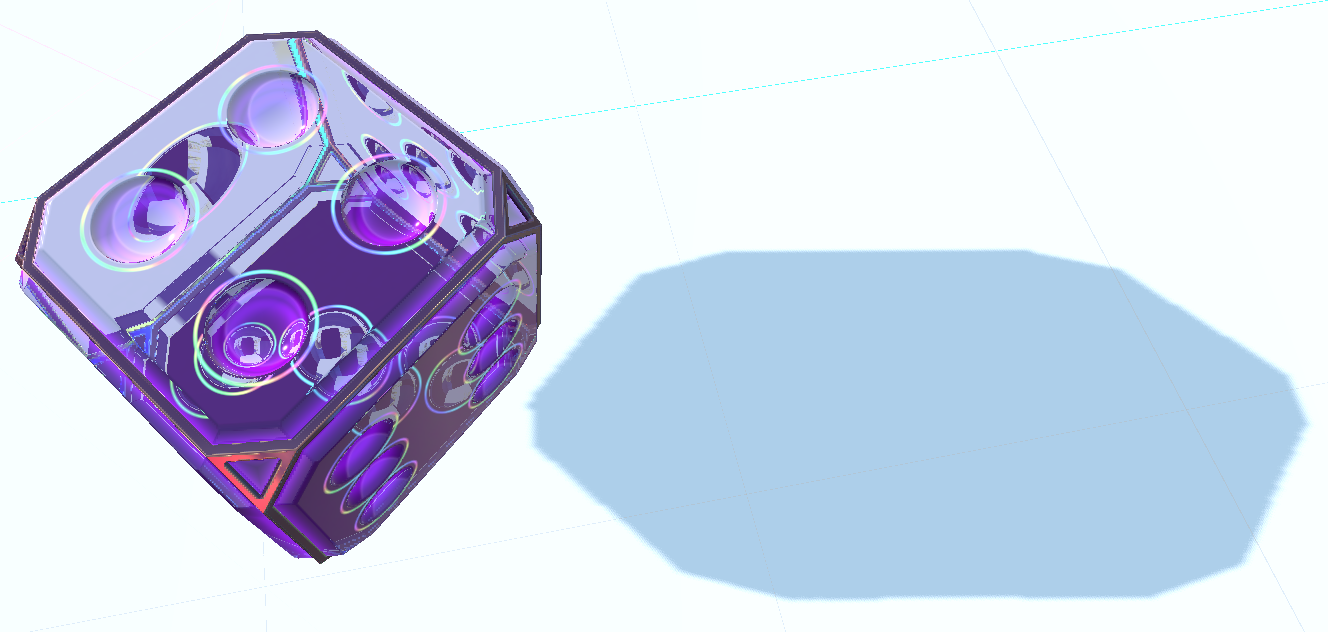

Seems to work correctly with the PR, as far as I can tell. Drops shadow, receives shadow an intersects properly:

The received shadow is not very visible, but it is clearly there if I move the left side box which drops the shadow on that fancy dice. The shadow also moves correctly over the cut corners, so the lighting looks correct.

Lowered the max distance of the light's shadow to 20m to make it sharper. It uncovers that the shadow correctly considers the cut corners of the dice, they are clearly visible and look correct:

Video of shadow moving over the corner working correctly (light max distance is lowered to 20m to make the shadow sharper):