Carbonserver panic and Out of Memory

Hello here,

we're running a graphite production cluster based on a carbon-c-relay frontend and go-carbon as a backend.

The cluster is composed by 3 go-carbon nodes running on well-equipped servers (128GB of memory and a bunch of 8 core cpus).

We've recently upgraded to go-carbon version 0.10.0. Futhermore we're currently running a nightly cron task to clean up the stale wsp files.

The problem we've experienced today was an out of memory, followed by the OOM intervention on all the 3 nodes, presumably caused by the carbonserver component.

What we've seen was the OOM intervention:

[20944265.175516] Out of memory: Kill process 30346 (go-carbon) score 726 or sacrifice child

[20944265.175782] Killed process 30346 (go-carbon) total-vm:115783724kB, anon-rss:99334204kB, file-rss:0kB

[20946896.158156] perf samples too long (10010 > 10000), lowering kernel.perf_event_max_sample_rate to 12500

[21361096.704491] TCP: TCP: Possible SYN flooding on port 2003. Sending cookies. Check SNMP counters.

associated with the following error stacktrace in the go-carbon logs:

[2017-07-06T10:45:33.416Z] ERROR [carbonserver] panic encountered {"handler": "fileListUpdated", "stack": "github.com/lomik/go-carbon/vendor/go.uber.org/zap.Stack\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/vendor/go.uber.org/zap/field.go:209\ngithub.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).updateFileList.func1\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:340\nruntime.call32\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/asm_amd64.s:514\nruntime.gopanic\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/panic.go:489\nruntime.panicmem\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/panic.go:63\nruntime.sigpanic\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/signal_unix.go:290\ngithub.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).updateFileList\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:418\ngithub.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).fileListUpdater\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:331", "error": "runtime error: invalid memory address or nil pointer dereference"}

[2017-07-06T10:46:02.420Z] ERROR [persister] failed to open whisper file {"path": "/data/graphite/whisper/sprcom/servers/qa/rabbitmq_mothership-qa/queues-MOCacheInvalidationTopic_sgw_SI2_/r

eturn.wsp", "error": "open /data/graphite/whisper/sprcom/servers/qa/rabbitmq_mothership-qa/queues-MOCacheInvalidationTopic_sgw_SI2_/return.wsp: too many open files"}

[2017-07-06T10:46:02.420Z] ERROR [persister] failed to open whisper file {"path": "/data/graphite/whisper/servers/sl-live-aim-03/irq/irq-72.wsp", "error": "open /data/graphite/whisper/serve

rs/sl-live-aim-03/irq/irq-72.wsp: too many open files"}

[2017-07-06T10:46:02.420Z] ERROR [persister] failed to open whisper file {"path": "/data/graphite/whisper/servers/sl-live-rone-ml-master-11_springer-sbm_com/irq/irq-108.wsp", "error": "open

/data/graphite/whisper/servers/sl-live-rone-ml-master-11_springer-sbm_com/irq/irq-108.wsp: too many open files"}

[2017-07-06T10:46:02.420Z] ERROR [persister] failed to open whisper file {"path": "/data/graphite/whisper/servers/sl-live-static-content-02/df-a05/df_complex-reserved.wsp", "error": "open /

data/graphite/whisper/servers/sl-live-static-content-02/df-a05/df_complex-reserved.wsp: too many open files"}

From our metrics dashboards (which include an external Datadog) we could not see the memory usage spike, and we think it actually happened extremely sudden.

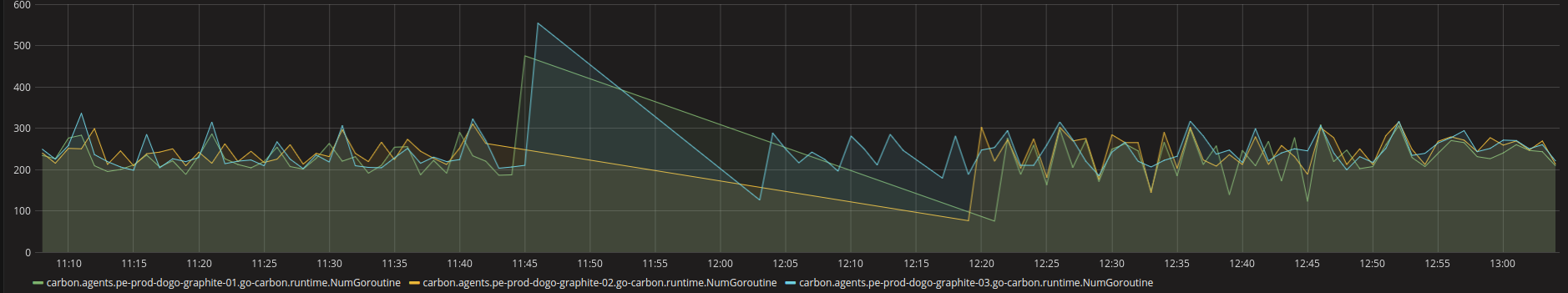

The only evident oddity is, maybe, a visible spike in the number of goroutines just before the processes crashed:

Looking at the source, on the (seemingly) faulty path, we also noticed that the Unlock call to the mutex here is not deferred.

Any help or support on this would be really appreciated :)!

Many thanks, Claudio Benfatto

I tried and managed to reproduce the bug:

I initially set the scan-frequency carbonserver parameter to a relatively small value:

scan-frequency = "10s"

- then I sent 5 metrics to graphite:

for i in $(seq 1 5);do echo "test.metric.$i 1 `date +%s`" | nc -w 1 localhost 20030; done;

- accessed and then deleted them:

curl -X GET "http://localhost:10000/render?format=json&target=test.metric.*"

rm -rf /data/graphite/whisper/test/metric/*

- sent them again:

for i in $(seq 1 5);do echo "test.metric.$i 1 `date +%s`" | nc -w 1 localhost 20030; done;

- At this point I see a panic stacktrace in the

go-carbonlog file:

[2017-07-07T09:44:30.779Z] ERROR [carbonserver] panic encountered {"handler": "fileListUpdated", "stack": "github.com/lomik/go-carbon/vendor/go.uber.org/zap.Stack\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/vendor/go.uber.org/zap/field.go:209\ngithub.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).updateFileList.func1\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:340\nruntime.call32\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/asm_amd64.s:514\nruntime.gopanic\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/panic.go:489\nruntime.panicmem\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/panic.go:63\nruntime.sigpanic\n\t/home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/signal_unix.go:290\ngithub.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).updateFileList\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:418\ngithub.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).fileListUpdater\n\t/home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:331", "error": "runtime error: invalid memory address or nil pointer dereference"}

- and if I render the metrics again:

curl -X GET "http://localhost:10000/render?format=json&target=test.metric.*"

- this is what I get:

root@graphite2:~# lsof -p 31809 | grep CLOSE_WAIT

go-carbon 31809 root 11u IPv6 23789142 0t0 TCP graphite2.domain.com:http-alt->graphite2.domain.com:43314 (CLOSE_WAIT)

go-carbon 31809 root 14u IPv6 23789147 0t0 TCP graphite2.domain.com:http-alt->graphite2.domain.com:43315 (CLOSE_WAIT)

go-carbon 31809 root 15u IPv6 23789152 0t0 TCP graphite2.domain.com:http-alt->graphite2.domain.com:43316 (CLOSE_WAIT)

go-carbon 31809 root 16u IPv6 23789157 0t0 TCP graphite2.domain.com:http-alt->graphite2.domain.com:43317 (CLOSE_WAIT)

go-carbon 31809 root 17u IPv6 23789162 0t0 TCP graphite2.domain.com:http-alt->graphite2.domain.com:43318 (CLOSE_WAIT)

- done it 2 more times:

root@graphite2:~# lsof -p 31809 | grep CLOSE_WAIT | wc -l

10

[]root@graphite2:~lsof -p 31809 | grep CLOSE_WAIT | wc -l*"

15

- and finally after enabling

pprofand looking at the active goroutines:

goroutine profile: total 52

15 @ 0x42e25a 0x42e33e 0x43ebb1 0x43e894 0x46e2ad 0x46e4a4 0x6f94e8 0x6f83c4 0x6f85c6 0x814654 0x7e8bad 0x7eb926 0x6e5c70 0x45b381

# 0x43e893 sync.runtime_SemacquireMutex+0x33 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/sema.go:62

# 0x46e2ac sync.(*Mutex).Lock+0x9c /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/sync/mutex.go:87

# 0x46e4a3 sync.(*Once).Do+0x43 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/sync/once.go:40

# 0x6f94e7 github.com/lomik/go-carbon/cache.(*WriteoutQueue).makeRebuildCallback.func1+0xa7 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/cache/writeout_queue.go:62

# 0x6f83c3 github.com/lomik/go-carbon/cache.(*WriteoutQueue).get+0x1a3 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/cache/writeout_queue.go:101

# 0x6f85c5 github.com/lomik/go-carbon/cache.(*WriteoutQueue).GetNotConfirmed+0x65 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/cache/writeout_queue.go:117

# 0x814653 github.com/lomik/go-carbon/cache.(*WriteoutQueue).GetNotConfirmed-fm+0x33 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbon/app.go:220

# 0x7e8bac github.com/lomik/go-carbon/persister.(*Whisper).worker+0xec /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/persister/whisper.go:218

# 0x7eb925 github.com/lomik/go-carbon/persister.(*Whisper).Start.func1.1+0x45 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/persister/whisper.go:272

# 0x6e5c6f github.com/lomik/go-carbon/helper.(*Stoppable).StartFunc.func1.1+0x2f /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/helper/stoppable.go:42

15 @ 0x42e25a 0x42e33e 0x43ebb1 0x43e894 0x46e2ad 0x7c9fbb 0x7d6a00 0x7e1948 0x66e834 0x7e00e2 0x783a8d 0x783bd0 0x66e834 0x66fc70 0x77dadd 0x66e834 0x6710f2 0x66d3a2 0x45b381

# 0x43e893 sync.runtime_SemacquireMutex+0x33 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/runtime/sema.go:62

# 0x46e2ac sync.(*Mutex).Lock+0x9c /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/sync/mutex.go:87

# 0x7c9fba github.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).UpdateMetricsAccessTimes+0x7a /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:309

# 0x7d69ff github.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).renderHandler+0x1d7f /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:1041

# 0x7e1947 github.com/lomik/go-carbon/carbonserver.(*CarbonserverListener).(github.com/lomik/go-carbon/carbonserver.renderHandler)-fm+0x47 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:1547

# 0x66e833 net/http.HandlerFunc.ServeHTTP+0x43 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/net/http/server.go:1942

# 0x7e00e1 github.com/lomik/go-carbon/carbonserver.TraceHandler.func1+0x251 /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/carbonserver/carbonserver.go:140

# 0x783a8c github.com/lomik/go-carbon/vendor/github.com/dgryski/httputil.TimeHandler.func1+0x7c /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/vendor/github.com/dgryski/httputil/times.go:26

# 0x783bcf github.com/lomik/go-carbon/vendor/github.com/dgryski/httputil.TrackConnections.func1+0xef /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/vendor/github.com/dgryski/httputil/track.go:40

# 0x66e833 net/http.HandlerFunc.ServeHTTP+0x43 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/net/http/server.go:1942

# 0x66fc6f net/http.(*ServeMux).ServeHTTP+0x12f /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/net/http/server.go:2238

# 0x77dadc github.com/lomik/go-carbon/vendor/github.com/NYTimes/gziphandler.NewGzipLevelHandler.func1.1+0x15c /home/travis/gopath/src/github.com/lomik/go-carbon/_vendor/src/github.com/lomik/go-carbon/vendor/github.com/NYTimes/gziphandler/gzip.go:167

# 0x66e833 net/http.HandlerFunc.ServeHTTP+0x43 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/net/http/server.go:1942

# 0x6710f1 net/http.serverHandler.ServeHTTP+0x91 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/net/http/server.go:2568

# 0x66d3a1 net/http.(*conn).serve+0x611 /home/travis/.gimme/versions/go1.8.1.linux.amd64/src/net/http/server.go:1825

I hope this could help fixing the issue ;)

Managed to reproduce this with 0.10.1 too (same procedure)

I tried this change: https://gist.github.com/claudio-benfatto/a443ae2452b97ca6d661fe8c81936248 and it helped fixing the bug caused by the panic in combination with the unreleased lock.

While this would mitigate the effects of the panic itself, it does not solve its root cause, which is to attribute, I guess, to some, not synchronized memory references. The references seem to be updated by a subsequently render operation only

Thanks for the detailed report! @Civil, FYI

I'll have a look at that monday or so.

Can you please check (and post trace) if you can reproduce that with master branch?

https://github.com/lomik/go-carbon/commit/c539e3466bf3124b56ce548aeb7bd5509b25cffa - this commit changed the logic quite a bit and honestly I can't reproduce that even on 0.10.1

hello, @Civil I confirm that it doesn't seem to happen when using the master branch.

It definitely happens in v0.10.1, and I think this is expected given that the logic didn't really change much in respect to v0.10.0.

The reason why this happens is that at this point: https://github.com/lomik/go-carbon/blob/v0.10.1/carbonserver/carbonserver.go#L410-L421

if fidx != nil {

fidx.Lock()

for m := range fidx.accessedMetrics {

accessedMetrics[m] = struct{}{}

}

for m := range fidx.accessedMetrics {

if d, ok := details[m]; ok {

d.RdTime = fidx.details[m].RdTime

}

}

fidx.Unlock()

}

if you follow the steps I posted, the details dictionary will contain the re-added metrics, while the fidx won't.

And this is because the fidx index was updated during the previous iteration: https://github.com/lomik/go-carbon/blob/v0.10.1/carbonserver/carbonserver.go#L425-L432 when the files were still absent because deleted.

So a panic is generated here: https://github.com/lomik/go-carbon/blob/v0.10.1/carbonserver/carbonserver.go#L418 when you access fidx.details[m].

Anyhow, imho a defer for that lock would be good anyhow, defensively speaking, as the cost of introducing a bug in that area of code, would most likely result in crashing the servers (and the whole cluster).

In case of panic carbonserver should actually stop working, that's saner than trying to run despite of that.

And again if the panic happens there, then the bug is somewhere in how accessedMetrics is populated, because it should contain only keys that are present in fidx.details.

As about this lock - in current code state it shouldn't be deferred, because it might lead to double unlock in case of failure in some other parts of this function. It might though if I'll move that loop into it's own function. Not sure that it's worth it.

I have only a superficial knowledge of the carbonserver code, therefore I'm not going to discuss further the points you highlighted.

However, I want to remark that a panic in that section, given that the goroutines are not bounded, would bring the entire server to resource starvation, and this means that both go-carbon and carbonserver (which are co-located), would stop working (breaking not only the carbonapi api side but also the metric ingestion).

Hi @Civil, I am a colleague of Claudio. About this issue, one of the collateral effects we saw was the persister threads of go-carbon stop working because it runs out of FD (our hard limit is 4096, which was more than enough until we found this issue):

[2017-07-06T10:46:02.421Z] ERROR [persister] failed to open whisper file {"path": "/data/graphite/whisper/sprcom/servers/qa/rabbitmq_mothership-qa/queues-EBooksListenerMDB/rabbitmq_details-messagesrate.wsp", "error": "open /data/graphite/whisper/sprcom/servers/qa/rabbitmq_mothership-qa/queues-EBooksListenerMDB/rabbitmq_details-messages/rate.wsp: too many open files"}

The second effect is go-carbon process does do not die and it keeps throwing those messages in the logs each time it cannot open a file (also filling /var).

The point is, even if the carbonserver panics and stops working cleanly, there is still running the persister part, so, the process is not working properly: it is not doing what it should do and the only way to recover it is restarting the process. In my opinion, your approach of let the carbonserver panics is fine if it runs on its own process and then it crashes and exits (with error code). But, as the persister part (go-carbon) is also running on the same process now both parts of the service (persister and "API" are affected.

We have panic issue with enabled carbonserver as well.

@maggnus the same questions:

- Is it still happening with go-carbon from master branch?

- If it happens - what's the error message and a stack trace for the panic?

@jriguera well, that's I think very good question how to behave in such situations. My opinion is that we should shutdown go-carbon in that case. It will be impossible to reliably and correctly handle all the cases.

For example in that case we end up having fidx in some unknown state which I don't think is possible to reliably recover.

@Civil

- yes

# /opt/go-carbon/bin/go-carbon --version

0.10.1

- I did not see any stack trace messages, just OOM Killer notification

Out of memory: Kill process 18913 (go-carbon) score 266 or sacrifice child

18.18% go-carbon go-carbon [.] runtime.greyobject

11.47% go-carbon go-carbon [.] runtime.heapBitsForObject

7.95% go-carbon go-carbon [.] strings.genSplit

5.59% go-carbon go-carbon [.] runtime.mallocgc

4.99% go-carbon [vdso] [.] __vdso_clock_gettime

4.27% go-carbon go-carbon [.] runtime.scanobject

3.67% go-carbon go-carbon [.] runtime.mapaccess2_faststr

2.81% go-carbon go-carbon [.] github.com/lomik/go-carbon/cache.fnv32

1.87% go-carbon go-carbon [.] sync.(*RWMutex).Unlock

1.81% go-carbon go-carbon [.] runtime.memeqbody

1.51% go-carbon go-carbon [.] runtime.memmove

1.48% go-carbon go-carbon [.] runtime.memclrNoHeapPointers

1.43% go-carbon go-carbon [.] strconv.readFloat

1.36% go-carbon go-carbon [.] runtime.heapBitsSetType

1.20% go-carbon go-carbon [.] runtime.unlock

1.15% go-carbon go-carbon [.] github.com/lomik/go-carbon/cache.(*Cache).Add

0.92% go-carbon go-carbon [.] runtime.indexbytebody

0.91% go-carbon go-carbon [.] runtime.lock

@Civil Looks like trigram-index = false help us.

@maggnus still seems to be first of all - separate issue, and secondly - that's still not enough info. What kind of HW/VM do you have? What's the amount of metrics? What's the config file? etc.