git-credential-manager

`git-credential-manager-core`'s CPU usage peaks on Mac

Which version of GCM are you using?

2.0.785+6074e4e3d3 (macOS Monterey 12.4, M1 pro)

I have a CPU usage issue with my Mac.

Suddenly my Mac's CPU usage spikes, and I found that multiple git-credential-manager-core processes were consuming the CPU.

This happens occasionally (about twice a day?). Is there any possible reason for this?

Hello @ANTARES-KOR,

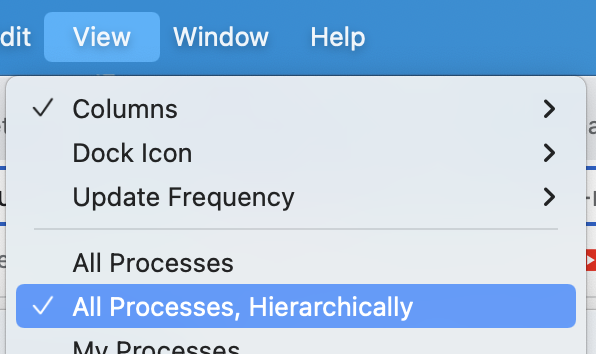

That's a lot of instances running! Something must be running Git commands in the background periodically. Please can you switch Activity Monitor to the "All Processes, Hierarchically" view and look at the parent processes of GCM to see what is triggering us?
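If you would rather check from a terminal than from Activity Monitor, a minimal sketch like the one below lists each running GCM process with its elapsed time and the command line of its parent process. The function name `list_with_parents` is hypothetical. The sketch assumes the `pgrep` and `ps` tools that ship with macOS (and with procps on Linux). It is an illustration, not a GCM tool.

```shell
#!/bin/sh
# Hypothetical helper: list processes whose command line matches a pattern
# (GCM by default), each followed by the command line of its parent process.
list_with_parents() {
  pattern=${1:-git-credential-manager}
  for pid in $(pgrep -f "$pattern"); do
    # A trailing '=' on a ps column suppresses the header line.
    ppid=$(ps -o ppid= -p "$pid" | tr -d ' ')
    [ -n "$ppid" ] || continue            # the process exited in the meantime
    etime=$(ps -o etime= -p "$pid" | tr -d ' ')
    cmd=$(ps -o command= -p "$pid")
    parent=$(ps -o command= -p "$ppid")
    # Output: PID <tab> elapsed time <tab> command <tab> "<- parent command"
    printf '%s\t%s\t%s\t<- %s\n' "$pid" "$etime" "$cmd" "$parent"
  done
}

list_with_parents
```

The parent column should show whether the caller is `git fetch` started by an editor, a GUI client, or something else.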

Do you use any 'auto-fetch' features in any editors/IDEs, or use any Git tools other than the git command-line?

You can also set the `GCM_TRACE` environment variable to an absolute file path so that all GCM instances write trace information to that file the next time GCM starts. Remember to restart all terminal instances and tools after updating your profile:

```shell
# zsh
echo "export GCM_TRACE=/tmp/gcm.log" >> ~/.zshrc

# bash
echo "export GCM_TRACE=/tmp/gcm.log" >> ~/.bashrc
```

I'm currently using GitHub Desktop and VS Code with auto-fetch enabled. Maybe this is the reason!

I'll test with these options disabled. If the problem happens again, I'll update this issue.

Thanks for all your support and fast response! :)

Going to close for now - @ANTARES-KOR please feel free to re-open if disabling the options doesn't work or you start to see this issue again.

I seem to be having a similar issue. There aren't many instances, but from the moment I run a Git command, GCM uses an entire CPU core for as long as I keep the Terminal open. It seems something is persisting?

If I run `ps aux | grep git`, the only entry aside from the grep itself is `/usr/local/share/gcm-core/git-credential-manager-core get`.

Running macOS 13.0.1

@me4502 - can you please follow the TRACE instructions that @mjcheetham called out above, re-run the Git command in question, and send over the results (redacting as needed)? This will hopefully help us get some additional visibility into your issue.

FWIW, since upgrading GCM a couple of days ago AND moving from PATs to OAuth, I see similar behavior: the VS Code UI lags and the mouse pointer jitters.

System is M1 Pro macOS 12.4. GCM is 2.0.785+6074e4e3d3

It is most noticeable when opening a VS Code workspace with ~10 repos. VS Code has its "Source Control" panel open, and the GitLens, Git Graph, and GitHub PRs extensions are installed. (Disabling all extensions did not seem to have an effect, so maybe VS Code itself pushes this to the limit.)

I spotted Activity Monitor showing more than 7 GCM instances running, just like the OP had, all with high CPU consumption. I was not in the "hierarchical view", though. (These are mostly Azure DevOps repos, plus a couple of GitHub repos.)

Lastly, I have been working with all those extensions and this multi-repo workspace for a long while without trouble. The only changes were moving from PAT to OAuth and upgrading GCM.

Looking into this some more, here are some findings:

- Definitely, almost every time the VS Code instance gets focus, it issues fetches on all repos in the workspace. As I said, I must assume this is not new behavior.

- GCM is invoked from VS Code as a subprocess of `git fetch`. All fetches run in parallel, each starts its own GCM instance, and all GCM instances take a large amount of CPU for a couple of seconds.

- This happens even if all fetches already ran a minute ago. It looks like no caching is in place to stop Git/GCM from doing whatever it is doing.
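You can try to reproduce this fan-out outside the editor. A rough sketch like the one below starts one background `git fetch` per repository directly under a workspace folder, waits for all of them, and prints how long each one took. Git runs its credential helper once per process that needs credentials, so parallel fetches against authenticated remotes would each start their own GCM. The function name `fetch_all_parallel` and the "repos one level below the workspace" layout are assumptions for illustration. This is not how VS Code implements auto-fetch.

```shell
#!/bin/sh
# Hypothetical helper: fetch every Git repository directly under a workspace
# folder in parallel (one background `git fetch` each), roughly mimicking an
# editor that fetches all repos of a multi-repo workspace at once.
fetch_all_parallel() {
  workspace=${1:-.}
  for repo in "$workspace"/*/; do
    [ -e "${repo}.git" ] || continue      # skip folders that are not repositories
    (
      start=$(date +%s)
      if git -C "$repo" fetch --quiet; then status=ok; else status=FAILED; fi
      # One line per repo: status, repo folder name, wall-clock seconds
      echo "$status $(basename "$repo") $(( $(date +%s) - start ))s"
    ) &
  done
  wait                                    # block until every background fetch finishes
}
```

For example, run `fetch_all_parallel ~/path/to/workspace` (a placeholder path) while watching Activity Monitor. Running the same fetches one after another would show whether the CPU spike depends on concurrency.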

This is an htop screenshot showing one such process tree, from VS Code down to GCM. I removed what I did not think was relevant, but it is possible that all the fetches come from an extension (I cannot tell from this view because the command line is too long). It may also be VS Code's built-in source control integration.

I have also attached a log file (redacted a bit, sorry) captured while such a GCM storm takes place. It's a single file, so the output of the processes is interleaved: gcm_mlti.log

I haven't been able to reproduce the issue I mentioned with logging enabled. My only guess is that because logging seems to make a push take ~10-20 seconds, it might be changing the run order of whatever was causing the issue.

For me, VS Code is configured to perform "AutoFetch" every 180 seconds.

Every time this auto-fetch runs, VS Code shows Git logs like the following:

```
2022-11-27 13:24:10.268 [info] > git fetch [4831ms]
2022-11-27 13:24:10.288 [info] > git remote --verbose [17ms]
2022-11-27 13:24:10.290 [info] > git config --get commit.template [17ms]
2022-11-27 13:24:10.292 [info] > git status -z -uall [20ms]
```

...and more, for every repo in the workspace.

That `fetch` row normally takes anywhere from 2 to 6 seconds. Sometimes it goes up to 15-20 seconds while CPU usage is high (with GCM as the consumer). So it doesn't happen on every fetch. I assume it is only for fetches that ask GCM to provide auth.

I will disable AutoFetch, but again, this is something I have used for years. AutoFetch is not the root cause here. The problem is the time it takes to perform each auto-fetch and the amount of CPU it consumes.

@ldennington I would love to know if we can provide more info on this to move forward with the investigation.

@ldennington I had to look a bit deeper, since this is such a bad experience for me.

Some more info:

- From all the traces I gathered, it seems like no caching is used. Every time remote access is needed, the OAuth flow is invoked (with OAuth enabled; I am using Azure DevOps repos).

- Checking the code, it does seem like a cache is consulted for PATs but not for OAuth? https://github.com/GitCredentialManager/git-credential-manager/blob/02204ac1ffb78a7a361d57b8f9acd03e00de79aa/src/shared/Microsoft.AzureRepos/AzureReposHostProvider.cs#L76-L111

Specifically, it looks like PAT is the only method for which `AzureReposHostProvider` consults a cache.

Am I reading this right? This is 100% aligned with the fact that this problem started when I moved from PATs (which were bad, but in a different way) to OAuth.

Thanks!

Hi. I'm also having this issue on macOS Ventura running on an M1. Sourcetree is indeed configured to do periodic polling, but the issue is that the processes that end up being launched run at 100% CPU for much longer than before. Most of our open repos are indeed in Azure DevOps, configured with PATs. Thanks

It's curious that this only affects Azure Repos in OAuth mode (not PAT mode). This could indicate a concurrency issue with the MSAL Cache Synchronization library. For those experiencing this problem, the workaround is to switch to PAT mode as we continue to investigate.
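This sketch assumes your GCM version exposes the Azure Repos credential type as the Git setting `credential.azreposCredentialType`. GCM's configuration reference documents that setting, with the `GCM_AZREPOS_CREDENTIALTYPE` environment variable as an alternative. Under that assumption, switching to PAT mode might look like the following. Check the configuration docs for your installed version before relying on it.

```shell
#!/bin/sh
# Ask GCM's Azure Repos provider to hand out PATs instead of OAuth tokens.
# Assumption: the installed GCM version supports credential.azreposCredentialType.
git config --global credential.azreposCredentialType pat

# Print the value now stored in the global Git config.
git config --global --get credential.azreposCredentialType
```

To go back to OAuth later, set the value to `oauth`, or remove it with `git config --global --unset credential.azreposCredentialType`.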

I got annoyed enough at this that I created a Python script to automatically kill long-running git-credential-manager instances.

> automatically kill long running git-credential-manager instances

Mmm, I would do that if I thought it would help; those long-running processes are doing something. So you kill them, and the Git action that follows doesn't fail? It is able to use the stored OAuth credentials?

@ldennington

> as we continue to investigate.

Thanks. I think my last reply about the cache not being used is not correct. I do see the cache being used in the code. For some reason I did not see it being used in the traces, so I will have to reproduce it. https://github.com/git-ecosystem/git-credential-manager/blame/65e5a883bacd1e3e54fc40726812237a1066c8f4/src/shared/Microsoft.AzureRepos/AzureReposHostProvider.cs#L236

Hi. From the point of view of someone using git-credential-manager from Sourcetree with some 20 open repos on Azure DevOps (HTTPS auth), even a 32 GB MacBook Pro M1 basically freezes for several minutes. This is due to the high number of processes launched, their high CPU usage, and the time they take to complete. This probably happens whenever Sourcetree decides to refresh something, but it wasn't an issue when we were using SSH for other providers like GitLab. Thanks

>> automatically kill long running git-credential-manager instances
>
> Mmm, I would do that if I thought it would help; those long-running processes are doing something. So you kill them, and the Git action that follows doesn't fail? It is able to use the stored OAuth credentials?

I kill them and nothing bad happens, because they've probably been launched by background git fetches, which can just be redone at some other point. Like in the original screenshot, I can see about 6 GCM instances running and apparently doing nothing, and nothing complains when I kill them. The script only kills them once they've been running for at least 5 minutes, so if they haven't finished by then, they've already failed. For comparison, the Linux Git repo is 1.5 GB, and on my connection that wouldn't take more than 1 minute to download.

Besides, this is just a dirty workaround so I don't have to manually kill those runaway instances, not a permanent fix.
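The Python script mentioned above is not reproduced in this thread. The following is a minimal shell sketch of the same idea, not that script. It assumes the 5-minute threshold described above and parses the `etime` column printed by `ps`, whose format is `[[dd-]hh:]mm:ss`. It then sends a plain `kill` (SIGTERM) to GCM processes older than the limit. Both function names are hypothetical. Like the original, this is a workaround for the symptom, not a fix.

```shell
#!/bin/sh
# Hypothetical helpers: stop GCM processes that have been running too long.

# Convert a ps "etime" value ([[dd-]hh:]mm:ss) to whole seconds.
etime_to_seconds() {
  printf '%s\n' "$1" | awk '
    {
      days = 0; rest = $0
      if (index(rest, "-") > 0) {        # optional "dd-" day prefix
        split(rest, d, "-"); days = d[1]; rest = d[2]
      }
      n = split(rest, p, ":")
      if (n == 2) secs = p[1] * 60 + p[2]
      else if (n == 3) secs = (p[1] * 60 + p[2]) * 60 + p[3]
      else exit 1                        # unexpected format: fail
      print days * 86400 + secs
    }'
}

# Send SIGTERM to every GCM process older than $1 seconds (default: 300).
kill_stale_gcm() {
  limit=${1:-300}
  for pid in $(pgrep -f git-credential-manager); do
    et=$(ps -o etime= -p "$pid" | tr -d ' ')
    [ -n "$et" ] || continue             # process already exited
    secs=$(etime_to_seconds "$et") || continue
    if [ "$secs" -ge "$limit" ]; then
      echo "killing pid $pid (running for $et)"
      kill "$pid"
    fi
  done
}
```

For example, `kill_stale_gcm 300` applies the 5-minute threshold. It sends SIGTERM, while `pkill -9` sends SIGKILL, which the process cannot handle.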

You were more patient... I just run `pkill -9 git-credential-manager` whenever the machine starts crawling for no apparent reason...

These also drain the battery!

Any news on this front? Just to reinforce: opening Git GUI clients sometimes launches 80 git-credential-manager processes and simply stops all other activity. It also happens once a day when the client decides it's time to fetch changes.

Please can you try installing the latest version of GCM, v2.1.2, which includes some changes to improve performance.

With this latest version, you can also set the Git config variable `credential.trace` to capture trace information about what GCM is doing at the point it hangs, the next time it occurs.

After installing the latest GCM, please can you run the following, reproduce the issue, and then send/attach a copy of the log file:

```shell
git config --global credential.trace /tmp/gcm.log
```

Closing - this should be resolved on newer versions of GCM.

Yup, I can confirm that this is resolved, as I haven't noticed the issue in the past few months (and I haven't had to run my script). Thanks for the fix!