Compute perplexity over prompt

This is a prototype of computing perplexity over the prompt input. It feeds n_ctx - 1 tokens into the model as input and computes the softmax probability that the output logits assign to the next token. It's also pretty slow: since it runs one prediction at a time, the 7B model takes 20 minutes or so to get through wikitext2 on my 32-core machine.
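For reference, the quantity being computed is the standard one: each scored position contributes the negative log of the softmax probability assigned to the actual next token, and perplexity is the exponential of the mean of those values. A minimal C++ sketch, assuming one position's logits arrive as a `std::vector<float>` over the vocabulary (the function names are hypothetical, not llama.cpp's):

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Negative log-likelihood of token `target` under softmax(logits):
// log(sum_v exp(logit_v)) - logit_target. Subtracting the largest logit
// before exponentiating keeps exp() from overflowing.
double token_nll(const std::vector<float> &logits, int target) {
    assert(!logits.empty() && target >= 0 && target < (int) logits.size());
    const float max_logit = *std::max_element(logits.begin(), logits.end());
    double sum_exp = 0.0;
    for (float l : logits) {
        sum_exp += std::exp((double) l - max_logit);
    }
    return max_logit + std::log(sum_exp) - logits[target];
}

// Perplexity is exp of the mean negative log-likelihood over all scored tokens.
double perplexity_from_nlls(const std::vector<double> &nlls) {
    assert(!nlls.empty());
    double total = 0.0;
    for (double v : nlls) {
        total += v;
    }
    return std::exp(total / (double) nlls.size());
}
```

A model that spreads its probability uniformly over a vocabulary of V tokens scores a perplexity of exactly V, which makes a handy sanity check.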

Note: when doing prediction over large prompts, the default 10% expansion for the memory buffer is not sufficient - there is definitely a non-linear scaling factor in there somewhere.

Example:

- Download/extract: https://s3.amazonaws.com/research.metamind.io/wikitext/wikitext-2-raw-v1.zip?ref=salesforce-research

- Run:

  ```
  ./main --perplexity -m models/7B/ggml-model-q4_0.bin -f wiki.test.raw
  ```

- Output:

  ```
  perplexity: 13.5106 [114/114]
  ```

As a baseline, https://paperswithcode.com/sota/language-modelling-on-wikitext-2 shows fine-tuned GPT-2 at 15.17 and OPT-175B at 8.34. So 13.51 for the 7B model with a 512-token context limit seems like it might be in the ballpark.

However, I was most interested in comparing against this article: https://nolanoorg.substack.com/p/int-4-llama-is-not-enough-int-3-and, where they compare 4-bit to GPTQ quantization. My results are way off from theirs, but reading closer, they might have used the original WikiText?

I also haven't tried running on the unquantized weights yet.

Got results for 7B, ctx=1024: perplexity: 11.4921 [57/57], so that seems promising.

This is indeed very CPU-time-consuming. I had it running for 25 minutes and only got this far:

perplexity: 12.5934 [39/649] for 7B q4_0 ctx=512 (everything default)

> Note: when doing prediction over large prompts, the default 10% expansion for the memory buffer is not sufficient - there is definitely a non-linear scaling factor in there somewhere.

This is likely related to https://github.com/ggerganov/llama.cpp/pull/213

Very useful work. I think this could be made significantly faster if the eval method had an option to return the logits for the past tokens as well:

https://github.com/ggerganov/llama.cpp/blob/7392f1cd2cef4dfed41f4db7c4160ab86c0dfcd9/main.cpp#L733-L735

@glinscott How is it you're seeing [x/114]? With the default context size (512), I'm seeing [x/649]. But from the code, that should only depend on `tokens.size() / params.n_ctx`, and both of those should be constant across machines. Were you using a different dataset or something?

Anyway I ran it on the 7B FP16 model before your most recent commits (at commit e94bd9c7b90541ac82a7ccc161914a87e61f73a0), with

```
./main -m ./models/7B/ggml-model-f16.bin -n 128 -t 8 --perplexity -f ./wikitext-2-raw/wiki.test.raw
```

and got

```
perplexity: 10.4625 [649/649]
```

> Very useful work. I think this could be made significantly faster if the eval method had an option to return the logits for the past tokens as well:

Yes, thanks! I was prototyping this last night and just got it working (I think).

Current output with:

```
$ ./main --perplexity -m models/7B/ggml-model-q4_0.bin -f wiki.test.raw
...
perplexity: 13.0231 [39/114]
```

So it's consistent with the old version, but much more accurate (256x more tokens scored per 512-token window).
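With logits available for every position, one pass over a window can score many tokens instead of just the last one. A sketch, assuming a flat row-major logits buffer (row j predicts token j + 1) and assuming only the second half of each window is scored, which is consistent with the "256 samples per evaluation" mentioned later in this thread; the exact indexing and the names `score_window`/`WindowScore` are hypothetical:

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct WindowScore {
    double nll_sum = 0.0; // summed negative log-likelihood of the scored tokens
    int count = 0;        // how many tokens were scored
};

// Hypothetical helper. `logits` is row-major: row j holds n_vocab logits
// computed after seeing tokens[0..j], i.e. the prediction for tokens[j + 1].
// Only the second half of the window is scored, so every scored token has at
// least n_ctx / 2 tokens of context in front of it.
WindowScore score_window(const std::vector<int> &tokens,
                         const std::vector<float> &logits, int n_vocab) {
    const int n_ctx = (int) tokens.size();
    assert(n_ctx >= 2 && n_vocab > 0);
    assert(logits.size() == (std::size_t) n_ctx * n_vocab);
    WindowScore score;
    for (int j = n_ctx / 2; j < n_ctx - 1; ++j) {
        const float *row = logits.data() + (std::size_t) j * n_vocab;
        const float max_logit = *std::max_element(row, row + n_vocab);
        double sum_exp = 0.0;
        for (int v = 0; v < n_vocab; ++v) {
            sum_exp += std::exp((double) row[v] - max_logit);
        }
        // NLL of the token that actually came next: log-sum-exp minus its logit.
        score.nll_sum += max_logit + std::log(sum_exp) - row[tokens[j + 1]];
        ++score.count;
    }
    return score;
}
```

With this indexing a 512-token window scores 255 tokens (the last position has no next token inside the window). Summing `nll_sum` and `count` over all windows and taking exp(total_nll / total_count) gives the overall perplexity.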

> Anyway I ran it on the 7B FP16 model before your most recent commits (at commit e94bd9c), with
>
> ```
> ./main -m ./models/7B/ggml-model-f16.bin -n 128 -t 8 --perplexity -f ./wikitext-2-raw/wiki.test.raw
> ```
>
> and got
>
> ```
> perplexity: 10.4625 [649/649]
> ```

I did exactly the same :see_no_evil:, but with a slightly different batch size:

```
$ ./main --perplexity -t 8 -c 512 -b 32 -f wikitext-2-raw/wiki.test.raw -m models/7B/ggml-model-f16.bin
perplexity: 10.4624 [649/649]
```

> @glinscott How is it you're seeing [x/114]? With the default context size (512), I'm seeing [x/649]. But from the code, that should only depend on `tokens.size() / params.n_ctx`, and both of those should be constant across machines. Were you using a different dataset or something?

@glinscott can you check that your wikitext file is correct?

> @glinscott How is it you're seeing [x/114]? With the default context size (512), I'm seeing [x/649]. But from the code, that should only depend on `tokens.size() / params.n_ctx`, and both of those should be constant across machines. Were you using a different dataset or something?

There are a couple of possibilities. I get this error when tokenizing:

```
failed to tokenize string at 1067123!
```

So, I assume it truncates the string there? Do other folks not see that?

The other possibility is that my dataset is wrong; can someone double-check? It's 1290590 bytes.

```
$ sha256sum wiki.test.raw
173c87a53759e0201f33e0ccf978e510c2042d7f2cb78229d9a50d79b9e7dd08 wiki.test.raw
```

```
$ sha256sum wikitext-2-raw/wiki.test.raw
173c87a53759e0201f33e0ccf978e510c2042d7f2cb78229d9a50d79b9e7dd08 wikitext-2-raw/wiki.test.raw
```

Hmm, so the file hash checks out.

One thing to note: I don't think `params.n_batch` has any effect yet, though adding support for it shouldn't be too hard.
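One way `n_batch` support could look, as a sketch: split each window into consecutive slices of at most `n_batch` tokens and evaluate them in order. This assumes the eval call can be invoked repeatedly on consecutive slices, with the number of already-processed tokens passed as `n_past`; the helper name is hypothetical. If logits for every position are needed, each slice's logits would also have to be collected in order.

```cpp
#include <algorithm>
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical helper: split a window of n_ctx tokens into consecutive
// (start, count) slices of at most n_batch tokens. Each slice would be fed to
// the model in turn, with `start` tokens already in the KV cache (n_past).
std::vector<std::pair<int, int>> batch_slices(int n_ctx, int n_batch) {
    assert(n_ctx > 0 && n_batch > 0);
    std::vector<std::pair<int, int>> slices;
    for (int start = 0; start < n_ctx; start += n_batch) {
        slices.emplace_back(start, std::min(n_batch, n_ctx - start));
    }
    return slices;
}
```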

Can someone try adding this debugging `printf()`?

```diff
--- a/main.cpp
+++ b/main.cpp
@@ -776,6 +776,7 @@ void perplexity(const gpt_vocab &vocab, const llama_model &model, const gpt_para
     int count = 0;
     double nll = 0.0;
     int seq_count = tokens.size() / params.n_ctx;
+    printf("params.prompt.size() = %zu, tokens.size() = %zu, params.n_ctx = %d, seq_count = %d\n", params.prompt.size(), tokens.size(), params.n_ctx, seq_count);
```

I get this:

```
params.prompt.size() = 1290589, tokens.size() = 58773, params.n_ctx = 512, seq_count = 114
```

You should check your model files: https://github.com/ggerganov/llama.cpp/issues/238

> I get this:
>
> ```
> params.prompt.size() = 1290589, tokens.size() = 58773, params.n_ctx = 512, seq_count = 114
> ```

I get this:

```
params.prompt.size() = 1290589, tokens.size() = 332762, params.n_ctx = 512, seq_count = 649
```

Same results as @Green-Sky here. @glinscott I suspect you need to rebuild your models; you can check against the md5 hashes listed in https://github.com/ggerganov/llama.cpp/issues/238. I don't see the "failed to tokenize string" message you report, either. I'm guessing you did the conversion before https://github.com/ggerganov/llama.cpp/pull/79.
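The [x/114] vs. [x/649] mismatch comes down to integer division on the token count: from the same 1290589-character prompt, 332762 tokens split into 649 full 512-token chunks (remainder 474), while the 58773 tokens produced with the broken model file give only 114 (remainder 405), roughly 5.7x fewer tokens. A small C++ sketch mirroring the `seq_count` line from the debug diff (the helper name is hypothetical):

```cpp
#include <cassert>
#include <cstddef>

// Number of full n_ctx-token chunks, mirroring
// `seq_count = tokens.size() / params.n_ctx`; integer division drops the
// trailing partial chunk, so those leftover tokens are never scored.
int chunk_count(std::size_t n_tokens, int n_ctx) {
    assert(n_ctx > 0);
    return (int) (n_tokens / (std::size_t) n_ctx);
}
```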

Sure enough, my model was busted! Ok, I see consistent results now :).

```
params.prompt.size() = 1290589, tokens.size() = 332762, params.n_ctx = 512, seq_count = 649
perplexity: 16.0483 [16/649] 22507 ms
```

Now, at 22 seconds per inference pass, a full wikitext-2 run takes ~4 hours. So it would be great to see if we can get representative results from a much smaller subset.
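The ~4 hour estimate is just the chunk count times the per-pass time, taking the 22507 ms measurement above as typical:

$$
649 \times 22.5\,\mathrm{s} \approx 14{,}600\,\mathrm{s} \approx 4.1\,\mathrm{h}
$$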

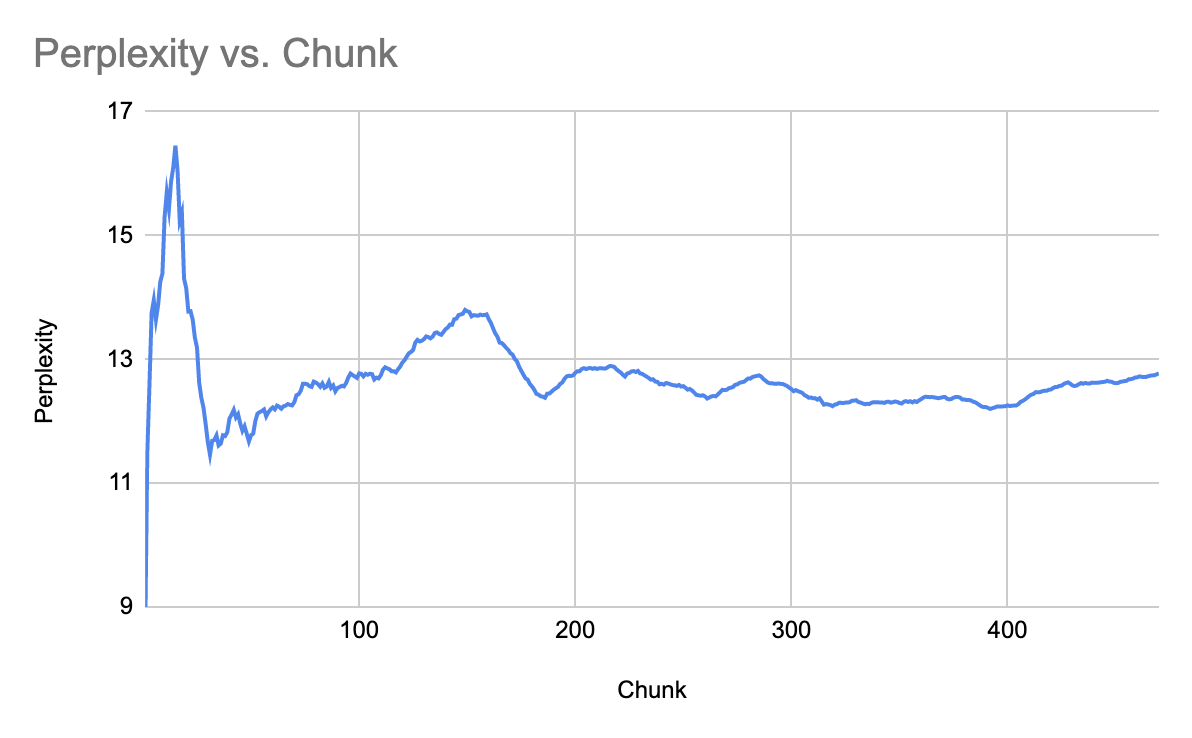

I'll do a run with:

```
$ ./main --perplexity -m models/7B/ggml-model-q4_0.bin -f wiki.test.raw
```

and log all the perplexities along the way. Once they start to converge, that's probably a good sign we can cut the dataset off at that point. Hopefully the new method converges much faster, but we will see!

```
perplexity: 8.4400 [1/649] 24456 ms
perplexity: 11.4887 [2/649] 22491 ms
perplexity: 12.5905 [3/649] 22476 ms
perplexity: 13.7533 [4/649] 22608 ms
perplexity: 13.9558 [5/649] 22577 ms
perplexity: 13.6425 [6/649] 22604 ms
perplexity: 13.8768 [7/649] 22590 ms
```

I asked GPT-4 for some stats advice, and it recommended:

> If you only care about accuracy down to two decimal digits, then you can stop sampling when your confidence interval has a width less than or equal to 0.01. This means that you need at least n = 38416 samples to achieve an accuracy of two decimal digits with 95% confidence.

We will see :). That's [150/649] in our setup (256 samples per evaluation). There are some big assumptions in there about the data being uniformly distributed, which probably don't hold for wikitext2, but it's probably still a reasonable estimate. The most recent results do look like they are converging nicely:

```
perplexity: 12.5374 [90/649] 22718 ms
perplexity: 12.5553 [91/649] 22714 ms
perplexity: 12.5719 [92/649] 22715 ms
perplexity: 12.5630 [93/649] 22715 ms
perplexity: 12.6198 [94/649] 22766 ms
perplexity: 12.7071 [95/649] 22743 ms
perplexity: 12.7707 [96/649] 22755 ms
perplexity: 12.7453 [97/649] 22706 ms
perplexity: 12.7235 [98/649] 22700 ms
```
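The 38416 figure in the quoted advice matches the textbook sample-size formula for estimating a proportion at 95% confidence (z = 1.96), reading the 0.01 interval width as a half-width of E = 0.005 and using the worst-case variance p = 0.5:

$$
n = \left(\frac{z}{E}\right)^2 p\,(1 - p) = \left(\frac{1.96}{0.005}\right)^2 \cdot 0.25 = 392^2 \cdot 0.25 = 38416, \qquad \frac{38416}{256} \approx 150 \text{ chunks}
$$

That formula assumes independent Bernoulli (0/1) samples, and per-token log-likelihoods on wikitext2 are neither bounded to [0, 1] nor independent, so it is a rough guide at best.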

I merged in https://github.com/ggerganov/llama.cpp/pull/252 locally and am seeing a much better score: perplexity: 5.8149 [655/655]! That's a huge improvement, and much closer to the number reported in the "Int-4 is not enough" post.

This was done using the same model (7B FP16) and settings I used above (except with the model re-built to use the new tokenizer, of course), and without 91d71fe0c109227debe86536899caf2b5b2235c3, so the numbers should be directly comparable to the ones I got above (10.4625).

I'll re-run both scenarios using the new logic in this branch but I expect very similar results. (Edit: yeah, pretty similar: 11.4675 before fixing the tokenizer, 5.9565 after.)

@bakkot - wow, that is an incredible delta. Interesting, so the tokens must be subtly off with the existing tokenizer?

Not really subtly, as reported in e.g. https://github.com/ggerganov/llama.cpp/issues/167. Honestly it's impressive that it does as well as it does with the broken tokenizer it's currently using.

OK, well, for the 7B model at 4-bit quantization, the perplexity appears to be 12.2-12.9 or so, doing a bit of a random walk. I'm going to stop at 470 since I'm excited to try out #252 :).

@glinscott Sidebar: the perplexity scores for chunks in wikitext aren't independent, because some articles are easier than others and there are multiple chunks per article. (So, e.g., you might have ten chunks in a row from a really difficult article, each of which will raise the perplexity. With independent chunks, that sort of consistent change in one direction would happen only very rarely.) That means the perplexity isn't going to converge as fast as it would with independent chunks. So you might want to randomize the order in which chunks are processed, as in

```cpp
// Requires <algorithm> (std::shuffle), <numeric> (std::iota) and <random> (std::mt19937).

// Different parts of the prompt are likely to vary in difficulty.
// For example, maybe the first half is easy to predict and the second half is hard.
// That will prevent scores from converging until the whole run finishes.
// So we randomize the order in which we consume each part of the prompt,
// so that the score converges towards the real value as rapidly as possible.
std::mt19937 gen(0x67676d6c); // use a fixed seed so results are reproducible; this seed is `ggml` in hex
std::vector<int> indexes(seq_count);
std::iota(indexes.begin(), indexes.end(), 0); // chunk indices 0 .. seq_count - 1
std::shuffle(indexes.begin(), indexes.end(), gen);
for (int i : indexes) {
```

instead of

```cpp
for (int i = 0; i < seq_count; ++i) {
```

I haven't run this code, nor tested if it actually makes a difference in how fast the scores converge, but I expect it or something like it should work.

Results for 4-bit quantization are looking great so far with #252 merged in as well!

```
perplexity: 6.5217 [62/655]
perplexity: 6.5569 [63/655]
perplexity: 6.5744 [64/655]
perplexity: 6.6235 [65/655]
perplexity: 6.6335 [66/655]
perplexity: 6.6522 [67/655]
```

I captured the perplexity for each chunk separately (using 7B FP16, with #252 merged in).

From there I looked into how good the measurement would be if you used fewer chunks, assuming you consume the chunks in a random order. Keep in mind there are 655 chunks in total. The (empirical) 90% confidence intervals for the difference from the final perplexity (for my specific conditions) after a given number of chunks are:

- 10 chunks: ±1.0730
- 20 chunks: ±0.7697
- 50 chunks: ±0.4812
- 100 chunks: ±0.3279
- 150 chunks: ±0.2549
- 200 chunks: ±0.2104
- 400 chunks: ±0.1122

Determining whether you can get away with fewer chunks will depend on the size of the effect you're looking at - e.g. the fixed tokenizer is obviously better after only 10 chunks, but confirming the presence of smaller effects (like from improved quantization strategies) will require significantly more.

Code and data if you want to reproduce my results:

```javascript
// Per-chunk perplexities (7B FP16, with #252 merged in), one value per chunk.
let data = [
4.2337, 5.2897, 7.7770, 8.3287, 6.8390, 6.0943, 7.7364, 7.2585, 10.1792, 9.7338, 9.8226, 7.5937, 6.3308, 8.0002, 11.8844, 3.3917, 5.5408, 6.1756, 2.6730, 6.4901, 4.9989, 3.6592, 5.7682, 4.5201, 6.2160, 3.2217, 2.8567, 3.7494, 3.7945, 2.5883, 4.7733, 6.2793, 4.0443, 6.6725, 6.4370, 7.0745, 5.6611, 6.0521, 7.0657, 8.0699, 6.0984, 7.5405, 4.3730, 8.8372, 5.9219, 4.7395, 6.8133, 4.7350, 5.8450, 4.1329, 5.5502, 5.2692, 8.6583, 4.9914, 4.6868, 7.5662, 6.9880, 6.9894, 6.8970, 8.8414, 5.4384, 10.6731, 8.1942, 6.8570, 9.3563, 6.5627, 7.2757, 7.0825, 7.8798, 8.5397, 7.7570, 8.8057, 12.2151, 6.5003, 7.2832, 7.1812, 7.1461, 5.2082, 8.8034, 5.7541, 7.2228, 6.5905, 3.2219, 4.8862, 5.2106, 4.6112, 2.4795, 4.2595, 4.5617, 4.9153, 8.4723, 5.5482, 6.1128, 5.8297, 9.2492, 6.0519, 5.5583, 5.5216, 4.9173, 5.9582, 8.9768, 5.6014, 8.5170, 6.8875, 6.0951, 8.1004, 6.0354, 7.6947, 5.6168, 5.7427, 9.1345, 8.8376, 6.3986, 5.7434, 6.8633, 5.2115, 6.7495, 10.5116, 9.3441, 11.9780, 8.2422, 10.0067, 12.7040, 8.8324, 5.2965, 13.4408, 12.8634, 11.5266, 4.7939, 7.5777, 5.9655, 5.5261, 4.9038, 7.8649, 5.9049, 5.1198, 5.4877, 4.3806, 5.0965, 5.8914, 3.3561, 5.8583, 3.2323, 3.9742, 5.1125, 4.7900, 6.7743, 6.3185, 5.5245, 5.6687, 6.5638, 4.9464, 4.2488, 5.0675, 7.3592, 5.5228, 9.4368, 6.9210, 6.9797, 6.6831, 8.4606, 3.0650, 4.6591, 3.4063, 2.7900, 3.0231, 2.3005, 2.6896, 4.1826, 4.5053, 2.9034, 3.7563, 3.7867, 2.5532, 3.2104, 4.2681, 3.3105, 3.0264, 3.5613, 4.4102, 3.0667, 3.3960, 3.8231, 5.6702, 4.6170, 6.0197, 7.0675, 5.1326, 10.0308, 5.9919, 11.3845, 9.7865, 9.9764, 8.3787, 11.7139, 9.7893, 11.7055, 9.7135, 6.5766, 7.0163, 5.0125, 11.0156, 7.5948, 5.6769, 8.4561, 7.5776, 5.2701, 7.9725, 6.8910, 7.1792, 8.6991, 7.6900, 8.6591, 6.5381, 6.6024, 9.9117, 11.4651, 9.6110, 6.0322, 5.3760, 5.0621, 5.6246, 4.3323, 4.6806, 5.2827, 12.8015, 8.1204, 7.3919, 7.6432, 5.4063, 11.2815, 3.9873, 3.3158, 3.5056, 2.8041, 4.7094, 4.1956, 6.7119, 3.4211, 4.0789, 6.4766, 6.9613, 5.6383, 3.8569, 5.3274, 3.8636, 3.7660,
4.4742, 5.4093, 7.2289, 4.4956, 5.2353, 4.0107, 4.7802, 3.7488, 2.8184, 3.5604, 4.2093, 5.3541, 4.1740, 4.9184, 4.6309, 4.6749, 2.1799, 5.7219, 5.4113, 4.3672, 8.6913, 5.3731, 6.1470, 8.3038, 6.8235, 5.9549, 6.5837, 8.5758, 7.7327, 12.1389, 9.3534, 9.1320, 6.7431, 9.3347, 7.7855, 11.8079, 8.6349, 8.8769, 11.3166, 5.8538, 7.8667, 4.0560, 2.8534, 2.9460, 2.9278, 3.1373, 6.6050, 5.6842, 7.4505, 5.5637, 6.8299, 5.2548, 3.4957, 5.9363, 4.0149, 3.8561, 3.8802, 4.9512, 3.1070, 6.6027, 6.8806, 2.6353, 4.4386, 4.2173, 6.5665, 4.3896, 5.3577, 2.5667, 4.4052, 2.4796, 1.9780, 10.9267, 11.2068, 7.4261, 4.6996, 4.0354, 5.0048, 10.1574, 5.8825, 6.5496, 7.2039, 8.0570, 6.7768, 11.5410, 4.9996, 8.5831, 4.3073, 4.1795, 7.2409, 5.1631, 5.6205, 4.3670, 4.5893, 9.2200, 6.8801, 7.6852, 5.9022, 6.0188, 5.0642, 7.4118, 7.1476, 6.6982, 4.8392, 6.1443, 5.8701, 4.1545, 5.8907, 7.9460, 7.0058, 4.7597, 10.0613, 6.8521, 4.7857, 5.7337, 8.9369, 11.5146, 8.5051, 8.0402, 6.3870, 9.9484, 5.0987, 6.2364, 6.4576, 4.2600, 7.9318, 7.8497, 5.3683, 5.9516, 8.9665, 4.4904, 6.9869, 8.5304, 3.6020, 4.7592, 4.3036, 5.6554, 5.7098, 5.5246, 5.7023, 5.8297, 4.6599, 4.2254, 3.7789, 3.5960, 4.5255, 5.2527, 6.9731, 5.4062, 3.6407, 9.3482, 7.5259, 9.8064, 5.9531, 6.4362, 6.2962, 6.7262, 9.0811, 3.0848, 4.7268, 5.7033, 6.5912, 12.8079, 12.4113, 12.7754, 17.2818, 12.5417, 9.9668, 8.6653, 10.1074, 13.5201, 7.5909, 9.4968, 10.9255, 13.2899, 7.9184, 9.7576, 12.5443, 10.9062, 9.3986, 8.1765, 10.7153, 8.5812, 10.7370, 16.0474, 7.9101, 5.7778, 4.5653, 6.4762, 7.2687, 11.9263, 10.3103, 4.8934, 5.7261, 4.2609, 5.4918, 6.6898, 6.2934, 5.3325, 7.2188, 7.5185, 8.2033, 5.1273, 6.5011, 4.5670, 2.2386, 3.2372, 3.9893, 6.5170, 8.6117, 7.0107, 5.1495, 6.3412, 11.4701, 4.9500, 5.4811, 8.1177, 5.5823, 4.9553, 3.3866, 6.1404, 5.9408, 7.0779, 6.2677, 4.2244, 8.4411, 4.0633, 6.6431, 3.7769, 6.8590, 3.4788, 5.5537, 9.3246, 8.5652, 6.9801, 4.2857, 4.3862, 7.0454, 5.1355, 3.8384, 5.9033, 5.0303, 4.1490, 4.9914, 4.7928, 3.8402, 4.8503,
5.2423, 5.7663, 4.4227, 3.8162, 5.2343, 4.2511, 2.8094, 3.4875, 6.0975, 5.7161, 2.9156, 7.1691, 6.3762, 3.6819, 4.2773, 5.7032, 7.9792, 8.8110, 8.0352, 7.0775, 10.1292, 3.7952, 5.5346, 6.5626, 5.8085, 7.7367, 7.3946, 6.6608, 7.5490, 6.3721, 9.8001, 7.8648, 6.5025, 7.0076, 3.8873, 6.2564, 3.9161, 5.4713, 8.9538, 7.3572, 5.1763, 7.2523, 3.7586, 4.9993, 9.2871, 6.6082, 8.3411, 6.1726, 6.6453, 6.9063, 6.6387, 5.0784, 6.4587, 4.1723, 3.9443, 6.0791, 4.6138, 4.4106, 4.9176, 4.3316, 4.9980, 4.5371, 5.7626, 7.3694, 4.2320, 5.8014, 5.9095, 6.1267, 4.9075, 5.7717, 8.9320, 7.2476, 5.8910, 4.9010, 6.3294, 5.2988, 7.7972, 6.2766, 6.5831, 6.0055, 4.2898, 5.6208, 5.9260, 5.2393, 5.0046, 6.2955, 3.2518, 4.2156, 5.3862, 6.4839, 6.1187, 2.9040, 3.1042, 6.2950, 9.5657, 9.8978, 8.0166, 7.3498, 5.3361, 4.3502, 6.6131, 4.7414, 8.2340, 4.8954, 4.4713, 7.3732, 5.7101, 5.1141, 6.5039, 7.8757, 6.4675, 8.4179, 7.3042, 5.0399, 4.3175, 6.4821, 8.5142, 5.0135, 7.7970, 4.1496, 3.6323, 2.8987, 7.8440, 3.2591, 3.6729, 3.4526, 1.4961, 2.9882, 5.0225, 6.9797, 6.2451, 6.0565, 5.2908, 7.4791, 6.0146, 5.6742, 8.2883, 10.7090, 10.6945, 5.1382, 8.5528, 6.3640, 4.1532, 4.1070, 7.6952, 4.2944, 6.5832, 6.0564, 11.9188, 7.4791, 6.7621, 4.8915, 9.0851, 3.8716, 6.5621, 6.0976, 8.9491, 10.5537, 6.6961, 9.0845, 3.0358, 5.5621,
];

// Fold one more chunk into a running score. The running score is the geometric
// mean of the chunk perplexities (exp of the mean log), which equals the overall
// perplexity as long as every chunk scores the same number of tokens.
function combinePerp(acc, a, len) {
  let avg = Math.log(acc);
  let sum = avg * len;
  sum += Math.log(a);
  avg = sum / (len + 1);
  return Math.exp(avg);
}

// Running perplexity after each chunk: element i covers chunks 0..i.
function chunksToRunningScores(chunks) {
  let intermediatePerpScores = [chunks[0]];
  for (let i = 1; i < chunks.length; ++i) {
    intermediatePerpScores[i] = combinePerp(intermediatePerpScores[i - 1], chunks[i], i);
  }
  return intermediatePerpScores;
}

// Fisher-Yates shuffle of a copy, so `data` itself is left untouched.
function fyShuffle(data) {
  let out = [...data];
  let i = out.length;
  while (--i > 0) {
    let idx = Math.floor(Math.random() * (i + 1));
    [out[idx], out[i]] = [out[i], out[idx]];
  }
  return out;
}

// Simulate many random chunk orders. Math.random() is unseeded, so the
// resulting intervals vary slightly from run to run.
let test_N = 20000;
let Ns = data.map(() => []);
for (let i = 0; i < test_N; ++i) {
  let randomized = fyShuffle(data);
  let running = chunksToRunningScores(randomized);
  for (let N = 1; N < data.length; ++N) {
    // 5.9565 is the full-run perplexity; running[N] covers the first N + 1 chunks.
    Ns[N].push(Math.abs(5.9565 - running[N]));
  }
}

// For each N, the 90th percentile of |error| is the empirical 90% interval half-width.
Ns.forEach(x => x.sort((a, b) => a - b));
let idx = Math.floor(.9 * test_N); // 90% CI
for (let N = 1; N < Ns.length; ++N) {
  console.log(N, Ns[N][idx].toFixed(4));
}
```

Well, good news: the 4-bit quantization looks pretty good (although definitely not matching the f16 results)! I got this result last night from the 4-bit quantized weights, after merging in #252 and reconverting everything:

```
./main --perplexity -m models/7B/ggml-model-q4_0.bin -f wiki.test.raw
...
perplexity: 6.5949 [655/655]
```

And, just to validate, all the perplexities match after merging in master this morning. I'm going to do an f16 run now to compare on my machine. So far the f16 run matches @bakkot's very closely, so I don't expect any surprises, but it's good to double-check.

> Determining whether you can get away with fewer chunks will depend on the size of the effect you're looking at - e.g. the fixed tokenizer is obviously better after only 10 chunks, but confirming the presence of smaller effects (like from improved quantization strategies) will require significantly more.

@bakkot thank you for running this experiment! OK, so it looks like for smaller effects the full run might be required, and it's the safest option. Measuring the perplexity on one chunk is pretty fast, though, and could be a great way to check that changes meant to be non-functional really don't alter the output. Maybe that's the best use for a fast mode: a test that nothing is changing.

@glinscott it would be very interesting to see the impact of `--memory_f16`.

@Green-Sky cool, will run that.

Okay, here are the latest results (by the way, I had copied the wrong perplexity in my comment above: it should be 6.5949 [655/655] for 4-bit quantized and 5.9565 for 16-bit float). All arguments are set to their defaults (the major one being the 512-token context window).

- 7B 4-bit: 6.5949 [655/655]
- 7B f16: 5.9565 [655/655]

So there is a clear perplexity hit from the current 4-bit quantization for the smaller model. The good news is that the gap is quite consistent from chunk to chunk!

Raw data (chunk index, running 4-bit perplexity, running f16 perplexity):

0,4.5970,4.2341 1,5.1807,4.7324 2,6.0382,5.5847 3,6.7327,6.1713 4,6.8538,6.2994 5,6.8503,6.2648 6,7.0369,6.4564 7,7.1289,6.5515 8,7.5324,6.8803 9,7.7853,7.1232 10,8.0535,7.3344 11,8.0808,7.3556 12,7.9857,7.2712 13,8.0870,7.3211 14,8.3566,7.5614 15,7.9362,7.1918 16,7.8087,7.0823 17,7.7664,7.0286 18,7.3693,6.6799 19,7.3458,6.6703 20,7.2453,6.5793 21,7.0686,6.4062 22,7.0277,6.3770 23,6.9379,6.2862 24,6.9391,6.2834 25,6.7612,6.1240 26,6.5683,5.9535 27,6.4599,5.8560 28,6.3715,5.7690 29,6.1979,5.6169 30,6.1666,5.5875 31,6.1827,5.6079 32,6.1160,5.5527 33,6.1558,5.5827 34,6.1829,5.6055 35,6.2309,5.6419 36,6.2414,5.6424 37,6.2551,5.6528 38,6.2901,5.6852 39,6.3436,5.7352 40,6.3492,5.7438 41,6.3893,5.7812 42,6.3411,5.7437 43,6.3969,5.8003 44,6.4019,5.8029 45,6.3742,5.7775 46,6.3975,5.7978 47,6.3648,5.7734 48,6.3730,5.7748 49,6.3363,5.7363 50,6.3303,5.7326 51,6.3210,5.7233 52,6.3650,5.7682 53,6.3437,5.7527 54,6.3204,5.7313 55,6.3612,5.7598 56,6.3838,5.7794 57,6.4065,5.7984 58,6.4192,5.8155 59,6.4684,5.8562 60,6.4571,5.8491 61,6.5217,5.9061 62,6.5569,5.9369 63,6.5744,5.9503 64,6.6235,5.9919 65,6.6335,6.0001 66,6.6522,6.0174 67,6.6653,6.0318 68,6.6945,6.0552 69,6.7271,6.0851 70,6.7512,6.1059 71,6.7821,6.1370 72,6.8491,6.1952 73,6.8546,6.1992 74,6.8694,6.2125 75,6.8854,6.2244 76,6.8988,6.2355 77,6.8823,6.2212 78,6.9115,6.2486 79,6.9034,6.2421 80,6.9262,6.2534 81,6.9320,6.2574 82,6.8747,6.2075 83,6.8602,6.1899 84,6.8500,6.1774 85,6.8266,6.1564 86,6.7643,6.0924 87,6.7348,6.0676 88,6.7131,6.0482 89,6.6957,6.0343 90,6.7257,6.0569 91,6.7208,6.0511 92,6.7225,6.0517 93,6.7210,6.0493 94,6.7507,6.0764 95,6.7466,6.0762 96,6.7399,6.0706 97,6.7318,6.0647 98,6.7172,6.0519 99,6.7159,6.0509 100,6.7406,6.0746 101,6.7349,6.0698 102,6.7577,6.0898 103,6.7638,6.0970 104,6.7641,6.0970 105,6.7813,6.1133 106,6.7818,6.1126 107,6.7953,6.1256 108,6.7869,6.1208 109,6.7854,6.1172 110,6.8098,6.1394 111,6.8302,6.1594 112,6.8315,6.1614 113,6.8278,6.1576 114,6.8360,6.1635 115,6.8284,6.1545 116,6.8331,6.1594 
117,6.8635,6.1874 118,6.8841,6.2088 119,6.9201,6.2429 120,6.9382,6.2573 121,6.9633,6.2814 122,7.0051,6.3175 123,7.0258,6.3346 124,7.0160,6.3255 125,7.0565,6.3635 126,7.0949,6.3988 127,7.1226,6.4283 128,7.1026,6.4137 129,7.1137,6.4219 130,7.1067,6.4183 131,7.0970,6.4111 132,7.0824,6.3981 133,7.0930,6.4080 134,7.0899,6.4041 135,7.0784,6.3936 136,7.0706,6.3865 137,7.0535,6.3691 138,7.0423,6.3588 139,7.0386,6.3554 140,7.0098,6.3267 141,7.0047,6.3232 142,6.9779,6.2936 143,6.9560,6.2736 144,6.9440,6.2647 145,6.9299,6.2532 146,6.9369,6.2566 147,6.9374,6.2570 148,6.9312,6.2518 149,6.9288,6.2477 150,6.9308,6.2498 151,6.9214,6.2402 152,6.9033,6.2245 153,6.8948,6.2162 154,6.9010,6.2230 155,6.8967,6.2182 156,6.9141,6.2348 157,6.9175,6.2389 158,6.9240,6.2433 159,6.9277,6.2459 160,6.9408,6.2577 161,6.9093,6.2302 162,6.8961,6.2191 163,6.8673,6.1963 164,6.8323,6.1664 165,6.8019,6.1400 166,6.7615,6.1040 167,6.7275,6.0743 168,6.7114,6.0609 169,6.6981,6.0504 170,6.6678,6.0244 171,6.6479,6.0079 172,6.6285,5.9919 173,6.5954,5.9626 174,6.5725,5.9415 175,6.5606,5.9304 176,6.5395,5.9109 177,6.5149,5.8887 178,6.4974,5.8722 179,6.4877,5.8628 180,6.4631,5.8419 181,6.4440,5.8245 182,6.4298,5.8111 183,6.4300,5.8103 184,6.4217,5.8031 185,6.4233,5.8043 186,6.4286,5.8104 187,6.4239,5.8066 188,6.4428,5.8234 189,6.4445,5.8242 190,6.4658,5.8447 191,6.4831,5.8604 192,6.5023,5.8766 193,6.5147,5.8874 194,6.5362,5.9082 195,6.5539,5.9234 196,6.5765,5.9439 197,6.5936,5.9587 198,6.5962,5.9616 199,6.6005,5.9665 200,6.5970,5.9613 201,6.6177,5.9795 202,6.6241,5.9865 203,6.6261,5.9850 204,6.6381,5.9951 205,6.6459,6.0019 206,6.6419,5.9981 207,6.6497,6.0063 208,6.6558,6.0103 209,6.6614,6.0154 210,6.6726,6.0259 211,6.6806,6.0328 212,6.6913,6.0431 213,6.6962,6.0453 214,6.7008,6.0478 215,6.7154,6.0616 216,6.7339,6.0795 217,6.7490,6.0922 218,6.7494,6.0920 219,6.7463,6.0885 220,6.7418,6.0834 221,6.7378,6.0813 222,6.7259,6.0720 223,6.7176,6.0650 224,6.7116,6.0613 225,6.7337,6.0813 226,6.7440,6.0891 227,6.7500,6.0943 
228,6.7575,6.1003 229,6.7529,6.0971 230,6.7705,6.1134 231,6.7561,6.1021 232,6.7375,6.0862 233,6.7204,6.0718 234,6.7045,6.0519 235,6.6970,6.0455 236,6.6857,6.0362 237,6.6891,6.0388 238,6.6720,6.0245 239,6.6603,6.0147 240,6.6643,6.0166 241,6.6687,6.0202 242,6.6663,6.0186 243,6.6539,6.0076 244,6.6516,6.0047 245,6.6383,5.9939 246,6.6252,5.9826 247,6.6175,5.9756 248,6.6160,5.9732 249,6.6196,5.9778 250,6.6113,5.9710 251,6.6071,5.9679 252,6.5964,5.9585 253,6.5916,5.9534 254,6.5788,5.9426 255,6.5583,5.9253 256,6.5462,5.9136 257,6.5373,5.9058 258,6.5344,5.9036 259,6.5266,5.8957 260,6.5221,5.8916 261,6.5164,5.8862 262,6.5109,5.8810 263,6.4943,5.8590 264,6.4945,5.8584 265,6.4936,5.8567 266,6.4866,5.8503 267,6.4971,5.8589 268,6.4946,5.8570 269,6.4959,5.8581 270,6.5040,5.8656 271,6.5091,5.8689 272,6.5083,5.8692 273,6.5103,5.8716 274,6.5194,5.8797 275,6.5258,5.8856 276,6.5430,5.9010 277,6.5560,5.9108 278,6.5646,5.9200 279,6.5682,5.9227 280,6.5785,5.9323 281,6.5854,5.9381 282,6.6011,5.9525 283,6.6092,5.9603 284,6.6189,5.9686 285,6.6334,5.9820 286,6.6341,5.9816 287,6.6410,5.9872 288,6.6317,5.9792 289,6.6151,5.9640 290,6.5983,5.9495 291,6.5817,5.9351 292,6.5671,5.9222 293,6.5690,5.9244 294,6.5672,5.9236 295,6.5720,5.9281 296,6.5712,5.9269 297,6.5749,5.9297 298,6.5720,5.9273 299,6.5606,5.9169 300,6.5600,5.9169 301,6.5515,5.9094 302,6.5417,5.9010 303,6.5320,5.8929 304,6.5295,5.8895 305,6.5157,5.8772 306,6.5178,5.8795 307,6.5201,5.8825 308,6.5025,5.8672 309,6.4964,5.8619 310,6.4895,5.8557 311,6.4913,5.8579 312,6.4853,5.8525 313,6.4842,5.8508 314,6.4667,5.8355 315,6.4622,5.8304 316,6.4445,5.8147 317,6.4213,5.7950 318,6.4349,5.8065 319,6.4489,5.8184 320,6.4524,5.8229 321,6.4477,5.8190 322,6.4412,5.8124 323,6.4390,5.8097 324,6.4493,5.8197 325,6.4487,5.8199 326,6.4520,5.8220 327,6.4566,5.8258 328,6.4637,5.8315 329,6.4661,5.8342 330,6.4788,5.8462 331,6.4754,5.8435 332,6.4826,5.8502 333,6.4763,5.8449 334,6.4700,5.8390 335,6.4732,5.8428 336,6.4694,5.8406 337,6.4693,5.8400 338,6.4640,5.8349 
339,6.4593,5.8308 340,6.4679,5.8387 341,6.4705,5.8415 342,6.4753,5.8461 343,6.4751,5.8463 344,6.4750,5.8468 345,6.4717,5.8444 346,6.4758,5.8484 347,6.4801,5.8517 348,6.4822,5.8540 349,6.4787,5.8508 350,6.4791,5.8516 351,6.4786,5.8517 352,6.4728,5.8460 353,6.4741,5.8461 354,6.4798,5.8512 355,6.4829,5.8542 356,6.4790,5.8508 357,6.4885,5.8596 358,6.4919,5.8622 359,6.4874,5.8589 360,6.4870,5.8585 361,6.4939,5.8654 362,6.5054,5.8763 363,6.5118,5.8823 364,6.5179,5.8873 365,6.5191,5.8886 366,6.5273,5.8970 367,6.5246,5.8947 368,6.5254,5.8956 369,6.5264,5.8970 370,6.5199,5.8919 371,6.5256,5.8966 372,6.5312,5.9011 373,6.5284,5.8996 374,6.5280,5.8998 375,6.5364,5.9063 376,6.5310,5.9020 377,6.5337,5.9047 378,6.5404,5.9104 379,6.5317,5.9027 380,6.5271,5.8994 381,6.5208,5.8945 382,6.5194,5.8939 383,6.5185,5.8934 384,6.5174,5.8924 385,6.5160,5.8919 386,6.5151,5.8917 387,6.5106,5.8882 388,6.5050,5.8831 389,6.4984,5.8765 390,6.4899,5.8691 391,6.4853,5.8652 392,6.4833,5.8636 393,6.4860,5.8661 394,6.4838,5.8649 395,6.4758,5.8579 396,6.4831,5.8648 397,6.4872,5.8684 398,6.4954,5.8760 399,6.4944,5.8762 400,6.4957,5.8775 401,6.4970,5.8785 402,6.4986,5.8805 403,6.5050,5.8868 404,6.4956,5.8774 405,6.4914,5.8743 406,6.4913,5.8739 407,6.4922,5.8755 408,6.5049,5.8867 409,6.5165,5.8974 410,6.5292,5.9086 411,6.5471,5.9240 412,6.5584,5.9347 413,6.5661,5.9422 414,6.5715,5.9476 415,6.5804,5.9552 416,6.5943,5.9669 417,6.5981,5.9703 418,6.6061,5.9769 419,6.6150,5.9855 420,6.6269,5.9969 421,6.6321,6.0008 422,6.6396,6.0077 423,6.6522,6.0182 424,6.6622,6.0266 425,6.6693,6.0329 426,6.6742,6.0372 427,6.6827,6.0453 428,6.6874,6.0502 429,6.6967,6.0583 430,6.7120,6.0720 431,6.7168,6.0757 432,6.7151,6.0750 433,6.7100,6.0710 434,6.7109,6.0719 435,6.7133,6.0744 436,6.7239,6.0838 437,6.7316,6.0911 438,6.7279,6.0881 439,6.7266,6.0873 440,6.7214,6.0823 441,6.7199,6.0809 442,6.7215,6.0822 443,6.7224,6.0827 444,6.7202,6.0809 445,6.7227,6.0833 446,6.7250,6.0861 447,6.7301,6.0902 448,6.7271,6.0879 449,6.7272,6.0887 
450,6.7225,6.0849 451,6.7122,6.0714 452,6.7035,6.0630 453,6.6971,6.0574 454,6.6976,6.0584 455,6.7026,6.0631 456,6.7051,6.0650 457,6.7030,6.0628 458,6.7039,6.0634 459,6.7133,6.0718 460,6.7105,6.0691 461,6.7088,6.0678 462,6.7141,6.0716 463,6.7136,6.0705 464,6.7106,6.0679 465,6.7028,6.0603 466,6.7034,6.0604 467,6.7036,6.0602 468,6.7056,6.0622 469,6.7064,6.0626 470,6.7016,6.0580 471,6.7063,6.0622 472,6.7003,6.0571 473,6.7018,6.0583 474,6.6963,6.0523 475,6.6982,6.0539 476,6.6911,6.0468 477,6.6904,6.0457 478,6.6976,6.0512 479,6.7031,6.0556 480,6.7050,6.0574 481,6.7001,6.0530 482,6.6959,6.0490 483,6.6982,6.0509 484,6.6968,6.0489 485,6.6909,6.0432 486,6.6912,6.0429 487,6.6895,6.0406 488,6.6840,6.0360 489,6.6814,6.0337 490,6.6788,6.0308 491,6.6727,6.0253 492,6.6698,6.0227 493,6.6676,6.0210 494,6.6672,6.0204 495,6.6638,6.0167 496,6.6587,6.0112 497,6.6568,6.0095 498,6.6512,6.0054 499,6.6414,5.9962 500,6.6342,5.9898 501,6.6341,5.9900 502,6.6339,5.9894 503,6.6241,5.9809 504,6.6268,5.9830 505,6.6278,5.9838 506,6.6220,5.9780 507,6.6177,5.9741 508,6.6167,5.9736 509,6.6204,5.9769 510,6.6254,5.9815 511,6.6288,5.9849 512,6.6303,5.9869 513,6.6369,5.9930 514,6.6310,5.9877 515,6.6301,5.9868 516,6.6307,5.9879 517,6.6307,5.9875 518,6.6341,5.9905 519,6.6370,5.9929 520,6.6390,5.9941 521,6.6419,5.9967 522,6.6431,5.9974 523,6.6497,6.0031 524,6.6539,6.0062 525,6.6548,6.0071 526,6.6574,6.0088 527,6.6517,6.0039 528,6.6526,6.0043 529,6.6468,5.9995 530,6.6451,5.9985 531,6.6508,6.0030 532,6.6530,6.0053 533,6.6504,6.0036 534,6.6530,6.0057 535,6.6473,6.0005 536,6.6450,5.9984 537,6.6505,6.0033 538,6.6514,6.0044 539,6.6557,6.0080 540,6.6568,6.0083 541,6.6573,6.0094 542,6.6588,6.0110 543,6.6602,6.0121 544,6.6577,6.0102 545,6.6583,6.0110 546,6.6533,6.0070 547,6.6471,6.0024 548,6.6476,6.0025 549,6.6447,5.9997 550,6.6411,5.9963 551,6.6391,5.9942 552,6.6345,5.9906 553,6.6323,5.9887 554,6.6291,5.9857 555,6.6286,5.9853 556,6.6315,5.9875 557,6.6276,5.9838 558,6.6270,5.9835 559,6.6269,5.9833 560,6.6271,5.9836 
561,6.6251,5.9815 562,6.6252,5.9811 563,6.6301,5.9853 564,6.6321,5.9874 565,6.6318,5.9872 566,6.6293,5.9851 567,6.6299,5.9857 568,6.6278,5.9844 569,6.6305,5.9872 570,6.6310,5.9877 571,6.6321,5.9887 572,6.6320,5.9887 573,6.6283,5.9852 574,6.6279,5.9846 575,6.6283,5.9845 576,6.6269,5.9831 577,6.6248,5.9812 578,6.6255,5.9818 579,6.6182,5.9755 580,6.6138,5.9719 581,6.6130,5.9708 582,6.6137,5.9717 583,6.6139,5.9719 584,6.6057,5.9646 585,6.5988,5.9579 586,6.5988,5.9585 587,6.6039,5.9633 588,6.6101,5.9684 589,6.6131,5.9714 590,6.6148,5.9735 591,6.6135,5.9724 592,6.6097,5.9692 593,6.6107,5.9702 594,6.6079,5.9679 595,6.6123,5.9711 596,6.6096,5.9691 597,6.6060,5.9662 598,6.6087,5.9683 599,6.6083,5.9679 600,6.6068,5.9664 601,6.6093,5.9672 602,6.6122,5.9700 603,6.6135,5.9708 604,6.6170,5.9742 605,6.6189,5.9761 606,6.6177,5.9745 607,6.6137,5.9713 608,6.6139,5.9721 609,6.6176,5.9755 610,6.6156,5.9738 611,6.6184,5.9764 612,6.6144,5.9729 613,6.6087,5.9680 614,6.6004,5.9610 615,6.6035,5.9637 616,6.5969,5.9579 617,6.5911,5.9532 618,6.5853,5.9480 619,6.5700,5.9347 620,6.5626,5.9282 621,6.5607,5.9266 622,6.5623,5.9282 623,6.5626,5.9287 624,6.5623,5.9289 625,6.5612,5.9278 626,6.5635,5.9300 627,6.5638,5.9301 628,6.5635,5.9297 629,6.5671,5.9328 630,6.5730,5.9384 631,6.5785,5.9439 632,6.5768,5.9426 633,6.5807,5.9460 634,6.5812,5.9466 635,6.5783,5.9433 636,6.5749,5.9398 637,6.5781,5.9422 638,6.5749,5.9392 639,6.5761,5.9402 640,6.5763,5.9403 641,6.5837,5.9468 642,6.5857,5.9489 643,6.5868,5.9501 644,6.5845,5.9483 645,6.5890,5.9522 646,6.5854,5.9482 647,6.5862,5.9491 648,6.5864,5.9494 649,6.5908,5.9531 650,6.5967,5.9583 651,6.5976,5.9594 652,6.6020,5.9632 653,6.5955,5.9571 654,6.5949,5.9565
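Because perplexity is the exponential of the mean per-token negative log-likelihood, the full-run gap between the two 7B results can be restated either as a ratio or as extra cross-entropy per token:

$$
\frac{6.5949}{5.9565} \approx 1.107, \qquad \ln 6.5949 - \ln 5.9565 \approx 1.8863 - 1.7845 = 0.1018 \ \text{nats/token}
$$

So the 4-bit model's perplexity is about 10.7% higher, which is equivalent to about 0.10 extra nats of loss per token.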

Started a `./main --perplexity -m models/7B/ggml-model-q4_0.bin -f wiki.test.raw --memory_f16` run now. It already shows a small additional hit to perplexity vs. the baseline 4-bit run, but I'll let it run all the way through:

| Chunk | f16 | 4-bit | 4-bit + `--memory_f16` |
|---|---|---|---|
| 1 | 4.2341 | 4.5970 | 4.6370 |
| 2 | 4.7324 | 5.1807 | 5.3228 |
| 3 | 5.5847 | 6.0382 | 6.2159 |

Edit: further in, it actually looks much closer! At chunk 81, `--memory_f16` is at 6.9297 vs. 6.9262 for plain 4-bit.

I got 589 chunks through a (very slow) 16B FP16 run and then it segfaulted. Still, the number should be pretty accurate by then. Measured at 5.2223, so a decent improvement over the 5.9565 for 7B FP16.