Shot Building

Goal

Enable publish and load of asset combinations.

This should produce a rendered image sequence of the latest approved assets for the given project and shot.

$ set AVALON_PROJECT=hulk

$ set AVALON_ASSET=s010

$ render

Motivation

Rendering is where all 3d resources come together and transform into 2d images. At the moment, resources are brought into the rendering application - Maya - and saved as a Maya scene file, the "master scene".

In this file, a number of decisions reside.

- Which assets are to be part of this render, including characters and cameras?

- Which version of each asset?

- What should be the relationship between the assets, e.g. shaders to model, fur to animation?

- What are the settings for the rendering engine currently used?

- What are the layers and settings for each?

The trouble with this approach is that only the rendering artist (in this case, @Stonegrund) can assemble assets and define the relationships between them: any change must be made (1) by this artist and (2) within this scene.

Point (2) introduces additional complexity when the file is heavy, in that (a) it takes longer to open and edit and (b) the risk of file corruption increases.

But what if anyone could contribute to a final rendered scene, without the intervention of a rendering artist? What if you, the animator, produce a new version of your animation and would like to see it rendered? What about the freelance lookdev specialist who would like to see his shader in the context of the actual shot, but doesn't understand or dare touch the "master scene"?

In short, how do you parallelise the rendering workflow? Or indeed any workflow requiring any form of assembly, e.g. layout or animation?

One potential answer lies in Shot Building.

Implementation

Building a shot is akin to loading an asset, only this time you load what's called a "package".

A package is a collection of assets along with their versions.

{

"someAsset": 12,

"someOtherAsset": 1

}
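A package like the one above could be read and checked before anything is loaded. This is only a minimal sketch: `parse_package` is a hypothetical helper, not part of Avalon, and it assumes that versions are positive integers as in the example.

```python
import json


def parse_package(text):
    """Parse a package document into an {asset: version} mapping.

    Hypothetical helper, not part of Avalon. Assumes every value is a
    positive integer version number, as in the example above.
    """
    package = json.loads(text)
    for asset, version in package.items():
        # bool is a subclass of int, so reject it explicitly
        valid = isinstance(version, int) and not isinstance(version, bool)
        if not valid or version < 1:
            raise ValueError("%s: invalid version %r" % (asset, version))
    return package


package = parse_package('{"someAsset": 12, "someOtherAsset": 1}')
for asset, version in sorted(package.items()):
    print("%s v%03d" % (asset, version))
```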

Publishing

Publishing of a package could be made via a dedicated tool.

Loading

Loading of a package could be done through Loader, via e.g. a drop-down menu at the top.

In this example, the contents of the package are highlighted and open for modification, for example if the animator doesn't require shaders or wishes to disregard parts of the package for performance reasons.

This is possible, and I am thinking somewhat the same way. There should be at least a foundation_light_master which gets created for a shot sequence already in pre-production. It has to be built for each project and shot sequence, but only for shots. Lookdev can have more universal light setups. There can be a few prebuilt lookdev scenes for different scenarios and so on... We'll talk further on this subject.

Other than that, "anyone" should be able to build a render scene, but only lighters adjust lights and deliver material to the comper.

Request:

A lookdevDefault assigner for the assets in the render scene/shot scene. There should be a way to load one or multiple lookdevDefault, like a manager that reads what is loaded in the scene and can also fetch which lookdevDefault each asset has. There you should be able to select one or multiple lookdevDefault to load into your scene.

Scene Description

Shot building should be context sensitive. If the animator builds a shot, rigs are loaded, whereas if a rendering artist builds a shot, caches and shaders are loaded.
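As a rough illustration of context sensitivity, the task could select which families of a shot get loaded. Everything in this sketch is an assumption: the task names, the `mindbender.lookdev` family and the `members_for_task` helper are made up for the example.

```python
# Hypothetical mapping from the artist's task to the families to load.
# Task names and "mindbender.lookdev" are assumptions for this sketch.
FAMILIES_BY_TASK = {
    "animation": {
        "mindbender.environment",
        "mindbender.rig",
        "mindbender.curves",
        "mindbender.constraints",
    },
    "rendering": {"mindbender.animation", "mindbender.lookdev"},
}


def members_for_task(members, task):
    """Return the shot members that are relevant to `task`."""
    if task not in FAMILIES_BY_TASK:
        raise ValueError("No build rule for task %r" % task)
    families = FAMILIES_BY_TASK[task]
    return [m for m in members if m["family"] in families]


shot = [
    {"name": "Bruce01_rig", "family": "mindbender.rig"},
    {"name": "Bruce01_cache", "family": "mindbender.animation"},
    {"name": "Bruce_lookdev", "family": "mindbender.lookdev"},
]

print([m["name"] for m in members_for_task(shot, "animation")])
print([m["name"] for m in members_for_task(shot, "rendering")])
```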

Build for Animation

In order to build a shot for animation, we would need the following ingredients.

1. mindbender.environment - An environment, for animators to either interact with or avoid

2. mindbender.rig - Rig per character, for animators to animate

3. mindbender.curves - Animation per rig, for animators to start off with (i.e. layout), could also be simply position and orientation.

4. mindbender.constraints - Relationships between rigs, e.g. constraints

5. mindbender.animation - Optional point-cache of rig + curves

Items 2-5 are related in the sense that one cannot be used without the others, yet they are versioned independently. That is, the rig could be updated independently of the animation curves. They form a group.

Groups could be defined per asset in .inventory.toml

[[1000.groups]]

Bruce01 = [

"Bruce:rigDefault",

":animationDefault",

":curvesDefault",

":constraintsDefault"

]
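One way to read the members of such a group is as `asset:subset` pairs. The sketch below makes an assumption that the thread does not confirm: a member with an empty asset part, like `:animationDefault`, is taken to belong to the asset the group is defined on (here the shot `1000`). `resolve_group` is a hypothetical helper.

```python
def resolve_group(members, default_asset):
    """Split "asset:subset" members into (asset, subset) pairs.

    Assumption: an empty asset part (":animationDefault") refers to
    the asset on which the group itself is defined.
    """
    resolved = []
    for member in members:
        asset, sep, subset = member.partition(":")
        if not sep or not subset:
            raise ValueError("Expected 'asset:subset', got %r" % member)
        resolved.append((asset or default_asset, subset))
    return resolved


bruce01 = [
    "Bruce:rigDefault",
    ":animationDefault",
    ":curvesDefault",
    ":constraintsDefault",
]

for asset, subset in resolve_group(bruce01, default_asset="1000"):
    print(asset, subset)
```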

Requirements

- There must be a fixed name per asset group, so that we can reference it as a whole in the scene description.

- Point caches must be swappable for rigs with animation curves

- Members must have some form of relationship, such as what animation curves have to rigs

Here are some more visuals of what I've got in mind.

Questions remain, how does one define what to include in a shot? Does it involve setting one up, and publishing it as individual components? How then does one add anything to this scene, without opening it up again? A likely heavy operation.

Ideally the user would pick parts in isolation, like making a recipe for what is about to be made.

Reference on how asset groups could work, known as "packages" at MPC.

Build for Animation

In order to build a shot for animation, we would need the following ingredients.

1. mindbender.environment - An environment, for animators to either interact with or avoid

2. mindbender.rig - Rig per character, for animators to animate

3. mindbender.curves - Animation per rig, for animators to start off with (i.e. layout), could also be simply position and orientation.

4. mindbender.constraints - Relationships between rigs, e.g. constraints

5. mindbender.animation - Optional point-cache of rig + curves

I would see a need for a "starter" package for the animator which contains the set and the characters for the current shot but why would you store relations between rigs? What does this contribute? After the animator is done I would assume the animations are baked. If this is all to be able to "rebuild" the animation scene I would only care about 1, 2 and 3. Drop point 4.

After the animator is done I would assume the animations are baked.

That would be used to build a shot for the animators. Such that they can start animating on it. It'd import the rigs, rather than the caches.

For previously existing animation, like from Layout or another animator, that's where ~~(5)~~ (3) comes in.

About (4), one of the issues with building a shot where rigs are constrained to each other is knowing who's the host of the constraints? Character A, or character B? Who connects to who? And how do you specify it?

What they did at Framestore, surely not the only approach, was to make constraints into assets themselves such that you could specify, like on a string attribute, which node was connected to which. This could then be imported as an asset, and connected by the pipeline.
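To make the idea a little more concrete, a constraint asset could be plain data naming both ends, which the pipeline checks against the scene before connecting anything. This is only a sketch of the idea as I understand it, not how Framestore did it; the node names and `plan_constraints` are hypothetical.

```python
def plan_constraints(constraints, scene_nodes):
    """Sort constraint descriptions into connectable and missing.

    A constraint is connectable when both its driver and its driven
    node exist in the scene. The data layout is an assumption.
    """
    connectable, missing = [], []
    for constraint in constraints:
        ends = (constraint["driver"], constraint["driven"])
        if all(node in scene_nodes for node in ends):
            connectable.append(constraint)
        else:
            missing.append(constraint)
    return connectable, missing


constraints = [
    {"type": "parent", "driver": "Bruce01:hand_CTL", "driven": "Hammer01:root_CTL"},
    {"type": "parent", "driver": "Bruce01:hand_CTL", "driven": "Shield01:root_CTL"},
]
scene_nodes = {"Bruce01:hand_CTL", "Hammer01:root_CTL"}

connectable, missing = plan_constraints(constraints, scene_nodes)
print(len(connectable), "connectable,", len(missing), "missing")
```

Inside Maya, each connectable entry could then be handed to the matching command, e.g. `cmds.parentConstraint(driver, driven)` for the `parent` type.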

It was really annoying to work with it. But frankly I don't see another way of dealing with it. In theory it's straightforward, the animators would just need to get used to using assets for constraints, instead of heading for the Constraints > Parent menu item.

Oh, and another important attribute of building the scene as an animation scene rather than with pointcaches, is that you can update versions of the assets, without going through the original animator. Like if a rig or model was updated. That'd be doable by production, or automatically by tagging things as "approved".
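Picking versions automatically by tag could look something like the sketch below. The layout of the version documents (a `version` number and a list of `tags`) is an assumption for illustration, as is the `latest_approved` helper.

```python
def latest_approved(versions):
    """Return the highest version tagged "approved", or None."""
    approved = [v for v in versions if "approved" in v.get("tags", ())]
    if not approved:
        return None
    return max(approved, key=lambda v: v["version"])


rig_versions = [
    {"version": 1, "tags": ["approved"]},
    {"version": 2, "tags": ["approved"]},
    {"version": 3, "tags": []},  # newest, but not yet approved
]

print(latest_approved(rig_versions)["version"])  # 2
```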

Evaluate whether USD is a good candidate for this.

- https://graphics.pixar.com/usd/docs/index.html

- https://github.com/AnimalLogic/AL_USDMaya

Expecting it to:

- Describe a shot in its entirety

- Provide the means of building an assembly, ideally visually

- Be importable into Maya prior to rendering, OR rendered directly based on what the given renderer supports (e.g. Redshift, Arnold). The latter being preferable, as it'd enable us to skip import and just render the thing.

- Be importable into other software, such as Nuke

- Ultimately align us with other studios and facilitate sharing of ideas and tools.

Evaluate whether USD is a good candidate for this.

Theoretically USD could be by far the best candidate for this purpose. Have you tried it out yet? I've been following the development since the announcement, but Windows support seems to be limited, although some people have clearly managed to build and use it on Windows.

I haven't tested it, no. I just recently had a conversation about it that shed some light on what it could potentially be used for. Attaching it below (with permission).

yawpitchroll [10:10 AM] USD is fundamentally the same idea as DD's Shot Description File and Weta's Hercules file format. It's essentially an XML schema that references assets via some sort of UUID scheme. It very much must be backed up with a DB that actually contains those asset UUIDs. Edit: at least to be of much use. (edited)

marcus [10:17 AM] @yawpitchroll would you recommend assembling shots through USD (at its current stage) rather than say Maya references, or 3ds max's equivalent?

marcus [10:18 AM] The way I'm seeing a final assembly put together before being sent to a farm is by opening Maya, referencing in all the things to render and assigning shaders (that in turn contain paths to additional files)

I don't know enough about USD to know whether it competes with that workflow, does it? (edited)

A downside is (a) a heavy Maya dependency and (b) a forced interactive use of physically making those references and saving the Maya scene file. And I'd imagine that with USD I could potentially accomplish the same workflow, but through code and application-independently

What I'm doing myself is abstract the assembly process and create an ad-hoc format for describing the contents of each shot

Here's hoping USD could remove the ad-hoc format

yawpitchroll [10:27 AM] It's trying to solve a different kind of problem.

Think of USD as the world, Alembic as your local portion of the world, and EXR as what you'd see from a specific position and time in that locale.

They're like nested containers.

marcus [10:32 AM] Nice analogy :slightly_smiling_face:

Do you know whether USD includes texture information? As in, their paths

yawpitchroll [10:39 AM] Haven't used USD itself, just looked at it a few years ago, when it was being investigated for parity with Hercules. They've no doubt evolved since, but basically no these high level files NEVER know about paths, they just know about uniquely identifying details, which can be expressed as a UUID or via a URL or URN scheme. (edited)

Ideally the only thing that ever knows about location on disk is an asset database. (edited)

marcus [10:41 AM] That makes sense; so the data actually produced from artists assigning textures to models and things is a USD file where paths used in e.g. Maya is stored as IDs, such that when the files are loaded back in, the ID is re-translated into a path

yawpitchroll [10:44 AM] Well, not quite. A USD isn't an asset database, it's an abstraction of the narrative world that assets exist in.

marcus [10:45 AM] Ok, I'm not really understanding the general workflow I guess :slightly_smiling_face: From artist to file on disk. I was assuming they worked as-per-usual, only when they export/publish, a USD file is produced and later loaded by another artist (edited)

yawpitchroll [10:47 AM] No, that's equating the USD to a Maya (or any DCC-app) scene file.

Unfortunately this is a very large topic, and I'm in transit right now, but let me see if I can summarize.

marcus [10:49 AM] Don't worry, I should take a moment to read up on USD a little more

Didn't think I had misunderstood it this much

yawpitchroll [10:50 AM] It's not the easiest idea.

marcus [10:55 AM] How about this, if you go all-in with USD, do you still need the DCC scene file? For rigs, I'd imagine yes, but models, textures, caches, lights maybe not?

yawpitchroll [10:56 AM] Yes, of course you do, you need all the DCC files, they're the assets that the USD is abstracting.

USD isn't an interchange format in the way Alembic is.

marcus [11:01 AM] Ah, that's where my misconception was at

But so if a USD is pointing at formats exclusive to a particular DCC, like Maya, it wouldn't be usable in e.g. Katana or other software?

yawpitchroll [11:13 AM] Let's not use the term USD, as that's a specific implementation of this idea. Let's just use "Scene Description" or SD.

The SD is a data structure that could be thought of as closest to an EDL for "what" and "where" appears in a movie, instead of being focused on the order of "when" they appear.

In Maya terms you could think of it as a master .ma that contains references to all the component .ma files that make up the film, each of which in turn contains references to the individual textures, models, etc.

But that's too limiting, because Maya files can't be opened in Nuke. So in a SD-based workflow the SD contains references to "assets", and these assets represent usually a bundle of different interchange formats. At Weta a Maya scene with a box and no camera or translations would be exported as a .ma, a .obj, a .fbx, a .alembic, etc... the SD references the bundle, and when Nuke loads in the SD its loader knows to bring in the interchange formats it understands.

Ideally of course every app could read every file format, but we all know how far we are from that unicorn.

In this way you see the SD becomes a collection of collections ... it represents the whole narrative world ... Or usually at least that portion of it that's relevant to the story idea of a "scene" ... and then allows you access to a filtered subset relevant to your task.

Now some artist can publish a new texture on a model halfway round the narrative world from your shot and you simply don't care, if that asset ever comes into the frustum of your camera you load it in and get whatever textures are attached to it right now.

marcus [11:24 AM]

if that asset ever comes into the frustum of your camera you load it in and get whatever textures are attached to it right now.

It could load things off of disk programmatically at render-time? That sounds amazing

yawpitchroll [11:25 AM] That's part of the goal, yeah.

marcus [11:25 AM] That suddenly clarifies (to me) how a studio is able to render assets with hundreds of gigabytes of textures (edited)

yawpitchroll [11:26 AM] The beauty of it is all artists are working simultaneously on the narrative world. Which allows large scale changes that can't happen if the world is fractured into thousands of component scene files.

Hah, only hundreds?

marcus [11:26 AM] Haha

How they go about actually making that data is still a mystery though; they've got to have some fast texture painters workin' there

yawpitchroll [11:27 AM] The idea is incredibly powerful. But you have to be a certain size vendor for it to really make sense.

As it requires a lot of infrastructure.

marcus [11:28 AM] Yes no, it makes sense. I will be having a look into this next, as the ad-hoc format I mentioned above involves this; I'll be referring to it as a scene description from now on

In the studio I'm currently involved with, we already do export multiple "representations" of each asset with the intention for exactly this, and with a DB set-up to host IDs of each. If I understand (U)SD correctly, it could potentially slot right in and replace my futile attempt at making my own SD

yawpitchroll [11:32 AM] Quite possibly. When I first looked at USD it was still using GUIDs, looks like they've settled on namespaces now. They've taken the concept furthest I think of any of the companies. What it still really needs though is a very good asset database, and there still really isn't one out there that's FOSS.

marcus [11:33 AM] Out of curiosity, what are your thoughts on document-databases?

yawpitchroll [11:35 AM] Same as my thoughts on all databases. Necessary bloody evil.

marcus [11:40 AM] :slightly_smiling_face: I suppose my next question would be, what makes a database very good for assets?

yawpitchroll [11:41 AM] Personally I love them and they map really well to data that's more or less immutable or is relatively infrequently changed. They're a bitch to do analytics on, or build dozens of reports based on questions you didn't know about ahead of time.

Honestly? In my mind it's a mix of two databases.

Document store for metadata and actual object store, Relational or Graph for actual dependency relationships.

Walk the latter to find out what to pull from the former.

Assets in our world are funny things; they die, right? There's a point at which you absolutely never need to know again who published version 7 of an asset that made it into the movie as version 912. That's a really odd fit to databases that were designed to never forget.

I've also been thinking a blockchain has a lot of things going for it, though, so my thoughts on this matter remain unsettled.

yawpitchroll [11:52 AM] USD can be used as a glorified scene interchange file, but that's not its strength.

The beauty of the SD idea comes in when you realize that it's not about boundaries between applications, it's about boundaries between contexts.

yawpitchroll [11:57 AM] Scene interchange is more or less settled ... It's messily settled, no doubt, but you can get 3D data and footage from editorial into maya into nuke back to editorial and out to client. It's ugly, but it's not a game changer to do it less ugly.

yawpitchroll [12:06 PM] A context in this case is just a frame of reference... so in the Shots context (meaning rendering, comp, and editorial) time and space and the camera frustum are important things to know about when considering assets. In the Mocap context time is really important, space not so much, and with the exception of the virtual camera itself frustum is irrelevant. In the FX context perhaps only time matters at all, someone in one of the other contexts will place it in space and in relation to the frustum, but now you're thinking in terms of simulation time not world time.

The SD allows you to build a world that brings all of these things together logically, fitting them together into a model of the state of the movie's universe from any context, or None.

Now by tasks you mean more "what context am I in right now" and that is mainly relevant to knowing what parts of the SD to actually use right now.

Remember ladies and gentlemen, we are always working on a model of causality. VFX is God work.

Can it be based on the workflow? I mean task dependencies could be used to load what was published from the previous task. For instance, to build a scene for animators, I load the result of the modeling step and the result of the setup step for all assets of the casting (I use the Shotgun terminology here).

NB: don't forget the versioning of these dependencies.

Hey @frankrousseau,

Can it be based on the workflow?

Could you define workflow? Is it different from "task" for example?

(I use the Shotgun terminology here).

Is "workflow" the same as "step" perhaps?

I mean task dependencies could be used to load what was published from the previous task

What is a "task dependency"? For example, does it mean that for rigging to commence, modelling must be done first, making rigging dependent on modeling. In that case, should the loading of anything coming out of rigging also load everything(?) that came out of modeling?

That might be impractical. For example, modeling might produce both render, low resolution and collision meshes. One or many of those may be used in a given rig, such as collision meshes in a simulation rig, and render meshes in another rig.

Asset-to-asset dependencies have been implemented in Avalon already actually, where one asset such as a model, is associated to a rig by simply publishing them together. That gives the publishing step enough information to infer that one asset is now associated with another.

This is how I was thinking that the construction of shot/scene definitions happen as well; interactively. By having an animator, for example, import a series of rigs and placing them around the scene. Then he could publish the scene as a Scene Definition, as opposed to publishing the resulting animation caches. Then that Scene Definition could be found in the Loader/Library like any other asset (versioned).

I am also thinking that, for short-term time efficiency, this could be done with the workflow we have.

In the case of scene building for rendering, I would like there to be two options: "build new scene" and "continue scene".

"Build new scene" would basically build everything that is related to the shot you are trying to set up. E.g. you navigate to sh010 and to "rendering" and press "build new scene". You can then either choose to import all related/published Alembics for the shot, or select the Alembics you want to import. When done, you press build shot, assemble shot or whatever we want to call it. Then the fun part happens: let's say there is an asset called chair; the render-shot-builder imports/references the chair and also imports and assigns the lookdevDefault of that asset.

If you have a scene with lots of assets, this would save even more time if you have to rebuild the shot, and you can start lighting even faster.

I have read about USD and it sounds awesome; I read an article about a studio that adopted that pipe and it sounds great. It is something we should go for. But I think we can improve the pipe we have at the moment. Please correct me if there are some threads I have missed and you guys have already discussed this :)

Summary of a community-driven conversation held earlier today.

Avalon - Set Dress Discussion

26th of September 2017

What are the goals?

- Is it for Animators or Lighting? We want to have a setdress that can be used across, pushed through animation, through lighting, and basically all the steps.

- Support both static elements in the environments and rigs that can be used by the animators.

- Can finally convert into a renderable scene. (Doesn’t have lights, camera or rigs)

- Switching a positioned asset to another asset.

- Switching asset in-place (e.g. replace tree with chair)

- Switch representation in-place (e.g. alembic to arnold stand-in)

- The animator can choose “I want to animate this” at any point in time.

  - What’s the “safe” approach to this?

  - Avoid the animator having to know which assets are animated and which aren’t where it’s possible he forgets a specific asset.

- Preferably extract the data as much as “additive data”. Only extract the changes. (Say only transformations from the animated scene)

- NOT render specific (e.g. not only arnold)

- It should allow the lighting artist to assign shaders or tweak shaders after having loaded the setdress file.

- Can it contain VDB files? Or other FX-data, like particle caches? Not a primary objective, but potentially yes!

- Can the published files be shaded assets or not? Currently we’re thinking both.

- Potentially embed “scattered” assets created in another software, e.g. those from Houdini.
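The "additive data" goal above could be sketched as a diff between the published set dress and the scene the animator worked in, keeping only what changed. This is a simplification under stated assumptions: transforms are reduced to translate tuples, values are compared exactly (a real version would likely want a tolerance), and `transform_overrides` is a hypothetical helper.

```python
def transform_overrides(original, edited):
    """Return only the transforms in `edited` that differ from `original`.

    Nodes that exist only in `edited` are included as well.
    """
    return {
        node: xform
        for node, xform in edited.items()
        if original.get(node) != xform
    }


published = {"tree_01": (0.0, 0.0, 0.0), "tree_02": (5.0, 0.0, 0.0)}
in_shot = {"tree_01": (0.0, 0.0, 0.0), "tree_02": (7.5, 0.0, 0.0)}

print(transform_overrides(published, in_shot))
```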

What is allowed to be in a setdress scene? It might have Arnold archives, or rigs, or even geometry that is made directly in the scene.

Who’s making the setdress?

The environment artist is making it.

The layout is the rough version of where things are, e.g. like a first version for animators. With a box for a table, a desk. Later these would be updated with the actual model of the asset as it’s created.

Look into

- What goes into the scene?

- Rigs, stand-ins, models.

- What happens when an “Artist” wants to generate a model in the scene.

- How to manage it in Maya?

- Many instances are slow in Maya. The Maya instancer works, but can’t be managed easily in Maya. How to distribute efficiently?

- MASH helps with distributing!

- How can we keep this most editable?

- Easily swap visibility/high-res states.

- Alembic 1.7 layering

- Alembic DIFF to the original set so you’d know what has changed. (Or reference edits on referenced alembics)

- Pixar’s USD

- What if you want to change “render settings” within a single stand-in?

Mkolar: Currently exports the asset (when they assume that might happen) into separated stand-ins. But this choice will need to be made prior to exporting. It’s not the lighting artist that has this power.

- Time offsetting?

- Embedding: a set dress within a set dress (say a layout of a house with many different parts) that would be laid out into a larger environment many times. Sometimes bigger sets were puzzles that contain smaller “sets”. E.g. a big area with many streets, some of the streets might be “puzzle blocks” in the full set.

Use Cases

Use case #1 - Apocalyptic Street

Say you’d have a broken apocalypse kind of street. With lots of rubble on the street. How would you go about it? Buildings and the street can be imported, say these are pre-generated. Say he wants to make the demolished version.

- One solution: Make the extra geometry locally, then publish it as a model. Then load that into the setdress to publish it.

- Other solution: Allow the geometry to be generated and published directly with the setdress. Maybe automate publishing “new geo” as embedded geometry. (As a new publish alongside)

Mkolar: For us it’s not possible to disallow completely to, during setdress, start modeling new geometry. So it should be trivial and fast to generate meshes whilst setdressing and start adding them to the setdress.

Use case #2 - Animating over the set dress

A big forest has been setdressed with a nice house in the center of it. In one of the shots an animator needs to reposition one of the trees to get a better view from the camera. What do we publish? And how does the lighting artist get the correct "setdress" that includes this transformation?

- One solution: The animated setdress is also published as a new setdress. This one embeds the original static elements and overwrites or adds the local scene changes into that data. This could be replacing a part of the hierarchy with deformed shapes or setting different transformations than in the original data.
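A rough sketch of that layering, assuming the set dress is a mapping of node name to settings and that the shot publish only carries the changed keys. The data layout and `apply_overrides` are assumptions made for the example.

```python
def apply_overrides(base, overrides):
    """Layer shot-level changes on top of a published set dress.

    The base is copied, so the published original stays untouched.
    Nodes that only exist in `overrides` are added.
    """
    result = {node: dict(data) for node, data in base.items()}
    for node, changes in overrides.items():
        result.setdefault(node, {}).update(changes)
    return result


forest = {
    "tree_01": {"representation": "abc", "translate": (0.0, 0.0, 0.0)},
    "house_01": {"representation": "abc", "translate": (4.0, 0.0, 0.0)},
}
shot_changes = {"tree_01": {"translate": (2.0, 0.0, 0.0)}}

for_lighting = apply_overrides(forest, shot_changes)
print(for_lighting["tree_01"])
```

The lighting artist would then load `for_lighting`, while other shots keep using the original `forest` publish.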

The Format #1

An Alembic format that allows to “embed” differently loaded assets (similar to USD)

- A hierarchy

- With transformations

- Can contain “some content”

- Asset: Any published asset (and what data it is)

- Loader: What loader to use

- This could be overridden by the Artist at load time to load an Alembic for example as VRayMesh.

Example data:

top_GRP
    content1_ABC (loader: AlembicLoader)
    content2_ABC (loader: AlembicLoader)
    content3_MAYA (loader: MayaAscii)
    content4_SET (loader: SetDressing)

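# pseudocode: `api.SetLoader` is the proposed base class
from maya import cmds as mc
from avalon import api


class AlembicLoader(api.SetLoader):

    def load(self, representation, parent, data):
        """Implement the loading for this specific `SetLoader`

        representation (bson.ObjectId): The database id for the representation to load.
        parent (str): The parent node name in the hierarchy.
        data (dict): Additional settings that may be stored in the set dress data, e.g.
            a time offset for the loaded item, or maybe a shader variation to be set.

        """
        path = api.get_representation_path(representation)

        # `import` is a reserved word in Python, so go through the
        # AbcImport command instead (assumes the AbcImport plug-in is
        # loaded and that it returns the name of the new AlembicNode).
        alembic = mc.AbcImport(path, mode="import", reparent=parent)

        anim_offset = data.get("animOffset", 0)
        if anim_offset:
            # Apply the time offset stored for this particular loaded asset.
            # Assumes `offset` is the AlembicNode's time offset attribute.
            mc.setAttr(alembic + ".offset", anim_offset)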

:::info

Maybe implement using OpenMayaMPx.MPxFileTranslator

:::
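How the per-item loader and the artist override might fit together can be sketched without Maya. The registry, the `build` function and the stand-in loaders below are all hypothetical; the loaders only return a string where a real one would import files.

```python
LOADERS = {}


def register(name):
    """Register a loader function under `name`."""
    def decorator(func):
        LOADERS[name] = func
        return func
    return decorator


@register("AlembicLoader")
def load_alembic(name):
    # Stand-in for a real import
    return "%s loaded as Alembic" % name


@register("VRayMesh")
def load_vray_mesh(name):
    # Stand-in for a real import
    return "%s loaded as VRayMesh" % name


def build(contents, overrides=None):
    """Load each (name, loader) pair, honouring artist overrides."""
    overrides = overrides or {}
    results = []
    for name, default_loader in contents:
        loader_name = overrides.get(name, default_loader)
        if loader_name not in LOADERS:
            raise KeyError("No loader registered as %r" % loader_name)
        results.append(LOADERS[loader_name](name))
    return results


contents = [
    ("content1_ABC", "AlembicLoader"),
    ("content2_ABC", "AlembicLoader"),
]

print(build(contents, overrides={"content2_ABC": "VRayMesh"}))
```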

The Format #2

Maya ascii

Every asset needs to be published: e.g. a low-res model, a high-res model, etc. E.g. animator decides to load tree_A.

- Pros:

  - Not recreating it in every step.

  - The data contains all the sources.

- Cons:

  - Proxy references: if an artist at any point wants to “switch” to another representation, it must already be embedded in the original Maya ascii (e.g. multiple groups that are toggled). E.g. a Tree is an object group containing multiple representations by itself.

(MK) This could be solved by scripts that toggle on the fly between the 'lowres' and 'render' asset. Using Maya 'proxy referencing' would probably work, where upon loading an asset its 'proxy' would be specified at the same time and then just triggered when needed. [name=Milan Kolar][color=#907bf7]

Notes

Notes by @mrharris

Our requirements may be slightly different as we may not be lighting in maya. So I’m curious in seeing how far we can push gpu representation for entire Set. This essentially means the Set it is not very editable for the animator - anything they would need to touch would need to be identified in advance and supplied separately (as a prop rig?). This is contrary to the “goals” set in the meeting, but dealing with gpu representation and “on-the-fly” Set rigs is an open question for me.

Questions I Forgot to Bring Up

- What do you call the asset type for an item of set dressing (like a tree, rock, flower)? We call them SetElements and they are tagged with data to help filter (Foliage / Debris / Food / Furniture). How do you classify your SetElements?

- When modelling, what determines whether something should be dressing, or just modelled as part of the Set? It is kind of subjective. Do you leave it up to the environment artist to decide what should be a reusable SetElement?

Dressing Authoring

Dressing elements may need to be authored "in context" to get proper scale/style etc. This could be a matter of referencing in an environment Set within which to work.

Related to this is that a Set modeler may want to promote an element in their Set to be a reusable dressing element. I.e. they would need some form of “assetize” tool where a group that they modelled would be exported/promoted to its own asset, and then referenced back into the Set as a sub-asset. Now other artists can iterate on the flower and reuse it in other Sets.

MASH, SOuP instance manager good for particle instancing. Transform instancing is very heavy in maya. Cached sets (abc/usd required for katana) mean losing any proceduralism these instancing tools provide – eg cannot change forest density in lighting. Is there a bbox locator we should use to represent a piece of set dressing? Any good ideas?

Aggregation

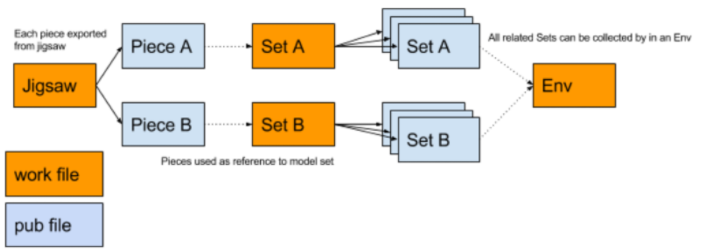

I guess you can aggregate / compound / nest together a Set as deeply as you like but these levels make sense to me

- Dressing: a reusable element, eg Tree, Table

- Jigsaw Piece: a ground with well defined boundary

- Jigsaw: an assembly of jigsaw pieces

- Set: what you think - an asset that is a setting for several shots

- Env: an assembly of related Sets (a high-res jigsaw)

Large environments sometimes need to be broken into smaller Sets, but the "connective tissue" where these Sets join needs to be seamless so that Sets snap together when loaded at shot level.

Use huge, bare jigsaw environments whose job is to define the ground surfaces and precise seam lines for all contained Sets and give a sense of the "world". A jigsaw for a whole environment can be authored in one file. At publish time each jigsaw piece can be exported to its own file. Each jigsaw piece (ground) is used as the basis for modelling its respective Set.

Splitting up environments like this means we must also think about environmental elements that are common to ALL sets. For example, sky, sunlight, clouds. We don't want each Set to define its own skydome mesh or they won't merge together. One way is to have an additional sky.mb "Set" for these elements that is always connected to the Env.

LOD

How important is supporting LOD for set dressing? Does having low res representation actually help the renderer? Or is managing the swapping and having low and high in memory more of a burden than it is worth?

We actually stopped worrying about LODs for rendering; they are usually more of a burden, as you said. [name=Milan Kolar][color=#907bf7]

Render proxies (ass / rsproxy / vrayproxy) are specific to that renderer. More importantly they typically don't allow easy editing of the contents of the proxy after authoring (youtube mooornold as an exception). What if a lighter needs to hide a leaf mesh or tweak a shader inside a proxy? How granular do you make your proxies?

We try to lock assets as much as possible so they don't need to be tweaked during lighting, and work with them as a physical set. When that's not possible we fix things on a case-by-case basis. A solution could be loading render proxies by default if available, but allowing them to be swapped for render geometry if needed. That way only a particular tree might get changed to real geo, while most of the forest stays in proxies. [name=Milan Kolar][color=#907bf7]
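The "proxies by default, geometry on request" idea above could be sketched roughly as follows. This is only an illustration: the function name, the preference order and the representation names in `forest` are assumptions, not an existing Avalon API.

```python
def pick_representation(available, instance_name, force_geometry=()):
    """Return the representation name to load for one set-dressing instance.

    available: representation names published for the asset,
        e.g. {"abc", "vrayproxy"}.
    force_geometry: instance names a lighter has flagged as needing edits.
    """
    # Hypothetical preference order; a studio would configure its own.
    proxies = ("vrayproxy", "rsproxy", "ass")
    geometry = ("abc", "ma")

    if instance_name in force_geometry:
        order = geometry
    else:
        order = proxies + geometry

    for name in order:
        if name in available:
            return name
    raise LookupError("No loadable representation for %s" % instance_name)


forest = {
    "tree_01": {"abc", "vrayproxy"},
    "tree_02": {"abc", "vrayproxy"},
    "rock_01": {"abc"},  # no proxy published, falls back to geometry
}
chosen = {
    name: pick_representation(reps, name, force_geometry={"tree_02"})
    for name, reps in forest.items()
}
```

Here only `tree_02` is loaded as real geometry; the rest of the forest stays in proxies wherever a proxy exists.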

For the viewport, is full-res GPU cache (with an option for bbox) good enough? Is a low-res mesh necessary as a middle ground? Our artists would most like to see high-res geo in the Set if it was fast, and would sacrifice viewport textures to get it. Some block colour indication of different objects would be great though.

Can we optimize the animation viewport per shot? E.g. swap to bbox anything outside the frustum or far from the camera.

Using GPU cache for Sets introduces a problem about how to animate/rig "fixtures" of the Set, like windows, doors etc. Should all these be separate prop rigs? Is that feasible for episodic animation?

How to represent LOD in the outliner when authoring? Put all high meshes under "high" and low meshes under "low". Anything common is not in an LOD group. That way the publisher can delete the unrequired groups to export each representation. LODs should probably not be split until the asset has been through rigging and lighting, so that controls and light links only need to be set up once.
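As a rough sketch of that publishing rule, assuming nodes are identified by Maya-style `|`-separated paths and that the LOD groups are literally named "high" and "low" (the function name and the sample hierarchy are made up for illustration):

```python
def nodes_for_representation(paths, rep, lod_groups=("high", "low")):
    """Keep nodes that live under the `rep` group or under no LOD group."""
    unwanted = set(lod_groups) - {rep}
    kept = []
    for path in paths:
        parts = path.strip("|").split("|")
        # Drop the node if any ancestor is an LOD group we are not exporting.
        if unwanted.isdisjoint(parts):
            kept.append(path)
    return kept


outliner = [
    "|chair|high|seat_GEO",
    "|chair|high|legs_GEO",
    "|chair|low|chair_GEO",
    "|chair|controls_GRP",  # common to both LODs, so in no LOD group
]
high = nodes_for_representation(outliner, "high")
low = nodes_for_representation(outliner, "low")
```

In a real publisher the same filter would decide which groups to delete before exporting each representation; here it only returns the surviving paths.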

Note that I'm using "setdress" as terminology here; it's just the name I'm currently using for "grouped content" or "shot building content".

We've been playing around here with OpenMayaMPx.MPxFileTranslator for some time and had amazing results except for one crucial thing... it wasn't stable. Things like saving and reopening the scene with the "referenced" setdress would just crash Maya instantly. Very unfortunate.

- The reason we chose to investigate this route was because we were able to store the setdress data with just an alembic hierarchy (only groups, no mesh data or anything) and a .json file that would contain loaded instances that could be parented underneath the loaded hierarchy.

{

"representation": "database-id",

"loader": "ModelLoader",

"parent": "parent|in|alembic|hierarchy"

}

It would allow loading anything that supports an Avalon loader (e.g. the ModelLoader) and parent it under the setdress hierarchy.

- Another reason was that referencing the setdress would load a single reference in Maya. That is perfect, because if the setdress needs to be updated you could just replace the reference with the next version of the setdress and it would rebuild automatically. As a big bonus, it would support reference edits just like any reference.

- It would also support switching the "loader" at load time: say you wanted to swap the ModelLoader for the VRayMesh loader, the content would then be loaded in another format.
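To make the .json idea more concrete, here is a minimal sketch of how such entries might be resolved to loaders, including a load-time loader swap. Everything in it is an assumption for illustration: the two loader classes are stand-ins that only carry a format name, `build_setdress` and `swap_loaders` are hypothetical, and the file is assumed to hold a list of entries shaped like the one above. It does not touch Maya or the real Avalon loader API.

```python
import json


# Hypothetical stand-ins for loader plug-ins; real loaders would create
# nodes in Maya instead of just describing what they would load.
class ModelLoader:
    fmt = "abc"


class VRayMeshLoader:
    fmt = "vrmesh"


LOADERS = {cls.__name__: cls for cls in (ModelLoader, VRayMeshLoader)}


def build_setdress(entries, swap_loaders=None):
    """Resolve each entry to a loader and the group it is parented under.

    swap_loaders maps a published loader name to the one to use instead,
    chosen by the artist at load time.
    """
    swap_loaders = swap_loaders or {}
    loaded = []
    for entry in entries:
        name = swap_loaders.get(entry["loader"], entry["loader"])
        loader = LOADERS[name]  # KeyError if the loader is not registered
        loaded.append({
            "representation": entry["representation"],
            "format": loader.fmt,
            "parent": entry["parent"],
        })
    return loaded


entries = json.loads("""
[
  {"representation": "database-id-1", "loader": "ModelLoader",
   "parent": "|setdress|trees"},
  {"representation": "database-id-2", "loader": "ModelLoader",
   "parent": "|setdress|rocks"}
]
""")

as_published = build_setdress(entries)
as_vray = build_setdress(entries, swap_loaders={"ModelLoader": "VRayMeshLoader"})
```

The published data is never modified; the swap is purely a decision made by whoever loads the setdress.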

Nevertheless, with how unstable it ended up being... we'll likely drop investigating this workflow.

The specific instability we had was when the setdress contained the same asset more than once: somehow Maya (2018) was unable to "load" the same file twice inside MPxFileTranslator.reader() without crashing upon reopening. It would work perfectly upon referencing the setdress, also with saving, just not when reopening the file that had this setdress referenced inside it.

I tested with an implementation through Maya Python API in Autodesk Maya 2018.1

Here's an example MPxFileTranslator that crashes: https://gist.github.com/BigRoy/38ca6118c848091ef1c14229d5b4fad4

I have yet to find a method that provides these same benefits, where:

- You can reference a setdress, and afterwards change the version (because it's a single linked reference) and it would rebuild itself whilst supporting and preserving local reference edits!

- The artist who is loading the setdress could "override" what loader is used, e.g. he/she could decide to load it as VRayMesh instead of plain alembic inside maya.

- The artist who is loading the setdress could "override" the representation it is loading, as such change a version of a contained asset locally in the scene. For example swap: chair_v01 to chair_v02 without needing to republish the setdress to actually contain chair_v02.

- (Optional, but very nice feature) It would allow loading with any type of loader inside the setdress. It could even contain a ParticleLoader in the setdress.
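The local version override in the list above (chair_v01 to chair_v02 without republishing) could be modelled as a thin override layer on top of the published data. This is a sketch under assumptions: the instance-keyed dictionary layout and the `apply_local_overrides` name are invented here, and nothing about how the overrides would be stored in the Maya scene is implied.

```python
import copy


def apply_local_overrides(published, overrides):
    """Return the setdress as it should load in this scene.

    published: instance name -> entry, exactly as stored in the published
        setdress.
    overrides: instance name -> fields the artist changed locally.
    """
    resolved = copy.deepcopy(published)  # never mutate the published data
    for instance, changes in overrides.items():
        if instance not in resolved:
            raise KeyError("Unknown setdress instance: %s" % instance)
        resolved[instance].update(changes)
    return resolved


published = {
    "chair_01": {"asset": "chair", "version": 1},
    "table_01": {"asset": "table", "version": 3},
}
local = {"chair_01": {"version": 2}}  # swap chair_v01 for chair_v02 locally

resolved = apply_local_overrides(published, local)
```

Because the overrides are kept separate from the published entries, updating to a new setdress version could re-apply them, much like reference edits.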

We've taken a stab at this, even though it doesn't exactly cover all the points mentioned in this huge thread 😄

We have a basic framework that lets you specify various families, subsets, and their representations that should be loaded into the scene you're about to work in. For instance, you define that for any lighting task it will try to find any pointcaches, animations, cameras and so on and load them into the scene.

We're missing asset linking at this point but once that is up and running it would be able to bring all the linked assets into the shot as well.
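For readers who have not opened the PRs, the general shape of such a task-based preset might look like the sketch below. It is not taken from the pype code: `BUILD_PRESETS`, `subsets_to_load`, the family names and the sample subsets are all assumptions used to illustrate the idea.

```python
# Hypothetical presets: which families to pull into the scene per task.
BUILD_PRESETS = {
    "lighting": ["pointcache", "animation", "camera"],
    "animation": ["rig", "camera", "setdress"],
}


def subsets_to_load(task, published):
    """Filter the shot's published subsets down to what the task needs."""
    families = BUILD_PRESETS.get(task, [])
    return [subset for subset in published if subset["family"] in families]


# Stand-in for what a database query for the shot might return.
published = [
    {"name": "pointcacheHero", "family": "pointcache"},
    {"name": "cameraMain", "family": "camera"},
    {"name": "rigHero", "family": "rig"},
    {"name": "lookHero", "family": "look"},
]

for_lighting = subsets_to_load("lighting", published)
for_unknown_task = subsets_to_load("compositing", published)
```

A task without a preset simply loads nothing, which keeps the build button safe to press in any context.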

Have a look at the PRs here. It's hard to tell at this point how much of it is relevant for the core, but we'll find out soon enough, I think.

https://github.com/pypeclub/pype-config/pull/11 https://github.com/pypeclub/pype/pull/24

Ooo most interesting! Could you post a gif of what it looks like?

Currently nothing visually interesting :D . You press a button in the Avalon menu and voilà... all the required representations get loaded into the scene. No progress bar or UI right now. Just an MVP.