chainer-fast-neuralstyle-models

chainer-fast-neuralstyle-models copied to clipboard

chainer-fast-neuralstyle-models copied to clipboard

Model Request

Can anyone train a model using this style image?

I really like how it looks on Prisma (the app), and I don't think I have a powerful enough computer to train this model.

Thanks!

The closest we've currently got is Edtaonisl model. It's a similar artwork by the same artist. Check it out. I cannot help you out at the moment, since I already have a queue for styles I'd like to train.

I probably can after my current style is done with processing. Most likely over the weekend.

@gafr please note, whatever the size you're training, it's highly advised to set bicubic resampling when resizing like so: resize((256, 256), 2). This will likely give a more smooth result.

yes! thanks for the advice, I read it in the other thread as well, also I will likely switch to the most recent version of chainer-neuralstyle

Good idea. Curious to see comparison images once they're ready.

Has anyone had any luck with new models?

I've been experimenting mostly with different parameters / number of epochs of the same styles. Lowering total variation tends to produce more artifacts. Increasing epochs to 4 smooths out the dithering pattern and reduces artifacts, but the result isn't much different apart from that. Sometimes the second epoch looks even better. Few new styles didn't come out as good as the first ones, so i didn't bother sharing. Maybe @gafr has something to say?

I'm going to run a pretty intense training session on a very high-res version of starry night at hopefully 512px or better (anyone tried this?). I need any other advice people can impart as we all know it's going to take roughly 1 day to complete with the Amazon instance I'm working with.

Any advice at all, let me know!

@DylanAlloy if you use coco set and 512px on g2.2xlarge you'll finish about 40% of first epoch in 24h. Expect about 50-55h for 1 epoch on this configuration

@DylanAlloy A friend of mine has already trained starry night model. The results are so-so, depend on content image I guess. Or maybe we just didn't guess the right params. You'd be better off choosing smth more unique, with lots of small but better defined and pronounced details (harder edges). Some patterns like this or this might work rather well, or if you're not willing to risk, go for styles that are proven to work well in a variety of today's apps and services, or the one OP suggests

I'd also recommend you to use my fork for training, it has everything setup for quality by default, I made it a priority.

I'd also recommend you to use my fork for training, it has everything setup for quality by default, I made it a priority.

@6o6o That's fantastic output, it really picked up the strokes from Starry Night (that has been the problem in others using the smaller vgg file, I find).

I'll give that fork a try. Having an issue with the chainer implementation not finding nvcc in /usr/local/cuda-7.5/bin since cloning the newest version with the image_size arg so I have to find out why. I'll have some models uploaded in the time it takes to fix that + about 55 hours -___-

Feel free to link to the model for that starry night output.

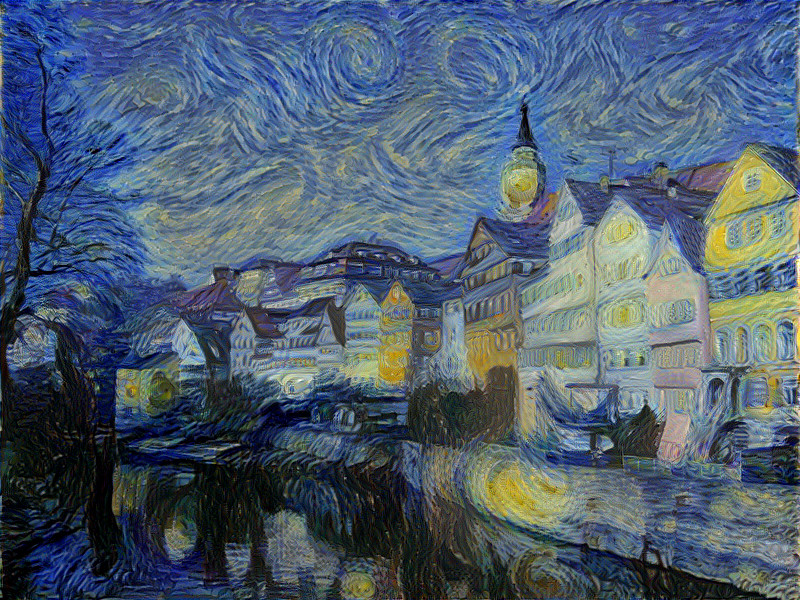

I appreciate your grateful response, but I disagree. That result is mediocre at best. It failed to pick up swirly patterns, the most distinctive feature of all Van Gogh paintings. This is what a good result should look like. Unfortunately, it's done with the slow implementation and I've been unable to reproduce it so far  Since I wasn't satisfied with the result, I didn't see the point of sharing it. I can do that if you wish, but need to ask my friend's permission first, since it's his work.

Since I wasn't satisfied with the result, I didn't see the point of sharing it. I can do that if you wish, but need to ask my friend's permission first, since it's his work.

I understand about asking for permission and I actually do agree with your criticism. I've also run the slow implementations (the ones that require 10 minutes to an hour) and they're much more accurate and they can certainly get the Van Gogh characteristics more reliably.

I remember the setup_model.sh being kind of sparing when it comes to how it takes from the model. Something like 10% of the convolution is kept, this seems to be important to get meta features like what we're talking about in the Van Gogh. My thought would be that a slightly larger model would assist in this speedy implementation?

Yeah, I don't think the full model would make a difference, since non-convolutional layers are irrelevant for this sort of task. Everybody do the stripping. What could be helpful is using higher or more layers when training like conv3_3, conv4_3 and / or switching to a deeper network for feature extraction, like VGG19. I'm not sure though how to achieve that.

Unfortunately, the friend refused since they're using it in an app, the users are sharing it and, as he says, it's their competitive advantage. Sorry about that.

No worries! I understand completely. I'll be researching how to use a deeper network like what some of the other repositories use.

@DylanAlloy @6o6o As the paper says, the quality of fast method is equal to about 100 iteartions of slow method.

Yes, but the point is, even on 100 iterations, the slow method is able to pick up much more complex, higher level features. Compare kandinsky transformation on the same picture, the slow and fast algos

@6o6o, love the model you provided, I wonder can you give some parameter you used to train the model?

Thanks. All at default

--image_size 512

--lambda_tv 10e-4

--lambda_feat 1e0

--lambda_style 1e1

--lr 1e-3

Plus, I used enhanced resampling implemented in my fork: 6o6o/chainer-fast-neuralstyle