wav2letter

How to decode using a pre-trained acoustic model and a customized 3-gram LM

I would like to apply the pre-trained model, which was trained on the LibriSpeech dataset, to my own custom data, changing only the language model and the lexicon file.

I used the SOTA acoustic model for dev-clean (LibriSpeech | TDS CTC clean) from "https://github.com/facebookresearch/wav2letter/tree/master/recipes/sota/2019". In addition, I followed the advice from @tlikhomanenko in "https://github.com/facebookresearch/wav2letter/issues/737#issuecomment-654621541" to create my new custom lexicon based on the SentencePiece model.

- Here is what the lexicon looks like:

!\exclamation-mark _exclamation po i n t
!\exclamation-mark _exclamation p o i nt
!\exclamation-mark _exclamation p o i n t
!\exclamation-mark _ex c lam ation po int
!\exclamation-mark _ex cla m ation po int
"\close-double-quotes _closed ou ble q u ot e
"\close-double-quotes _closed ou ble q u o te
"\close-double-quotes _close d ou ble q u ot e
"\close-double-quotes _closed o u ble q u ot e
"\close-double-quotes _closed ou ble q u o t e
"\close-double-quotes _closed o ub le q u ot e
PLC _ p l s e e
PLEDs _pe ee led e es
PLEDs _pe e el ed e es
PLEDs _pe ee led ee s
PLEDs _pe e e led e es
complicates _comp li c at es
complicates _comp l ic a tes
complicating _comp li cat ing
complicating _comp l ic ating
complicating _comp li c ating
complicating _comp li ca ting
›\close-angle-bracket _right ang l e bra ck ets
€\euro-sign _eu ro s ign

- Here is what my customized 3-gram ARPA language model looks like:

\data
ngram 1=51664
ngram 2=8394983
ngram 3=17809566

\1-grams:
-99.00000 0.000000
-99.00000 0.000000
-3.952232 !\exclamation-mark 0.152154
-4.227973 "\close-double-quotes -0.699384
-5.570467 "\double-quotes -0.320485
-4.293772 "\open-double-quotes 0.208263
-7.298170 .asia 0.000000
-6.156094 .at -0.180662

- Decoding flags file:

--lexicon=/var/data/customized_data-wordpiece-dts_ctc_web-10000-nbest10.lexicon
--tokensdir=/var/data/training/pretrained_models/w2l/model_from_website/tds_ctc/
--tokens=librispeech-train-all-unigram-10000.tokens
--am=/var/pretrained-model_from_w2l_website/tds_ctc/am_tds_ctc_librispeech_dev_clean.bin
--lm=/var/data//model_lm/customized_lm_3gram.arpa
--test=/var/data/lists/customized_data.lst
--listdata=true
--lmweight=0.67470637680685
--wordscore=0.62867952607587
--uselexicon=True
--decodertype=wrd
--lmtype=kenlm
--silscore=0
--beamsize=250
--beamsizetoken=100
--beamthreshold=100
--maxtsz=100000000000000
--mintsz=0
--maxisz=100000000000000
--minisz=0
--nthread_decoder=4
--smearing=max
--show=true
--input=wav

After decoding with this customized language model and the pre-trained acoustic model, the recognition is not good at all. However, when I used the same technique in the Kaldi Speech Recognition Toolkit to evaluate the same customized dataset, I got a very good recognition rate.

- Note: I used this pre-trained model to evaluate LibriSpeech test-clean with the 3-gram LM and got approximately 4% WER. I am wondering whether the new lexicon I created is correct (it contains special characters and capital letters, whereas the original lexicon contains only lowercase characters). I would really appreciate your advice.

- The original lexicon looks like:

a _a
a _ a
a'azam _a ' a za m
a'azam _a ' a z am
a'azam _a ' a z a m
a'azam _ a ' a za m
a'azam _ a ' a z am
a'azam _ a ' a z a m
a'll _a 'll
a'll _a ' ll
a'll _ a 'll
a'll _a ' l l
a'll _ a ' ll
a'll _ a ' l l
a'most _a ' most
a'most _a ' mo st
a'most _a ' mo s t
a'most _a ' m o st
a'most _ a ' most
a'most _a ' m os t
a'most _a ' m o s t
a'most _ a ' mo st
a'most _ a ' mo s t

- The original 3-gram ARPA language model looks like:

\data
ngram 1=200003
ngram 2=38229161
ngram 3=49712290

\1-grams:
-2.348754
-2.752519 <UNK> -0.9697837
-99 -2.408548
-2.619969 A -1.56262
-7.211563 A''S -0.1495221
-6.221141 A'BODY -0.2521624
-6.583487 A'COURT -0.1844242
-6.240468 A'D -0.2419162
-7.108924 A'GHA -0.1740987
-6.260695 A'GOIN -0.407374
-5.804425 A'LL -0.3104859
-5.638036 A'M -0.2983787
-6.221141 A'MIGHTY -0.2186911
-6.885612 A'MIGHTY'S -0.1800996
-4.996963 A'MOST -0.4291549
-5.247992 A'N'T -0.4541333
-6.68067 A'PENNY -0.1663875
-5.518031 A'READY -0.3329575
-6.202638 A'RIGHT -0.3302211

Since the lexicon maps words to sequences of AM tokens in order to restrict the search in the beam-search decoder, your lexicon file should map words only to sequences of tokens from librispeech-train-all-unigram-10000.tokens. Also, your LM should use the same words as your lexicon, so you need to use either uppercase or lowercase in both.
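Both requirements can be checked before decoding. The following is a minimal Python sketch, not a wav2letter tool: it assumes whitespace-separated lexicon lines (a word followed by its tokens), a tokens file with one token per line, and a text ARPA LM (it cannot read a KenLM binary). The script name check_lexicon.py and all function names are hypothetical.

```python
import sys
from typing import Dict, Iterable, List, Set, Tuple

Lexicon = Dict[str, List[List[str]]]


def parse_tokens(lines: Iterable[str]) -> Set[str]:
    """Token set: one token per line, e.g. librispeech-train-all-unigram-10000.tokens."""
    return {line.strip() for line in lines if line.strip()}


def parse_lexicon(lines: Iterable[str]) -> Lexicon:
    """'word tok1 tok2 ...' per line; a word may have several spellings (n-best)."""
    lexicon: Lexicon = {}
    for line in lines:
        parts = line.split()
        if len(parts) >= 2:
            lexicon.setdefault(parts[0], []).append(parts[1:])
    return lexicon


def parse_arpa_unigrams(lines: Iterable[str]) -> Set[str]:
    """Words of the \\1-grams: section of a text ARPA file (second field of each entry)."""
    words: Set[str] = set()
    in_unigrams = False
    for line in lines:
        line = line.strip()
        if line.startswith("\\"):
            # Section headers such as \data\, \1-grams:, \2-grams:, \end\
            in_unigrams = line == "\\1-grams:"
        elif in_unigrams:
            fields = line.split()
            if len(fields) >= 2:
                words.add(fields[1])
    return words


def check(lexicon: Lexicon, tokens: Set[str], lm_words: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (tokens used in the lexicon but missing from the token set,
    lexicon words missing from the LM vocabulary)."""
    unknown_tokens = sorted({tok for spellings in lexicon.values()
                             for spelling in spellings for tok in spelling
                             if tok not in tokens})
    words_not_in_lm = sorted(word for word in lexicon if word not in lm_words)
    return unknown_tokens, words_not_in_lm


if __name__ == "__main__" and len(sys.argv) == 4:
    # Hypothetical usage: python check_lexicon.py LEXICON TOKENS LM.arpa
    lex_path, tok_path, arpa_path = sys.argv[1:4]
    with open(lex_path, encoding="utf-8") as f:
        lexicon = parse_lexicon(f)
    with open(tok_path, encoding="utf-8") as f:
        tokens = parse_tokens(f)
    with open(arpa_path, encoding="utf-8") as f:
        lm_words = parse_arpa_unigrams(f)
    unknown, missing = check(lexicon, tokens, lm_words)
    print(f"{len(unknown)} tokens not in the token set, e.g. {unknown[:10]}")
    print(f"{len(missing)} lexicon words not in the LM, e.g. {missing[:10]}")
```

With a lowercase lexicon and an uppercase LM such as the original LibriSpeech 3-gram, the second list would contain essentially every lexicon word.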

Thanks for your reply, I really appreciate it.

I made a mistake when I decoded the LibriSpeech test-clean dataset. The 3-gram.arpa has to be converted to lowercase first and then converted to binary format, so that it matches the (lowercase) words in the lexicon.
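The lowercasing step can be sketched in Python as follows. This is an illustration under assumptions, not the exact procedure used here: it streams a text ARPA file and lowercases every line (section headers, <s> and </s> are already lowercase, and <UNK> becomes <unk>). It also reports words that collide after lowercasing, because two entries that differed only in case would become duplicates; checking the unigram section is enough, since every word of a higher-order n-gram must also appear as a unigram. The function name lowercase_arpa is hypothetical.

```python
import sys
from typing import Iterable, Iterator, List


def lowercase_arpa(lines: Iterable[str], collisions: List[str]) -> Iterator[str]:
    """Yield every ARPA line lowercased.

    Unigrams that collide after lowercasing are appended to `collisions`
    as the generator is consumed.
    """
    seen = set()
    in_unigrams = False
    for line in lines:
        low = line.lower()
        stripped = low.strip()
        if stripped.startswith("\\"):
            # \data\, \1-grams:, \2-grams:, ..., \end\
            in_unigrams = stripped == "\\1-grams:"
        elif in_unigrams:
            fields = stripped.split()
            if len(fields) >= 2:
                if fields[1] in seen:
                    collisions.append(fields[1])
                seen.add(fields[1])
        yield low


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python lowercase_arpa.py 3-gram.arpa lower.arpa
    src_path, dst_path = sys.argv[1:3]
    collisions: List[str] = []
    with open(src_path, encoding="utf-8") as src, \
            open(dst_path, "w", encoding="utf-8") as dst:
        dst.writelines(lowercase_arpa(src, collisions))  # streams; no full copy in memory
    for word in collisions:
        print(f"case collision after lowercasing: {word}", file=sys.stderr)
```

The lowercased ARPA file can then be converted with KenLM's build_binary tool, for example build_binary lower.arpa lower.bin.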

- Result with the wrong LM (3-gram.arpa): [Decode /var/data/librispeech/lists/test-clean.lst (2616 samples) in 712.07s (actual decoding time 2.16s/sample) -- WER: 6.97887, LER: 1.4074]
- Result with the right LM (3-gram.bin): [Decode /var/data/librispeech/lists/test-clean.lst (2616 samples) in 679.095s (actual decoding time 2.07s/sample) -- WER: 3.2862, LER: 1.50971]

Here is an example of what I did in the lexicon to improve the recognition of one word. I used the SentencePiece tools and the WP token model to generate the lexicon. The word "unremarkable" is not recognized at all in my test set. Here is the part of the customized lexicon, generated with the WP tools, for the word "unremarkable":

unremarkable _unre mark able
unremarkable _un re mark able
unremarkable _unre ma rk able
unremarkable _unre mar k able
unremarkable _unre mark a ble
unremarkable _unre mark ab le
unremarkable _unre mar ka ble
unremarkable _un r e mark able
unremarkable _unre ma r k able
unremarkable _unre m ar k able
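For reference, an n-best word-piece lexicon like the one above can be generated with the SentencePiece Python package. This is a sketch, not the exact tool used here: it assumes the sentencepiece package (SentencePieceProcessor.Load and NBestEncodeAsPieces), replaces SentencePiece's word-start marker U+2581 with the "_" used in the wav2letter token list, drops duplicate spellings, and writes tab-separated "word spelling" lines. The words should already be in the same case as the LM. The function name lexicon_lines is hypothetical, and the encoder is passed in as a function so the formatting logic can be tested without a model file.

```python
import sys
from typing import Callable, Iterable, List

# Encoder signature: (word, nbest) -> list of piece sequences, best first.
NBestEncoder = Callable[[str, int], List[List[str]]]


def lexicon_lines(words: Iterable[str], encode: NBestEncoder, nbest: int = 10) -> List[str]:
    """Build 'word<TAB>piece piece ...' lines with up to `nbest` distinct spellings per word."""
    lines: List[str] = []
    for word in words:
        seen = set()
        for pieces in encode(word, nbest):
            # SentencePiece marks the start of a word with U+2581;
            # the wav2letter token list uses "_" instead.
            spelling = " ".join(p.replace("\u2581", "_") for p in pieces)
            if spelling and spelling not in seen:
                seen.add(spelling)
                lines.append(f"{word}\t{spelling}")
    return lines


if __name__ == "__main__" and len(sys.argv) == 3:
    import sentencepiece as spm  # assumption: the `sentencepiece` pip package

    model_path, words_path = sys.argv[1:3]  # WP model file, one word per line
    sp = spm.SentencePieceProcessor()
    sp.Load(model_path)
    with open(words_path, encoding="utf-8") as f:
        words = [w.strip() for w in f if w.strip()]
    for entry in lexicon_lines(words, sp.NBestEncodeAsPieces, nbest=10):
        print(entry)
```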

- By adding the line "unremarkable _un _remarkable" to the lexicon (the tokens _un and _remarkable already exist in the token list "librispeech-train-all-unigram-10000.tokens"), the recognition of the word "unremarkable" improved from 0% to 12 out of its 25 occurrences in my test set. It was always recognized as "remarkable" instead of "unremarkable". Question 1: I would like your advice, because I am a little confused: I used two underscore tokens to map one word; is that okay? Note: I deactivated the --wordseparator flag in the decoding file.

Question 2: Some tokens exist in the token list in two different forms (a token with a leading underscore vs. the same token without it), for example _line vs. line. In my opinion these are similar and may confuse the overall system.

Question 3: Is it better to use a phonetic lexicon instead of a letter-based or word-piece lexicon in my case? (In Kaldi I used only letter-based and phonetic lexicons and got better results.) Do you think the problem with W2L in my case is caused by the acoustic model having been trained with a WP lexicon, or by using this deterministic WP lexicon?

- You should not deactivate --wordseparator. Each underscore marks a boundary between words, which is why "remarkable" will still be emitted as a separate word. You need to set its transcription to "_un remarkable", or simply apply the word-piece model to the word to get its WP sequence.

- _line means that you start a new word, while "line" is just a continuation of a word, so it can only appear in the middle or at the end of a word.

- Hard to say; you need to experiment. The problem could also be that your data has a different lexicon than LibriSpeech, so the WPs are not appropriate for your data. I would try to train a letter-based AM.
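The word-boundary rule from the replies above can be illustrated with a small Python sketch. It is a simplified model of how word-piece tokens group into words, not the wav2letter decoder's code: a token that starts with "_" (such as _line) opens a new word, and a token without it (such as line) extends the current word. The function name tokens_to_words is hypothetical.

```python
from typing import List, Sequence


def tokens_to_words(tokens: Sequence[str], boundary: str = "_") -> List[str]:
    """Group word-piece tokens into words.

    A token starting with `boundary` (e.g. "_line") opens a new word; a token
    without it (e.g. "line") continues the current word.
    """
    words: List[str] = []
    for tok in tokens:
        if tok.startswith(boundary):
            words.append(tok[len(boundary):])
        elif words:
            words[-1] += tok
        else:
            words.append(tok)  # no preceding boundary: treat as a word start
    return [w for w in words if w]  # drop empties from a bare "_" with nothing after it
```

Under this rule, the spelling "_un _remarkable" yields two words, "un" and "remarkable", while "_un remarkable" yields the single word "unremarkable".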

Thanks for your reply.

1- The "remarkable" token appears in the token list only with an underscore, like this: (_unremarkable). When I used the WP model to get the sub-word sequences (with nbest = 10, as in my previous comment), the word was not recognized anywhere in my test set. I am wondering why it performed better when I split the word this way (_un _remarkable) in the lexicon.

2- Do you think the similarity between two tokens in the token list (one with a leading underscore and one without) might confuse the DNN when predicting the right label (class), or will this issue be handled later during beam-search decoding?

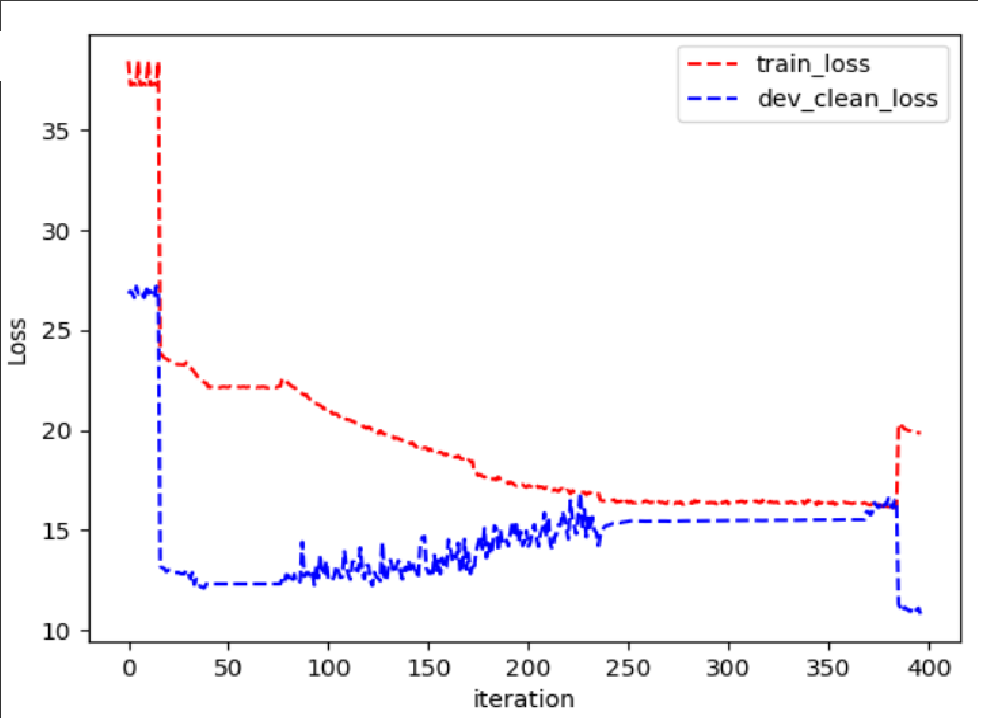

3- I am training on the 460-hour LibriSpeech subset with a phonetic lexicon (streaming convnets and TDS CTC), but the training loss reaches 16.5 and does not decrease any further (after 120 epochs). I am using a different token set than the original lexicon (https://www.openslr.org/resources/11/librispeech-lexicon.txt), because its tokens are simpler.

-- The phonetic lexicon looks like this:

a ah
a ax
a ey
a'azam aa z aa m
a'azam ae aa z aa m
a'azam aa z ae m
a'azam aa z ax m
a'll ae l
a'll ae ax l
a'll ey l
a'll ax l
a'most b ax l ey m ow s t
a'most ax l ey m ow s t
a'most ae m ow s t
a'most ey m ow s t
a'ready ae r eh d iy
a'ready b ax l ey r eh d iy
a'ready ax l ey r eh d iy

-- The phonetic tokens look like this: ay uw aw oy jh dh ch p hh zh uh |

I think my next step will be to train those two models with a letter-based lexicon, as you suggested in your previous comment, and I will let you know whether this helps to create a generic acoustic model. Thanks in advance.
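A letter-based lexicon can be generated directly from a word list. This is a minimal sketch under stated assumptions: it assumes the convention of the wav2letter letter-based recipes, where each word is spelled letter by letter and followed by the word-separator token "|", and it assumes the token set is a to z, the apostrophe and "|". Whether the trailing "|" belongs in the lexicon depends on how --wordseparator and --surround are set, so check it against the recipe you train with. The function names letter_lexicon and letter_tokens are hypothetical.

```python
from typing import Iterable, List

# Assumed letter token set; "|" is the word-separator token.
LETTERS = set("abcdefghijklmnopqrstuvwxyz'")


def letter_lexicon(words: Iterable[str], separator: str = "|") -> List[str]:
    """Return 'word<TAB>l e t t e r s |' lines for lowercased, deduplicated words."""
    lines: List[str] = []
    seen = set()
    for word in words:
        w = word.strip().lower()
        if not w or w in seen:
            continue
        if any(c not in LETTERS for c in w):
            continue  # skip words containing characters outside the token set
        seen.add(w)
        lines.append(f"{w}\t{' '.join(w)} {separator}")
    return lines


def letter_tokens(separator: str = "|") -> List[str]:
    """Tokens file content: the separator followed by the sorted letters."""
    return [separator] + sorted(LETTERS)
```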

Hello @tlikhomanenko, regarding question 3: I tried to train the SOTA TDS CTC model on the LibriSpeech dataset with a letter-based lexicon, but after 120 epochs the loss still does not decrease. Flags file:

--runname=/var/data/training/w2l/sota_2019/tds_ctc/am/tds_streaming_librispeech_graphene
--tag=001LR_00M_6BS_0R_M
--train=/var/data/en/LibriSpeech/lists/train-clean-100.lst,/mnt/data/en/LibriSpeech/lists/train-clean-360.lst
--valid=dev-clean:/var/data/en/LibriSpeech/lists/dev-clean.lst,dev-other:/var/data/en/LibriSpeech/lists/dev-other.lst
--lexicon=/mnt/data/training/w2l/sota_2019/tds_ctc/token_lexicon/graphene/lexicon.txt
--arch=/var/data/training/w2l/sota_2019/tds_ctc/am/am_tds_ctc.arch
--tokens=/var/data/training/w2l/sota_2019/tds_ctc/token_lexicon/graphene/tokens.txt
--surround=|
--criterion=ctc
--batchsize=6
--lrcosine=false
--lr=0.05
#--lrcrit=0.05
--momentum=0.5
--nthread=8
--mfsc=true
--enable_distributed=true
--logtostderr
--onorm=target
--sqnorm
--gamma=1.0
--showletters=true
--input=flac
#--warmup=42000

Log:

epoch: 1 | nupdates: 23437 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:42 | bch(ms): 572.70 | smp(ms): 1.28 | fwd(ms): 73.02 | crit-fwd(ms): 2.53 | bwd(ms): 480.26 | optim(ms): 17.66 | loss: 33.32994 | train-TER: 81.54 | train-WER: 104.56 | dev-clean-loss: 23.57488 | dev-clean-TER: 100.00 | dev-clean-WER: 100.00 | dev-other-loss: 22.34571 | dev-other-TER: 100.00 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.30
epoch: 2 | nupdates: 46874 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:44:01 | bch(ms): 573.50 | smp(ms): 1.14 | fwd(ms): 72.94 | crit-fwd(ms): 2.53 | bwd(ms): 481.18 | optim(ms): 17.66 | loss: 32.12797 | train-TER: 99.84 | train-WER: 100.00 | dev-clean-loss: 23.47649 | dev-clean-TER: 100.00 | dev-clean-WER: 100.00 | dev-other-loss: 22.26289 | dev-other-TER: 100.00 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 256.95
epoch: 3 | nupdates: 70311 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:32 | bch(ms): 572.29 | smp(ms): 0.92 | fwd(ms): 72.99 | crit-fwd(ms): 2.53 | bwd(ms): 480.25 | optim(ms): 17.69 | loss: 32.03925 | train-TER: 100.00 | train-WER: 100.00 | dev-clean-loss: 23.45988 | dev-clean-TER: 100.00 | dev-clean-WER: 100.00 | dev-other-loss: 22.24865 | dev-other-TER: 100.00 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.49
epoch: 4 | nupdates: 93748 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:18 | bch(ms): 571.70 | smp(ms): 0.38 | fwd(ms): 73.01 | crit-fwd(ms): 2.53 | bwd(ms): 480.19 | optim(ms): 17.67 | loss: 32.01485 | train-TER: 100.00 | train-WER: 100.00 | dev-clean-loss: 23.45763 | dev-clean-TER: 100.00 | dev-clean-WER: 100.00 | dev-other-loss: 22.24861 | dev-other-TER: 100.00 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.76
epoch: 5 | nupdates: 117185 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:15 | bch(ms): 571.57 | smp(ms): 0.38 | fwd(ms): 72.91 | crit-fwd(ms): 2.52 | bwd(ms): 480.10 | optim(ms): 17.72 | loss: 32.00486 | train-TER: 100.00 | train-WER: 100.00 | dev-clean-loss: 23.44267 | dev-clean-TER: 100.00 | dev-clean-WER: 100.00 | dev-other-loss: 22.23417 | dev-other-TER: 100.00 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.82
epoch: 6 | nupdates: 140622 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:25 | bch(ms): 571.98 | smp(ms): 0.55 | fwd(ms): 72.97 | crit-fwd(ms): 2.53 | bwd(ms): 480.24 | optim(ms): 17.76 | loss: 31.99468 | train-TER: 100.00 | train-WER: 100.00 | dev-clean-loss: 23.43595 | dev-clean-TER: 99.59 | dev-clean-WER: 100.00 | dev-other-loss: 22.22929 | dev-other-TER: 99.53 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.63
epoch: 7 | nupdates: 164059 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:51 | bch(ms): 573.08 | smp(ms): 1.37 | fwd(ms): 72.95 | crit-fwd(ms): 2.53 | bwd(ms): 480.54 | optim(ms): 17.75 | loss: 31.98220 | train-TER: 100.00 | train-WER: 100.00 | dev-clean-loss: 23.42166 | dev-clean-TER: 98.28 | dev-clean-WER: 100.00 | dev-other-loss: 22.21485 | dev-other-TER: 98.15 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.13
epoch: 8 | nupdates: 187496 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:39 | bch(ms): 572.59 | smp(ms): 0.76 | fwd(ms): 72.95 | crit-fwd(ms): 2.53 | bwd(ms): 480.61 | optim(ms): 17.73 | loss: 31.96635 | train-TER: 100.00 | train-WER: 100.00 | dev-clean-loss: 23.40924 | dev-clean-TER: 96.98 | dev-clean-WER: 100.00 | dev-other-loss: 22.19953 | dev-other-TER: 96.50 | dev-other-WER: 100.00 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.36
epoch: 9 | nupdates: 210933 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:57 | bch(ms): 573.33 | smp(ms): 1.43 | fwd(ms): 72.96 | crit-fwd(ms): 2.53 | bwd(ms): 480.72 | optim(ms): 17.74 | loss: 31.94611 | train-TER: 99.99 | train-WER: 100.00 | dev-clean-loss: 23.38037 | dev-clean-TER: 96.24 | dev-clean-WER: 100.01 | dev-other-loss: 22.16496 | dev-other-TER: 95.61 | dev-other-WER: 100.01 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.02
epoch: 10 | nupdates: 234370 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:48 | bch(ms): 572.98 | smp(ms): 1.41 | fwd(ms): 72.83 | crit-fwd(ms): 2.53 | bwd(ms): 480.58 | optim(ms): 17.76 | loss: 31.92239 | train-TER: 99.97 | train-WER: 100.00 | dev-clean-loss: 23.35248 | dev-clean-TER: 94.97 | dev-clean-WER: 99.07 | dev-other-loss: 22.13149 | dev-other-TER: 94.48 | dev-other-WER: 98.95 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.18
epoch: 11 | nupdates: 257807 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 03:43:27 | bch(ms): 572.06 | smp(ms): 0.38 | fwd(ms): 72.85 | crit-fwd(ms): 2.52 | bwd(ms): 480.63 | optim(ms): 17.75 | loss: 31.89829 | train-TER: 99.71 | train-WER: 99.99 | dev-clean-loss: 23.32828 | dev-clean-TER: 96.08 | dev-clean-WER: 99.06 | dev-other-loss: 22.10160 | dev-other-TER: 95.63 | dev-other-WER: 98.93 | avg-isz: 1227 | avg-tsz: 212 | max-tsz: 484 | hrs: 959.35 | thrpt(sec/sec): 257.59

-- Changing LR to 0.001:
epoch: 12 | nupdates: 279900 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:34:03 | bch(ms): 255.44 | smp(ms): 0.41 | fwd(ms): 72.60 | crit-fwd(ms): 2.57 | bwd(ms): 165.34 | optim(ms): 16.97 | loss: 32.26816 | train-TER: 99.28 | train-WER: 99.97 | dev-clean-loss: 23.31834 | dev-clean-TER: 96.47 | dev-clean-WER: 99.13 | dev-other-loss: 22.09271 | dev-other-TER: 96.03 | dev-other-WER: 98.99 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.63
epoch: 13 | nupdates: 301993 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:34:11 | bch(ms): 255.82 | smp(ms): 0.42 | fwd(ms): 72.67 | crit-fwd(ms): 2.58 | bwd(ms): 165.57 | optim(ms): 17.03 | loss: 32.25658 | train-TER: 98.92 | train-WER: 99.94 | dev-clean-loss: 23.30659 | dev-clean-TER: 96.59 | dev-clean-WER: 99.26 | dev-other-loss: 22.07969 | dev-other-TER: 96.14 | dev-other-WER: 99.15 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.19
epoch: 14 | nupdates: 313039 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:45 | bch(ms): 574.47 | smp(ms): 0.39 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.43 | optim(ms): 17.63 | loss: 32.24838 | train-TER: 98.80 | train-WER: 99.96 | dev-clean-loss: 23.29345 | dev-clean-TER: 96.30 | dev-clean-WER: 99.92 | dev-other-loss: 22.06670 | dev-other-TER: 95.91 | dev-other-WER: 99.88 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.88
epoch: 15 | nupdates: 324085 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:46 | bch(ms): 574.57 | smp(ms): 0.41 | fwd(ms): 74.55 | crit-fwd(ms): 2.57 | bwd(ms): 481.54 | optim(ms): 17.62 | loss: 32.24162 | train-TER: 98.76 | train-WER: 99.96 | dev-clean-loss: 23.28261 | dev-clean-TER: 96.35 | dev-clean-WER: 100.00 | dev-other-loss: 22.05511 | dev-other-TER: 96.00 | dev-other-WER: 99.99 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.84
epoch: 16 | nupdates: 335131 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:47 | bch(ms): 574.63 | smp(ms): 0.39 | fwd(ms): 74.49 | crit-fwd(ms): 2.57 | bwd(ms): 481.62 | optim(ms): 17.67 | loss: 32.23548 | train-TER: 98.68 | train-WER: 99.93 | dev-clean-loss: 23.26952 | dev-clean-TER: 96.57 | dev-clean-WER: 100.00 | dev-other-loss: 22.04149 | dev-other-TER: 96.28 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.81
epoch: 17 | nupdates: 346177 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.85 | smp(ms): 0.40 | fwd(ms): 74.50 | crit-fwd(ms): 2.57 | bwd(ms): 481.85 | optim(ms): 17.65 | loss: 32.22884 | train-TER: 98.67 | train-WER: 99.93 | dev-clean-loss: 23.26252 | dev-clean-TER: 96.76 | dev-clean-WER: 100.00 | dev-other-loss: 22.03384 | dev-other-TER: 96.53 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.71
epoch: 18 | nupdates: 357223 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.84 | smp(ms): 0.39 | fwd(ms): 74.52 | crit-fwd(ms): 2.57 | bwd(ms): 481.80 | optim(ms): 17.67 | loss: 32.22292 | train-TER: 98.69 | train-WER: 99.94 | dev-clean-loss: 23.25410 | dev-clean-TER: 96.92 | dev-clean-WER: 100.00 | dev-other-loss: 22.02613 | dev-other-TER: 96.75 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.72
epoch: 19 | nupdates: 368269 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.85 | smp(ms): 0.39 | fwd(ms): 74.50 | crit-fwd(ms): 2.57 | bwd(ms): 481.86 | optim(ms): 17.64 | loss: 32.21711 | train-TER: 98.67 | train-WER: 99.90 | dev-clean-loss: 23.24680 | dev-clean-TER: 97.20 | dev-clean-WER: 100.00 | dev-other-loss: 22.01814 | dev-other-TER: 97.08 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.71
epoch: 20 | nupdates: 379315 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:48 | bch(ms): 574.70 | smp(ms): 0.39 | fwd(ms): 74.48 | crit-fwd(ms): 2.57 | bwd(ms): 481.68 | optim(ms): 17.69 | loss: 32.21237 | train-TER: 98.68 | train-WER: 99.95 | dev-clean-loss: 23.24048 | dev-clean-TER: 97.38 | dev-clean-WER: 100.00 | dev-other-loss: 22.01091 | dev-other-TER: 97.25 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.78
epoch: 21 | nupdates: 390361 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.92 | smp(ms): 0.39 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.85 | optim(ms): 17.69 | loss: 32.20784 | train-TER: 98.69 | train-WER: 99.92 | dev-clean-loss: 23.23783 | dev-clean-TER: 97.44 | dev-clean-WER: 100.00 | dev-other-loss: 22.00898 | dev-other-TER: 97.30 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.68
epoch: 22 | nupdates: 401407 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.94 | smp(ms): 0.39 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.82 | optim(ms): 17.73 | loss: 32.20394 | train-TER: 98.70 | train-WER: 99.91 | dev-clean-loss: 23.23212 | dev-clean-TER: 97.62 | dev-clean-WER: 100.00 | dev-other-loss: 22.00192 | dev-other-TER: 97.47 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.67
epoch: 23 | nupdates: 412453 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.78 | smp(ms): 0.39 | fwd(ms): 74.53 | crit-fwd(ms): 2.57 | bwd(ms): 481.72 | optim(ms): 17.68 | loss: 32.20091 | train-TER: 98.71 | train-WER: 99.90 | dev-clean-loss: 23.23087 | dev-clean-TER: 97.51 | dev-clean-WER: 100.00 | dev-other-loss: 22.00039 | dev-other-TER: 97.37 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.74
epoch: 24 | nupdates: 423499 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:51 | bch(ms): 575.01 | smp(ms): 0.38 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.89 | optim(ms): 17.74 | loss: 32.19834 | train-TER: 98.71 | train-WER: 99.89 | dev-clean-loss: 23.22733 | dev-clean-TER: 97.67 | dev-clean-WER: 100.00 | dev-other-loss: 21.99540 | dev-other-TER: 97.50 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.64
epoch: 25 | nupdates: 434545 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.91 | smp(ms): 0.39 | fwd(ms): 74.51 | crit-fwd(ms): 2.57 | bwd(ms): 481.84 | optim(ms): 17.71 | loss: 32.19604 | train-TER: 98.72 | train-WER: 99.87 | dev-clean-loss: 23.22579 | dev-clean-TER: 97.67 | dev-clean-WER: 100.00 | dev-other-loss: 21.99502 | dev-other-TER: 97.50 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.69
epoch: 26 | nupdates: 445591 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.89 | smp(ms): 0.39 | fwd(ms): 74.49 | crit-fwd(ms): 2.57 | bwd(ms): 481.82 | optim(ms): 17.73 | loss: 32.19419 | train-TER: 98.69 | train-WER: 99.86 | dev-clean-loss: 23.22600 | dev-clean-TER: 97.56 | dev-clean-WER: 100.00 | dev-other-loss: 21.99595 | dev-other-TER: 97.41 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.69
epoch: 27 | nupdates: 456637 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:45 | bch(ms): 574.46 | smp(ms): 0.39 | fwd(ms): 74.52 | crit-fwd(ms): 2.57 | bwd(ms): 481.39 | optim(ms): 17.70 | loss: 32.19245 | train-TER: 98.69 | train-WER: 99.86 | dev-clean-loss: 23.22276 | dev-clean-TER: 97.62 | dev-clean-WER: 100.00 | dev-other-loss: 21.99202 | dev-other-TER: 97.45 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.89
epoch: 28 | nupdates: 467683 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:57 | bch(ms): 575.55 | smp(ms): 0.90 | fwd(ms): 74.62 | crit-fwd(ms): 2.57 | bwd(ms): 481.86 | optim(ms): 17.74 | loss: 32.19093 | train-TER: 98.68 | train-WER: 99.85 | dev-clean-loss: 23.22073 | dev-clean-TER: 97.59 | dev-clean-WER: 100.00 | dev-other-loss: 21.98951 | dev-other-TER: 97.43 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.39
epoch: 29 | nupdates: 478729 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:53 | bch(ms): 575.14 | smp(ms): 0.54 | fwd(ms): 74.48 | crit-fwd(ms): 2.57 | bwd(ms): 481.92 | optim(ms): 17.73 | loss: 32.18969 | train-TER: 98.67 | train-WER: 99.84 | dev-clean-loss: 23.22065 | dev-clean-TER: 97.58 | dev-clean-WER: 100.00 | dev-other-loss: 21.98904 | dev-other-TER: 97.42 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.58
epoch: 30 | nupdates: 489775 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:51 | bch(ms): 574.99 | smp(ms): 0.39 | fwd(ms): 74.48 | crit-fwd(ms): 2.57 | bwd(ms): 481.93 | optim(ms): 17.73 | loss: 32.18857 | train-TER: 98.66 | train-WER: 99.84 | dev-clean-loss: 23.21835 | dev-clean-TER: 97.63 | dev-clean-WER: 100.00 | dev-other-loss: 21.98689 | dev-other-TER: 97.46 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.65
epoch: 31 | nupdates: 500821 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.89 | smp(ms): 0.39 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.76 | optim(ms): 17.73 | loss: 32.18749 | train-TER: 98.65 | train-WER: 99.86 | dev-clean-loss: 23.21790 | dev-clean-TER: 97.57 | dev-clean-WER: 100.00 | dev-other-loss: 21.98544 | dev-other-TER: 97.42 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.69
epoch: 32 | nupdates: 511867 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:48 | bch(ms): 574.76 | smp(ms): 0.41 | fwd(ms): 74.51 | crit-fwd(ms): 2.57 | bwd(ms): 481.65 | optim(ms): 17.72 | loss: 32.18668 | train-TER: 98.62 | train-WER: 99.84 | dev-clean-loss: 23.21735 | dev-clean-TER: 97.49 | dev-clean-WER: 100.00 | dev-other-loss: 21.98562 | dev-other-TER: 97.34 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.75
epoch: 33 | nupdates: 522913 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:46:03 | bch(ms): 576.08 | smp(ms): 1.50 | fwd(ms): 74.60 | crit-fwd(ms): 2.57 | bwd(ms): 481.83 | optim(ms): 17.73 | loss: 32.18611 | train-TER: 98.61 | train-WER: 99.84 | dev-clean-loss: 23.21653 | dev-clean-TER: 97.61 | dev-clean-WER: 100.00 | dev-other-loss: 21.98328 | dev-other-TER: 97.46 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.15
epoch: 34 | nupdates: 533959 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:48 | bch(ms): 574.75 | smp(ms): 0.39 | fwd(ms): 74.52 | crit-fwd(ms): 2.57 | bwd(ms): 481.65 | optim(ms): 17.73 | loss: 32.18530 | train-TER: 98.61 | train-WER: 99.83 | dev-clean-loss: 23.21458 | dev-clean-TER: 97.63 | dev-clean-WER: 99.99 | dev-other-loss: 21.98136 | dev-other-TER: 97.48 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.76
epoch: 35 | nupdates: 545005 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.91 | smp(ms): 0.39 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.79 | optim(ms): 17.72 | loss: 32.18474 | train-TER: 98.61 | train-WER: 99.82 | dev-clean-loss: 23.21295 | dev-clean-TER: 97.68 | dev-clean-WER: 99.99 | dev-other-loss: 21.97871 | dev-other-TER: 97.52 | dev-other-WER: 99.97 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.68
epoch: 36 | nupdates: 556051 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.78 | smp(ms): 0.38 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.67 | optim(ms): 17.73 | loss: 32.18419 | train-TER: 98.58 | train-WER: 99.83 | dev-clean-loss: 23.21493 | dev-clean-TER: 97.51 | dev-clean-WER: 100.00 | dev-other-loss: 21.98204 | dev-other-TER: 97.38 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.74
epoch: 37 | nupdates: 567097 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:47 | bch(ms): 574.62 | smp(ms): 0.39 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.52 | optim(ms): 17.71 | loss: 32.18374 | train-TER: 98.61 | train-WER: 99.84 | dev-clean-loss: 23.21372 | dev-clean-TER: 97.59 | dev-clean-WER: 99.99 | dev-other-loss: 21.98096 | dev-other-TER: 97.45 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.82
epoch: 38 | nupdates: 578143 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:47 | bch(ms): 574.67 | smp(ms): 0.39 | fwd(ms): 74.58 | crit-fwd(ms): 2.57 | bwd(ms): 481.54 | optim(ms): 17.70 | loss: 32.18313 | train-TER: 98.57 | train-WER: 99.84 | dev-clean-loss: 23.21232 | dev-clean-TER: 97.65 | dev-clean-WER: 99.98 | dev-other-loss: 21.97973 | dev-other-TER: 97.50 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.80
epoch: 39 | nupdates: 589189 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:47 | bch(ms): 574.63 | smp(ms): 0.38 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.51 | optim(ms): 17.74 | loss: 32.18277 | train-TER: 98.58 | train-WER: 99.81 | dev-clean-loss: 23.21204 | dev-clean-TER: 97.57 | dev-clean-WER: 99.99 | dev-other-loss: 21.97894 | dev-other-TER: 97.43 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.81
epoch: 40 | nupdates: 600235 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:48 | bch(ms): 574.76 | smp(ms): 0.39 | fwd(ms): 74.55 | crit-fwd(ms): 2.57 | bwd(ms): 481.67 | optim(ms): 17.70 | loss: 32.18251 | train-TER: 98.57 | train-WER: 99.85 | dev-clean-loss: 23.21113 | dev-clean-TER: 97.69 | dev-clean-WER: 99.98 | dev-other-loss: 21.97805 | dev-other-TER: 97.54 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.75
epoch: 41 | nupdates: 611281 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:46:03 | bch(ms): 576.06 | smp(ms): 1.31 | fwd(ms): 74.57 | crit-fwd(ms): 2.57 | bwd(ms): 481.88 | optim(ms): 17.72 | loss: 32.18196 | train-TER: 98.58 | train-WER: 99.81 | dev-clean-loss: 23.21157 | dev-clean-TER: 97.69 | dev-clean-WER: 99.98 | dev-other-loss: 21.97896 | dev-other-TER: 97.54 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.16
epoch: 42 | nupdates: 622327 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:45 | bch(ms): 574.47 | smp(ms): 0.39 | fwd(ms): 74.52 | crit-fwd(ms): 2.57 | bwd(ms): 481.41 | optim(ms): 17.70 | loss: 32.18154 | train-TER: 98.54 | train-WER: 99.86 | dev-clean-loss: 23.21055 | dev-clean-TER: 97.69 | dev-clean-WER: 99.98 | dev-other-loss: 21.97724 | dev-other-TER: 97.55 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.89
epoch: 43 | nupdates: 633373 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:46:02 | bch(ms): 576.03 | smp(ms): 1.33 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.91 | optim(ms): 17.70 | loss: 32.18134 | train-TER: 98.58 | train-WER: 99.85 | dev-clean-loss: 23.21106 | dev-clean-TER: 97.64 | dev-clean-WER: 99.99 | dev-other-loss: 21.97762 | dev-other-TER: 97.49 | dev-other-WER: 99.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.17
epoch: 44 | nupdates: 644419 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:46 | bch(ms): 574.52 | smp(ms): 0.39 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.42 | optim(ms): 17.71 | loss: 32.18094 | train-TER: 98.55 | train-WER: 99.81 | dev-clean-loss: 23.20969 | dev-clean-TER: 97.65 | dev-clean-WER: 99.98 | dev-other-loss: 21.97632 | dev-other-TER: 97.50 | dev-other-WER: 99.97 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.86
epoch: 45 | nupdates: 655465 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:57 | bch(ms): 575.55 | smp(ms): 1.25 | fwd(ms): 74.48 | crit-fwd(ms): 2.56 | bwd(ms): 481.72 | optim(ms): 17.73 | loss: 32.18080 | train-TER: 98.56 | train-WER: 99.85 | dev-clean-loss: 23.20943 | dev-clean-TER: 97.76 | dev-clean-WER: 99.98 | dev-other-loss: 21.97570 | dev-other-TER: 97.59 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.39
epoch: 46 | nupdates: 666511 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:47 | bch(ms): 574.67 | smp(ms): 0.38 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.59 | optim(ms): 17.70 | loss: 32.18054 | train-TER: 98.57 | train-WER: 99.84 | dev-clean-loss: 23.20902 | dev-clean-TER: 97.70 | dev-clean-WER: 99.98 | dev-other-loss: 21.97493 | dev-other-TER: 97.54 | dev-other-WER: 99.97 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.79
epoch: 47 | nupdates: 677557 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.95 | smp(ms): 0.39 | fwd(ms): 74.53 | crit-fwd(ms): 2.57 | bwd(ms): 481.85 | optim(ms): 17.72 | loss: 32.18015 | train-TER: 98.58 | train-WER: 99.86 | dev-clean-loss: 23.20793 | dev-clean-TER: 97.76 | dev-clean-WER: 99.98 | dev-other-loss: 21.97489 | dev-other-TER: 97.60 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.67
epoch: 48 | nupdates: 688603 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.78 | smp(ms): 0.39 | fwd(ms): 74.51 | crit-fwd(ms): 2.57 | bwd(ms): 481.71 | optim(ms): 17.70 | loss: 32.17995 | train-TER: 98.57 | train-WER: 99.86 | dev-clean-loss: 23.20876 | dev-clean-TER: 97.67 | dev-clean-WER: 99.98 | dev-other-loss: 21.97635 | dev-other-TER: 97.53 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.74
epoch: 49 | nupdates: 699649 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:45 | bch(ms): 574.49 | smp(ms): 0.39 | fwd(ms): 74.57 | crit-fwd(ms): 2.57 | bwd(ms): 481.35 | optim(ms): 17.73 | loss: 32.17969 | train-TER: 98.58 | train-WER: 99.84 | dev-clean-loss: 23.20765 | dev-clean-TER: 97.67 | dev-clean-WER: 99.98 | dev-other-loss: 21.97438 | dev-other-TER: 97.52 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.88
epoch: 50 | nupdates: 710695 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.89 | smp(ms): 0.39 | fwd(ms): 74.53 | crit-fwd(ms): 2.57 | bwd(ms): 481.82 | optim(ms): 17.70 | loss: 32.17936 | train-TER: 98.56 | train-WER: 99.84 | dev-clean-loss: 23.20693 | dev-clean-TER: 97.74 | dev-clean-WER: 99.98 | dev-other-loss: 21.97318 | dev-other-TER: 97.57 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.69
epoch: 51 | nupdates: 721741 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.90 | smp(ms): 0.39 | fwd(ms): 74.59 | crit-fwd(ms): 2.57 | bwd(ms): 481.74 | optim(ms): 17.72 | loss: 32.17923 | train-TER: 98.57 | train-WER: 99.85 | dev-clean-loss: 23.20682 | dev-clean-TER: 97.78 | dev-clean-WER: 99.98 | dev-other-loss: 21.97256 | dev-other-TER: 97.60 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.69
epoch: 52 | nupdates: 732787 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:49 | bch(ms): 574.80 | smp(ms): 0.38 | fwd(ms): 74.53 | crit-fwd(ms): 2.57 | bwd(ms): 481.71 | optim(ms): 17.72 | loss: 32.17888 | train-TER: 98.58 | train-WER: 99.85 | dev-clean-loss: 23.20632 | dev-clean-TER: 97.69 | dev-clean-WER: 99.98 | dev-other-loss: 21.97237 | dev-other-TER: 97.54 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.74
epoch: 53 | nupdates: 743833 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:51 | bch(ms): 575.05 | smp(ms): 0.39 | fwd(ms): 74.62 | crit-fwd(ms): 2.57 | bwd(ms): 481.85 | optim(ms): 17.73 | loss: 32.17853 | train-TER: 98.55 | train-WER: 99.84 | dev-clean-loss: 23.20575 | dev-clean-TER: 97.72 | dev-clean-WER: 99.98 | dev-other-loss: 21.97181 | dev-other-TER: 97.55 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.62
epoch: 54 | nupdates: 754879 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:59 | bch(ms): 575.72 | smp(ms): 1.11 | fwd(ms): 74.55 | crit-fwd(ms): 2.57 | bwd(ms): 481.87 | optim(ms): 17.71 | loss: 32.17814 | train-TER: 98.57 | train-WER: 99.84 | dev-clean-loss: 23.20492 | dev-clean-TER: 97.75 | dev-clean-WER: 99.98 | dev-other-loss: 21.97019 | dev-other-TER: 97.58 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.32
epoch: 55 | nupdates: 765925 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:50 | bch(ms): 574.95 | smp(ms): 0.39 | fwd(ms): 74.62 | crit-fwd(ms): 2.57 | bwd(ms): 481.77 | optim(ms): 17.72 | loss: 32.17789 | train-TER: 98.57 | train-WER: 99.85 | dev-clean-loss: 23.20442 | dev-clean-TER: 97.84 | dev-clean-WER: 99.98 | dev-other-loss: 21.97059 | dev-other-TER: 97.65 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.67
epoch: 56 | nupdates: 776971 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:46 | bch(ms): 574.59 | smp(ms): 0.39 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.47 | optim(ms): 17.72 | loss: 32.17746 | train-TER: 98.58 | train-WER: 99.85 | dev-clean-loss: 23.20349 | dev-clean-TER: 97.72 | dev-clean-WER: 99.98 | dev-other-loss: 21.97039 | dev-other-TER: 97.56 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.83
epoch: 57 | nupdates: 788017 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:47 | bch(ms): 574.63 | smp(ms): 0.39 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.52 | optim(ms): 
17.71 | loss: 32.17679 | train-TER: 98.55 | train-WER: 99.86 | dev-clean-loss: 23.20242 | dev-clean-TER: 97.81 | dev-clean-WER: 99.98 | dev-other-loss: 21.96900 | dev-other-TER: 97.62 | dev-other-WER: 99.95 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.81 epoch: 58 | nupdates: 799063 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:52 | bch(ms): 575.11 | smp(ms): 0.39 | fwd(ms): 74.58 | crit-fwd(ms): 2.57 | bwd(ms): 481.96 | optim(ms): 17.72 | loss: 32.17612 | train-TER: 98.57 | train-WER: 99.87 | dev-clean-loss: 23.20060 | dev-clean-TER: 97.71 | dev-clean-WER: 99.98 | dev-other-loss: 21.96666 | dev-other-TER: 97.54 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.59 epoch: 59 | nupdates: 810109 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:45 | bch(ms): 574.49 | smp(ms): 0.39 | fwd(ms): 74.54 | crit-fwd(ms): 2.56 | bwd(ms): 481.38 | optim(ms): 17.73 | loss: 32.17500 | train-TER: 98.56 | train-WER: 99.89 | dev-clean-loss: 23.20022 | dev-clean-TER: 97.66 | dev-clean-WER: 99.98 | dev-other-loss: 21.96860 | dev-other-TER: 97.49 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.88 epoch: 60 | nupdates: 821155 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:46:00 | bch(ms): 575.81 | smp(ms): 1.28 | fwd(ms): 74.54 | crit-fwd(ms): 2.57 | bwd(ms): 481.77 | optim(ms): 17.71 | loss: 32.17340 | train-TER: 98.56 | train-WER: 99.88 | dev-clean-loss: 23.19585 | dev-clean-TER: 97.73 | dev-clean-WER: 99.98 | dev-other-loss: 21.96421 | dev-other-TER: 97.56 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.28 epoch: 61 | nupdates: 832201 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:51 | bch(ms): 575.04 | smp(ms): 0.38 | fwd(ms): 74.62 | crit-fwd(ms): 2.57 | bwd(ms): 481.86 | optim(ms): 17.72 | loss: 32.17105 | train-TER: 98.56 | train-WER: 99.82 | 
dev-clean-loss: 23.19263 | dev-clean-TER: 97.71 | dev-clean-WER: 99.98 | dev-other-loss: 21.96177 | dev-other-TER: 97.52 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.63 epoch: 62 | nupdates: 843247 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:51 | bch(ms): 575.00 | smp(ms): 0.39 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.88 | optim(ms): 17.71 | loss: 32.16783 | train-TER: 98.57 | train-WER: 99.86 | dev-clean-loss: 23.19060 | dev-clean-TER: 97.71 | dev-clean-WER: 99.99 | dev-other-loss: 21.96098 | dev-other-TER: 97.52 | dev-other-WER: 99.97 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.64 epoch: 63 | nupdates: 854293 | lr: 0.001000 | lrcriterion: 0.000000 | runtime: 01:45:54 | bch(ms): 575.27 | smp(ms): 0.67 | fwd(ms): 74.56 | crit-fwd(ms): 2.57 | bwd(ms): 481.84 | optim(ms): 17.75 | loss: 32.16552 | train-TER: 98.54 | train-WER: 99.86 | dev-clean-loss: 23.18931 | dev-clean-TER: 97.77 | dev-clean-WER: 99.99 | dev-other-loss: 21.96080 | dev-other-TER: 97.57 | dev-other-WER: 99.97 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.52
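To compare epochs across the learning-rate changes it helps to pull individual fields out of these lines. A minimal Python sketch, assuming every field is written as `name: value` and separated by `|` exactly as in the log above; the helper name `parse_epoch_line` is hypothetical and not part of wav2letter:

```python
def parse_epoch_line(line: str) -> dict:
    """Split one training-log line into a {field: value} dict.

    Assumes fields are separated by "|" and written as "name: value".
    Numeric values become floats; anything else (e.g. runtime "01:45:54")
    is kept as a string. Empty or malformed fragments are skipped.
    """
    record = {}
    for part in line.split("|"):
        part = part.strip()
        name, sep, value = part.partition(": ")
        if not sep:
            continue  # empty or truncated fragment, e.g. a trailing "|"
        value = value.strip()
        try:
            record[name.strip()] = float(value)
        except ValueError:
            record[name.strip()] = value
    return record


# Abbreviated copy of the epoch-63 line from the log above
line = ("epoch: 63 | nupdates: 854293 | lr: 0.001000 | runtime: 01:45:54 | "
        "dev-clean-WER: 99.99 | dev-other-WER: 99.97")
rec = parse_epoch_line(line)
print(rec["epoch"], rec["lr"], rec["dev-clean-WER"])  # 63.0 0.001 99.99
```

Feeding each epoch line through this gives a list of dicts that can be filtered by `lr` to see how `dev-clean-WER` moves within each learning-rate segment.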

--changing LR to 0.01:
epoch: 64 | nupdates: 865339 | lr: 0.010000 | lrcriterion: 0.000000 | runtime: 01:45:53 | bch(ms): 575.22 | smp(ms): 0.76 | fwd(ms): 74.57 | crit-fwd(ms): 2.56 | bwd(ms): 481.65 | optim(ms): 17.55 | loss: 32.16085 | train-TER: 98.59 | train-WER: 99.86 | dev-clean-loss: 23.17977 | dev-clean-TER: 98.00 | dev-clean-WER: 99.99 | dev-other-loss: 21.94896 | dev-other-TER: 97.80 | dev-other-WER: 99.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.54
epoch: 65 | nupdates: 876385 | lr: 0.010000 | lrcriterion: 0.000000 | runtime: 01:45:46 | bch(ms): 574.58 | smp(ms): 0.38 | fwd(ms): 74.50 | crit-fwd(ms): 2.56 | bwd(ms): 481.66 | optim(ms): 17.58 | loss: 32.14894 | train-TER: 98.62 | train-WER: 99.86 | dev-clean-loss: 23.16606 | dev-clean-TER: 97.62 | dev-clean-WER: 99.99 | dev-other-loss: 21.93409 | dev-other-TER: 97.45 | dev-other-WER: 99.99 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.84
epoch: 66 | nupdates: 887431 | lr: 0.010000 | lrcriterion: 0.000000 | runtime: 01:45:52 | bch(ms): 575.08 | smp(ms): 0.39 | fwd(ms): 74.49 | crit-fwd(ms): 2.56 | bwd(ms): 482.17 | optim(ms): 17.58 | loss: 32.13364 | train-TER: 98.60 | train-WER: 99.83 | dev-clean-loss: 23.13736 | dev-clean-TER: 97.94 | dev-clean-WER: 99.87 | dev-other-loss: 21.91334 | dev-other-TER: 97.70 | dev-other-WER: 99.91 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.61
epoch: 67 | nupdates: 898477 | lr: 0.010000 | lrcriterion: 0.000000 | runtime: 01:45:52 | bch(ms): 575.12 | smp(ms): 0.38 | fwd(ms): 74.55 | crit-fwd(ms): 2.57 | bwd(ms): 482.12 | optim(ms): 17.61 | loss: 32.11110 | train-TER: 98.55 | train-WER: 99.69 | dev-clean-loss: 23.10704 | dev-clean-TER: 97.52 | dev-clean-WER: 99.63 | dev-other-loss: 21.88270 | dev-other-TER: 97.37 | dev-other-WER: 99.76 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.59

--changing LR to 0.1:
epoch: 68 | nupdates: 920570 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:09 | bch(ms): 255.72 | smp(ms): 0.41 | fwd(ms): 72.74 | crit-fwd(ms): 2.57 | bwd(ms): 165.57 | optim(ms): 16.88 | loss: 31.99841 | train-TER: 98.02 | train-WER: 99.29 | dev-clean-loss: 22.59560 | dev-clean-TER: 96.78 | dev-clean-WER: 99.02 | dev-other-loss: 21.44594 | dev-other-TER: 96.47 | dev-other-WER: 98.99 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.31
epoch: 69 | nupdates: 942663 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:25 | bch(ms): 256.45 | smp(ms): 0.41 | fwd(ms): 73.00 | crit-fwd(ms): 2.58 | bwd(ms): 165.97 | optim(ms): 16.95 | loss: 31.51407 | train-TER: 96.73 | train-WER: 98.90 | dev-clean-loss: 21.62733 | dev-clean-TER: 93.41 | dev-clean-WER: 98.43 | dev-other-loss: 20.82677 | dev-other-TER: 92.78 | dev-other-WER: 98.46 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 294.46
epoch: 70 | nupdates: 964756 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:17 | bch(ms): 256.09 | smp(ms): 0.68 | fwd(ms): 72.79 | crit-fwd(ms): 2.57 | bwd(ms): 165.56 | optim(ms): 16.94 | loss: 30.90656 | train-TER: 94.46 | train-WER: 98.70 | dev-clean-loss: 20.74631 | dev-clean-TER: 89.13 | dev-clean-WER: 97.82 | dev-other-loss: 20.18494 | dev-other-TER: 88.39 | dev-other-WER: 98.03 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 294.87
epoch: 71 | nupdates: 986849 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:56 | bch(ms): 255.11 | smp(ms): 0.41 | fwd(ms): 72.58 | crit-fwd(ms): 2.56 | bwd(ms): 165.06 | optim(ms): 16.94 | loss: 30.31963 | train-TER: 92.60 | train-WER: 98.60 | dev-clean-loss: 19.94783 | dev-clean-TER: 85.52 | dev-clean-WER: 97.14 | dev-other-loss: 19.74339 | dev-other-TER: 84.67 | dev-other-WER: 97.41 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.00
epoch: 72 | nupdates: 1008942 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:55 | bch(ms): 255.09 | smp(ms): 0.41 | fwd(ms): 72.55 | crit-fwd(ms): 2.56 | bwd(ms): 165.00 | optim(ms): 17.00 | loss: 29.74049 | train-TER: 90.07 | train-WER: 98.21 | dev-clean-loss: 19.55888 | dev-clean-TER: 82.14 | dev-clean-WER: 96.56 | dev-other-loss: 19.61514 | dev-other-TER: 81.06 | dev-other-WER: 96.77 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.03
epoch: 73 | nupdates: 1031035 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:21 | bch(ms): 256.24 | smp(ms): 1.58 | fwd(ms): 72.57 | crit-fwd(ms): 2.56 | bwd(ms): 165.00 | optim(ms): 16.97 | loss: 29.25941 | train-TER: 88.62 | train-WER: 97.83 | dev-clean-loss: 18.88633 | dev-clean-TER: 83.00 | dev-clean-WER: 96.02 | dev-other-loss: 18.73161 | dev-other-TER: 82.91 | dev-other-WER: 96.39 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 294.71
epoch: 74 | nupdates: 1053128 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:59 | bch(ms): 255.26 | smp(ms): 0.41 | fwd(ms): 72.64 | crit-fwd(ms): 2.56 | bwd(ms): 165.07 | optim(ms): 17.01 | loss: 28.81696 | train-TER: 86.67 | train-WER: 97.35 | dev-clean-loss: 18.46406 | dev-clean-TER: 78.63 | dev-clean-WER: 95.53 | dev-other-loss: 18.70266 | dev-other-TER: 78.75 | dev-other-WER: 95.80 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.84
epoch: 75 | nupdates: 1075221 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:22 | bch(ms): 256.31 | smp(ms): 1.72 | fwd(ms): 72.58 | crit-fwd(ms): 2.56 | bwd(ms): 164.88 | optim(ms): 17.01 | loss: 28.42337 | train-TER: 85.72 | train-WER: 97.10 | dev-clean-loss: 18.24303 | dev-clean-TER: 74.65 | dev-clean-WER: 94.51 | dev-other-loss: 18.61098 | dev-other-TER: 74.81 | dev-other-WER: 94.85 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 294.62
epoch: 76 | nupdates: 1097314 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:15 | bch(ms): 255.97 | smp(ms): 1.42 | fwd(ms): 72.52 | crit-fwd(ms): 2.56 | bwd(ms): 164.84 | optim(ms): 17.07 | loss: 28.05654 | train-TER: 84.61 | train-WER: 96.83 | dev-clean-loss: 17.40686 | dev-clean-TER: 73.69 | dev-clean-WER: 93.38 | dev-other-loss: 17.97392 | dev-other-TER: 73.98 | dev-other-WER: 93.77 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.01
epoch: 77 | nupdates: 1119407 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:57 | bch(ms): 255.18 | smp(ms): 0.41 | fwd(ms): 72.61 | crit-fwd(ms): 2.56 | bwd(ms): 165.01 | optim(ms): 17.03 | loss: 27.73276 | train-TER: 83.33 | train-WER: 96.45 | dev-clean-loss: 17.43218 | dev-clean-TER: 73.40 | dev-clean-WER: 93.73 | dev-other-loss: 18.25557 | dev-other-TER: 73.65 | dev-other-WER: 93.90 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.93
epoch: 78 | nupdates: 1141500 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:51 | bch(ms): 254.91 | smp(ms): 0.41 | fwd(ms): 72.55 | crit-fwd(ms): 2.56 | bwd(ms): 164.83 | optim(ms): 17.01 | loss: 27.41439 | train-TER: 82.29 | train-WER: 96.16 | dev-clean-loss: 16.63795 | dev-clean-TER: 70.98 | dev-clean-WER: 92.23 | dev-other-loss: 17.45762 | dev-other-TER: 72.09 | dev-other-WER: 92.80 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.24
epoch: 79 | nupdates: 1163593 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:46 | bch(ms): 254.65 | smp(ms): 0.41 | fwd(ms): 72.48 | crit-fwd(ms): 2.56 | bwd(ms): 164.64 | optim(ms): 17.01 | loss: 27.13692 | train-TER: 81.83 | train-WER: 95.75 | dev-clean-loss: 16.89991 | dev-clean-TER: 70.06 | dev-clean-WER: 91.92 | dev-other-loss: 17.73805 | dev-other-TER: 71.03 | dev-other-WER: 92.40 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.54
epoch: 80 | nupdates: 1185686 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:43 | bch(ms): 254.56 | smp(ms): 0.41 | fwd(ms): 72.43 | crit-fwd(ms): 2.55 | bwd(ms): 164.59 | optim(ms): 17.01 | loss: 26.87497 | train-TER: 80.89 | train-WER: 95.64 | dev-clean-loss: 16.47087 | dev-clean-TER: 70.80 | dev-clean-WER: 91.74 | dev-other-loss: 17.25489 | dev-other-TER: 72.45 | dev-other-WER: 92.31 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.65
epoch: 81 | nupdates: 1207779 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:48 | bch(ms): 254.76 | smp(ms): 0.41 | fwd(ms): 72.49 | crit-fwd(ms): 2.56 | bwd(ms): 164.70 | optim(ms): 17.03 | loss: 26.62475 | train-TER: 80.02 | train-WER: 95.17 | dev-clean-loss: 15.99038 | dev-clean-TER: 67.51 | dev-clean-WER: 90.09 | dev-other-loss: 17.05856 | dev-other-TER: 69.13 | dev-other-WER: 90.88 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.41
epoch: 82 | nupdates: 1229872 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:45 | bch(ms): 254.62 | smp(ms): 0.41 | fwd(ms): 72.45 | crit-fwd(ms): 2.56 | bwd(ms): 164.61 | optim(ms): 17.04 | loss: 26.39976 | train-TER: 78.95 | train-WER: 94.89 | dev-clean-loss: 16.29236 | dev-clean-TER: 65.08 | dev-clean-WER: 90.15 | dev-other-loss: 17.53601 | dev-other-TER: 66.84 | dev-other-WER: 91.00 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.58
epoch: 83 | nupdates: 1251965 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:04 | bch(ms): 255.50 | smp(ms): 1.48 | fwd(ms): 72.40 | crit-fwd(ms): 2.56 | bwd(ms): 164.47 | optim(ms): 17.03 | loss: 26.18772 | train-TER: 77.92 | train-WER: 94.54 | dev-clean-loss: 15.41056 | dev-clean-TER: 66.67 | dev-clean-WER: 89.00 | dev-other-loss: 16.66171 | dev-other-TER: 68.67 | dev-other-WER: 90.01 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.55
epoch: 84 | nupdates: 1274058 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:40 | bch(ms): 254.42 | smp(ms): 0.41 | fwd(ms): 72.41 | crit-fwd(ms): 2.56 | bwd(ms): 164.46 | optim(ms): 17.02 | loss: 25.96535 | train-TER: 78.05 | train-WER: 94.50 | dev-clean-loss: 15.75490 | dev-clean-TER: 64.79 | dev-clean-WER: 89.27 | dev-other-loss: 17.15442 | dev-other-TER: 66.26 | dev-other-WER: 89.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.81
epoch: 85 | nupdates: 1296151 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:40 | bch(ms): 254.40 | smp(ms): 0.41 | fwd(ms): 72.41 | crit-fwd(ms): 2.56 | bwd(ms): 164.42 | optim(ms): 17.04 | loss: 25.77880 | train-TER: 77.88 | train-WER: 94.26 | dev-clean-loss: 15.89415 | dev-clean-TER: 64.22 | dev-clean-WER: 87.34 | dev-other-loss: 16.70116 | dev-other-TER: 67.24 | dev-other-WER: 89.11 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.84
epoch: 86 | nupdates: 1318244 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:44 | bch(ms): 254.56 | smp(ms): 0.41 | fwd(ms): 72.45 | crit-fwd(ms): 2.56 | bwd(ms): 164.55 | optim(ms): 17.03 | loss: 25.58324 | train-TER: 77.20 | train-WER: 93.90 | dev-clean-loss: 15.16759 | dev-clean-TER: 62.89 | dev-clean-WER: 87.34 | dev-other-loss: 16.63964 | dev-other-TER: 65.20 | dev-other-WER: 88.50 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.65
epoch: 87 | nupdates: 1340337 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:40 | bch(ms): 254.41 | smp(ms): 0.41 | fwd(ms): 72.40 | crit-fwd(ms): 2.56 | bwd(ms): 164.43 | optim(ms): 17.04 | loss: 25.39662 | train-TER: 75.84 | train-WER: 93.31 | dev-clean-loss: 14.96239 | dev-clean-TER: 63.23 | dev-clean-WER: 86.87 | dev-other-loss: 16.39672 | dev-other-TER: 66.46 | dev-other-WER: 88.60 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.83
epoch: 88 | nupdates: 1362430 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:34:02 | bch(ms): 255.39 | smp(ms): 0.41 | fwd(ms): 72.72 | crit-fwd(ms): 2.57 | bwd(ms): 165.08 | optim(ms): 17.06 | loss: 25.24409 | train-TER: 75.67 | train-WER: 93.33 | dev-clean-loss: 14.80308 | dev-clean-TER: 63.20 | dev-clean-WER: 86.24 | dev-other-loss: 16.21425 | dev-other-TER: 66.04 | dev-other-WER: 87.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 295.68
epoch: 89 | nupdates: 1384523 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:51 | bch(ms): 254.91 | smp(ms): 0.41 | fwd(ms): 72.58 | crit-fwd(ms): 2.56 | bwd(ms): 164.77 | optim(ms): 17.03 | loss: 25.05608 | train-TER: 75.19 | train-WER: 93.11 | dev-clean-loss: 14.87243 | dev-clean-TER: 60.57 | dev-clean-WER: 84.74 | dev-other-loss: 16.34488 | dev-other-TER: 64.29 | dev-other-WER: 87.60 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.24
epoch: 90 | nupdates: 1406616 | lr: 0.100000 | lrcriterion: 0.000000 | runtime: 01:33:40 | bch(ms): 254.42 | smp(ms): 0.63 | fwd(ms): 72.36 | crit-fwd(ms): 2.56 | bwd(ms): 164.26 | optim(ms): 17.05 | loss: 24.91355 | train-TER: 74.42 | train-WER: 92.61 | dev-clean-loss: 14.59600 | dev-clean-TER: 57.97 | dev-clean-WER: 84.09 | dev-other-loss: 16.19192 | dev-other-TER: 61.68 | dev-other-WER: 86.42 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 526 | hrs: 463.43 | thrpt(sec/sec): 296.81
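In this LR 0.1 segment `nupdates` advances by 22093 per epoch instead of the 11046 seen at LR 0.001 and 0.01 (and again at LR 0.4 from epoch 91 onward), while `bch(ms)` drops from roughly 575 to roughly 255 and `max-tsz`/`hrs` change from 419/463.38 to 526/463.43. One plausible reading, which is an assumption and not stated anywhere in the log, is that this segment ran with a smaller batch size or a slightly different training list. The per-epoch step counts can be checked from the cumulative `nupdates` values with a small sketch (the helper name `updates_per_epoch` is hypothetical):

```python
def updates_per_epoch(nupdates: list[int]) -> list[int]:
    """Differences between consecutive cumulative `nupdates` values."""
    return [later - earlier for earlier, later in zip(nupdates, nupdates[1:])]


# Cumulative nupdates copied from the log
lr_0001 = [843247, 854293]        # epochs 62-63, lr 0.001
lr_01 = [920570, 942663, 964756]  # epochs 68-70, lr 0.1
lr_04 = [1406616, 1417662]        # epoch 90 (lr 0.1) -> epoch 91 (lr 0.4)
print(updates_per_epoch(lr_0001))  # [11046]
print(updates_per_epoch(lr_01))    # [22093, 22093]
print(updates_per_epoch(lr_04))    # [11046]
```

Because the number of optimizer steps per epoch differs between segments, comparing learning rates by epoch count alone may be misleading; comparing by `nupdates` keeps the step budget explicit.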

--changing LR to 0.4:
epoch: 91 | nupdates: 1417662 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:08 | bch(ms): 576.54 | smp(ms): 1.11 | fwd(ms): 74.53 | crit-fwd(ms): 2.56 | bwd(ms): 482.74 | optim(ms): 17.60 | loss: 26.38083 | train-TER: 79.77 | train-WER: 94.22 | dev-clean-loss: 15.06409 | dev-clean-TER: 66.98 | dev-clean-WER: 87.61 | dev-other-loss: 16.21861 | dev-other-TER: 69.08 | dev-other-WER: 89.13 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 261.94
epoch: 92 | nupdates: 1428708 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:00 | bch(ms): 575.80 | smp(ms): 0.44 | fwd(ms): 74.37 | crit-fwd(ms): 2.56 | bwd(ms): 482.92 | optim(ms): 17.65 | loss: 25.99496 | train-TER: 78.39 | train-WER: 93.96 | dev-clean-loss: 15.83105 | dev-clean-TER: 65.54 | dev-clean-WER: 87.79 | dev-other-loss: 17.18877 | dev-other-TER: 66.51 | dev-other-WER: 88.93 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.28
epoch: 93 | nupdates: 1439754 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:04 | bch(ms): 576.16 | smp(ms): 0.38 | fwd(ms): 74.44 | crit-fwd(ms): 2.56 | bwd(ms): 483.20 | optim(ms): 17.68 | loss: 25.66882 | train-TER: 77.68 | train-WER: 93.57 | dev-clean-loss: 14.61843 | dev-clean-TER: 62.42 | dev-clean-WER: 84.27 | dev-other-loss: 16.23545 | dev-other-TER: 65.09 | dev-other-WER: 86.57 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.11
epoch: 94 | nupdates: 1450800 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:05 | bch(ms): 576.25 | smp(ms): 0.39 | fwd(ms): 74.39 | crit-fwd(ms): 2.56 | bwd(ms): 483.42 | optim(ms): 17.60 | loss: 25.36420 | train-TER: 76.50 | train-WER: 93.08 | dev-clean-loss: 14.53571 | dev-clean-TER: 65.52 | dev-clean-WER: 85.96 | dev-other-loss: 15.81713 | dev-other-TER: 68.33 | dev-other-WER: 87.93 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.07
epoch: 95 | nupdates: 1461846 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:59 | bch(ms): 575.75 | smp(ms): 0.39 | fwd(ms): 74.39 | crit-fwd(ms): 2.56 | bwd(ms): 482.87 | optim(ms): 17.64 | loss: 25.09336 | train-TER: 75.56 | train-WER: 92.35 | dev-clean-loss: 14.28702 | dev-clean-TER: 62.12 | dev-clean-WER: 83.01 | dev-other-loss: 15.72837 | dev-other-TER: 64.67 | dev-other-WER: 85.68 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.30
epoch: 96 | nupdates: 1472892 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:20 | bch(ms): 577.64 | smp(ms): 1.29 | fwd(ms): 74.43 | crit-fwd(ms): 2.56 | bwd(ms): 483.82 | optim(ms): 17.65 | loss: 24.82426 | train-TER: 75.33 | train-WER: 92.27 | dev-clean-loss: 14.35907 | dev-clean-TER: 56.85 | dev-clean-WER: 80.91 | dev-other-loss: 16.66143 | dev-other-TER: 59.55 | dev-other-WER: 83.71 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 261.44
epoch: 97 | nupdates: 1483938 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:05 | bch(ms): 576.23 | smp(ms): 0.39 | fwd(ms): 74.45 | crit-fwd(ms): 2.56 | bwd(ms): 483.26 | optim(ms): 17.67 | loss: 24.57355 | train-TER: 74.62 | train-WER: 91.89 | dev-clean-loss: 14.07352 | dev-clean-TER: 54.05 | dev-clean-WER: 78.83 | dev-other-loss: 16.16417 | dev-other-TER: 58.23 | dev-other-WER: 82.59 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.08
epoch: 98 | nupdates: 1494984 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:06 | bch(ms): 576.38 | smp(ms): 0.39 | fwd(ms): 74.46 | crit-fwd(ms): 2.56 | bwd(ms): 483.39 | optim(ms): 17.69 | loss: 24.37171 | train-TER: 73.99 | train-WER: 91.50 | dev-clean-loss: 13.91105 | dev-clean-TER: 55.46 | dev-clean-WER: 79.26 | dev-other-loss: 16.17228 | dev-other-TER: 59.00 | dev-other-WER: 82.83 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.01
epoch: 99 | nupdates: 1506030 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:05 | bch(ms): 576.29 | smp(ms): 0.76 | fwd(ms): 74.47 | crit-fwd(ms): 2.56 | bwd(ms): 482.86 | optim(ms): 17.68 | loss: 24.13738 | train-TER: 73.26 | train-WER: 90.91 | dev-clean-loss: 13.73925 | dev-clean-TER: 55.07 | dev-clean-WER: 79.34 | dev-other-loss: 15.88490 | dev-other-TER: 59.41 | dev-other-WER: 83.33 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.06
epoch: 100 | nupdates: 1517076 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:59 | bch(ms): 575.70 | smp(ms): 0.39 | fwd(ms): 74.41 | crit-fwd(ms): 2.56 | bwd(ms): 482.76 | optim(ms): 17.69 | loss: 23.96969 | train-TER: 72.89 | train-WER: 90.87 | dev-clean-loss: 13.47274 | dev-clean-TER: 58.30 | dev-clean-WER: 79.43 | dev-other-loss: 15.28514 | dev-other-TER: 62.85 | dev-other-WER: 83.62 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.32
epoch: 101 | nupdates: 1528122 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:01 | bch(ms): 575.91 | smp(ms): 0.39 | fwd(ms): 74.40 | crit-fwd(ms): 2.56 | bwd(ms): 482.99 | optim(ms): 17.68 | loss: 23.82176 | train-TER: 71.62 | train-WER: 90.05 | dev-clean-loss: 13.47325 | dev-clean-TER: 52.63 | dev-clean-WER: 76.68 | dev-other-loss: 15.79679 | dev-other-TER: 56.92 | dev-other-WER: 81.07 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.23
epoch: 102 | nupdates: 1539168 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:04 | bch(ms): 576.14 | smp(ms): 0.39 | fwd(ms): 74.43 | crit-fwd(ms): 2.56 | bwd(ms): 483.15 | optim(ms): 17.71 | loss: 23.63672 | train-TER: 71.46 | train-WER: 89.81 | dev-clean-loss: 13.32893 | dev-clean-TER: 53.11 | dev-clean-WER: 76.21 | dev-other-loss: 15.28155 | dev-other-TER: 58.46 | dev-other-WER: 81.35 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.12
epoch: 103 | nupdates: 1550214 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:46:02 | bch(ms): 576.03 | smp(ms): 0.39 | fwd(ms): 74.43 | crit-fwd(ms): 2.56 | bwd(ms): 483.06 | optim(ms): 17.70 | loss: 23.48562 | train-TER: 70.69 | train-WER: 89.48 | dev-clean-loss: 12.98371 | dev-clean-TER: 51.81 | dev-clean-WER: 74.91 | dev-other-loss: 15.51837 | dev-other-TER: 56.68 | dev-other-WER: 80.11 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.17

--changing LR to 0.4:
epoch: 104 | nupdates: 1561260 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:29 | bch(ms): 573.04 | smp(ms): 0.39 | fwd(ms): 74.45 | crit-fwd(ms): 2.55 | bwd(ms): 480.07 | optim(ms): 17.66 | loss: 23.31123 | train-TER: 70.03 | train-WER: 89.11 | dev-clean-loss: 12.61001 | dev-clean-TER: 53.41 | dev-clean-WER: 75.91 | dev-other-loss: 14.77478 | dev-other-TER: 58.92 | dev-other-WER: 81.11 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.54
epoch: 105 | nupdates: 1572306 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:33 | bch(ms): 573.37 | smp(ms): 0.41 | fwd(ms): 74.44 | crit-fwd(ms): 2.56 | bwd(ms): 480.36 | optim(ms): 17.71 | loss: 23.19171 | train-TER: 70.26 | train-WER: 88.68 | dev-clean-loss: 12.91851 | dev-clean-TER: 53.47 | dev-clean-WER: 75.25 | dev-other-loss: 14.87601 | dev-other-TER: 58.94 | dev-other-WER: 80.42 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.39
epoch: 106 | nupdates: 1583352 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:33 | bch(ms): 573.34 | smp(ms): 0.39 | fwd(ms): 74.40 | crit-fwd(ms): 2.55 | bwd(ms): 480.38 | optim(ms): 17.71 | loss: 23.04856 | train-TER: 69.09 | train-WER: 88.24 | dev-clean-loss: 13.16764 | dev-clean-TER: 49.32 | dev-clean-WER: 73.02 | dev-other-loss: 15.24071 | dev-other-TER: 55.36 | dev-other-WER: 78.86 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.40
epoch: 107 | nupdates: 1594398 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:43 | bch(ms): 574.24 | smp(ms): 0.87 | fwd(ms): 74.35 | crit-fwd(ms): 2.55 | bwd(ms): 480.82 | optim(ms): 17.69 | loss: 22.94502 | train-TER: 68.94 | train-WER: 87.96 | dev-clean-loss: 14.69569 | dev-clean-TER: 49.25 | dev-clean-WER: 73.48 | dev-other-loss: 16.29248 | dev-other-TER: 54.92 | dev-other-WER: 78.96 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.99
epoch: 108 | nupdates: 1605444 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:34 | bch(ms): 573.46 | smp(ms): 0.39 | fwd(ms): 74.41 | crit-fwd(ms): 2.55 | bwd(ms): 480.47 | optim(ms): 17.72 | loss: 22.80540 | train-TER: 69.29 | train-WER: 88.27 | dev-clean-loss: 12.48081 | dev-clean-TER: 47.75 | dev-clean-WER: 71.34 | dev-other-loss: 15.24874 | dev-other-TER: 54.50 | dev-other-WER: 78.04 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.35
epoch: 109 | nupdates: 1616490 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:38 | bch(ms): 573.83 | smp(ms): 0.39 | fwd(ms): 74.31 | crit-fwd(ms): 2.55 | bwd(ms): 480.94 | optim(ms): 17.73 | loss: 22.65597 | train-TER: 67.94 | train-WER: 87.52 | dev-clean-loss: 12.66781 | dev-clean-TER: 48.26 | dev-clean-WER: 69.88 | dev-other-loss: 15.10939 | dev-other-TER: 53.99 | dev-other-WER: 77.25 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.18
epoch: 110 | nupdates: 1627536 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:35 | bch(ms): 573.53 | smp(ms): 0.38 | fwd(ms): 74.31 | crit-fwd(ms): 2.55 | bwd(ms): 480.62 | optim(ms): 17.75 | loss: 22.51182 | train-TER: 68.49 | train-WER: 87.60 | dev-clean-loss: 12.43505 | dev-clean-TER: 46.94 | dev-clean-WER: 70.32 | dev-other-loss: 15.16225 | dev-other-TER: 52.86 | dev-other-WER: 77.01 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.32
epoch: 111 | nupdates: 1638582 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:38 | bch(ms): 573.82 | smp(ms): 0.39 | fwd(ms): 74.36 | crit-fwd(ms): 2.55 | bwd(ms): 480.85 | optim(ms): 17.76 | loss: 22.42870 | train-TER: 68.05 | train-WER: 87.27 | dev-clean-loss: 12.61875 | dev-clean-TER: 46.98 | dev-clean-WER: 69.53 | dev-other-loss: 15.16752 | dev-other-TER: 53.22 | dev-other-WER: 76.81 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.18
epoch: 112 | nupdates: 1649628 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:33 | bch(ms): 573.36 | smp(ms): 0.39 | fwd(ms): 74.32 | crit-fwd(ms): 2.55 | bwd(ms): 480.46 | optim(ms): 17.73 | loss: 22.26799 | train-TER: 67.20 | train-WER: 86.89 | dev-clean-loss: 13.82838 | dev-clean-TER: 44.72 | dev-clean-WER: 68.34 | dev-other-loss: 16.05215 | dev-other-TER: 50.62 | dev-other-WER: 75.40 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.39
epoch: 113 | nupdates: 1660674 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:37 | bch(ms): 573.71 | smp(ms): 0.39 | fwd(ms): 74.31 | crit-fwd(ms): 2.55 | bwd(ms): 480.80 | optim(ms): 17.76 | loss: 22.19084 | train-TER: 67.32 | train-WER: 86.61 | dev-clean-loss: 12.59345 | dev-clean-TER: 44.84 | dev-clean-WER: 66.52 | dev-other-loss: 15.15511 | dev-other-TER: 50.32 | dev-other-WER: 73.90 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.23
epoch: 114 | nupdates: 1671720 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:35 | bch(ms): 573.59 | smp(ms): 0.39 | fwd(ms): 74.36 | crit-fwd(ms): 2.55 | bwd(ms): 480.62 | optim(ms): 17.76 | loss: 22.14299 | train-TER: 66.47 | train-WER: 85.99 | dev-clean-loss: 12.49236 | dev-clean-TER: 48.09 | dev-clean-WER: 69.11 | dev-other-loss: 14.62777 | dev-other-TER: 54.74 | dev-other-WER: 76.70 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.29
epoch: 115 | nupdates: 1682766 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:30 | bch(ms): 573.10 | smp(ms): 0.38 | fwd(ms): 74.29 | crit-fwd(ms): 2.55 | bwd(ms): 480.19 | optim(ms): 17.77 | loss: 22.02790 | train-TER: 66.15 | train-WER: 86.12 | dev-clean-loss: 13.84753 | dev-clean-TER: 47.43 | dev-clean-WER: 70.07 | dev-other-loss: 15.47995 | dev-other-TER: 53.69 | dev-other-WER: 77.03 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.52
epoch: 116 | nupdates: 1693812 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:27 | bch(ms): 572.82 | smp(ms): 0.48 | fwd(ms): 74.26 | crit-fwd(ms): 2.55 | bwd(ms): 479.94 | optim(ms): 17.73 | loss: 21.91156 | train-TER: 66.77 | train-WER: 86.17 | dev-clean-loss: 11.88300 | dev-clean-TER: 46.96 | dev-clean-WER: 67.43 | dev-other-loss: 14.40599 | dev-other-TER: 53.66 | dev-other-WER: 75.60 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.64
epoch: 117 | nupdates: 1704858 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:31 | bch(ms): 573.16 | smp(ms): 0.38 | fwd(ms): 74.34 | crit-fwd(ms): 2.55 | bwd(ms): 480.19 | optim(ms): 17.78 | loss: 21.79802 | train-TER: 65.90 | train-WER: 85.61 | dev-clean-loss: 12.89816 | dev-clean-TER: 44.45 | dev-clean-WER: 67.50 | dev-other-loss: 14.94711 | dev-other-TER: 50.71 | dev-other-WER: 74.79 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.49
epoch: 118 | nupdates: 1715904 | lr: 0.400000 | lrcriterion: 0.000000 | runtime: 01:45:31 | bch(ms): 573.16 | smp(ms): 0.39 | fwd(ms): 74.37 | crit-fwd(ms): 2.55 | bwd(ms): 480.20 | optim(ms): 17.74 | loss: 21.76244 | train-TER: 65.49 | train-WER: 85.44 | dev-clean-loss: 13.08869 | dev-clean-TER: 43.83 | dev-clean-WER: 65.79 | dev-other-loss: 15.45446 | dev-other-TER: 50.30 | dev-other-WER: 73.67 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.49

--changing LR to 0.05:

epoch: 119 | nupdates: 1726950 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:33 | bch(ms): 573.39 | smp(ms): 0.39 | fwd(ms): 74.40 | crit-fwd(ms): 2.55 | bwd(ms): 480.66 | optim(ms): 17.46 | loss: 20.49859 | train-TER: 60.60 | train-WER: 82.56 | dev-clean-loss: 12.24425 | dev-clean-TER: 39.77 | dev-clean-WER: 61.04 | dev-other-loss: 14.96421 | dev-other-TER: 46.47 | dev-other-WER: 69.86 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.38

epoch: 120 | nupdates: 1737996 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:35 | bch(ms): 573.56 | smp(ms): 0.41 | fwd(ms): 74.32 | crit-fwd(ms): 2.55 | bwd(ms): 480.89 | optim(ms): 17.48 | loss: 20.19804 | train-TER: 61.51 | train-WER: 82.79 | dev-clean-loss: 12.65563 | dev-clean-TER: 38.84 | dev-clean-WER: 60.32 | dev-other-loss: 15.17451 | dev-other-TER: 45.75 | dev-other-WER: 69.47 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.30

epoch: 121 | nupdates: 1749042 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:43 | bch(ms): 574.27 | smp(ms): 0.38 | fwd(ms): 74.29 | crit-fwd(ms): 2.55 | bwd(ms): 481.62 | optim(ms): 17.52 | loss: 20.04327 | train-TER: 60.30 | train-WER: 81.24 | dev-clean-loss: 12.92881 | dev-clean-TER: 37.80 | dev-clean-WER: 59.16 | dev-other-loss: 15.48063 | dev-other-TER: 44.18 | dev-other-WER: 68.31 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.98

epoch: 122 | nupdates: 1760088 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:44 | bch(ms): 574.35 | smp(ms): 0.38 | fwd(ms): 74.31 | crit-fwd(ms): 2.55 | bwd(ms): 481.69 | optim(ms): 17.50 | loss: 19.97077 | train-TER: 59.92 | train-WER: 81.16 | dev-clean-loss: 12.84416 | dev-clean-TER: 37.74 | dev-clean-WER: 59.39 | dev-other-loss: 15.37489 | dev-other-TER: 44.28 | dev-other-WER: 68.59 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.94

epoch: 123 | nupdates: 1771134 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:46 | bch(ms): 574.59 | smp(ms): 1.11 | fwd(ms): 74.29 | crit-fwd(ms): 2.55 | bwd(ms): 481.06 | optim(ms): 17.52 | loss: 19.86593 | train-TER: 60.90 | train-WER: 81.29 | dev-clean-loss: 12.94454 | dev-clean-TER: 37.41 | dev-clean-WER: 58.50 | dev-other-loss: 15.64545 | dev-other-TER: 43.55 | dev-other-WER: 67.69 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.83

epoch: 124 | nupdates: 1782180 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:43 | bch(ms): 574.30 | smp(ms): 0.38 | fwd(ms): 74.28 | crit-fwd(ms): 2.55 | bwd(ms): 481.65 | optim(ms): 17.52 | loss: 19.73053 | train-TER: 59.83 | train-WER: 81.54 | dev-clean-loss: 13.63565 | dev-clean-TER: 37.31 | dev-clean-WER: 58.76 | dev-other-loss: 15.78675 | dev-other-TER: 43.67 | dev-other-WER: 67.94 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 262.96

epoch: 125 | nupdates: 1793226 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:38 | bch(ms): 573.85 | smp(ms): 0.38 | fwd(ms): 74.36 | crit-fwd(ms): 2.55 | bwd(ms): 481.08 | optim(ms): 17.55 | loss: 19.64221 | train-TER: 58.49 | train-WER: 80.34 | dev-clean-loss: 12.44721 | dev-clean-TER: 37.42 | dev-clean-WER: 58.11 | dev-other-loss: 15.09858 | dev-other-TER: 44.32 | dev-other-WER: 67.98 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.17

epoch: 126 | nupdates: 1804272 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:37 | bch(ms): 573.77 | smp(ms): 0.38 | fwd(ms): 74.30 | crit-fwd(ms): 2.55 | bwd(ms): 481.06 | optim(ms): 17.56 | loss: 19.61890 | train-TER: 58.68 | train-WER: 80.23 | dev-clean-loss: 13.46364 | dev-clean-TER: 36.94 | dev-clean-WER: 58.37 | dev-other-loss: 15.48717 | dev-other-TER: 43.86 | dev-other-WER: 67.87 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.21

epoch: 127 | nupdates: 1815318 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:37 | bch(ms): 573.75 | smp(ms): 0.38 | fwd(ms): 74.29 | crit-fwd(ms): 2.55 | bwd(ms): 481.05 | optim(ms): 17.57 | loss: 19.50010 | train-TER: 58.77 | train-WER: 80.36 | dev-clean-loss: 12.93299 | dev-clean-TER: 37.11 | dev-clean-WER: 57.83 | dev-other-loss: 15.40969 | dev-other-TER: 43.59 | dev-other-WER: 67.20 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.21

epoch: 128 | nupdates: 1826364 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:41 | bch(ms): 574.07 | smp(ms): 0.39 | fwd(ms): 74.32 | crit-fwd(ms): 2.55 | bwd(ms): 481.34 | optim(ms): 17.56 | loss: 19.48365 | train-TER: 57.93 | train-WER: 79.41 | dev-clean-loss: 12.31749 | dev-clean-TER: 37.26 | dev-clean-WER: 57.55 | dev-other-loss: 15.12513 | dev-other-TER: 43.88 | dev-other-WER: 67.36 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.07

epoch: 129 | nupdates: 1837410 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:40 | bch(ms): 573.99 | smp(ms): 0.39 | fwd(ms): 74.35 | crit-fwd(ms): 2.55 | bwd(ms): 481.22 | optim(ms): 17.57 | loss: 19.47802 | train-TER: 59.61 | train-WER: 80.75 | dev-clean-loss: 13.34316 | dev-clean-TER: 36.66 | dev-clean-WER: 57.88 | dev-other-loss: 15.59411 | dev-other-TER: 43.31 | dev-other-WER: 67.31 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.11

epoch: 130 | nupdates: 1848456 | lr: 0.050000 | lrcriterion: 0.000000 | runtime: 01:45:41 | bch(ms): 574.06 | smp(ms): 0.38 | fwd(ms): 74.33 | crit-fwd(ms): 2.55 | bwd(ms): 481.31 | optim(ms): 17.56 | loss: 19.42388 | train-TER: 59.57 | train-WER: 80.67 | dev-clean-loss: 12.93185 | dev-clean-TER: 36.50 | dev-clean-WER: 57.15 | dev-other-loss: 15.48678 | dev-other-TER: 43.12 | dev-other-WER: 66.89 | avg-isz: 1258 | avg-tsz: 219 | max-tsz: 419 | hrs: 463.38 | thrpt(sec/sec): 263.07
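To compare epochs before and after the LR change without scrolling through raw records, the log can be split and parsed. This is a minimal sketch with hypothetical helper names (not part of wav2letter); it only assumes the `key: value | key: value` layout seen in the log above, and the two sample records are copied from that log with most fields trimmed.

```python
import re


def split_records(text):
    """Split a blob of glued log records at every 'epoch: N |' marker."""
    parts = re.split(r"(?=epoch: \d+ \|)", text)
    return [p.strip() for p in parts if p.strip().startswith("epoch:")]


def parse_record(record):
    """Turn 'key: value | key: value | ...' into a dict of strings."""
    fields = {}
    for field in record.split("|"):
        if ":" in field:
            # Split on the first colon only, so 'runtime: 01:45:31' stays intact.
            key, value = field.split(":", 1)
            fields[key.strip()] = value.strip()
    return fields


# Two records copied from the log above, trimmed to a few fields.
LOG = (
    "epoch: 118 | nupdates: 1715904 | lr: 0.400000 | runtime: 01:45:31 | "
    "dev-clean-WER: 65.79 | dev-other-WER: 73.67 "
    "epoch: 119 | nupdates: 1726950 | lr: 0.050000 | runtime: 01:45:33 | "
    "dev-clean-WER: 61.04 | dev-other-WER: 69.86"
)

rows = [parse_record(r) for r in split_records(LOG)]
for row in rows:
    print(row["epoch"], row["lr"], row["dev-clean-WER"], row["dev-other-WER"])
```

On these two records, dev-clean WER goes from 65.79 to 61.04 in the first epoch after the switch from 0.4 to 0.05.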

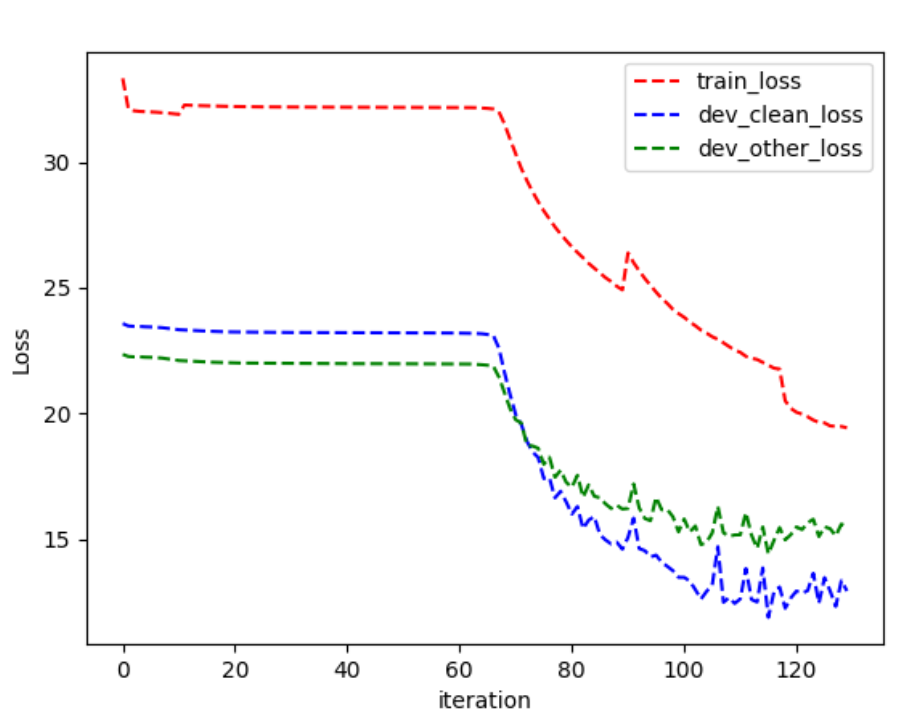

Changing the learning rate does not help much in reaching the global minimum. As the log shows, neither decreasing nor increasing the LR changes the training behavior: it neither converges nor diverges. What should I change in the flagsfile to make the model converge quickly? I am using 2 RTX 2080 Ti GPUs and the training data is 460 hours of LibriSpeech. I would really appreciate your advice.

@kerolos: As @tlikhomanenko pointed out in the previous comments, the underscore character "_" denotes that the token starts a new word.

Consider the prefix "ed". Many words start with it, e.g. Edgbaston, edit, education. In all these cases "ed" starts a new word, so the token "_ed" would make it into the top 10k tokens if it occurs frequently enough.

The suffix "ed" also appears frequently to mark the past tense of a verb, e.g. summed, listened, banned. In these cases it does not start a new word, so the token "ed" (without an underscore) would also make it into the top 10k tokens.

This is why you may have two instances of token "ed", one with an underscore and one without.
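The word-boundary rule can be sketched in a few lines: a piece starting with "_" opens a new word, and any other piece is glued to the previous word. This is a hypothetical helper, assuming the ASCII underscore used in the lexicon entries of this thread (raw SentencePiece output uses "▁" instead); `_listen` is only an illustrative piece, not checked against the 10k token set.

```python
def pieces_to_words(pieces, boundary="_"):
    """Rebuild words from word pieces.

    A piece that starts with the boundary marker opens a new word; any other
    piece is appended to the word currently being built.
    """
    words = []
    for piece in pieces:
        if piece.startswith(boundary) or not words:
            words.append(piece.lstrip(boundary))
        else:
            words[-1] += piece
    return words


# Spelling taken from the lexicon entry "complicates _comp li c at es".
print(pieces_to_words(["_comp", "li", "c", "at", "es"]))  # ['complicates']
# "ed" continues the current word, "_ed" starts a new one (illustrative pieces).
print(pieces_to_words(["_listen", "ed"]))   # ['listened']
print(pieces_to_words(["_listen", "_ed"]))  # ['listen', 'ed']
```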

Regarding your second question: I presume (and this could be verified by looking at the emissions) that if both versions of a token exist in the token set (with and without the underscore), both would get a relatively high probability at that point in the utterance. The word-level LM then helps decide which one to choose, i.e. whether to start a new word (the token with the underscore) or to continue the previous word (the token without it).
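A toy illustration of that disambiguation, assuming the common shallow-fusion form score = AM + lm_weight × LM + word_score × (number of words), roughly in the spirit of the decoder's `--lmweight` and `--wordscore` options. All numbers and default weights below are made up for illustration; they are not decoder output.

```python
def hypothesis_score(am_logprob, lm_logprob, n_words, lm_weight=1.0, word_score=0.5):
    """Shallow-fusion style score: AM + lm_weight * LM + word_score * #words.

    The lm_weight and word_score defaults are arbitrary illustration values.
    """
    return am_logprob + lm_weight * lm_logprob + word_score * n_words


# Made-up numbers: the acoustic model slightly prefers "_ed" (new word),
# but the word-level LM strongly prefers the single word "listened".
candidates = {
    "listened": {"am": -4.10, "lm": -6.0, "words": 1},    # _listen ed
    "listen ed": {"am": -4.05, "lm": -13.5, "words": 2},  # _listen _ed
}

am_only = max(candidates, key=lambda h: candidates[h]["am"])
fused = max(
    candidates,
    key=lambda h: hypothesis_score(
        candidates[h]["am"], candidates[h]["lm"], candidates[h]["words"]
    ),
)
print("AM only:", am_only)
print("AM + LM:", fused)
```

With these toy values the acoustic model alone picks "listen ed", while adding the LM term flips the decision to "listened".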

Thanks a lot @abhinavkulkarni for your reply. As you suggested, I will have a look at the emission files to see what the output looks like for the specific word (unremarkable).

I am wondering why it is so difficult to train such an acoustic model with a different lexicon, i.e. a phoneme-based or letter-based AM.

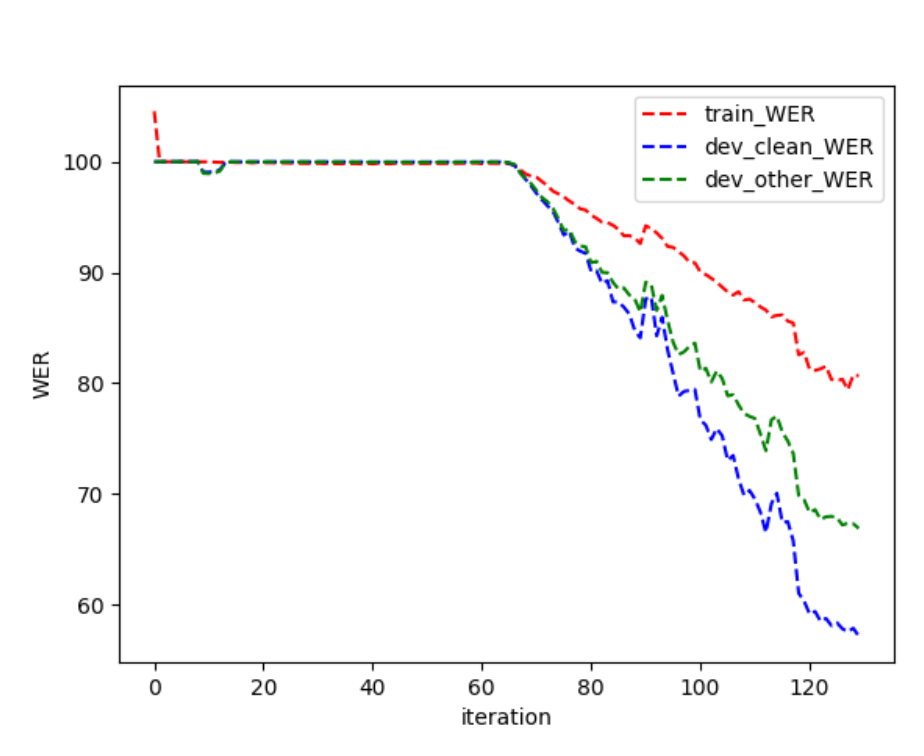

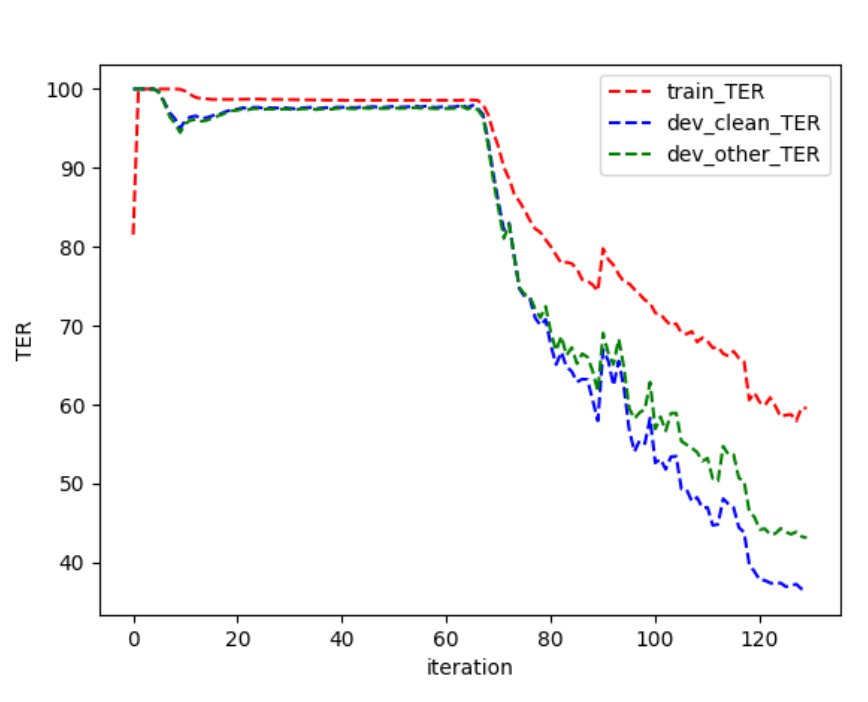

--train AM using a letter-based lexicon:

- epochs 1 to 63: lr 0.001
- epochs 64 to 67: lr 0.01
- epochs 68 to 90: lr 0.1
- epochs 91 to 118: lr 0.4
- epochs 119 to 130: lr 0.05

Here, epoch = iteration.
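Written down as data, the manual schedule above can be checked against the log (epoch 118 logs `lr: 0.400000`, epoch 119 logs `lr: 0.050000`). A minimal sketch; `SCHEDULE` and `lr_at` are hypothetical names, not wav2letter options.

```python
# (first_epoch, last_epoch, lr) for the letter-based run, as listed above.
SCHEDULE = [
    (1, 63, 0.001),
    (64, 67, 0.01),
    (68, 90, 0.1),
    (91, 118, 0.4),
    (119, 130, 0.05),
]


def lr_at(epoch):
    """Return the learning rate used at a given epoch of the schedule."""
    for first, last, lr in SCHEDULE:
        if first <= epoch <= last:
            return lr
    raise ValueError(f"epoch {epoch} is outside the schedule")


# Sanity check: the ranges should be contiguous, with no gaps or overlaps.
for (_, last, _), (first, _, _) in zip(SCHEDULE, SCHEDULE[1:]):
    assert last + 1 == first, (last, first)

print(lr_at(118), lr_at(119))
```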

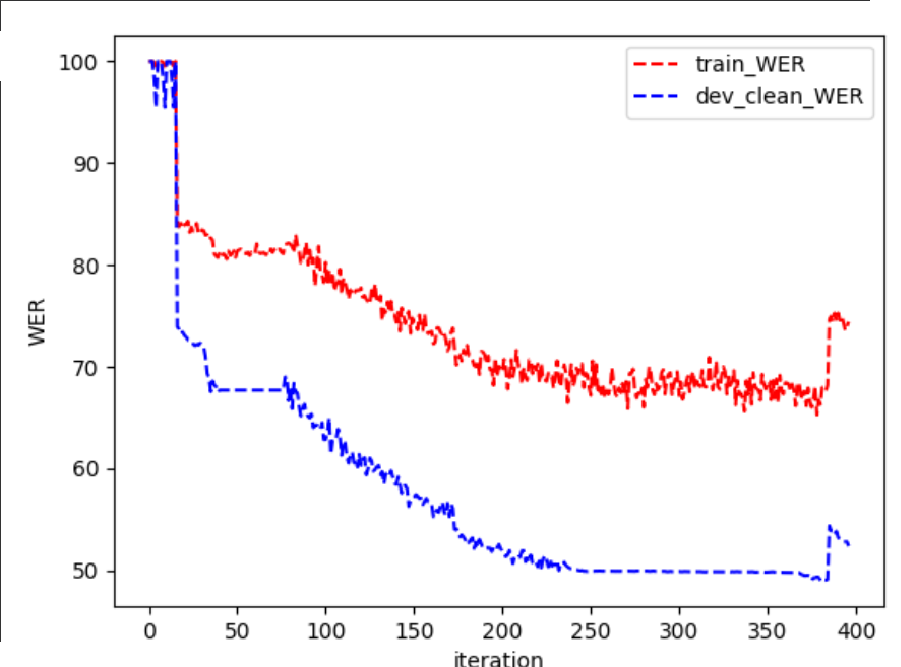

--train AM using a phoneme-based lexicon:

What model stride did you use for the phoneme- and letter-based cases?

The model is TDS-CTC (streaming version); the stride of each C2 layer is 2 (the default):

SAUG 80 27 2 100 1.0 2
V -1 NFEAT 1 0
C2 1 10 21 1 2 1 -1 -1
R
DO 0.0
LN 1 2
TDS 10 21 80 0.05 2400
TDS 10 21 80 0.05 2400
TDS 10 21 80 0.05 2400
TDS 10 21 80 0.1 2400
TDS 10 21 80 0.1 2400
C2 10 14 21 1 2 1 -1 -1
R
DO 0.0
LN 1 2
TDS 14 21 80 0.15 3360
TDS 14 21 80 0.15 3360
TDS 14 21 80 0.15 3360
TDS 14 21 80 0.15 3360
TDS 14 21 80 0.15 3360
TDS 14 21 80 0.15 3360
C2 14 18 21 1 2 1 -1 -1
R
DO 0.0
LN 1 2
TDS 18 21 80 0.15 4320
TDS 18 21 80 0.15 4320
TDS 18 21 80 0.15 4320
TDS 18 21 80 0.15 4320
TDS 18 21 80 0.2 4320
TDS 18 21 80 0.2 4320
TDS 18 21 80 0.25 4320
TDS 18 21 80 0.25 4320
TDS 18 21 80 0.25 4320
TDS 18 21 80 0.25 4320
V 0 1440 1 0
RO 1 0 3 2
L 1440 NLABEL

Should I change the stride to 1, as in the tutorial (letter-based) model?

V -1 1 NFEAT 0
C2 NFEAT 256 8 1 2 1 -1 -1 --> stride 2
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
C2 256 256 8 1 1 1 -1 -1 --> stride 1
R
RO 2 0 3 1
L 256 512
R
L 512 NLABEL
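The total stride of each arch can be read off by multiplying the time strides of its C2 layers. A minimal sketch, assuming the C2 argument order `in out kw kh sx sy px py` (so the time stride `sx` is the 5th argument) and that no other layer in these two archs downsamples in time; the strings are rebuilt from the two archs above, and `total_stride` is a hypothetical helper.

```python
def total_stride(arch):
    """Product of the time strides of all C2 layers in an arch string.

    Assumes 'C2 in out kw kh sx sy px py', i.e. the time stride sx is the
    5th argument after the layer name, and that no other layer downsamples.
    """
    tokens = arch.split()
    stride = 1
    for i, token in enumerate(tokens):
        if token == "C2":
            stride *= int(tokens[i + 5])
    return stride


# TDS-CTC arch from this thread, rebuilt from its repeated blocks.
TDS_CTC = (
    "SAUG 80 27 2 100 1.0 2 V -1 NFEAT 1 0 "
    "C2 1 10 21 1 2 1 -1 -1 R DO 0.0 LN 1 2 "
    + "TDS 10 21 80 0.05 2400 " * 3
    + "TDS 10 21 80 0.1 2400 " * 2
    + "C2 10 14 21 1 2 1 -1 -1 R DO 0.0 LN 1 2 "
    + "TDS 14 21 80 0.15 3360 " * 6
    + "C2 14 18 21 1 2 1 -1 -1 R DO 0.0 LN 1 2 "
    + "TDS 18 21 80 0.15 4320 " * 4
    + "TDS 18 21 80 0.2 4320 " * 2
    + "TDS 18 21 80 0.25 4320 " * 4
    + "V 0 1440 1 0 RO 1 0 3 2 L 1440 NLABEL"
)

# Letter-based tutorial arch.
TUTORIAL = (
    "V -1 1 NFEAT 0 C2 NFEAT 256 8 1 2 1 -1 -1 R "
    + "C2 256 256 8 1 1 1 -1 -1 R " * 7
    + "RO 2 0 3 1 L 256 512 R L 512 NLABEL"
)

# Same TDS arch with the lowest C2 layer's time stride set to 1.
TDS_CTC_STRIDE4 = TDS_CTC.replace("C2 1 10 21 1 2", "C2 1 10 21 1 1", 1)

print(total_stride(TDS_CTC), total_stride(TUTORIAL), total_stride(TDS_CTC_STRIDE4))
```

Setting only the lowest C2 stride to 1 would bring the TDS model from 8 to 4. Keep in mind that halving the stride doubles the number of frames every layer above it processes, so training gets slower and uses more memory.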

The total stride of the model is 8 (three C2 layers with stride 2 each, 2 × 2 × 2 = 8), which is too much for letters and phonemes.

...

C2 1 10 21 1 2 1 -1 -1

...

C2 10 14 21 1 2 1 -1 -1

...

C2 14 18 21 1 2 1 -1 -1

...

You need a total stride of 2 or 3, maybe 4, so you can simply change the stride of the lowest layer(s) from 2 to 1. FYI: a stride of 8 means the model outputs only one letter every 80 ms (8 frames × 10 ms). With this stride, or 16, we can output entire words, so for letters/phonemes the model needs to output more frequently.
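The 80 ms figure, and why letters run into trouble at stride 8, can be checked with a little arithmetic. This sketch assumes the default 10 ms frame shift (`--framestridems`), that `avg-isz` in the training log counts input frames and `avg-tsz` counts target tokens, and approximates the output length as ceil(input / stride). It also uses the CTC constraint that each label needs a frame and each pair of repeated labels needs an extra blank frame between them. All helper names are hypothetical.

```python
import math

FRAME_SHIFT_MS = 10  # assumed default feature frame shift


def output_period_ms(total_stride, frame_shift_ms=FRAME_SHIFT_MS):
    """Time between two consecutive outputs of the acoustic model."""
    return total_stride * frame_shift_ms


def output_frames(input_frames, total_stride):
    """Approximate number of output frames after striding (ceil division)."""
    return math.ceil(input_frames / total_stride)


def ctc_min_frames(target):
    """Minimum frames CTC needs: one per label, plus a blank between repeats."""
    repeats = sum(1 for a, b in zip(target, target[1:]) if a == b)
    return len(target) + repeats


AVG_INPUT_FRAMES = 1258  # avg-isz from the training log
AVG_TARGET_LEN = 219     # avg-tsz from the training log

for stride in (8, 4, 2):
    frames = output_frames(AVG_INPUT_FRAMES, stride)
    print(f"stride {stride}: one output every {output_period_ms(stride)} ms, "
          f"~{frames} output frames for ~{AVG_TARGET_LEN} target tokens")

print(ctc_min_frames("hello"))  # 6: "ll" needs a blank in between
```

Under these assumptions, stride 8 leaves about 158 output frames for about 219 letters on an average utterance, fewer than CTC can align, whereas stride 4 (about 315 frames) or 2 leaves room. This may help explain why the letter-based run struggles at stride 8.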