fanlix

Per #4560, would `overcommitGuestOverhead: true` help in this case?

So an additional 300M (calculated by [the code](https://github.com/kubevirt/kubevirt/blob/18b90f3ff8e174a7a2365f296154ac00329bfe9a/pkg/virt-controller/services/renderresources.go#L272)) is added by the `overcommitGuestOverhead: false` config. This is enough for normal KubeVirt usage, but a VM under heavy load uses more memory than expected, causing...

`top` status of an OOM

## 1, vm running stable.
* the **hv.os** top shows
* the **vm.os** top shows

## 2, kill & restart app2.
* **hv.os**...

Yes, this OOM happened on the HOST side. Then how do I avoid it?
* I already decreased the RAM overcommit from 150% to 110%. Should I decrease it to 90%?
* should I...

About the memory-hole problem: is there any config to force KubeVirt to pre-allocate the whole 64G of memory? Then the HOST does not need any complex dynamic memory logic and just handles one piece of pure physical RAM....

Recently my heavily loaded VMs OOM-crash every day. For example, a VM running a 20G app: I already allocated 72G of RAM, but it is still OOM-killed. I really need some method to fix or...

@guangbochen thanks very much. I am just trying to edit the k8s YAML for the cgroup limit.

By increasing reserved memory from 100M (default) to 256M, cgroup-oom-kill has not been seen for 3 days now. In the load test, both guest-os-oom and guest-os-swap were triggered. _But I don't know how much reserved memory is...

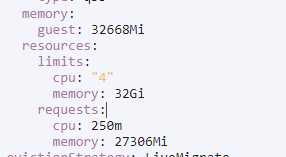

The memory calculation code is here: https://github.com/harvester/harvester/blob/master/pkg/webhook/resources/virtualmachine/mutator.go#L171-L182

Different reserved-memory setups result in these VMI configs (overcommit=120%):
* default 100M
* force 0
* setup 256M
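The three setups above can be compared with a small sketch. This is only my reading of the linked mutator, not a copy of it: I assume the memory request is the limit divided by the overcommit ratio, and the guest memory is the limit minus the reserved memory. The function name `vmi_memory` and the 64 GiB limit are made up for illustration.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def vmi_memory(limit_bytes, overcommit_percent, reserved_bytes):
    """Return (request, guest) in bytes.

    ASSUMPTION: request = limit * 100 / overcommit and
    guest = limit - reserved. Check the linked mutator code before
    relying on these formulas.
    """
    request = limit_bytes * 100 // overcommit_percent
    guest = limit_bytes - reserved_bytes
    return request, guest


# Compare the three reserved-memory setups for a hypothetical 64 GiB limit
# at overcommit=120%.
setups = (("default 100M", 100 * MIB), ("force 0", 0), ("setup 256M", 256 * MIB))
for label, reserved in setups:
    request, guest = vmi_memory(64 * GIB, 120, reserved)
    print(f"{label}: request={request // MIB}Mi guest={guest // MIB}Mi")
```

Under these assumptions the request does not change between setups; only the memory exposed to the guest shrinks as reserved memory grows, which leaves more room inside the cgroup limit for overhead outside the guest.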

cgroup-oom-kill has not happened for 3 weeks. My setup for reserved memory now:
* tiny vm = default 100M
* normal vm = 256M
* ram>64G vm = 512M (maybe overkill,...
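The tiers above, written as a small helper. The cutoff between "tiny" and "normal" is not stated above, so it is a required parameter here (`tiny_below_gib` is a hypothetical name, and the value 4 in the example is only a placeholder). These values worked for my load and are not a general recommendation.

```python
def reserved_memory_mib(ram_gib, tiny_below_gib):
    """Pick reserved memory (MiB) for a VM by its RAM size.

    ASSUMPTION: tiny_below_gib is a hypothetical cutoff for a "tiny" VM;
    the tiers themselves are 100M / 256M / 512M as listed above.
    """
    if ram_gib > 64:
        return 512  # ram>64G vm (maybe overkill)
    if ram_gib < tiny_below_gib:
        return 100  # tiny vm keeps the default
    return 256      # normal vm


# Example with a placeholder "tiny" cutoff of 4 GiB.
for ram in (2, 16, 72):
    print(f"{ram}G vm -> {reserved_memory_mib(ram, tiny_below_gib=4)}M reserved")
```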