theseus

theseus copied to clipboard

theseus copied to clipboard

WIP augmented Lagrangian solver for adding equality constraints

This has some examples and a prototype @dishank-b and I created to help us get in equality constraints with an augmented Lagrangian method as described in this slide deck. It's appealing to have this method in Theseus because it relies mostly on the unconstrained GN/LM NLLS solvers we already have to solve for the update with the constraints moved into the objective as a penalty (via augmenting the Lagnangian). I created a scratch directory (that we can delete before squashing and merging this PR) with file augmented_lagrangian.py that implements an initial prototype of the method along with 2 examples in quadratic.py and car.py that call into it.

The quadratic example (quadratic.py)

This just makes sure we can solve min_x ||x||^2 s.t. x_0 = 1, which has an analytic solution of x^\star = [1., 0] and would make a good unit test once we've better-integrated this.

$ ./quadratic.py

=== [0] mu: 1.00e+00, ||g||: 5.00e-01

{'x': tensor([[0.5000, 0.0000]])}

=== [1] mu: 1.00e+00, ||g||: 2.50e-01

{'x': tensor([[0.7500, 0.0000]])}

=== [2] mu: 2.00e+00, ||g||: 8.33e-02

{'x': tensor([[0.9167, 0.0000]])}

=== [3] mu: 4.00e+00, ||g||: 1.67e-02

{'x': tensor([[0.9833, 0.0000]])}

=== [4] mu: 4.00e+00, ||g||: 3.33e-03

{'x': tensor([[0.9967, 0.0000]])}

=== [5] mu: 4.00e+00, ||g||: 6.67e-04

{'x': tensor([[0.9993, 0.0000]])}

=== [6] mu: 4.00e+00, ||g||: 1.33e-04

{'x': tensor([[0.9999, 0.0000]])}

=== [7] mu: 4.00e+00, ||g||: 2.67e-05

{'x': tensor([[1.0000, 0.0000]])}

=== [8] mu: 4.00e+00, ||g||: 5.30e-06

{'x': tensor([[1.0000, 0.0000]])}

=== [9] mu: 4.00e+00, ||g||: 1.07e-06

{'x': tensor([[1.0000, 0.0000]])}

Car example (car.py)

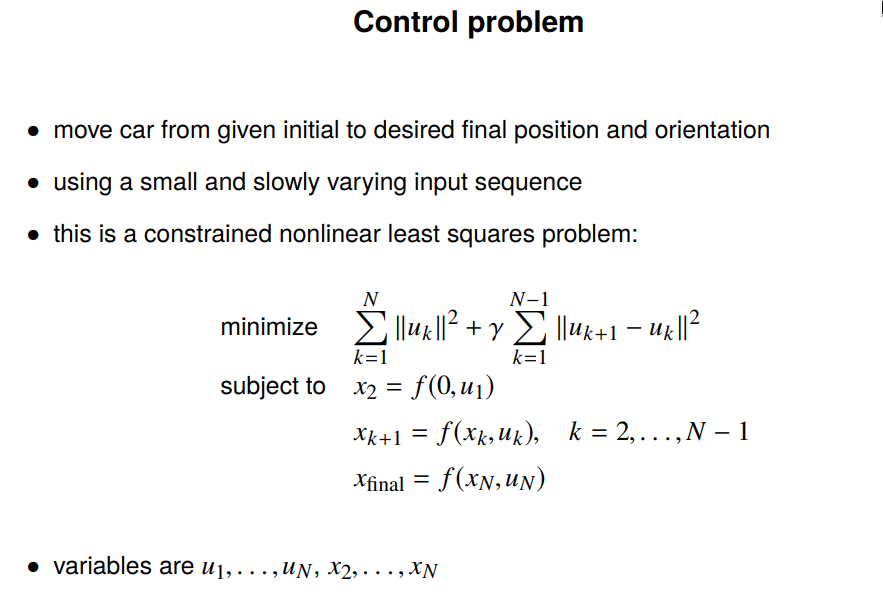

Solves this optimization problem:

We can reproduce this in a simple setting that goes from the origin to the upper right, but in general it doesn't seem as stable as what Vandenberghe has implemented:

Discussion on remaining design choices

I still don't see the best way of integrating the prototype in augmented_lagrangian.py into Theseus. The rough changes are:

- The addition of an

equality_constraint_fn(gin the slides) that should equal zero. - Augmenting the cost with the term from function

- Creating an outer loop that calls into the unconstrained optimizer on the augmented objective

One option is to create a new AugmentedLagrangian optimizer class that adds the constraints, updates the cost, and then iteratively calls into an unconstrained solver. This seems appealing as the existing optimizer could be kept as a child and called into.

Another design choice is how the user should specify the equality constraints. I've currently made the equality_constraint_fn have the same interface as a user-specified err_fn when using AutoDiffCostFunction.

TODOs once design choices are resolved

- [ ] Move the quadratic example to a unit test

- [ ] Add batching support and add a test for that too

- [ ] Add a more general way of augmenting the cost function beyond assuming it's an

AutoDiffCostFunction. I think it could be done similar to https://github.com/facebookresearch/theseus/blob/main/theseus/core/robust_cost_function.py - [ ] Add docs and an example for solving equality-constrained problems

- [ ] Try to stabilize the car example some more and get it to work on some of the harder settings

- [ ] Think about adding any other constrained NLLS examples

- [ ] Work out the details for differentiation/the backward pass and add examples/unit tests for that

- [ ] Delete

scratchdirectory

Even more future directions

- Think about adding support for inequality constraints

- Is it a limitation to ask the user to provide a single function returning all of the equality constraints?