metaseq

metaseq copied to clipboard

metaseq copied to clipboard

`convert_to_singleton` seems to hang for OPT-66B

What is your question?

With the directory prepared

$ ls 66b/

dict.txt reshard-model_part-0-shard0.pt reshard-model_part-3-shard0.pt reshard-model_part-6-shard0.pt

gpt2-merges.txt reshard-model_part-1-shard0.pt reshard-model_part-4-shard0.pt reshard-model_part-7-shard0.pt

gpt2-vocab.json reshard-model_part-2-shard0.pt reshard-model_part-5-shard0.pt

I had to hack checkpoint_utils.py a bit, since this assumption isn't true for OPT-66B:

https://github.com/facebookresearch/metaseq/blob/ac8659de23b680005a14490d72a874613ab59381/metaseq/checkpoint_utils.py#L390-L391

with the following instead

# path to checkpoint...-shared.pt

local_path = local_path.split('.')[0] + '-shard0.pt'

paths_to_load = get_paths_to_load(local_path, suffix="shard")

Running the following

NCCL_SHM_DISABLE=1 NCCL_DEBUG=INFO python -m metaseq.scripts.convert_to_singleton 66b/

is taking a long time (22 hours and counting). Initially nvidia-smi looks like this:

and then the process on

and then the process on GPU 5 terminated first, and it has been in the following state for hours:

$ nvidia-smi

Thu Oct 13 19:24:37 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 460.73.01 Driver Version: 520.61.05 CUDA Version: 11.8 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla V100-SXM2... Off | 00000000:00:16.0 Off | 0 |

| N/A 54C P0 74W / 300W | 20049MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Tesla V100-SXM2... Off | 00000000:00:17.0 Off | 0 |

| N/A 53C P0 72W / 300W | 20133MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 2 Tesla V100-SXM2... Off | 00000000:00:18.0 Off | 0 |

| N/A 52C P0 73W / 300W | 19845MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 3 Tesla V100-SXM2... Off | 00000000:00:19.0 Off | 0 |

| N/A 50C P0 70W / 300W | 19857MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 4 Tesla V100-SXM2... Off | 00000000:00:1A.0 Off | 0 |

| N/A 54C P0 76W / 300W | 20073MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 5 Tesla V100-SXM2... Off | 00000000:00:1B.0 Off | 0 |

| N/A 47C P0 44W / 300W | 1413MiB / 32768MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 6 Tesla V100-SXM2... Off | 00000000:00:1C.0 Off | 0 |

| N/A 50C P0 72W / 300W | 19977MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 7 Tesla V100-SXM2... Off | 00000000:00:1D.0 Off | 0 |

| N/A 54C P0 69W / 300W | 19905MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 1335 C python 19788MiB |

| 1 N/A N/A 1419 C ...onda/envs/user/bin/python 19872MiB |

| 2 N/A N/A 1420 C ...onda/envs/user/bin/python 19584MiB |

| 3 N/A N/A 1421 C ...onda/envs/user/bin/python 19596MiB |

| 4 N/A N/A 1422 C ...onda/envs/user/bin/python 19812MiB |

| 6 N/A N/A 1424 C ...onda/envs/user/bin/python 19716MiB |

| 7 N/A N/A 1425 C ...onda/envs/user/bin/python 19644MiB |

+-----------------------------------------------------------------------------+

Is there something obviously wrong here, or something I should try instead? Just in case it's really taking a long time, it's still running. The last few logging lines at INFO level look like this:

(...)

i-0b2d24dbd20c27dd0:1422:3388 [4] NCCL INFO Channel 14 : 4[1a0] -> 2[180] via P2P/indirect/6[1c0]

i-0b2d24dbd20c27dd0:1423:3387 [5] NCCL INFO Channel 14 : 5[1b0] -> 3[190] via P2P/indirect/1[170]

i-0b2d24dbd20c27dd0:1419:3383 [1] NCCL INFO Channel 14 : 1[170] -> 7[1d0] via P2P/indirect/3[190]

i-0b2d24dbd20c27dd0:1422:3388 [4] NCCL INFO Channel 07 : 4[1a0] -> 3[190] via P2P/indirect/0[160]

i-0b2d24dbd20c27dd0:1335:3382 [0] NCCL INFO Channel 07 : 0[160] -> 7[1d0] via P2P/indirect/4[1a0]

i-0b2d24dbd20c27dd0:1422:3388 [4] NCCL INFO Channel 15 : 4[1a0] -> 3[190] via P2P/indirect/0[160]

i-0b2d24dbd20c27dd0:1335:3382 [0] NCCL INFO Channel 15 : 0[160] -> 7[1d0] via P2P/indirect/4[1a0]

i-0b2d24dbd20c27dd0:1419:3383 [1] NCCL INFO comm 0x7f5f78003090 rank 1 nranks 8 cudaDev 1 busId 170 - Init COMPLETE

i-0b2d24dbd20c27dd0:1420:3386 [2] NCCL INFO comm 0x7f7408003090 rank 2 nranks 8 cudaDev 2 busId 180 - Init COMPLETE

i-0b2d24dbd20c27dd0:1422:3388 [4] NCCL INFO comm 0x7fdfc8003090 rank 4 nranks 8 cudaDev 4 busId 1a0 - Init COMPLETE

i-0b2d24dbd20c27dd0:1335:3382 [0] NCCL INFO comm 0x7f5b60003090 rank 0 nranks 8 cudaDev 0 busId 160 - Init COMPLETE

i-0b2d24dbd20c27dd0:1424:3384 [6] NCCL INFO comm 0x7fd82c003090 rank 6 nranks 8 cudaDev 6 busId 1c0 - Init COMPLETE

i-0b2d24dbd20c27dd0:1423:3387 [5] NCCL INFO comm 0x7fd544003090 rank 5 nranks 8 cudaDev 5 busId 1b0 - Init COMPLETE

i-0b2d24dbd20c27dd0:1421:3389 [3] NCCL INFO comm 0x7f9c64003090 rank 3 nranks 8 cudaDev 3 busId 190 - Init COMPLETE

i-0b2d24dbd20c27dd0:1425:3385 [7] NCCL INFO comm 0x7f3fe0003090 rank 7 nranks 8 cudaDev 7 busId 1d0 - Init COMPLETE

i-0b2d24dbd20c27dd0:1335:1335 [0] NCCL INFO Launch mode Parallel

What's your environment?

- metaseq Version: 7828d72815a9a581ab47b95876d38cb262741883 (Oct 5 main)

- PyTorch Version: 1.12.1+cu113

- OS: Ubuntu 18.04.6 LTS

- How you installed metaseq:

pip - Build command you used (if compiling from source): N.A.

- Python version: 3.10

- CUDA/cuDNN version: CUDA 11.8

- GPU models and configuration: 8 x V100 SXM2 32 GB

With the same setup on another (identical) instance, convert_to_singleton seems to be hanging in a similar state, except that now it's the process on GPU 7 that finished first:

$ nvidia-smi

Fri Oct 14 01:58:48 2022

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 520.61.05 Driver Version: 520.61.05 CUDA Version: 11.8 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla V100-SXM2... Off | 00000000:00:16.0 Off | Off |

| N/A 46C P0 72W / 300W | 19791MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 1 Tesla V100-SXM2... Off | 00000000:00:17.0 Off | Off |

| N/A 44C P0 69W / 300W | 19875MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 2 Tesla V100-SXM2... Off | 00000000:00:18.0 Off | Off |

| N/A 46C P0 70W / 300W | 19587MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 3 Tesla V100-SXM2... Off | 00000000:00:19.0 Off | Off |

| N/A 43C P0 67W / 300W | 19599MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 4 Tesla V100-SXM2... Off | 00000000:00:1A.0 Off | Off |

| N/A 45C P0 68W / 300W | 19815MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 5 Tesla V100-SXM2... Off | 00000000:00:1B.0 Off | Off |

| N/A 45C P0 69W / 300W | 19863MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 6 Tesla V100-SXM2... Off | 00000000:00:1C.0 Off | Off |

| N/A 42C P0 69W / 300W | 19719MiB / 32768MiB | 100% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

| 7 Tesla V100-SXM2... Off | 00000000:00:1D.0 Off | Off |

| N/A 38C P0 45W / 300W | 939MiB / 32768MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 94277 C python 19788MiB |

| 1 N/A N/A 94344 C ...onda/envs/user/bin/python 19872MiB |

| 2 N/A N/A 94345 C ...onda/envs/user/bin/python 19584MiB |

| 3 N/A N/A 94346 C ...onda/envs/user/bin/python 19596MiB |

| 4 N/A N/A 94347 C ...onda/envs/user/bin/python 19812MiB |

| 5 N/A N/A 94348 C ...onda/envs/user/bin/python 19860MiB |

| 6 N/A N/A 94349 C ...onda/envs/user/bin/python 19716MiB |

+-----------------------------------------------------------------------------+

@punitkoura is actually working on this

Isn't this similar to what Binh is working on? Creating a consolidated sharding script https://github.com/facebookresearch/metaseq/issues/376

I don't know exactly what @punitkoura is working on, but the fact that

- I can load OPT-2.7B within reasonable time now as long as the world size matches (i.e. on a 4-GPU instance)

- OPT-66B is the only publicly-released model with files named

reshard-model_part-$i-shard0.ptthrefore requires the hack above

makes me think that this is a separate issue that only affects OPT-66B.

Punit has a patch I believe.

Hi @punitkoura, could you share a bit about the root cause and the current status? I would be more than happy to help if there is something I can do!

@EIFY Ahh sorry for the delay! Could you have a look at this patch which saves memory when trying to consolidate different model parts into a single checkpoint? https://github.com/facebookresearch/metaseq/pull/430

I haven't merged this since the patch requires PyTorch 1.12 at least.

For context, the process you mentioned is probably terminating early because of running out of memory. The patch I tagged in my previous comment does a gather only on the first process instead of consolidating everything on all processes (and thereby creating 8 copies of the model).

@punitkoura I happen to be running PyTorch 1.12 so I will give it a try, but questions:

- Was

convert_to_singletonhanging because one of the processes died of OOM? Ideally we want it to terminate if it's no longer possible to complete. - With #430 does it still require

GPU 0to hold a complete copy of the model? I don't think 32 GB will be enough for OPT-66B...

And sorry about the checkpoint naming confusion... The checkpoints should ideally be named similar to the other checkpoint names. i.e.

reshard-model_part-0.pt , reshard-model_part-1.pt , etc.

instead of having that shard0 suffix. Changing this would enable you to load the checkpoint without having to patch checkpoint_utils.py .

I'll work on getting the names fixed in the meantime.

@punitkoura I happen to be running PyTorch 1.12 so I will give it a try, but questions:

- Was

convert_to_singletonhanging because one of the processes died of OOM? Ideally we want it to terminate if it's no longer possible to complete.

Yes, that is my hypothesis. I observed this when trying to consolidate other larger models as well. I agree, we should detect this condition and terminate instead of hanging.

- With Convert to singleton.py - Gathering parameters only on rank 0 to save memory for large models #430 does it still require

GPU 0to hold a complete copy of the model? I don't think 32 GB will be enough for OPT-66B...

We won't be using GPU 0 to store the whole model. We stitch all parameters on CPU, so we won't need extra GPU memory from what I've observed. (See the .cpu() call in convert_to_singleton). As long as you have enough CPU memory you should be fine. But let me know if you still face issues.

Tl;Dr - The current state of convert_to_singleton seems to waste CPU memory by creating multiple copies of the model. Which we try to fix using the path in https://github.com/facebookresearch/metaseq/pull/430

Using #430 convert_to_singleton completed successfully after writing restored.pt 🎉

However, metaseq-api-local failed to load from it as it tries to put the whole model on GPU 0:

$ metaseq-api-local

2022-10-26 21:15:46 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/restored.pt

2022-10-26 21:30:11 | INFO | metaseq.checkpoint_utils | Done reading from disk

Traceback (most recent call last):

File "/home/jason_chou/.conda/envs/user/bin/metaseq-api-local", line 8, in <module>

sys.exit(cli_main())

File "/home/default_user/metaseq/metaseq_cli/interactive_hosted.py", line 370, in cli_main

distributed_utils.call_main(cfg, worker_main, namespace_args=args)

File "/home/default_user/metaseq/metaseq/distributed/utils.py", line 279, in call_main

return main(cfg, **kwargs)

File "/home/default_user/metaseq/metaseq_cli/interactive_hosted.py", line 176, in worker_main

models = generator.load_model() # noqa: F841

File "/home/default_user/metaseq/metaseq/hub_utils.py", line 579, in load_model

models, _model_args, _task = _load_checkpoint()

File "/home/default_user/metaseq/metaseq/hub_utils.py", line 562, in _load_checkpoint

return checkpoint_utils.load_model_ensemble_and_task(

File "/home/default_user/metaseq/metaseq/checkpoint_utils.py", line 488, in load_model_ensemble_and_task

model = build_model_hook(cfg, task)

File "/home/default_user/metaseq/metaseq/hub_utils.py", line 553, in _build_model

model = task.build_model(cfg.model).cuda()

File "/home/default_user/metaseq/metaseq/tasks/base_task.py", line 531, in build_model

model = models.build_model(args, self)

File "/home/default_user/metaseq/metaseq/models/__init__.py", line 87, in build_model

return model.build_model(cfg, task)

File "/home/default_user/metaseq/metaseq/models/transformer_lm.py", line 185, in build_model

decoder = TransformerDecoder(

File "/home/default_user/metaseq/metaseq/models/transformer_decoder.py", line 127, in __init__

layers.append(self.build_decoder_layer(args))

File "/home/default_user/metaseq/metaseq/models/transformer_decoder.py", line 253, in build_decoder_layer

layer = self.build_base_decoder_layer(args)

File "/home/default_user/metaseq/metaseq/models/transformer_decoder.py", line 250, in build_base_decoder_layer

return TransformerDecoderLayer(args)

File "/home/default_user/metaseq/metaseq/modules/transformer_decoder_layer.py", line 94, in __init__

self.fc2 = self.build_fc2(

File "/home/default_user/metaseq/metaseq/modules/transformer_decoder_layer.py", line 134, in build_fc2

return Linear(

File "/home/default_user/metaseq/metaseq/modules/linear.py", line 41, in __init__

torch.empty(out_features, in_features, device=device, dtype=dtype)

RuntimeError: CUDA out of memory. Tried to allocate 648.00 MiB (GPU 0; 31.75 GiB total capacity; 30.64 GiB already allocated; 270.94 MiB free; 30.65 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

I thought metaseq would shard the model automatically here. Are there configs / env variables that can make it?

I have tried the latest main just in case and it didn't help. @punitkoura

@EIFY Metaseq won't shard the model automatically here... Could you let me know the end result you're trying to achieve? You could have just loaded the model parallel model to be used with metaseq-api-local (without consolidating)

@punitkoura I just want to load the model and run inference (i.e. sentence completion). What should I put as MODEL_FILE in constants.py in order to load the model parallel model?

try:

# internal logic denoting where checkpoints are in meta infrastructure

from metaseq_internal.constants import CHECKPOINT_FOLDER

except ImportError:

# CHECKPOINT_FOLDER should point to a shared drive (e.g. NFS) where the

# checkpoints from S3 are stored. As an example:

# CHECKPOINT_FOLDER = "/example/175B/reshard_no_os"

# $ ls /example/175B/reshard_no_os

# reshard-model_part-0.pt

# reshard-model_part-1.pt

# reshard-model_part-2.pt

# reshard-model_part-3.pt

# reshard-model_part-4.pt

# reshard-model_part-5.pt

# reshard-model_part-6.pt

# reshard-model_part-7.pt

CHECKPOINT_FOLDER = "/home/jason_chou/redspot_home/66b/"

# tokenizer files

BPE_MERGES = os.path.join(CHECKPOINT_FOLDER, "gpt2-merges.txt")

BPE_VOCAB = os.path.join(CHECKPOINT_FOLDER, "gpt2-vocab.json")

MODEL_FILE = os.path.join(CHECKPOINT_FOLDER, "restored.pt")

@EIFY You can see this README on how to override constants.py https://github.com/facebookresearch/metaseq/blob/main/metaseq/cli/README.md

You can actually just follow my_first_override.py

Change the checkpoint location. If the checkpoint is at

/path/to/checkpoint/reshard-model_part-{mp}.pt

where mp goes from [0-7]

You would specify the checkpoint folder as

CHECKPOINT_FOLDER = "/path/to/checkpoint/reshard.pt"

MODEL_PARALLEL will be 8.

"--ddp-backend fully_sharded", - since the checkpoint is FSDP wrapped.

Let me know if you face issues here

@punitkoura I think this is what you meant but it didn't work:

Firstly, I manually renamed the files

for i in {0..7}

do

mv reshard-model_part-$i-shard0.pt reshard-model_part-$i.pt

done

such that

$ ls /home/jason_chou/redspot_home/66b

dict.txt gpt2-vocab.json reshard-model_part-1.pt reshard-model_part-3.pt reshard-model_part-5.pt reshard-model_part-7.pt

gpt2-merges.txt reshard-model_part-0.pt reshard-model_part-2.pt reshard-model_part-4.pt reshard-model_part-6.pt restored.pt

Secondly I edited constants.py accordingly:

$ cat metaseq/metaseq/service/constants.py

# Copyright (c) Meta Platforms, Inc. and affiliates. All Rights Reserved.

#

# This source code is licensed under the MIT license found in the

# LICENSE file in the root directory of this source tree.

import os

MAX_SEQ_LEN = 2048

BATCH_SIZE = 2048 # silly high bc we dynamically batch by MAX_BATCH_TOKENS

MAX_BATCH_TOKENS = 3072

DEFAULT_PORT = 6010

MODEL_PARALLEL = 8

TOTAL_WORLD_SIZE = 8

MAX_BEAM = 16

try:

# internal logic denoting where checkpoints are in meta infrastructure

from metaseq_internal.constants import CHECKPOINT_FOLDER

except ImportError:

# CHECKPOINT_FOLDER should point to a shared drive (e.g. NFS) where the

# checkpoints from S3 are stored. As an example:

# CHECKPOINT_FOLDER = "/example/175B/reshard_no_os"

# $ ls /example/175B/reshard_no_os

# reshard-model_part-0.pt

# reshard-model_part-1.pt

# reshard-model_part-2.pt

# reshard-model_part-3.pt

# reshard-model_part-4.pt

# reshard-model_part-5.pt

# reshard-model_part-6.pt

# reshard-model_part-7.pt

CHECKPOINT_FOLDER = "/home/jason_chou/redspot_home/66b/"

# tokenizer files

BPE_MERGES = os.path.join(CHECKPOINT_FOLDER, "gpt2-merges.txt")

BPE_VOCAB = os.path.join(CHECKPOINT_FOLDER, "gpt2-vocab.json")

MODEL_FILE = os.path.join(CHECKPOINT_FOLDER, "reshard.pt")

LAUNCH_ARGS = [

f"--model-parallel-size {MODEL_PARALLEL}",

f"--distributed-world-size {TOTAL_WORLD_SIZE}",

"--ddp-backend fully_sharded",

"--task language_modeling",

f"--bpe-merges {BPE_MERGES}",

f"--bpe-vocab {BPE_VOCAB}",

"--bpe hf_byte_bpe",

f"--merges-filename {BPE_MERGES}", # TODO(susanz): hack for getting interactive_hosted working on public repo

f"--vocab-filename {BPE_VOCAB}", # TODO(susanz): hack for getting interactive_hosted working on public repo

f"--path {MODEL_FILE}",

"--beam 1 --nbest 1",

"--distributed-port 13000",

"--checkpoint-shard-count 1",

f"--batch-size {BATCH_SIZE}",

f"--buffer-size {BATCH_SIZE * MAX_SEQ_LEN}",

f"--max-tokens {BATCH_SIZE * MAX_SEQ_LEN}",

"/tmp", # required "data" argument.

]

However

$ metaseq-api-local

2022-10-26 23:45:43 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/reshard.pt

Traceback (most recent call last):

File "/home/jason_chou/.conda/envs/user/bin/metaseq-api-local", line 8, in <module>

sys.exit(cli_main())

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 380, in cli_main

distributed_utils.call_main(cfg, worker_main, namespace_args=args)

File "/home/jason_chou/metaseq/metaseq/distributed/utils.py", line 279, in call_main

return main(cfg, **kwargs)

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 186, in worker_main

models = generator.load_model() # noqa: F841

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 147, in load_model

models, _model_args, _task = _load_checkpoint()

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 132, in _load_checkpoint

return checkpoint_utils.load_model_ensemble_and_task(

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 457, in load_model_ensemble_and_task

state = load_checkpoint_to_cpu(filename, arg_overrides)

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 392, in load_checkpoint_to_cpu

paths_to_load = get_paths_to_load(local_path, suffix="shard")

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 332, in get_paths_to_load

if not _is_checkpoint_sharded(checkpoint_files):

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 319, in _is_checkpoint_sharded

raise FileNotFoundError(

FileNotFoundError: We weren't able to find any checkpoints corresponding to the parameters you set. This could mean you have a typo, or it could mean you have a mismatch in distributed training parameters, especially --fsdp or--model-parallel. If you are working on a new script, it may also mean you failed to fsdp_wrap or you have an unnecessary fsdp_wrap.

I might have tried this or something similar before with other sizes.

@EIFY sorry about that. Let me replicate your steps and add some print statements in a separate branch to figure out the root cause. I'll update this issue in a bit.

@EIFY I made a branch here with a couple of print statements and the config I used https://github.com/facebookresearch/metaseq/tree/punitkoura/debug-407

One missing flag is --use-sharded-state which I added, but your error comes before that.

This is the output I get

$ python interactive_hosted.py

> initializing tensor model parallel with size 8

> initializing pipeline model parallel with size 1

> initializing model parallel cuda seeds on global rank 0, model parallel rank 0, and data parallel rank 0 with model parallel seed: 2719 and data parallel seed: 1

2022-10-27 01:10:05 | INFO | metaseq.hub_utils | loading model(s) from /data/66B/reshard_no_os/reshard.pt

In load_model_ensemble_and_task filenames = ['/data/66B/reshard_no_os/reshard.pt'] arg_overrides = {} suffix = -model_part-0

Inside load_checkpoint_to_cpu path = /data/66B/reshard_no_os/reshard-model_part-0.pt arg_overrides = {}

Inside get_paths_to_load local_path = /data/66B/reshard_no_os/reshard-model_part-0.pt suffix = shard checkpoint_files = ['/data/66B/reshard_no_os/reshard-model_part-0.pt']

2022-10-27 01:12:12 | INFO | metaseq.checkpoint_utils | Done reading from disk

2022-10-27 01:12:34 | INFO | metaseq.checkpoint_utils | Done loading state dict

2022-10-27 01:12:41 | INFO | metaseq.cli.interactive | loaded model 0

2022-10-27 01:13:10 | INFO | metaseq.cli.interactive | Worker engaged! 10.37.65.78:6010

* Serving Flask app 'interactive_hosted' (lazy loading)

I ran the interactive_hosted command, but interactive_cli hits the same model loading logic.

@EIFY Could you use this branch and paste the output you get?

It seems to me that distributed process groups weren't initialized properly. In addition to Punit's suggestion, can you also quickly check if Slurm environment variables have been inherited correctly (e.g. simply run echo $SLURM_STEP_NODELIST)? This might be important because with the distributed port set, we often initialize distributed process groups by inferring Slurm, and the checkpoint suffix depends on whether the initialization finishes successfully.

$ metaseq-api-local 2022-10-26 23:45:43 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/reshard.pt Traceback (most recent call last): File "/home/jason_chou/.conda/envs/user/bin/metaseq-api-local", line 8, in <module> sys.exit(cli_main()) File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 380, in cli_main distributed_utils.call_main(cfg, worker_main, namespace_args=args) File "/home/jason_chou/metaseq/metaseq/distributed/utils.py", line 279, in call_main return main(cfg, **kwargs) File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 186, in worker_main models = generator.load_model() # noqa: F841 File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 147, in load_model models, _model_args, _task = _load_checkpoint() File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 132, in _load_checkpoint return checkpoint_utils.load_model_ensemble_and_task( File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 457, in load_model_ensemble_and_task state = load_checkpoint_to_cpu(filename, arg_overrides) File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 392, in load_checkpoint_to_cpu paths_to_load = get_paths_to_load(local_path, suffix="shard") File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 332, in get_paths_to_load if not _is_checkpoint_sharded(checkpoint_files): File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 319, in _is_checkpoint_sharded raise FileNotFoundError( FileNotFoundError: We weren't able to find any checkpoints corresponding to the parameters you set. This could mean you have a typo, or it could mean you have a mismatch in distributed training parameters, especially --fsdp or--model-parallel. If you are working on a new script, it may also mean you failed to fsdp_wrap or you have an unnecessary fsdp_wrap.

@punitkoura running off origin/punitkoura/debug-407 (fbcf3e35b552126f0bfa8ef40f93b11614aaa2f8) with no change other than CHECKPOINT_FOLDER = "/home/jason_chou/redspot_home/66b/":

$ metaseq-api-local

2022-10-27 02:18:18 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/reshard.pt

In load_model_ensemble_and_task filenames = ['/home/jason_chou/redspot_home/66b/reshard.pt'] arg_overrides = {} suffix =

Inside load_checkpoint_to_cpu path = /home/jason_chou/redspot_home/66b/reshard.pt arg_overrides = {}

Inside get_paths_to_load local_path = /home/jason_chou/redspot_home/66b/reshard.pt suffix = shard checkpoint_files = []

Traceback (most recent call last):

File "/home/jason_chou/.conda/envs/user/bin/metaseq-api-local", line 8, in <module>

sys.exit(cli_main())

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 380, in cli_main

distributed_utils.call_main(cfg, worker_main, namespace_args=args)

File "/home/jason_chou/metaseq/metaseq/distributed/utils.py", line 279, in call_main

return main(cfg, **kwargs)

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 186, in worker_main

models = generator.load_model() # noqa: F841

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 147, in load_model

models, _model_args, _task = _load_checkpoint()

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 132, in _load_checkpoint

return checkpoint_utils.load_model_ensemble_and_task(

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 464, in load_model_ensemble_and_task

state = load_checkpoint_to_cpu(filename, arg_overrides)

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 397, in load_checkpoint_to_cpu

paths_to_load = get_paths_to_load(local_path, suffix="shard")

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 335, in get_paths_to_load

if not _is_checkpoint_sharded(checkpoint_files):

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 321, in _is_checkpoint_sharded

raise FileNotFoundError(

FileNotFoundError: We weren't able to find any checkpoints corresponding to the parameters you set. This could mean you have a typo, or it could mean you have a mismatch in distributed training parameters, especially --fsdp or--model-parallel. If you are working on a new script, it may also mean you failed to fsdp_wrap or you have an unnecessary fsdp_wrap.

As for interactive_hosted.py:

~/metaseq/metaseq/cli$ python interactive_hosted.py

2022-10-27 02:24:33 | WARNING | metaseq.cli.interactive | Missing slurm configuration, defaulting to 'use entire node' for API

2022-10-27 02:24:34 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/reshard.pt

In load_model_ensemble_and_task filenames = ['/home/jason_chou/redspot_home/66b/reshard.pt'] arg_overrides = {} suffix =

Inside load_checkpoint_to_cpu path = /home/jason_chou/redspot_home/66b/reshard.pt arg_overrides = {}

Inside get_paths_to_load local_path = /home/jason_chou/redspot_home/66b/reshard.pt suffix = shard checkpoint_files = []

Traceback (most recent call last):

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 394, in <module>

cli_main()

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 380, in cli_main

distributed_utils.call_main(cfg, worker_main, namespace_args=args)

File "/home/jason_chou/metaseq/metaseq/distributed/utils.py", line 279, in call_main

return main(cfg, **kwargs)

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 186, in worker_main

models = generator.load_model() # noqa: F841

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 147, in load_model

models, _model_args, _task = _load_checkpoint()

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 132, in _load_checkpoint

return checkpoint_utils.load_model_ensemble_and_task(

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 464, in load_model_ensemble_and_task

state = load_checkpoint_to_cpu(filename, arg_overrides)

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 397, in load_checkpoint_to_cpu

paths_to_load = get_paths_to_load(local_path, suffix="shard")

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 335, in get_paths_to_load

if not _is_checkpoint_sharded(checkpoint_files):

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 321, in _is_checkpoint_sharded

raise FileNotFoundError(

FileNotFoundError: We weren't able to find any checkpoints corresponding to the parameters you set. This could mean you have a typo, or it could mean you have a mismatch in distributed training parameters, especially --fsdp or--model-parallel. If you are working on a new script, it may also mean you failed to fsdp_wrap or you have an unnecessary fsdp_wrap.

@tangbinh I don't think I have Slurm installed but I don't think that's the issue. It seems that the suffix -model_part-0 is missing.

I don't think I have Slurm installed but I don't think that's the issue. It seems that the suffix -model_part-0 is missing.

You're right, Slurm isn't required and might not relevant here. But indeed, I think the distributed process groups weren't initialized properly for some reason, which resulted in the missing checkpoint suffix (the suffix is set in distributed_init). You can see that Punit's log has lines such as initializing tensor model parallel with size 8 and yours didn't.

Perhaps you can print out cfg.distributed_training.distributed_init_method here and try to figure out if distributed_main was invoked.

@tangbinh Yes, you're right, distributed init is not happening for @EIFY since slurm cannot be found. I think we can hack together the distributed config to fix this.

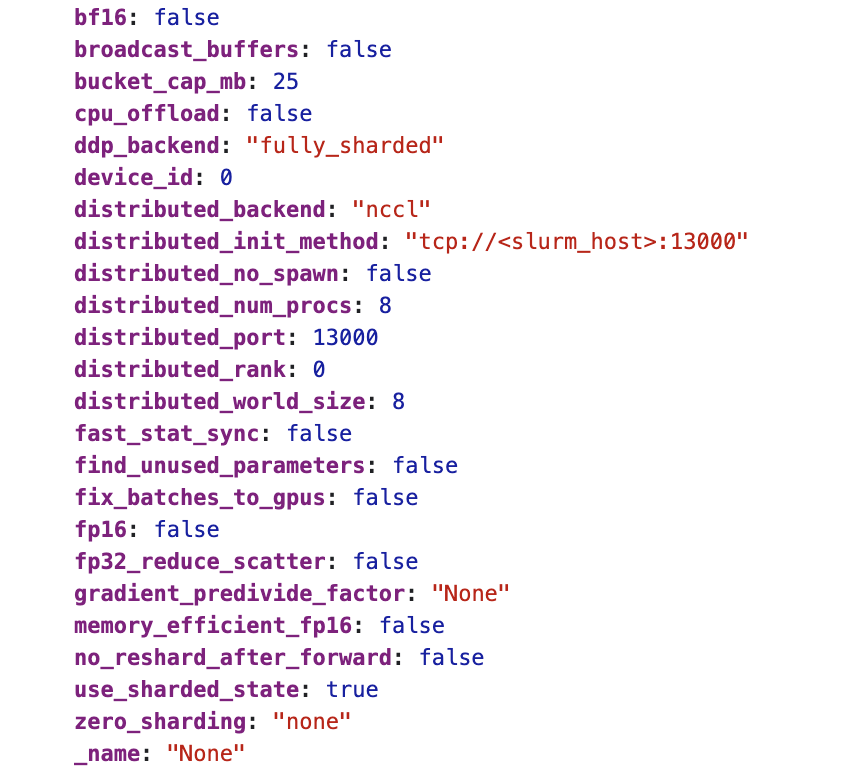

@EIFY could you pull the changes in the same branch (origin/punitkoura/debug-407) and run again to print your cfg.distributed_training config? For me it looks like

@EIFY I'm guessing distributed_init_method would be None for you. In that case, we can replace it with "tcp://localhost:13000". But let's get to that once we see your output.

@punitkoura At 8500e88 I got:

$ metaseq-api-localcfg.distributed_training = {'_name': None, 'distributed_world_size': 8, 'distributed_rank': 0, 'distributed_backend': 'nccl', 'distributed_init_method': None, 'distributed_port': 13000, 'device_id': 0, 'distributed_no_spawn': False, 'ddp_backend': 'fully_sharded', 'bucket_cap_mb': 25, 'fix_batches_to_gpus': False, 'find_unused_parameters': False, 'fast_stat_sync': False, 'broadcast_buffers': False, 'zero_sharding': 'none', 'fp16': False, 'memory_efficient_fp16': False, 'bf16': False, 'no_reshard_after_forward': False, 'fp32_reduce_scatter': False, 'cpu_offload': False, 'use_sharded_state': True, 'gradient_predivide_factor': None, 'distributed_num_procs': 8}

2022-10-27 04:53:30 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/reshard.pt

In load_model_ensemble_and_task filenames = ['/home/jason_chou/redspot_home/66b/reshard.pt'] arg_overrides = {} suffix =

Inside load_checkpoint_to_cpu path = /home/jason_chou/redspot_home/66b/reshard.pt arg_overrides = {}

Inside get_paths_to_load local_path = /home/jason_chou/redspot_home/66b/reshard.pt suffix = shard checkpoint_files = []

Traceback (most recent call last):

File "/home/jason_chou/.conda/envs/user/bin/metaseq-api-local", line 8, in <module>

sys.exit(cli_main())

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 380, in cli_main

distributed_utils.call_main(cfg, worker_main, namespace_args=args)

File "/home/jason_chou/metaseq/metaseq/distributed/utils.py", line 282, in call_main

return main(cfg, **kwargs)

File "/home/jason_chou/metaseq/metaseq/cli/interactive_hosted.py", line 186, in worker_main

models = generator.load_model() # noqa: F841

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 147, in load_model

models, _model_args, _task = _load_checkpoint()

File "/home/jason_chou/metaseq/metaseq/hub_utils.py", line 132, in _load_checkpoint

return checkpoint_utils.load_model_ensemble_and_task(

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 464, in load_model_ensemble_and_task

state = load_checkpoint_to_cpu(filename, arg_overrides)

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 397, in load_checkpoint_to_cpu

paths_to_load = get_paths_to_load(local_path, suffix="shard")

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 335, in get_paths_to_load

if not _is_checkpoint_sharded(checkpoint_files):

File "/home/jason_chou/metaseq/metaseq/checkpoint_utils.py", line 321, in _is_checkpoint_sharded

raise FileNotFoundError(

FileNotFoundError: We weren't able to find any checkpoints corresponding to the parameters you set. This could mean you have a typo, or it could mean you have a mismatch in distributed training parameters, especially --fsdp or--model-parallel. If you are working on a new script, it may also mean you failed to fsdp_wrap or you have an unnecessary fsdp_wrap.

Sounds like 'distributed_init_method': None is the issue: It should fall back to something instead of nothing...?

@EIFY One last thing, could you try with 517d7addd514546371de1c5ebe6a0af4ce2fe120 ? I think this should work.

And if it works, I think I might know what's going wrong...

@punitkoura 517d7ad indeed works 🎉:

$ git checkout remotes/origin/punitkoura/debug-407

M metaseq/service/constants.py

Previous HEAD position was 8500e88 Add logging

HEAD is now at 517d7ad Add localhost

$

$ metaseq-api-local

cfg.distributed_training = {'_name': None, 'distributed_world_size': 8, 'distributed_rank': 0, 'distributed_backend': 'nccl', 'distributed_init_method': 'tcp://localhost:13000', 'distributed_port': 13000, 'device_id': 0, 'distributed_no_spawn': False, 'ddp_backend': 'fully_sharded', 'bucket_cap_mb': 25, 'fix_batches_to_gpus': False, 'find_unused_parameters': False, 'fast_stat_sync': False, 'broadcast_buffers': False, 'zero_sharding': 'none', 'fp16': False, 'memory_efficient_fp16': False, 'bf16': False, 'no_reshard_after_forward': False, 'fp32_reduce_scatter': False, 'cpu_offload': False, 'use_sharded_state': True, 'gradient_predivide_factor': None, 'distributed_num_procs': 8}

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 0

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 1

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 6

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 5

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 3

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 2

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 4

2022-10-27 05:06:21 | INFO | metaseq.distributed.utils | initialized host i-050656823f6e88c4b as rank 7

In distributed utils - cfg.common.model_parallel_size = 8

> initializing tensor model parallel with size 8

> initializing pipeline model parallel with size 1

> initializing model parallel cuda seeds on global rank 0, model parallel rank 0, and data parallel rank 0 with model parallel seed: 2719 and data parallel seed: 1

2022-10-27 05:06:25 | INFO | metaseq.hub_utils | loading model(s) from /home/jason_chou/redspot_home/66b/reshard.pt

In load_model_ensemble_and_task filenames = ['/home/jason_chou/redspot_home/66b/reshard.pt'] arg_overrides = {} suffix = -model_part-0

Inside load_checkpoint_to_cpu path = /home/jason_chou/redspot_home/66b/reshard-model_part-0.pt arg_overrides = {}

Inside get_paths_to_load local_path = /home/jason_chou/redspot_home/66b/reshard-model_part-0.pt suffix = shard checkpoint_files = ['/home/jason_chou/redspot_home/66b/reshard-model_part-0.pt']

2022-10-27 05:10:48 | INFO | metaseq.checkpoint_utils | Done reading from disk

2022-10-27 05:10:52 | INFO | metaseq.checkpoint_utils | Done loading state dict

2022-10-27 05:10:52 | INFO | metaseq.cli.interactive | loaded model 0

2022-10-27 05:10:55 | INFO | metaseq.cli.interactive | Worker engaged! 172.21.41.241:6010

* Serving Flask app 'metaseq.cli.interactive_hosted' (lazy loading)

* Environment: production

WARNING: This is a development server. Do not use it in a production deployment.

Use a production WSGI server instead.

* Debug mode: off

2022-10-27 05:10:55 | INFO | werkzeug | WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:6010

* Running on http://172.21.41.241:6010

2022-10-27 05:10:55 | INFO | werkzeug | Press CTRL+C to quit

2022-10-27 05:11:16 | INFO | metaseq.hub_utils | Preparing generator with settings {'_name': None, 'beam': 1, 'nbest': 1, 'max_len_a': 0, 'max_len_b': 70, 'min_len': 42, 'sampling': True, 'sampling_topp': 0.9, 'temperature': 1.0, 'no_seed_provided': False, 'buffer_size': 4194304, 'input': '-'}

2022-10-27 05:11:16 | INFO | metaseq.hub_utils | Executing generation on input tensor size torch.Size([1, 38])

2022-10-27 05:11:18 | INFO | metaseq.hub_utils | Total time: 1.235 seconds; generation time: 1.228

2022-10-27 05:11:18 | INFO | werkzeug | 127.0.0.1 - - [27/Oct/2022 05:11:18] "POST /completions HTTP/1.1" 200 -

I have checked the generated tokens and they look reasonable.

So, the problem is that we are providing the

"--distributed-port 13000",

flag in constants.py

Because of which the code branches into slurm.

If we remove that param, we would go into this code https://github.com/facebookresearch/metaseq/blob/main/metaseq/distributed/utils.py#L112 which does set the init-method appropriately (same as what we did here).

This is where the branching happens https://github.com/facebookresearch/metaseq/blob/main/metaseq/distributed/utils.py#L42

Could you try this once? Remove the hardcoded "tcp://localhost:13000" and just remove "--distributed-port 13000" from constants.py