habitat-lab

[WIP] Port Spot/Stretch with control interface, training script, etc.

Motivation and Context

Port the Spot robot into Habitat-Sim for future research. Two features are implemented: (1) collision detection with optional sliding (using either contact_test() or the existing NavMesh), and (2) a camera sensor. See https://github.com/facebookresearch/habitat-sim/pull/1563. Thanks to Alex and Zach for working on this together!

How Has This Been Tested

We ran two tests. The first, in test_robot_wrapper.py, checks Spot's basic functions such as opening and closing the gripper. The second uses interactive_play.py: the user passes the play_spot.yaml config file to the script and moves Spot with the keyboard. Please see the video below.

Types of changes

- New feature (non-breaking change which adds functionality)

Checklist

- [x] My code follows the code style of this project.

- [ ] My change requires a change to the documentation.

- [ ] I have updated the documentation accordingly.

- [x] I have read the CONTRIBUTING document.

- [x] I have completed my CLA (see CONTRIBUTING)

- [x] I have added tests to cover my changes.

- [x] All new and existing tests passed.

The videos

Can we see the video :-) ?

Very rough now, needs more work :)

https://drive.google.com/file/d/1Gzdq28UlFzI4n9dwdMfXE2kyXDPdcQ1q/view?usp=sharing

[Beta version] Used contact_test() together with sliding to avoid penetration.

https://user-images.githubusercontent.com/55121504/192892121-ccccc171-46b6-44d6-b17c-e7ab205274de.mp4

TODO: (1) find a better way to detect collisions; (2) smooth out the movement.

Very cool!

NavMesh: https://drive.google.com/file/d/1vsINqBDgGX17UAgqiQeg8TwDN9EaRu-f/view?usp=sharing

Contact test with sliding (smoother than the previous version): https://drive.google.com/file/d/16dYM3PE2m3mDSdaH3VJdGpH7lWqi_q-9/view?usp=sharing
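For readers unfamiliar with "sliding": the idea is that when a step would push the robot into an obstacle, the component of the motion along the surface normal is removed and the robot moves along the surface instead. Below is a minimal, generic sketch of that projection, assuming the contact surface normal is already available from whatever contact query is used (this sketch does not call habitat-sim, and it is not necessarily how this PR implements sliding). The function name `slide` is hypothetical.

```python
def slide(displacement, contact_normal):
    """Remove the part of `displacement` that points into an obstacle.

    displacement: desired (x, y, z) motion of the robot base for one step.
    contact_normal: unit (x, y, z) normal of the contacted surface, assumed
        to point away from the obstacle, toward the robot.
    """
    dot = sum(d * n for d, n in zip(displacement, contact_normal))
    if dot >= 0.0:
        # Moving away from (or parallel to) the surface: keep the full motion.
        return tuple(displacement)
    # Moving into the surface: subtract the normal component so the robot
    # slides along the surface instead of penetrating it.
    return tuple(d - dot * n for d, n in zip(displacement, contact_normal))


# A wall on the +x side (normal points back toward the robot, along -x).
print(slide((1.0, 0.0, 0.5), (-1.0, 0.0, 0.0)))  # (0.0, 0.0, 0.5)
```

The blocked x component is dropped while the z component along the wall is kept, which is what makes the motion look like sliding rather than stopping dead.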

[Profiling] Average time per call of the following functions (measured while running interactive_play.py):

- step_filter(): 1.932358741760254e-05 sec
- contact_test(): 1.7936015129089357e-04 sec

step_filter() is about 9.28 times faster than contact_test(), but with step_filter() the arm can penetrate obstacles, because the arm is not modeled properly in the NavMesh.
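A minimal sketch of how such per-call averages can be measured, plus the arithmetic behind the 9.28x figure using the averages reported above. The helper `average_call_time` and its `n_calls` parameter are hypothetical; in practice one would pass a zero-argument lambda wrapping the actual step_filter() or contact_test() call.

```python
import time


def average_call_time(fn, n_calls=1000):
    """Return the mean wall-clock seconds per call of fn() over n_calls."""
    start = time.perf_counter()
    for _ in range(n_calls):
        fn()
    return (time.perf_counter() - start) / n_calls


# Averages reported in the profiling comment (seconds per call).
STEP_FILTER_S = 1.932358741760254e-05
CONTACT_TEST_S = 1.7936015129089357e-04

speedup = CONTACT_TEST_S / STEP_FILTER_S
print(f"step_filter() is {speedup:.2f}x faster than contact_test()")
# step_filter() is 9.28x faster than contact_test()
```

time.perf_counter() is used because it is a monotonic, high-resolution clock suited to timing short calls; averaging over many calls smooths out per-call noise.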

Cool to see that DDPPO training is getting in. Can we see a reward/success vs steps curve?

Hi Dhruv, thanks for asking! Here are the learning curves of Spot picking up objects on the rearrange_easy dataset:

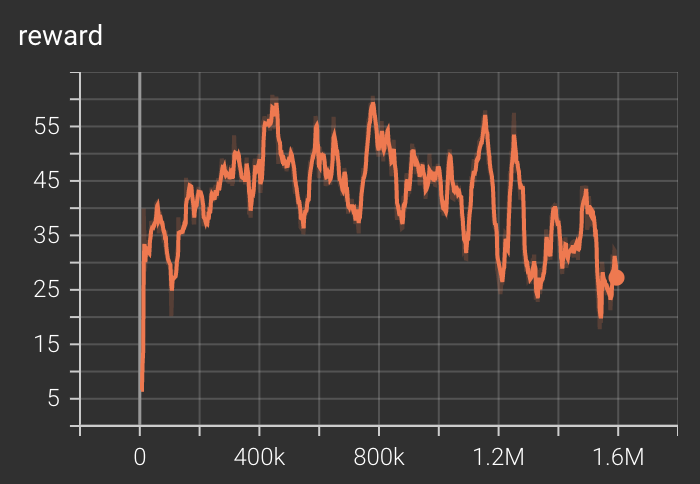

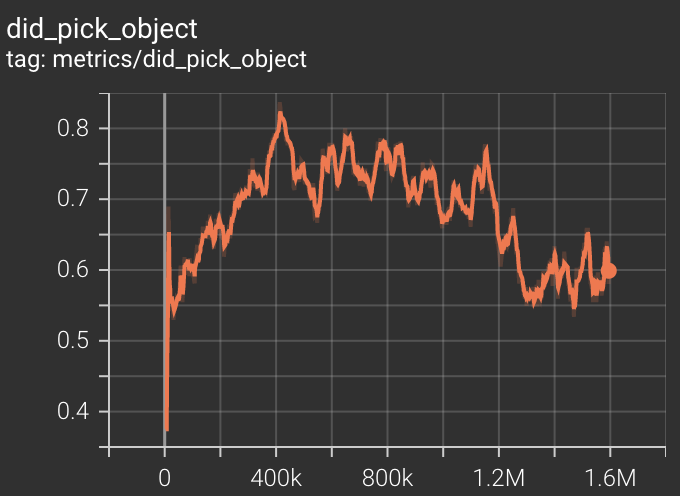

Reward vs Steps

Did_pick_object_rate vs Steps

I have not tuned the hyperparameters thoroughly yet. Here is the video: https://drive.google.com/file/d/1On7ayo9XPmO7687MQmZTt2niJvJwOaT0/view?usp=sharing

If the reward function is not tuned well, Spot hacks the reward: https://drive.google.com/file/d/18DTGnj8P-uFE8HYd4m0wu8emZ9h1u5XN/view?usp=sharing

Stretch results will come later.

TODO

- more complex dataset

- hyperparameters tuning

- Stretch results for the same task

Thank you!

Interesting. Agreed that the untuned-reward policy indeed looks pretty bad. If you haven't already, consider chatting with @naokiyokoyama about his "gaze" policy.

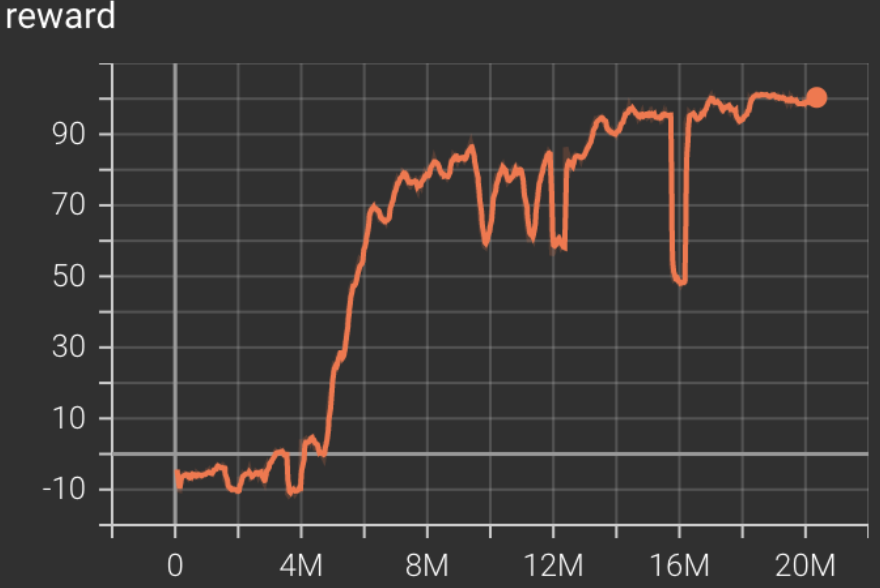

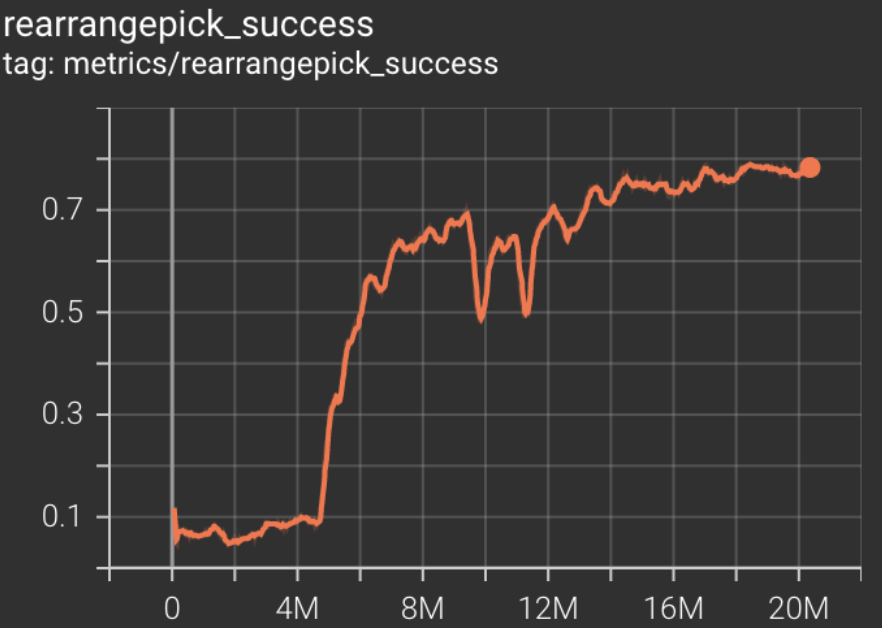

After training Spot extensively in the pick task using the “full” all_receptacles_10k_1k dataset, we see the training reward of 100.4 in the default rearrangement pick reward function. The training rearrangepick_success rate is 0.78. On the evaluation dataset, Spot has the reward of 103.14 and rearrangepick_success rate of 0.84. This shows the generalization of the agent. Here are the learning curves during training:

The runs continue, and I am optimistic about their performance. Here are some videos of Spot on the evaluation dataset:

https://user-images.githubusercontent.com/55121504/198380662-17ff109f-0c52-46de-bb42-bfa931456b1b.mp4

https://user-images.githubusercontent.com/55121504/198380686-d3fa2be5-408b-4bc1-8401-6ae20a8dfaa6.mp4

For Stretch, the result will come later.

Some TODO items

- Spot for nav, place, and other tasks

- Document the training and robot-related parameters

- Prepare checkpoints

Great! Glad to see this working. Agreed with the todos and look forward to them.

Question -- what is the sensor suite for the spot-pick experiments? Just proprioception or proprioception+arm depth?

Arm depth plus proprioception, and a few more. I followed the YAML file in the repo, which uses:

- robot_arm_depth
- the arm joint positions
- is_holding: whether the robot is holding the object
- robot_arm_semantic: this follows Naoki's design
- relative_resting_position
- obj_start_sensor: this tells the robot where to find the object and was already used in the repo. I am not sure how to obtain this information in the real world; perhaps with motion capture (mocap)?
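To make the split between image sensors and low-dimensional state sensors concrete, here is a hypothetical sketch of grouping such an observation dict before feeding a policy. The sensor keys mirror the names listed above (the key `joint` for arm joint positions is an assumption), and all values and dimensions are made up for illustration; this is not habitat-lab's actual observation pipeline.

```python
def split_observations(obs, visual_keys=("robot_arm_depth", "robot_arm_semantic")):
    """Separate image-like sensors from low-dimensional state sensors.

    obs: dict mapping sensor name -> value. Images are nested lists (H x W);
        state sensors are flat lists of numbers.
    Returns (images, state), where state concatenates the non-visual sensors
    in sorted-key order so the vector layout is deterministic.
    """
    images = {k: v for k, v in obs.items() if k in visual_keys}
    state = []
    for key in sorted(k for k in obs if k not in visual_keys):
        state.extend(float(x) for x in obs[key])
    return images, state


# Hypothetical observation with tiny, made-up dimensions.
obs = {
    "robot_arm_depth": [[0.5, 0.6], [0.7, 0.8]],
    "robot_arm_semantic": [[0, 1], [1, 0]],
    "joint": [0.1, 0.2],
    "is_holding": [0.0],
    "relative_resting_position": [0.0, 0.1, 0.2],
    "obj_start_sensor": [1.0, 0.0, 0.5],
}
images, state = split_observations(obs)
print(sorted(images))  # ['robot_arm_depth', 'robot_arm_semantic']
print(state)  # [0.0, 0.1, 0.2, 1.0, 0.0, 0.5, 0.0, 0.1, 0.2]
```

Typically the images would go through a CNN encoder and the state vector through an MLP before the two are combined.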

Thank you!

Hi @jimmytyyang: this is interesting work. Are the Spot arm-only URDF and the pick/place/open/close skill checkpoints publicly available somewhere? I tried to separate the Spot arm URDF from the hab_spot_arm URDF folder, but it appears PyBullet cannot load a URDF that references .glb meshes. Any pointers would be helpful.
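One possible workaround, sketched under assumptions: convert the .glb meshes to a format PyBullet commonly loads (such as .obj) with a separate tool, then rewrite the `<mesh filename="...">` entries in the URDF to point at the converted files. The sketch below only does the URDF rewrite with the standard library; the function name and the example link and mesh names are hypothetical, and the mesh conversion itself is not shown.

```python
import xml.etree.ElementTree as ET


def rewrite_mesh_extensions(urdf_text, old_ext=".glb", new_ext=".obj"):
    """Return URDF text with every <mesh filename="...old_ext"> renamed to new_ext.

    Only the filename attribute changes; the mesh files themselves must be
    converted separately. old_ext is matched case-insensitively.
    """
    root = ET.fromstring(urdf_text)
    for mesh in root.iter("mesh"):
        filename = mesh.get("filename", "")
        if filename.lower().endswith(old_ext):
            mesh.set("filename", filename[: -len(old_ext)] + new_ext)
    return ET.tostring(root, encoding="unicode")


# Hypothetical one-link URDF referencing a .glb mesh.
urdf = (
    '<robot name="spot_arm"><link name="arm_link_sh0"><visual><geometry>'
    '<mesh filename="meshes/arm0.link_sh0.glb"/>'
    "</geometry></visual></link></robot>"
)
print(rewrite_mesh_extensions(urdf))
```

Note that ElementTree re-serializes the document, so comments and original formatting may not be preserved; for a large URDF it is worth diffing the output before loading it.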