fairseq

Config for Wav2Vec2 training from scratch

Hi,

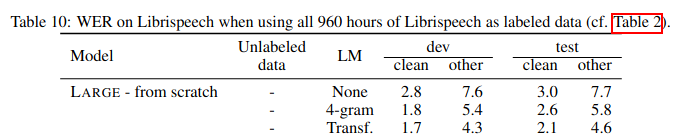

I am trying to train a Wav2Vec2-Large model on LibriSpeech without using the pretrained weights. Using just the no_pretrained_weight=False and freeze_finetune_updates=0 options, I can train a model, but I am unable to match the performance reported in the Wav2Vec2 paper.

Despite tuning the learning rate, warmup steps, and other hyperparameters, my dev-clean WER converges to 9 at best, instead of 2.8.
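To be explicit about the metric, here is a minimal sketch of how I understand WER, assuming the standard definition (word-level edit distance divided by the number of reference words, as a percentage). The function name `wer` is my own illustration and not fairseq's scorer, which may normalize text differently.

```python
def wer(reference: str, hypothesis: str) -> float:
    """Word error rate in percent, via word-level Levenshtein distance.

    Illustrative helper only (hypothetical name, not part of fairseq).
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    return 100.0 * prev[-1] / len(ref)
```

With this definition, a WER of 9 means roughly 9 word errors per 100 reference words, against 2.8 per 100 for the target.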

Would it be possible to access the configuration used to achieve the reported results?

Thank you.