fairseq

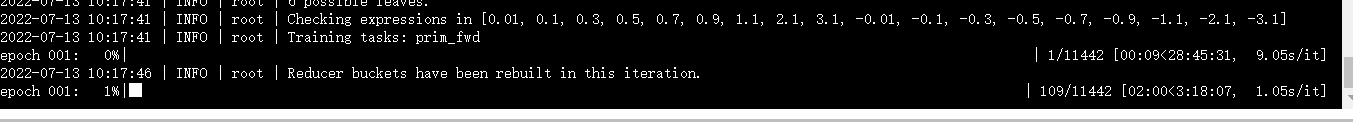

When I train with the transformer_align model, an error occurs on one of my training sets; the other datasets train fine.

❓ When I train with the transformer_align model, no errors are reported; the program keeps running but is stuck.

Before asking:

- search the issues.
- search the docs.

What is your question?

Code

What have you tried?

I have tried changing the learning rate and rerunning, but the problem persists: the program keeps running but is stuck.
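Since the process hangs without printing an error, one way to see where it is stuck might be Python's standard `faulthandler` module, which can periodically dump the stack of every thread. The following is only a minimal sketch: the helper name `enable_hang_diagnostics` and the timeout value are hypothetical and not part of fairseq.

```python
import faulthandler
import signal
import sys


def enable_hang_diagnostics(timeout_s: float = 600.0, file=None) -> None:
    """Periodically dump the stacks of all threads so a silent hang becomes visible.

    `timeout_s` and this helper itself are illustrative assumptions, not fairseq API.
    `file` must be a real file object with a file descriptor (defaults to stderr).
    """
    if file is None:
        file = sys.stderr
    # Write a traceback of every thread after timeout_s seconds, and keep
    # repeating at that interval until cancelled.
    faulthandler.dump_traceback_later(timeout_s, repeat=True, file=file)
    # On POSIX systems, also allow an on-demand dump with `kill -USR1 <pid>`.
    if hasattr(signal, "SIGUSR1"):
        faulthandler.register(signal.SIGUSR1, file=file, all_threads=True)
```

The helper would have to be called early in the process that is being debugged, for example from a small wrapper script around the training entry point. With multi-GPU training each worker is a separate process, so a dump only covers the process in which the helper was called.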

What's your environment?

- fairseq Version (e.g., 1.0 or main): fairseq 1.0.0a0+b554f5e

- PyTorch Version (e.g., 1.0): 1.8.1+cu111

- OS (e.g., Linux): Ubuntu

- How you installed fairseq (pip, source): pip

- Build command you used (if compiling from source):

fairseq-train \
autodl-tmp/autodl-tmp/align_databin/ode2_new \
--arch transformer_align \
--dropout 0.3 --attention-dropout 0.1 --weight-decay 0.0001 \
--load-alignments --criterion label_smoothed_cross_entropy_with_alignment --label-smoothing 0.1 \
--optimizer adam --adam-betas '(0.9, 0.98)' --clip-norm 0.1 --activation-fn relu \
--lr 5e-4 --lr-scheduler inverse_sqrt --warmup-updates 4000 --warmup-init-lr 1e-07 --fp16 \
--eval-bleu \
--eval-bleu-print-samples \
--max-tokens 512 --batch-size 1024 --tensorboard-logdir autodl-tmp/autodl-tmp/dumped2/ode2_new_align/实验2/tensorboard \
--best-checkpoint-metric acc --maximize-best-checkpoint-metric \
--save-dir autodl-tmp/autodl-tmp/dumped2/ode2_new_align/实验2/checkpoint \
--patience 5 --max-epoch 200 --update-freq 8 \
--keep-last-epochs 5 --min-loss-scale 1e-06 \
--log-interval 1000 --fp16-scale-tolerance=0.25 --fp16-init-scale 32 --threshold-loss-scale 2

- Python version: Python 3.8.10

- CUDA/cuDNN version: CUDA 11.1 (torch1.8.1+cu111)

- GPU models and configuration: 6 A4000

- Any other relevant information:
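Because only one dataset triggers the problem and training uses `--load-alignments`, a data-level check may be worth running on that dataset. The sketch below assumes the alignments are in Pharaoh `i-j` format (one line per sentence pair) and that the source and target text are the whitespace-tokenized lines the alignments were computed on; the function names are hypothetical and not part of fairseq.

```python
from typing import Iterable, Iterator, List, Tuple


def parse_alignment(line: str) -> List[Tuple[int, int]]:
    """Parse one Pharaoh-style line such as '0-0 1-2' into (src, tgt) index pairs."""
    pairs = []
    for item in line.split():
        src, tgt = item.split("-")  # raises ValueError if the entry is not 'i-j'
        pairs.append((int(src), int(tgt)))
    return pairs


def find_bad_alignments(
    src_lines: Iterable[str],
    tgt_lines: Iterable[str],
    align_lines: Iterable[str],
) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, reason) for sentence pairs whose alignment looks suspicious.

    Line numbers are 1-based. The three inputs are read in parallel; zip() stops
    at the shortest one, so differing line counts should be checked separately.
    """
    for n, (src, tgt, align) in enumerate(zip(src_lines, tgt_lines, align_lines), start=1):
        src_len, tgt_len = len(src.split()), len(tgt.split())
        try:
            pairs = parse_alignment(align)
        except ValueError:
            yield n, "malformed alignment entry"
            continue
        if not pairs:
            yield n, "empty alignment"
        elif any(s >= src_len or t >= tgt_len for s, t in pairs):
            yield n, "index out of range"
```

A usage example would be to open the three text files of the failing dataset, pass the file objects to `find_bad_alignments`, and print whatever it yields. An empty result does not rule out a data problem; it only excludes these particular cases.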