WER on base_noise_pt_noise_ft_30h.pt

I'm trying to reproduce the decoding result of your fine-tuned AVSR model (avhubert_pretrained/model/lrs3_vox/avsr/base_noise_pt_noise_ft_30h.pt).

I believe my configuration is correct, but I couldn't get the same result on my system.

The c-wer of the downloaded fine-tuned AVSR model (PT type=Noisy, FT type=Noisy) is 4.29% on my system.

Did I miss something?

Inference command:

python -B infer_s2s.py --config-dir conf --config-name s2s_decode.yaml dataset.gen_subset=test common_eval.path=./multimodal/avhubert_pretrained/model/lrs3_vox/avsr/base_noise_pt_noise_ft_30h.pt common_eval.results_path=fb_base_noise_pt_noise_ft_30h override.modalities=['video','audio'] common.user_dir=`pwd` override.data=./multimodal/lrs3/30h_data/ override.label_dir=./multimodal/lrs3/30h_data

s2s_decode.yaml: same as on GitHub

Thank you for your consideration. ;)

Hi,

You need to tune the decoding hyperparameters a bit (mostly generation.beam and generation.lenpen). For that particular number, setting generation.beam=20 generation.lenpen=1 gives a WER of 4.1%.
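
To see why generation.lenpen can change the decoded output, here is a minimal sketch of length-normalized hypothesis scoring. It assumes the usual fairseq-style rule, where a finished hypothesis is scored as its summed token log-probability divided by length ** lenpen; the function names and the toy hypotheses are illustrative only and are not taken from infer_s2s.py.

```python
def normalized_score(token_logprobs, lenpen):
    """Summed log-probability divided by length ** lenpen (assumed scoring rule)."""
    return sum(token_logprobs) / (len(token_logprobs) ** lenpen)


def rank(hypotheses, lenpen):
    """Sort (text, token_logprobs) pairs from best to worst normalized score."""
    return sorted(
        hypotheses,
        key=lambda hyp: normalized_score(hyp[1], lenpen),
        reverse=True,
    )


# Toy hypotheses (made-up numbers): a short one with a higher total
# log-probability and a longer one with a higher per-token log-probability.
hyps = [
    ("short", [-0.5, -0.5]),
    ("long", [-0.3, -0.3, -0.3, -0.3]),
]

for lenpen in (0.0, 1.0):
    best_text, best_logprobs = rank(hyps, lenpen)[0]
    print(f"lenpen={lenpen}: best={best_text}")
```

With lenpen=0 the raw sum decides and the shorter hypothesis wins; with lenpen=1 the per-token average decides and the longer one wins. A larger beam only widens the set of candidates that this ranking is applied to, so both settings can shift the WER slightly.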

Hi, may I ask how you load an officially provided fine-tuned model? I'm also trying to load a fine-tuned lipreading model. Here are my steps:

- load the pretrained model, replace cfg.model.w2v_args.task.data, cfg.model.w2v_args.task.label_dir, cfg.task.data, cfg.task.label_dir, cfg.tokenizer_bpe_model with my own paths, and save the fixed model

- load the fixed model and run inference.

However, the resulting WER is very high. I guess my "dict.wrd.txt" is inconsistent with the one used by the officially provided model?

There is no need to modify the checkpoint to load a fine-tuned model. Running python infer.py --args... override.data=/path/to/test-data/ override.label_dir=/path/to/test-label/ for inference should work.

Thanks for your reply!

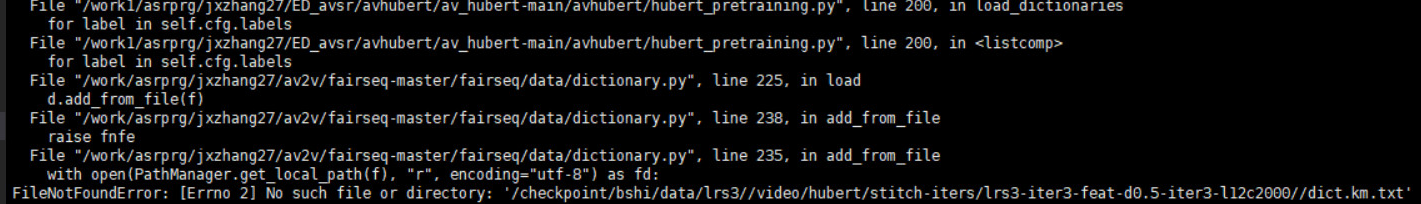

If I don't do that, it will encounter an error:

I suppose it's your dict path ? So I have to replace them to make the code work.

A saved model should be related to a proper dictionary file. The error above is dictionary used for pretrained, which is not important and I can cheat the code to work. The real important thing is when decoding your pretrained model outputs ID is not corresponding exactly with my dictionary when decoding.

Which checkpoint were you using? The dictionary is saved in the checkpoint and will be loaded automatically at test time. You can refer to a decoding example in the demo.

I found the reason. I was using a different version of fairseq, which caused the task to lose the saved target dictionary state. So I commented out the line task = tasks.setup_task(saved_cfg.task) in infer_s2s.py, and the code works now.
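
Since the cause here was a fairseq version mismatch, it can help to check which version is installed before debugging the checkpoint itself. The following is a minimal sketch using only the Python standard library; the helper names are hypothetical, and the version the repository expects is not stated in this thread, so it has to be compared manually.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def report(package="fairseq"):
    """Build a one-line summary of the package's installation status."""
    found = installed_version(package)
    if found is None:
        return f"{package} is not installed in this environment"
    return f"{package} {found}"


print(report())
```

If the reported version differs from the fairseq copy that the repository was set up with, installing that copy is likely a cleaner fix than editing infer_s2s.py.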

Hi @minkyu119! I see that you're using the AVSR model. Have you tried running it in Colab? I'm trying to modify the provided Colab notebook, but it crashes when I try it with base_noise_pt_noise_ft_30h.pt.