TensorComprehensions


Autotune does not work for CUDA tensors that are not on device 0

Tensor Comprehensions Github Issues Guidelines

If you have a feature request or a bug report (build issue), please open an issue on Github and fill the template below so we can help you better and faster. If you have some general questions about Tensor Comprehensions, please visit our slack channel or email us at [email protected]

For build issues, please add [Build] at the beginning of issue title.

When submitting a bug report, please include the following information (where relevant):

- OS: Ubuntu 16.04 LTS

- How you installed TC (docker, conda, source): conda

- Python version: 3.6

- CUDA/cuDNN version: 8/6.0

- Conda version (if using conda): 3

- Docker image (if using docker):

- GCC/GXX version (if compiling from source):

- LLVM/Tapir git hash used (if compiling from source):

  To get the hash, run: `$HOME/clang+llvm-tapir5.0/bin/clang --version`

- Commit hash of our repo and submodules (if compiling from source):

In addition, including the following information will also be very helpful for us to diagnose the problem:

- A script to reproduce the issue (highly recommended if it's a build issue)

- Error messages and/or stack traces of the issue (create a gist)

- Context around what you are trying to do

```python
mat1 = torch.ones(batch, total_length, width, height).cuda(device=1)
mat2 = torch.ones(total_length).cuda(device=1)

mat3 = torch.IntTensor(temp_list)
mat3.t_()
mat3 = mat3.cuda(device=1)

function.autotune(mat1, mat2, mat3, options=tc.Options("conv"), cache="function.tc",
                  pop_size=100, generations=25, gpus="0,1,2,3", threads=15)
```

This prints the following error:

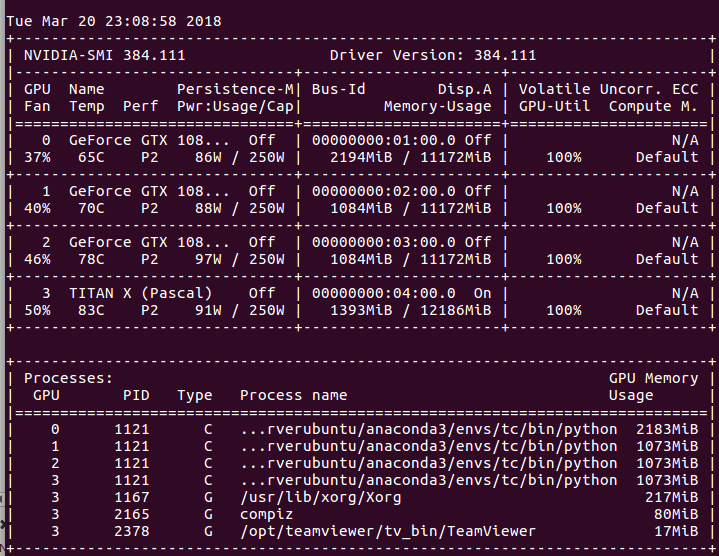

THCudaCheck FAIL file=/opt/conda/conda-bld/pytorch_1518243271935/work/torch/lib/THC/THCTensorCopy.cu line=85 error=77 : an illegal memory access was encountered

WARNING: Logging before InitGoogleLogging() is written to STDERR

W0320 22:49:40.423420 30986 rtc.cc:44] Error at: /opt/conda/conda-bld/tensor_comprehensions_1520457708651/work/src/core/rtc.cc:44: CUDA_ERROR_ILLEGAL_ADDRESS

terminate called after throwing an instance of 'std::runtime_error'

what(): Error at: /opt/conda/conda-bld/tensor_comprehensions_1520457708651/work/src/core/rtc.cc:44: CUDA_ERROR_ILLEGAL_ADDRESS

However, the same code with every tensor placed on device 0 works well:

```python
mat1 = torch.ones(batch, total_length, width, height).cuda(device=0)
mat2 = torch.ones(total_length).cuda(device=0)

mat3 = torch.IntTensor(temp_list)
mat3.t_()
mat3 = mat3.cuda(device=0)

function.autotune(mat1, mat2, mat3, options=tc.Options("conv"), cache="function.tc",
                  pop_size=100, generations=25, gpus="0,1,2,3", threads=15)
```

However, when I want to autotune a function with very large inputs, the inputs have to be on device 0. This causes imbalanced GPU memory usage, which is not helpful (an example is shown below).

It would be better if we could pass non-CUDA tensors to the autotuner and have it automatically convert them to CUDA tensors and copy them to each device.
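Until something like that is supported, one possible workaround is to remap the GPUs with the standard CUDA environment variable `CUDA_VISIBLE_DEVICES`, so that the physical GPU I want to hold the inputs becomes logical device 0. The sketch below is only an idea and I have not verified it with the TC autotuner. The helper name `visible_devices_with_first` is my own (hypothetical, not part of TC or PyTorch), and the variable has to be set before CUDA is initialized in the process.

```python
import os


def visible_devices_with_first(preferred, all_devices):
    """Build a CUDA_VISIBLE_DEVICES value that lists `preferred` first.

    Hypothetical helper for illustration; not part of TC or PyTorch.
    """
    if preferred not in all_devices:
        raise ValueError("preferred device %r is not in %r" % (preferred, all_devices))
    # Keep the remaining devices in their original order after the preferred one.
    ordered = [preferred] + [d for d in all_devices if d != preferred]
    return ",".join(str(d) for d in ordered)


# Set this before the first CUDA call (ideally before importing torch / TC).
# Physical GPU 1 then appears to the process as logical device 0.
os.environ["CUDA_VISIBLE_DEVICES"] = visible_devices_with_first(1, [0, 1, 2, 3])
```

With this in place, `.cuda(device=0)` and `gpus="0,1,2,3"` in the snippets above would refer to the remapped logical devices. This only changes which physical GPU plays the role of device 0; it does not remove the requirement that the inputs live on device 0.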