Question about the `MemoryDecision` task of BB3

The format of the MemoryDecision task seems to differ between training and interactive mode. On the Agent Information Page, the MemoryDecision task is described as below, and we observe the same format in interactive mode:

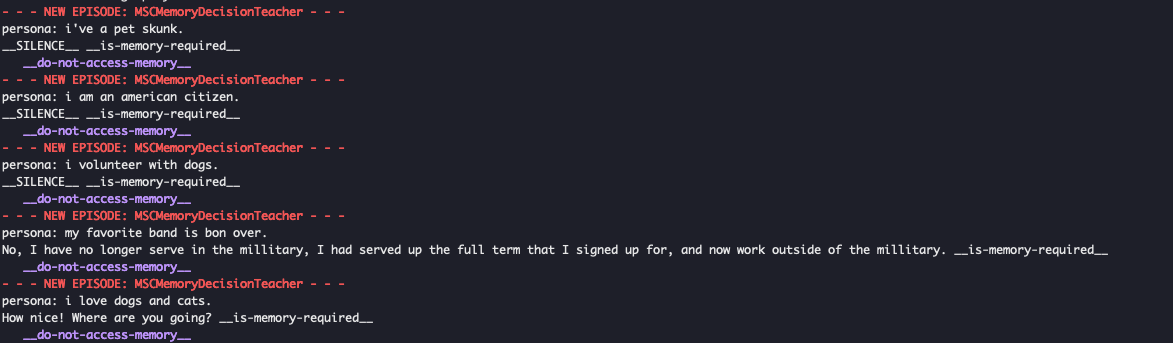

But displaying the task `MSCMemoryDecisionTeacher` shows a different format:

`parlai dd --task projects.bb3.tasks.opt_decision_tasks:MSCMemoryDecisionTeacher`

What am I missing?

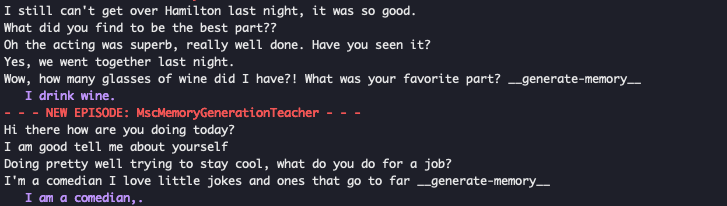

The same conflict exists for the memory generation task.

According to the Agent Information Page:

But when I run `parlai dd --task projects.bb3.tasks.r2c2_memory_generation_tasks:MscMemoryGenerationTeacher`, it generates the samples below. Apparently, the full dialogue history is used as the context.

I think you are confusing the dataset examples with the processing that happens inside the agent. The examples you show here come from the datasets that we used to train the modules in BB3. However, the context of each example goes through extra processing (in this case, choosing the last turn) before it is used to train the model. In fact, we often try different approaches for this extra processing, and the flags for controlling it might be available in the agent. The final released models are based on whichever approach performed best.

So what is the best way to print the training examples after all the extra processing? For example, for the task projects.bb3.tasks.r2c2_decision_tasks:MSCMemoryDecisionTeacher when training BB3.

Appreciate your comment here @klshuster.

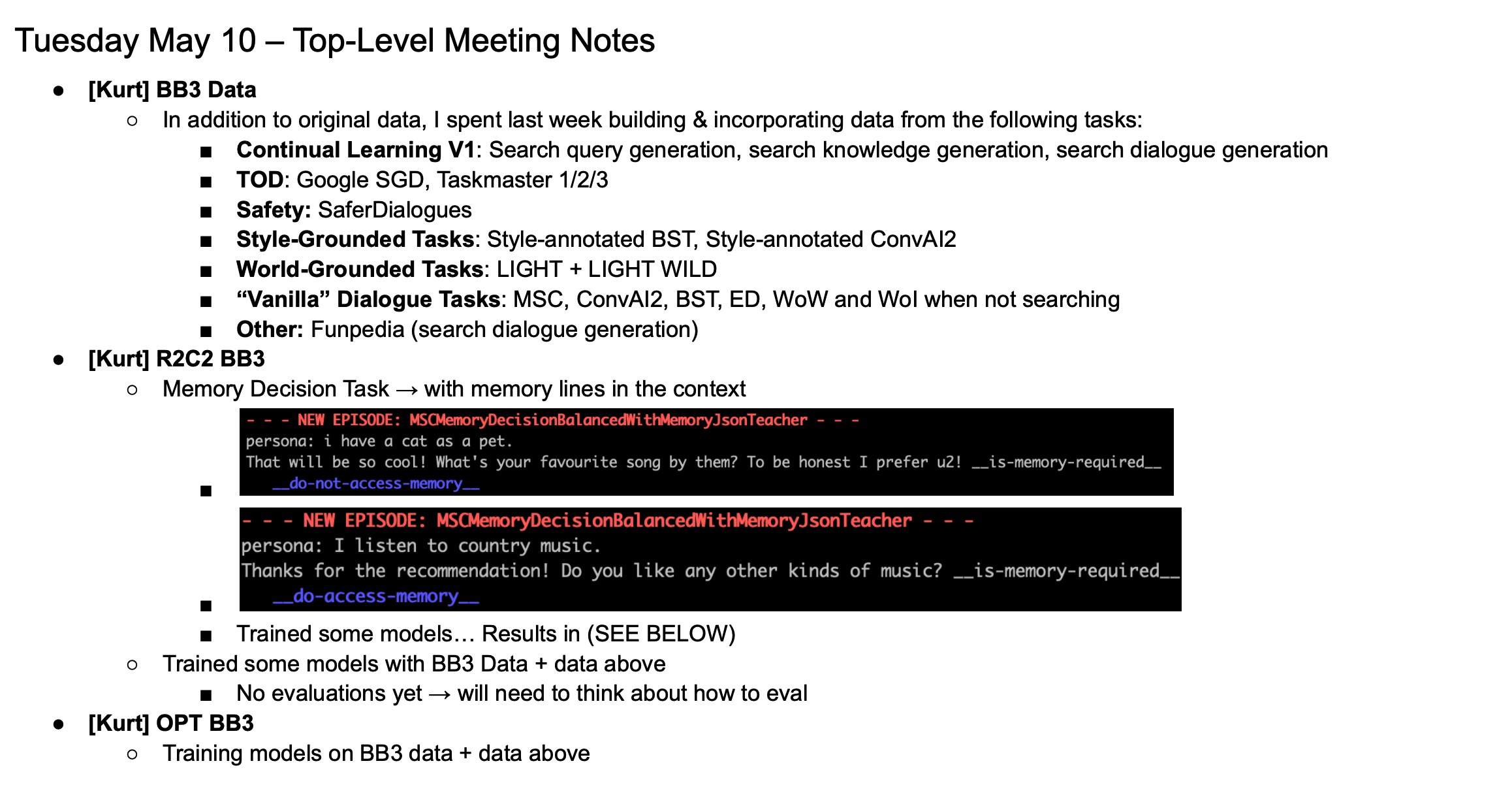

I have checked the BB3 logbook carefully, and I'm wondering whether the task projects.bb3.tasks.opt_decision_tasks:MSCMemoryDecisionTeacher is up to date. I found the description below in the logbook, which seems to be the initial trial of introducing personas into the context of the memory decision task. It also matches the current implementation of the task projects.bb3.tasks.opt_decision_tasks:MSCMemoryDecisionTeacher.

However, the final version described on the Agent Information Page, which uses the full set of personas as the context, does not seem to be available in the current task implementation for training. Correct me if I'm wrong, and thanks for your reply.

Hi there, yes you've identified a mismatch between training and test-time inference.

The memory decision task during training only shows a single persona; this is meant to teach the model whether using such a persona is helpful in responding to the one turn of context; we want to give maximum signal to the model during training. During inference, however, we have several memories on which to possibly condition, so we show them all at the same time. This is simply a design choice.
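As a rough illustration of the difference described above, the sketch below builds one training-style context per persona and a single inference-style context holding all memories. The function names and the `Persona:` line layout are hypothetical and chosen only for illustration; they are not BB3's actual prompt format or ParlAI API.

```python
from typing import List


def build_training_context(last_turn: str, persona: str) -> str:
    """Training style: one turn of context paired with a single persona.

    Hypothetical layout, not the actual BB3 prompt format.
    """
    return f"{last_turn}\nPersona: {persona}"


def build_inference_context(last_turn: str, memories: List[str]) -> str:
    """Inference style: one turn of context shown with all memories at once.

    Hypothetical layout, not the actual BB3 prompt format.
    """
    memory_block = "\n".join(f"Persona: {m}" for m in memories)
    return f"{last_turn}\n{memory_block}"


# Made-up data for illustration only.
memories = ["I have two dogs.", "I work as a nurse."]
last_turn = "Do you have any pets?"

# Training: each persona yields its own example.
training_examples = [build_training_context(last_turn, m) for m in memories]

# Inference: all memories are shown together in one context.
inference_example = build_inference_context(last_turn, memories)

for example in training_examples:
    print(example, end="\n---\n")
print(inference_example)
```

With two memories, this produces two separate training-style contexts but only one inference-style context, which is the mismatch discussed in this thread.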

The memory generation task is set up similarly. During training, we show the model several turns of context, but during inference (in our setup) we only show one turn of context.
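The same idea can be sketched for memory generation: the only difference between the two settings is how many dialogue turns are kept in the context. The helper `generation_context` and its `max_turns` parameter are hypothetical names used for illustration; they do not correspond to a specific ParlAI flag.

```python
from typing import List, Optional


def generation_context(turns: List[str], max_turns: Optional[int] = None) -> str:
    """Join the most recent dialogue turns into a single context string.

    max_turns=None keeps the full history (training style here);
    max_turns=1 keeps only the last turn (inference style here).
    Hypothetical helper, not part of ParlAI.
    """
    if max_turns is not None and max_turns < 1:
        raise ValueError("max_turns must be at least 1")
    kept = turns if max_turns is None else turns[-max_turns:]
    return "\n".join(kept)


# Made-up dialogue for illustration only.
dialogue = [
    "Hi, how was your weekend?",
    "Great, I took my dogs hiking.",
    "Nice! I spent mine studying for my nursing exam.",
]

training_style = generation_context(dialogue)                # several turns
inference_style = generation_context(dialogue, max_turns=1)  # last turn only

print(training_style)
print("---")
print(inference_style)
```

Setting `max_turns` to the same value in both settings would make inference match what is seen during training.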

For both of these cases, one could set up inference to be exactly as it is seen during training, but again, these are design decisions.

Thanks for your reply. It confused me for a long time.

It's impressive that the model could figure out the mismatch and give a reasonable response. Great work!
