AITemplate

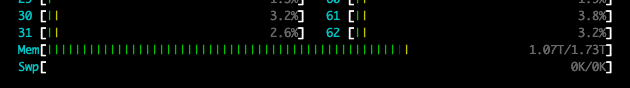

Memory usage increases when StableDiffusionAITPipeline is run repeatedly.

summary

After loading the model with from_pretrained, memory usage grows each time the pipeline is run. Each execution consumes an additional 50 MB to 100 MB of memory, and eventually the process runs out of memory and stops. Oddly, memory usage does not seem to increase significantly during the first few runs.

code

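import torch
from pipeline_stable_diffusion_ait import StableDiffusionAITPipeline

token = "huggingface_token"  # placeholder: your Hugging Face access token
prompt = "cat"

pipe = StableDiffusionAITPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4",
    revision="fp16",
    torch_dtype=torch.float16,
    use_auth_token=token,
).to("cuda")

# Run the pipeline indefinitely; memory usage grows with each iteration.
while True:
    with torch.autocast("cuda"):
        image = pipe(prompt).images[0]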

environment

$ nvcc --version
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2022 NVIDIA Corporation
Built on Tue_Mar__8_18:18:20_PST_2022
Cuda compilation tools, release 11.6, V11.6.124
Build cuda_11.6.r11.6/compiler.31057947_0

$ nvidia-smi

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 510.47.03 Driver Version: 510.47.03 CUDA Version: 11.6 |

|-------------------------------+----------------------+----------------------+

GPU:Nvidia-A100-40GB

$ sudo pip list

Package Version

------------------------ --------------------

aiohttp 3.8.3

aiosignal 1.2.0

aitemplate 0.1.dev0

async-timeout 4.0.2

attrs 19.3.0

Automat 0.8.0

blinker 1.4

CacheControl 0.12.11

cachetools 5.2.0

certifi 2019.11.28

chardet 3.0.4

charset-normalizer 2.1.1

Click 7.0

cloud-init 22.2

colorama 0.4.3

command-not-found 0.3

configobj 5.0.6

constantly 15.1.0

cryptography 2.8

cupshelpers 1.0

dbus-python 1.2.16

defer 1.0.6

diffusers 0.3.0

distro 1.4.0

distro-info 0.23ubuntu1

entrypoints 0.3

filelock 3.8.0

firebase-admin 5.4.0

frozenlist 1.3.1

ftfy 6.1.1

future 0.18.2

google-api-core 2.10.1

google-api-python-client 2.64.0

google-auth 2.12.0

google-auth-httplib2 0.1.0

google-cloud-core 2.3.2

google-cloud-firestore 2.7.1

google-cloud-storage 2.5.0

google-crc32c 1.5.0

google-resumable-media 2.4.0

googleapis-common-protos 1.56.4

grpcio 1.49.1

grpcio-status 1.49.1

httplib2 0.20.4

huggingface-hub 0.10.0

hyperlink 19.0.0

idna 2.8

importlib-metadata 1.5.0

incremental 16.10.1

install 1.3.5

Jinja2 3.1.2

jsonpatch 1.22

jsonpointer 2.0

jsonschema 3.2.0

keyring 18.0.1

language-selector 0.1

launchpadlib 1.10.13

lazr.restfulclient 0.14.2

lazr.uri 1.0.3

line-bot-sdk 2.3.0

macaroonbakery 1.3.1

MarkupSafe 2.1.1

more-itertools 4.2.0

msgpack 1.0.4

multidict 6.0.2

netifaces 0.10.4

numpy 1.23.3

oauthlib 3.1.0

packaging 21.3

pexpect 4.6.0

pika 1.3.0

Pillow 9.2.0

pip 22.2.2

proto-plus 1.22.1

protobuf 4.21.7

pyasn1 0.4.2

pyasn1-modules 0.2.1

pycairo 1.16.2

pycups 1.9.73

PyGObject 3.36.0

PyHamcrest 1.9.0

PyJWT 1.7.1

pymacaroons 0.13.0

PyNaCl 1.3.0

pyOpenSSL 19.0.0

pyparsing 3.0.9

pyRFC3339 1.1

pyrsistent 0.15.5

pyserial 3.4

python-apt 2.0.0+ubuntu0.20.4.8

python-debian 0.1.36ubuntu1

python-dotenv 0.21.0

pytz 2019.3

PyYAML 5.3.1

regex 2022.9.13

requests 2.22.0

requests-unixsocket 0.2.0

rsa 4.9

scipy 1.9.1

screen-resolution-extra 0.0.0

SecretStorage 2.3.1

service-identity 18.1.0

setuptools 45.2.0

simplejson 3.16.0

six 1.14.0

sos 4.3

ssh-import-id 5.10

systemd-python 234

tokenizers 0.12.1

torch 1.12.1+cu116

torchaudio 0.12.1+cu116

torchvision 0.13.1+cu116

tqdm 4.64.1

transformers 4.22.2

Twisted 18.9.0

typing_extensions 4.3.0

ubuntu-advantage-tools 27.10

ufw 0.36

unattended-upgrades 0.1

uritemplate 4.1.1

urllib3 1.25.8

wadllib 1.3.3

wcwidth 0.2.5

wheel 0.34.2

xkit 0.0.0

yarl 1.8.1

zipp 1.0.0

zope.interface 4.7.1

This sounds like it could be a torch caching allocator issue. We will investigate today. Thanks for letting us know.

We didn't notice any increase in memory usage when running inference multiple times. Can you please provide a script that reproduces it?

code

# test.py
import torch
from pipeline_stable_diffusion_ait import StableDiffusionAITPipeline

token = "huggingface_token"

pipe = StableDiffusionAITPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4",
    revision="fp16",
    torch_dtype=torch.float16,
    use_auth_token=token,
).to("cuda")

prompt = "cat"

for i in range(15):
    with torch.autocast("cuda"):
        image = pipe(prompt).images[0]

print("increase mem")

while True:
    with torch.autocast("cuda"):
        image = pipe(prompt).images[0]

environment

- machine:GCE a2-highgpu-1g

- OS:Ubuntu 20.04 LTS

- Cuda:11.6.2

#Cuda Install
wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin
sudo mv cuda-ubuntu2004.pin /etc/apt/preferences.d/cuda-repository-pin-600
wget https://developer.download.nvidia.com/compute/cuda/11.6.2/local_installers/cuda-repo-ubuntu2004-11-6-local_11.6.2-510.47.03-1_amd64.deb
sudo dpkg -i cuda-repo-ubuntu2004-11-6-local_11.6.2-510.47.03-1_amd64.deb
sudo apt-key add /var/cuda-repo-ubuntu2004-11-6-local/7fa2af80.pub
sudo apt-get update
sudo apt-get -y install cuda
echo "export PATH=$PATH:/usr/local/cuda/bin export LD_LIBRARY_PATH="/usr/local/cuda/lib64:$LD_LIBRARY_PATH"" | sudo tee -a /etc/profile

#nano(/etc/sudoers)
# comment out
#Defaults secure_path="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"
# add
Defaults env_keep += "PATH"

- python:3.8.10

- pip:

sudo apt install python3-pip

- modules:

sudo pip install diffusers transformers scipy ftfy
sudo pip install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu116
git clone --recursive https://github.com/facebookincubator/AITemplate
cd AITemplate/python
python3 setup.py bdist_wheel
sudo pip install dist/*.whl --force-reinstall
sudo reboot

test:

sudo python3 examples/05_stable_diffusion/compile.py --token ACCESS_TOKEN

sudo python3 examples/05_stable_diffusion/test.py

After "increase mem" is displayed, CPU memory usage increases.
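To put numbers on the growth described above, one option is to sample the process's resident set size (CPU memory) after every pipeline call. The following is only a minimal sketch, not part of the original report: the helper names `rss_mib`, `sample_rss`, and `growth_per_iteration` are hypothetical, and the measurement assumes a Linux host (it reads `/proc/self/statm`, falling back to peak RSS from the standard `resource` module elsewhere).

```python
import os
import resource
import sys


def rss_mib() -> float:
    """Return this process's resident set size in MiB.

    On Linux this is the current RSS from /proc/self/statm. Elsewhere it
    falls back to the peak RSS reported by getrusage, which can only grow.
    """
    try:
        with open("/proc/self/statm") as f:
            resident_pages = int(f.read().split()[1])
        return resident_pages * os.sysconf("SC_PAGE_SIZE") / 2**20
    except (OSError, ValueError, IndexError):
        peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
        # ru_maxrss is in bytes on macOS and in KiB on Linux.
        return peak / 2**20 if sys.platform == "darwin" else peak / 1024


def sample_rss(step, iterations, warmup=0):
    """Call step() repeatedly and record RSS (MiB) after each measured call.

    warmup calls run first and are not recorded, which helps skip the
    initial iterations where usage has not started to climb yet.
    """
    for _ in range(warmup):
        step()
    samples = []
    for _ in range(iterations):
        step()
        samples.append(rss_mib())
    return samples


def growth_per_iteration(samples):
    """Average RSS change (MiB) between consecutive samples."""
    if len(samples) < 2:
        return 0.0
    return (samples[-1] - samples[0]) / (len(samples) - 1)
```

With the test script above, `step` could be a small function that wraps the `pipe(prompt)` call, for example `sample_rss(run_once, iterations=50, warmup=15)`, where `run_once` is a hypothetical wrapper you define yourself. A steadily positive `growth_per_iteration` points at host memory rather than GPU memory, since RSS does not include device allocations.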

Just wanna double check you mean CPU memory, not GPU memory?

Yes, CPU memory.

https://github.com/facebookincubator/AITemplate/pull/43

fyi - confirming the same thing:

According to https://github.com/facebookincubator/AITemplate/pull/43, it looks like CUDA graph is causing the CPU memory leak. While we debug whether the cause is on the AIT side or the CUDA Graph side, we can disable CUDA graph for now; it makes only a small difference in speed.

This still occurs for me.

@mikeiovine fixed the bug. We will do a sync today to fix this issue.