physics2vec

Things to do with arXiv metadata :)

Summary

This repository is (currently) a collection of python scripts and notebooks that

-

Do a Word2Vec encoding of physics jargon (using gensim's CBOW or skip-gram, if you care for specifics).

Examples: "particle + charge = electron" and "majorana + braiding = non-abelian"

Remark: These examples were learned from the cond-mat section titles only. -

Analyze the n-grams (i.e. fixed n-word expressions) in the titles over the years (what should we work on? ;-))

-

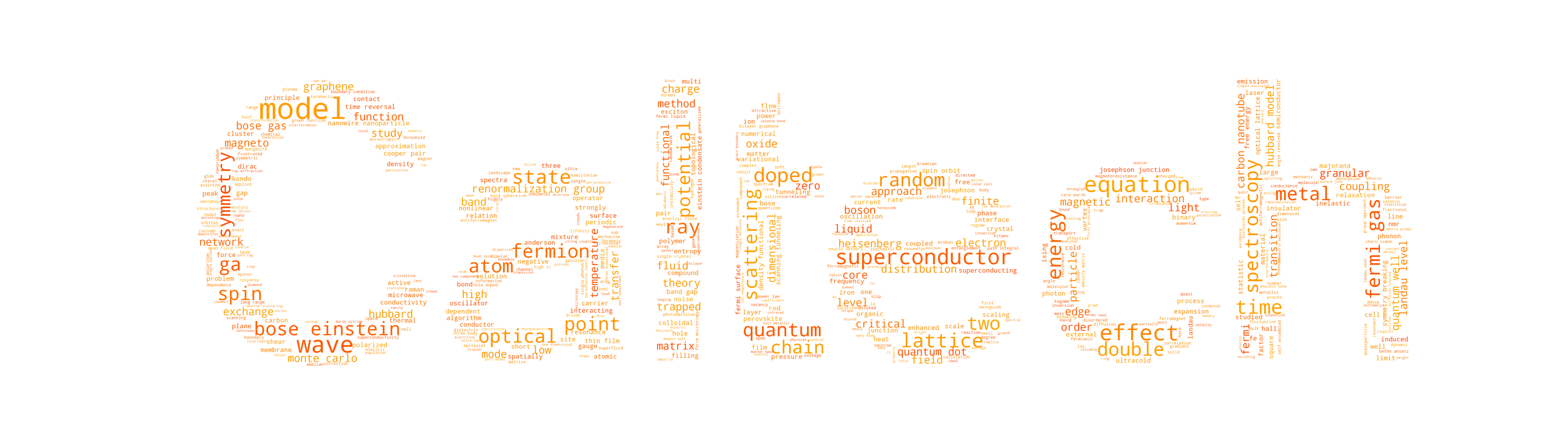

Produce a WordCloud of your favorite arXiv section (such as the above, from the cond-mat section)
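To give a feeling for what "particle + charge = electron" means, here is a minimal sketch of word-vector addition in plain Python. The vectors below are hand-made 3-dimensional toys, not output of a trained model, and `add_words` is a hypothetical helper, not a function from this repository or from gensim. The idea: add the vectors of the input words, then rank every other word by cosine similarity to the sum.

```python
import math

# Hand-made toy vectors, purely illustrative (assumption, not model output).
# A trained model learns such vectors (e.g. 100-dimensional) from the titles.
toy_vectors = {
    "particle": [1.0, 0.0, 0.0],
    "charge":   [0.0, 1.0, 0.0],
    "electron": [0.9, 0.9, 0.1],
    "phonon":   [0.9, 0.0, 0.5],
    "lattice":  [0.0, 0.1, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two equally long vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def add_words(vectors, words, topn=3):
    """Sum the vectors of `words` and rank all other words by similarity to the sum."""
    dim = len(next(iter(vectors.values())))
    total = [sum(vectors[w][i] for w in words) for i in range(dim)]
    candidates = [
        (word, cosine(total, vec))
        for word, vec in vectors.items()
        if word not in words  # never return the input words themselves
    ]
    return sorted(candidates, key=lambda pair: pair[1], reverse=True)[:topn]


result = add_words(toy_vectors, ["particle", "charge"])
print(result)  # "electron" ranks first for these toy vectors
```

With a real model the ranking depends entirely on the titles it was trained on, so the nearest word will not always be as clean as in this toy case.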

Notes

These scripts were tested and run using Python 3. I have not checked backwards compatibility, but I have heard from people who managed to get it to work in Python 2 too! Feel free to reach out to me in case things don't work out-of-the-box. I have not (yet) tried to make the scripts and notebooks super user-friendly, though I did try to comment the code such that you may figure things out by trial-and-error.

Quickstart

If you're already familiar with python, all you need to have are the modules numpy, pyoai, inflect and gensim. These should all be easy to install using pip/pip3. Then the workflow is as follows (I used python3):

1. python arXivHarvest.py --section physics:cond-mat --output condmattitles.txt

2. python parsetitles.py --input condmattitles.txt --output condmattitles.npy

3. python trainmodel.py --input condmattitles.npy --size 100 --window 10 --mincount 5 --output condmatmodel-100-10-5

4. python askmodel.py --input condmatmodel-100-10-5 --add particle charge

In step 1, we harvest the titles from arXiv. This is a time-consuming step; it took 1.5 hours for the physics:cond-mat section, so I've already provided the resulting files in the repository (i.e. you can skip steps 1 and 2). In step 2, we strip out the weird symbols etc. and parse the titles into a *.npy file. In step 3, we train a model with vector size 100, window size 10 and a minimum count of 5, i.e. words that occur fewer than 5 times in total are ignored. Step 4 can be repeated as often as one desires.
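To make the window and minimum-count parameters a bit more concrete, here is a rough sketch in plain Python of what they control. It is not the code in trainmodel.py and not gensim's implementation. The titles are made up, the small values of `MIN_COUNT` and `WINDOW` are chosen only so the toy data shows an effect, and `context_pairs` is a hypothetical helper. It drops rare words first and then pairs each word with its neighbours inside the window, which is a simplification of what a real Word2Vec trainer does.

```python
from collections import Counter

# Made-up, already cleaned titles (lowercase, no symbols), for illustration only.
titles = [
    "majorana modes in topological superconductors",
    "braiding majorana modes in nanowires",
    "topological superconductors and majorana modes",
]

MIN_COUNT = 2  # toy value (assumption); the quickstart uses --mincount 5
WINDOW = 2     # toy value (assumption); the quickstart uses --window 10

sentences = [title.split() for title in titles]

# Count every word over all titles and keep those that reach MIN_COUNT.
counts = Counter(word for sentence in sentences for word in sentence)
vocabulary = {word for word, n in counts.items() if n >= MIN_COUNT}


def context_pairs(sentence, window):
    """Yield (center, context) pairs for words at most `window` positions apart."""
    for i, center in enumerate(sentence):
        lo = max(0, i - window)
        hi = min(len(sentence), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                yield center, sentence[j]


pairs = []
for sentence in sentences:
    # Rare words are removed before the window is applied.
    kept = [word for word in sentence if word in vocabulary]
    pairs.extend(context_pairs(kept, WINDOW))

print(sorted(vocabulary))
print(pairs[:5])
```

A larger window lets words further apart in a title influence each other, while a larger minimum count removes rare words (and typos) from the vocabulary at the cost of losing rare jargon.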

More details

Apart from the above scripts, I provide 3 python notebooks that perform more than just the analysis of arXiv titles. I highly recommend using notebooks. Very easy to install, and super useful. See here: http://jupyter.org/. You can also just copy-and-paste the code from the notebooks into a *.py script and run those.

You are going to need the following Python modules in addition, all installable using pip3 (sudo pip3 install [module-name]).

- numpy

  Must-have for anything scientific you want to do with Python (arrays, linalg)

  Numpy (http://www.numpy.org/)

- pyoai

  Open Archives Initiative module for querying the arXiv servers for metadata

  https://pypi.python.org/pypi/pyoai

- inflect

  Module for generating/checking plural/singular versions of words

  https://pypi.python.org/pypi/inflect

- gensim

  Very versatile module for topic modelling (analyzing basically anything you want from text, including word2vec)

  https://radimrehurek.com/gensim/

Not required, but highly recommended is the module "matplotlib" for creating plots. You can comment/remove the sections in the code that refer to it if you really don't want to.

Optionally, if you wish to make a WordCloud, you will need

- Matplotlib (https://matplotlib.org/)

- PIL (http://www.pythonware.com/products/pil/)

- WordCloud (https://github.com/amueller/word_cloud)