Parsing large (~MB) files in BER format too lengthy

Dear Ilya,

first, thanks for a complex and very capable library!

However, I have a question / suggestion. We are parsing large files (telco data) in BER format (associated with the .asn description) that typically contain hundreds or thousands of records (saved one after another), being hundreds of kB to MB's large.

As the file size grew, so did the time necessary to parse it - roughly quadratically for larger files. Given that the stream of data is linear with no back-and-forth jumps, this behaviour was a bit surprising. Indeed, if we "cheat" (first finding where each record ends) and split the files into fragments, suddenly we observe a speedup of ~8 times.

What seems to be the root of this issue, is the frequent copying of strings which is cheap but finally has the O(n^2) characteristics. There is a lot of lines like octet, substrate = substrate[0], substrate[1:] that unfortunately end in copying most of the string over and over again.

Having studied the code a bit (not completely though), I don't see any reason why random access to the string is strictly required (or is it? I may have missed some important piece) and I believe that a sequential approach based on BytesIO (and hopefully O(n)) would be perfectly valid. What do you think?*

*I understand that this would require changing the API a little bit, it would help only for large files and otherwise disrupt a stable code base. Still, I think it is worth giving a thought in the longer perspective.

I am happy to provide as much detail as possible (as far as the sensitive nature of data allows) and eventually I can suggest code the new implementation if you find this useful.

Thanks, Jan

Practically, the following plot shows how lengthy (in microseconds) is to process each of the 3000 records in a file (all of them are of similar complexity).

An unclean hack-until-the-code-works-in-your-specific-Python-version-for-your-concrete-example-file style solution is implemented in https://github.com/janpipek/pyasn1/tree/naive_bytesio_attempt . I don't consider the code ready or correct or anything, it would take days to make sure everything is right and proper. But I hope the gist of it is clear.

Hi Jan,

Thank you for digging into the code and profiling the slow spots.

I totally support doing something to speed it up! My only concern is if the evil is indeed in string copying... I can't help but wonder where O^2 comes from...

I did some profiling in the past, so my impression was that pyasn1 has too much of the generality to be fast - all these composable tags, constraint trees, etc - these features are CPU hogs... But I'd be happy to be wrong!

You say that with your patch you observe 8-fold decoder speed-up on large files, is that correct? How does it behave time-wise on small (tens of bytes) data structures? What if you have many small pieces together? Or just a few larger ones? When does it choke?

To answer your questions: I think BER-based de/serializers do not require random access to substrate. There is even so-called "indefinite length encoding mode" in BER which operates on indefinitely large, partial substrate. I am not so sure about PER, though it's not yet implemented.

I plan on a major release (1.x) deprecating wrong choices of the past, dropping Python 2.6 support and breaking backward compatibility in many subtile ways. In that light, switching over to BytesIO does not look disastrous.

One of potentially relevant features I had in mind is to turn the decoder (and may be the encoder as well?) into some sort of coroutine consuming potentially incomplete substrate and emitting Python objects (i.e. ASN.1 data structures), then yielding control back to the caller waiting for more input. Perhaps encoder can work similarly, but reversed. The ultimate goal is to support en/decoding indefinitely large and not fully available at once data structures.

If you can pursue the BytesIO (or some other) effort to improve decoder performance, I will try to support you from code review side as much as time allows.

Thank you!

Hi,

thanks for the comments.

I made some effort to implement the ideas correctly (passing most tests but one functionality I am not able to deal easily with), see PR #175 .

I totally support doing something to speed it up! My only concern is if the evil is indeed in string copying... I can't help but wonder where O^2 comes from...

Well, one string copying (e.g. substrate[1:]) is linear in size of the data - O(n), but with (multiple) large structures, you tend to make O(n) of these operations, thus O(n^2). This holds asymptotically - practically, the speed of copying middle-sized data depends on many HW/OS/interpreter-dependent factors.

You say that with your patch you observe 8-fold decoder speed-up on large files, is that correct? How does it behave time-wise on small (tens of bytes) data structures? What if you have many small pieces together? Or just a few larger ones? When does it choke?

In the end, I am able to get to ~4-5 times speed up with the large file ("only"). I am currently designing the benchmarks for small data. If you had a set of representative set of typical data (other than those in the tests), I will include them.

Not too optimistic yet...

I will think about it...

Not too optimistic yet...

Your benchmarks look similar to mine! Feels like too many moving parts under the hood...

If you had a set of representative set of typical data (other than those in the tests), I will include them.

We have a whole lot of real-world data in pyasn1-modules test suite, thanks to all the recent work of @russhousley!

Hi Ilya, thanks for the comments and for the detailed code review. I hope I will get to another attempt at the beginning of the week.

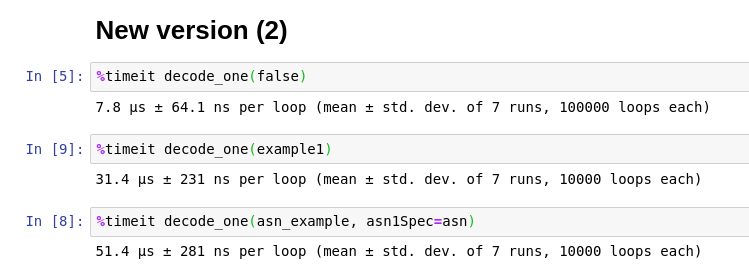

Hi Ilya, after another two re-implementations (currently in https://github.com/janpipek/pyasn1/tree/new_stream_implementation - later, I will merge this into the PR), I am getting to more comparable results:

I have still a few ideas left so hopefully it gets almost on par later today.

Great news! Thank you for keep working on that!

Does the new implementation exhibit much better performance on the large files compared to the original (string copying) implementation?

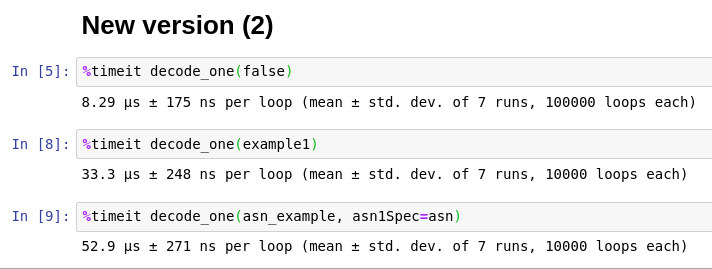

And... after a few more optimizations, I actually got to being slightly faster for all but the most simple boolean example with Python 3.7 at the price of (still) pre-reading files in 2.7 (as of commit https://github.com/janpipek/pyasn1/commit/d8f8b667020dc6fd116f2de3c7a2b8bd2079b6a8) :

If I change to accepting file as a stream in Python 2.7 (commit https://github.com/janpipek/pyasn1/commit/2a729e6e0c922ebddbc894396f9aac7538ae803c ), I must pass the **options and get a bit slower:

Does the new implementation exhibit much better performance on the large files compared to the original (string copying) implementation?

Yes, about 5 times for the file of interest :-)

What do you think about the balance between speed in 3.7 and memory-effectiveness in 2.7?

Note: I was not able to test against the pyasn1-modules (master) because of:

tests/test_rfc6211.py:18: in <module>

from pyasn1_modules import rfc6211

pyasn1_modules/rfc6211.py:51: in <module>

constraint.WithComponentsConstraint(

E AttributeError: module 'pyasn1.type.constraint' has no attribute 'WithComponentsConstraint

Have you changed the API lately?

A new feature has been added to pyasn1. You need to rebase your work on top of master (or the latest release) to make it functional with pyasn1-modules master.

Alternatively, you could test your code with older version of pyasn1-modules e.g. the latest release.

On 6 Sep 2019, at 11:24, Jan Pipek [email protected] wrote:

Note: I was not able to test against the pyasn1-modules (master) because of:

tests/test_rfc6211.py:18: in

from pyasn1_modules import rfc6211 pyasn1_modules/rfc6211.py:51: in constraint.WithComponentsConstraint( E AttributeError: module 'pyasn1.type.constraint' has no attribute 'WithComponentsConstraint Have you changed the API lately?

A new feature has been added to pyasn1. You need to rebase your work on top of master (or the latest release) to make it functional with pyasn1-modules master.

I see - ok, thanks :-)