"Database compacting, degraded performance database" for 2 days already

My node was already fully synced and working fine, randomly started doing that and it's not finishing or giving any progress.

Geth version: Version: 1.9.25-stable

OS version: Ubuntu 20.04.1 LTS

Parameters: geth --cache 4096 --http --http.api "txpool,personal,db, eth, net, web3" --ws --ws.api "web3, eth" --maxpeers=15 --datadir /mnt/geth --datadir.ancient /mnt/freezer --http.corsdomain * --ws.origins * --rpcvhosts=* --port 2600

/mnt/geth is an SSD, /mnt/freezer is an HDD

Any help is appreciated. I need to get my node working.

I saw a bunch of people in forums with this issue and they ended up deleting chaindata and resyncing, which is something that with my connection would take quite a while. I'm trying the --nocompaction flag now, will report

What kind of IO performance do you have on your SSD and what kind of FileSystem are you running on the SSD? Also it would be great if you could provide some logs.

Please also do not use the --nocompaction flag.

This issue has been automatically closed because there has been no response to our request for more information from the original author. With only the information that is currently in the issue, we don't have enough information to take action. Please reach out if you have more relevant information or answers to our questions so that we can investigate further.

hey @fer662! what happened here? did you ever fix the problem?

Sadly i had to re sync from scratch. there was no way to resolve it.

On Fri, Feb 12, 2021 at 9:55 PM Enz [email protected] wrote:

hey @fer662 https://github.com/fer662! what happened here? did you ever fix the problem?

— You are receiving this because you were mentioned. Reply to this email directly, view it on GitHub https://github.com/ethereum/go-ethereum/issues/22056#issuecomment-778533279, or unsubscribe https://github.com/notifications/unsubscribe-auth/AACR7YYYHL5PZPK3ELJYLYLS6XEXNANCNFSM4VF3Z7BA .

have you finished syncing it?

my database was compacting for 10m then continued on it's regular stuff.

Yes, it took a reasonable amount of time that i cannot remember exactly and has been running fine since. The hardware is the same, and the issue didn't stem from it for sure.

On Fri, Feb 12, 2021 at 9:57 PM Enz [email protected] wrote:

have you finished syncing it?

— You are receiving this because you were mentioned. Reply to this email directly, view it on GitHub https://github.com/ethereum/go-ethereum/issues/22056#issuecomment-778533622, or unsubscribe https://github.com/notifications/unsubscribe-auth/AACR7Y7UID5H3ZWD3IYP4S3S6XE6TANCNFSM4VF3Z7BA .

I recommend closing this issue.

Happened to me when I upgrade to Geth 1.10.1 from 1.9.25 on Ropsten. The 1.9.25 was fully synced at time of doing the operation.

I first stopped the running 1.9.25 instance. Took a GCP volume snapshot. Created a new container with a new disk created using the snapshot I just took and started the 1.10.1 instance.

At launch, Geth 1.1.0.1 took around 10m even before initializing the IPC file. While doing this, htop showed heavy disk usage, around 160Mb/s (I don't recall exactly if it was more on the read or the write side).

After ~10m, the node started the IPC, connected to the network and synced around 10 blocks, then entered the database compacting stage. This ran for around 5h until I gave up.

Here the leveldb.stats on the 1.9.25 instance

Level | Tables | Size(MB) | Time(sec) | Read(MB) | Write(MB)

-------+------------+---------------+---------------+---------------+---------------

1 | 50 | 99.27759 | 35.00957 | 814.65889 | 771.45813

2 | 647 | 999.75740 | 46.79872 | 1487.34292 | 1485.75268

3 | 4844 | 9097.79491 | 325.22367 | 5466.02774 | 4459.89624

4 | 539 | 400.50786 | 0.00000 | 0.00000 | 0.00000

5 | 68207 | 136884.92513 | 0.00000 | 0.00000 | 0.00000

-------+------------+---------------+---------------+---------------+---------------

Total | 74287 | 147482.26288 | 407.03196 | 7768.02954 | 6717.10706

And here the same on the 1.10.1 after running 5 hours (in fact, after having forced kill it):

Level | Tables | Size(MB) | Time(sec) | Read(MB) | Write(MB)

-------+------------+---------------+---------------+---------------+---------------

0 | 74352 | 148558.54873 | 0.00000 | 0.00000 | 0.00000

-------+------------+---------------+---------------+---------------+---------------

Total | 74352 | 148558.54873 | 0.00000 | 0.00000 | 0.00000

Seems it was trying to compact this gigantic amount of L0 tables. The LOG file in the chaindata folder seems to agree:

cat LOG | grep com

23:06:01.461484 table@compaction L0·74352 -> L1·0 S·145GiB Q·1243574076

And for some level of estimation of the time it would have take:

cat LOG | grep com

23:06:01.461484 table@compaction L0·74352 -> L1·0 S·145GiB Q·1243574076

23:08:28.748009 table@build created L1@8383020 N·9976 S·2MiB "\x00\x00\x00..'\xc6P,v268503960":"\x00\x01\x10..\x86t\xd9,v591541816"

...

04:27:32.131617 table@build created L1@8384256 N·9834 S·2MiB

So from my (limited) understanding of levedb, it compacted 1236 tables (8384256 - 8383020) in ~5h so ~247 tables/h and I imagine probably 12 days (74532 / 247 / 24 ~= 12). The compaction read throughput it really really low, like 150Kb/s to 300Kb/s in both read & write. A single CPU core was maxed out at 100% during the whole time.

Now, why I turned having that amount of L0 tables, I have no idea. No sure what happened to cause this. I'll redo a round of restore + restart to see if I can avoid the switch to all L0 tables.

I upgrade other nodes (4 in totals) in-place (i.e. without a volume snapshot + new disk) correctly without problems, not sure what volume snapshot part caused this.

Another try of restoring from snapshot lead to similar results. I now early stopped the Geth process, then ran geth db stats, which waited around ~8 minutes before returning a result.

I better monitored the disk usage this time, I can say the bulk of I/O was read operation around 360MB/s (which probably tops the cloud provider disk throughput) with some infrequent write operations but with high peaks.

The problem is probably on our side though, I think at least, something must have changed. I'll report if I find what my problem is.

I just realized that this particular node in the 1.9.25 version as no L0 tables at all, could it be what causes leveldb to return everyone else to L0 when I restored the volume?

The node from which I create the volume 1.9.25:

Level | Tables | Size(MB) | Time(sec) | Read(MB) | Write(MB)

-------+------------+---------------+---------------+---------------+---------------

1 | 50 | 99.27759 | 35.00957 | 814.65889 | 771.45813

2 | 647 | 999.75740 | 46.79872 | 1487.34292 | 1485.75268

3 | 4844 | 9097.79491 | 325.22367 | 5466.02774 | 4459.89624

4 | 539 | 400.50786 | 0.00000 | 0.00000 | 0.00000

5 | 68207 | 136884.92513 | 0.00000 | 0.00000 | 0.00000

-------+------------+---------------+---------------+---------------+---------------

Total | 74287 | 147482.26288 | 407.03196 | 7768.02954 | 6717.10706

Another different node we have that is now migrated to 1.10.1 (from 1.9.25, in place update):

> debug.chaindbProperty("")

Compactions

Level | Tables | Size(MB) | Time(sec) | Read(MB) | Write(MB)

-------+------------+---------------+---------------+---------------+---------------

0 | 2 | 579.18027 | 150.50175 | 0.00000 | 6482.17941

1 | 52 | 98.23994 | 216.76490 | 9124.62485 | 9057.91622

2 | 515 | 998.03997 | 232.92075 | 9384.97616 | 9234.87690

3 | 5041 | 9998.09568 | 507.32804 | 20616.17042 | 19961.03069

4 | 4345 | 3344.61014 | 85.07847 | 2852.64561 | 2853.14748

5 | 68214 | 136918.26574 | 9.72853 | 1030.72084 | 1021.91198

-------+------------+---------------+---------------+---------------+---------------

Total | 78169 | 151936.43174 | 1202.32244 | 43009.13788 | 48611.06267

Same problem here, after a power outage that stopped my node for 4 days. Until then the node had been working without problems.

So most people had to resync the whole chaindata. Unreliable. Seems a joke.

This happened to me while syncing an archive node, about two days (1.2 TB) in. I just let it run for a while, and it resumed its normal behavior after ~5 minutes.

I started to see this issue after upgrading my geth version to:

Version: 1.10.4-stable

Git Commit: aa637fd38a379db6da98df0d520fb1c5139a18ce

Architecture: amd64

Go Version: go1.16.4

Operating System: linux

GOPATH=

GOROOT=go

With my earlier version, of which version number I did not record, I managed to sync geth just fine.

I start with a fresh data folder. After a while (~1h), for the snap sync, geth stops syncing and only does database compaction.

Here is a log extract:

INFO [06-22|19:23:23.632] Imported new block headers count=2048 elapsed=354.813ms number=2,837,698 hash=426fb5..cbbc99 age=4y6mo3w

INFO [06-22|19:23:24.001] Imported new block headers count=2048 elapsed=359.921ms number=2,839,746 hash=097d57..0381e9 age=4y6mo3w

INFO [06-22|19:23:24.582] Imported new block receipts count=2048 elapsed=1.018s number=2,795,628 hash=182155..b305b6 age=4y7mo3d size=4.13MiB

WARN [06-22|19:23:26.231] Database compacting, degraded performance database=/root/.ethereum/geth/chaindata

WARN [06-22|19:23:28.438] Dropping unsynced node during sync id=b3f5796c6727a343 conn=inbound addr=86.125.31.39:60186 type=ethereumjs-devp2p/v4...

ERROR[06-22|19:24:10.244] Snapshot extension registration failed peer=3af25a30 err="peer connected on snap without compatible eth support"

WARN [06-22|19:24:15.699] Dropping unsynced node during sync id=9b986db754dae7bf conn=inbound addr=15.161.212.85:54304 type=Nethermind/v1.10.73-...

WARN [06-22|19:24:18.716] Dropping unsynced node during sync id=82b68c45c1649e07 conn=dyndial addr=45.77.49.160:30305 type=Geth/v1.10.2-stable-...

WARN [06-22|19:24:26.242] Database compacting, degraded performance database=/root/.ethereum/geth/chaindata

WARN [06-22|19:24:39.669] Dropping unsynced node during sync id=d0ad027d764435b3 conn=inbound addr=139.99.9.58:59418 type=Geth/v1.9.24-stable-...

WARN [06-22|19:25:26.254] Database compacting, degraded performance database=/root/.ethereum/geth/chaindata

WARN [06-22|19:25:27.664] Dropping unsynced node during sync id=9571cfd7011a9334 conn=dyndial addr=45.32.166.77:30305 type=Geth/v1.10.2-stable-...

WARN [06-22|19:25:28.378] Dropping unsynced node during sync id=c3ce42a8b5ccb91f conn=dyndial addr=143.198.114.251:30303 type=Geth/v1.10.1-stable-...

WARN [06-22|19:26:26.263] Database compacting, degraded performance database=/root/.ethereum/geth/chaindat

Geth does not never seem to resume the syncing after encountering the Database compacting issue, but is forever stuck compacting the database.

I tried to explicitly disable the database compacting with the command line:

geth --http --cache 8000 --ipcpath /root/geth.ipc --gcmode "archive" --syncmode snap --nocompaction

... but it still does the Database compatcing and --nocompaction flag seem to have no effect.

The result is that I cannot get geth synced. I am not sure if this is related to geth version upgrade or something else.

The server is running with NVMe disk directly attached to it, so it should have plenty of IO power and lack of IO should not be an issue.

If I abort the sync with CTRL+C and restart, geth seems to be syncing just fine until it hits the first Database compacting and ends up to the same compact loop.

Even after issuing shutdown with CTRL+C the database seems to be compacting and does not have an end in sight.

^CINFO [06-22|20:00:58.344] Got interrupt, shutting down...

INFO [06-22|20:00:58.347] HTTP server stopped endpoint=127.0.0.1:8545

INFO [06-22|20:00:58.347] IPC endpoint closed url=/root/geth.ipc

WARN [06-22|20:00:58.348] Rewinding blockchain target=3,980,454

WARN [06-22|20:01:06.332] Checkpoint challenge timed out, dropping id=dce3679d582caf1b conn=inbound addr=79.142.129.218:34916 type=erigon/v2021.06.4/li...

WARN [06-22|20:01:09.887] Database compacting, degraded performance database=/root/.ethereum/geth/chaindata

WARN [06-22|20:02:09.897] Database compacting, degraded performance database=/root/.ethereum/geth/chaindata

WARN [06-22|20:03:09.908] Database compacting, degraded performance database=/root/.ethereum/geth/chaindata

I downgraded to Geth 1.10.1 and now was able to sync fine. Though the database compacting still happens, it does not grind the process to a halt. Maybe some database compacting settings have been changed more aggressive recently?

Does anyone know why this happens? I am trying to sync a new node (1.10.4) which is going very well, but then grinds to a halt for ~25 minutes. It goes completely AWOL to do this compacting. I'm scared to run my validator on a node that just stops for 25 minutes.

Hi there. Facing the same issue here, using geth 1.10.5-stable-33ca98ec

Due to it seems --nocompacting is not working, any other feedback/recommendation? Thanks!

Jul 16 08:34:42 baremetal01 geth[2830]: WARN [07-16|08:34:42.850] Database compacting, degraded performance database=/var/lib/goethereum/geth/chaindata Jul 16 08:35:42 baremetal01 geth[2830]: WARN [07-16|08:35:42.862] Database compacting, degraded performance database=/var/lib/goethereum/geth/chaindata Jul 16 08:36:42 baremetal01 geth[2830]: WARN [07-16|08:36:42.872] Database compacting, degraded performance database=/var/lib/goethereum/geth/chaindata Jul 16 08:37:42 baremetal01 geth[2830]: WARN [07-16|08:37:42.880] Database compacting, degraded performance database=/var/lib/goethereum/geth/chaindata

note: current information on this issue as of 2021-11-16 is https://github.com/ethereum/go-ethereum/issues/22056#issuecomment-970486232

This issue is tagged 'performance' but it brings down an entire node.

This happened to me (long term compacting, nothing else) after a power failure. [edit] It looks like it could be exacerbated by failure to shut down properly. My ethereum folder is on a RAID 6 with only 4/5 disks present; the filesystem is ext4 and is mounted with journal_data . Replaying the journal took a very long time on powerup. My system is a raspberry pi running ubuntu amd64 with only 3.3G of userspace ram and 8G of microsd swap.

I tried downgrading from 1.10.4 to 1.10.1 and this did not resolve the issue.

My node was still syncing when the power failure happened. It was doing a normal sync, after previously trying it for a day or two doing a fast sync. It was running into occasional crashes and I had it on a scripted loop to auto-reboot.

Well, there was no interruption on my system. It is on battery backup in a data center. Geth was running with no issues when this happened.

I am now getting this reliably, even after wiping chaindata. What's considered an acceptable time to wait to get through database compaction? Here's a trace log of a run: https://gateway.ipfs.io/ipfs/QmUGSGz9QPo2fcU6zSpZ2EsPzo7Zo3PLM4jMoZ9HTQDGH7 (just pinned on my pi atm, so download if needed) edit: ping me if you want me to bring this back up, it's on my ubuntu annexpi's ipfs snap

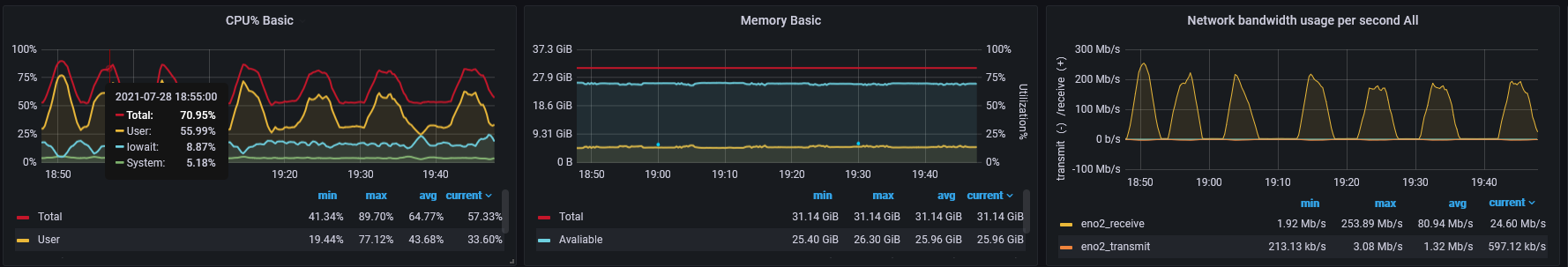

I'm on Geth 1.10.6 and syncing for the first time and getting this Database compacting, degraded performance message as well on a regular basis. it seems to resolve itself after a while and then start again. In fact his can be easily visualized from a System Metrics dashboard:

CPU usage drops and network usage as well during compaction. compaction runs for about 5min. This of course also greatly slows down beaconchain synctime. I do have an infura fallback but not sure how fast that kicks in.

I have a 6-core Xeon however ssd is a crucial p2, no dram so not the fastest breed. Could this be the cause? Didn't observe this behavior on testnet.

My raspberry pi is working after being left alone for a few days. I have not seen the issue since. I personally wonder if it relates to network behavior, as I've had other p2p issues, but I have no expertise or experience here.

i'm on a pi as well and experiencing this issue...did we find any definitive solution?

i'm on a pi as well and experiencing this issue...did we find any definitive solution?

I haven't fully synced yet due to other issues, but if you can keep your pi online this long, giving it up to a week to resolve on its own seems like it would be a helpful litmus test.

I kept my pi on for about 7 hrs before just rebooting. I didn't wait a week or any longer cuz I was pretty sure the process had grinded to a halt. I used to nohup and & to run the process in the background and exit my ssh connection without it stopping. I was monitoring it with htop, and there was no process running related to geth, and barely any processor power was being consumed. So I concluded it probably stopped. Restarted and now it seems to be humming along again.

Seeing the same issue even after a rebuild...

I have this problem after a sudden power cut. It persists even after removing the data directory and starting fresh. My hypothesis is that the SSD filesystem somehow got corrupted by experiencing a power loss during writing.

I am running an eth2 node with geth.

This has happened to me as well since upgrading to the geth version that had corruption issues (1.10.10 to 1.10.11). This has persisted over a month often throwing ext4-fs errors. I have run SMARTmon and fsck from a live CD on the drives, resynced chaindata from scratch (several times). And I cannot get geth to run anymore without causing my node to hang up.

I am able to sync with Alchemy in the meantime, but would love any help to get back to having my local geth operational.