embassy

SysTick time driver

Arm Cortex processor cores commonly include a SysTick peripheral.

SysTick offers some significant advantages. It is a 24-bit downcounter, which means it wraps 256 times less frequently than, e.g., an STM32 16-bit timer peripheral running from the same clock, which is desirable at high tick rates. Reading SYST_CSR returns COUNTFLAG and implicitly clears it, so absolute counter values can be reliably accumulated without needing two interrupts per cycle. Because SysTick is an Arm peripheral, code which uses SysTick is readily shared between devices from different vendors.

SysTick poses some significant challenges. SysTick supports two clock sources: either the processor clock or a vendor-defined "reference clock". Neither of these frequencies is known at compile time, nor is there a vendor-agnostic way to determine them, so there will need to be a vendor-specific runtime translation from SysTick ticks to Embassy ticks. Additionally, SysTick can only trigger an interrupt when it counts down to 0. Setting an alarm for a specific point in the current cycle therefore requires either modifying the counter register while it's counting (a read-modify-write cycle which perturbs the overall tick count) or using SysTick as a free-running timebase plus a separate hardware timer for near-term events. SysTick is a core peripheral, so dual-core devices like the RP2040 have two independent SysTick timers, and neither core can access the other core's SysTick.

I intend to take a stab at implementing a SysTick-based embassy::time::driver::Driver for STM32. My motivating use case a) requires a high tick rate and b) targets a device with zero 32-bit timers (#784), so a 24-bit timer at 64 MHz (SYSCLK), or especially at 8 MHz (the "reference clock", here HCLK/8) downsampled to 1 MHz ticks, would be a win over a 16-bit timer counting at 1 MHz directly. The STM32 time driver using a 16-bit timer peripheral triggers at least two interrupts per cycle, i.e. one every 32.768 ms, vs. one every 262.144 ms or 2097.152 ms for a hypothetical SysTick driver.
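The wrap periods above follow directly from counter width and clock rate. As a quick check (plain arithmetic; `wrap_period_us` is just an illustrative helper, not part of embassy):

```rust
/// Microseconds until a `bits`-wide counter clocked at `clock_hz` wraps around.
/// Integer division, so the result is exact only when it comes out whole,
/// as it does for the clock rates discussed here.
fn wrap_period_us(bits: u32, clock_hz: u64) -> u64 {
    ((1u64 << bits) * 1_000_000) / clock_hz
}
```

`wrap_period_us(16, 1_000_000)` gives 65_536 µs (so two interrupts per cycle means one every 32_768 µs), `wrap_period_us(24, 64_000_000)` gives 262_144 µs, and `wrap_period_us(24, 8_000_000)` gives 2_097_152 µs.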

My initial impressions:

- A SysTick-based central timer is just not worth it for multi-core devices, so it would need to be gated to single-core devices. This shouldn't really be a loss, because I don't know of any multi-core devices where SysTick is one of the better timers, but it does mean a loss of generality.

- Setting alarms is not fun. You set the reload register, then write to the counter to trigger a reload. But first you have to read the counter to see how many ticks remain in this cycle, and read how long this cycle was originally to figure out how many ticks have already elapsed, and you have to accumulate that in a 64-bit counter. All this takes a nonzero amount of time, and if you don't want to lose those ticks, you need a calibration step that measures how long it all takes. And of course you're racing the counter too: if you're close to a reload event, you can either do something complicated, or just spin, wait for the automatic reload, and try to set the alarm again afterwards. All of this adds to program size.

- Mapping SysTick ticks to Embassy ticks is a performance sink. `armv6m` does not have an integer division instruction; division is done by a library function. I couldn't make a version of `now()` with a divisor decided at runtime anything resembling fast.
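A minimal, host-testable model of the alarm bookkeeping described above, assuming SysTick counts down from the reload value to 0 and treating a cycle as exactly `reload` ticks long (real hardware spends one extra tick reloading). `SysTickModel`, `reprogram`, `on_wrap` and the `overhead` calibration constant are hypothetical names, and register reads and writes are replaced by plain arguments and return values:

```rust
/// SysTick is a 24-bit counter, so SYST_RVR can hold at most this value.
const MAX_RELOAD: u32 = 0x00FF_FFFF;

/// Hypothetical model of reprogramming SysTick mid-cycle without losing count.
struct SysTickModel {
    /// SysTick ticks accumulated from cycles that completed or were cut short.
    total: u64,
    /// Reload value the cycle currently in progress started from.
    reload: u32,
}

impl SysTickModel {
    /// Ticks elapsed so far, given a SYST_CVR reading `current`.
    /// SysTick counts down, so `reload - current` ticks of this cycle have passed.
    fn now(&self, current: u32) -> u64 {
        self.total + u64::from(self.reload - current)
    }

    /// Cut the current cycle short at counter value `current` and start a new
    /// one that expires after `delta` ticks. `overhead` is the calibrated number
    /// of ticks the read-modify-write sequence itself is assumed to take.
    /// Returns the value to write to SYST_RVR before clearing SYST_CVR.
    fn reprogram(&mut self, current: u32, delta: u32, overhead: u32) -> u32 {
        self.total = self.now(current) + u64::from(overhead);
        // A reload of 0 never raises the exception, so use at least 1.
        self.reload = delta.clamp(1, MAX_RELOAD);
        self.reload
    }

    /// Called from the SysTick exception when a cycle runs to completion.
    fn on_wrap(&mut self) {
        self.total += u64::from(self.reload);
    }
}
```

The race with the counter reaching 0 between the read and the write is not modelled here; the sketch only shows why the 64-bit accumulator and the calibration constant are needed at all.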

Some ideas for improvements which I may or may not pursue:

- It would be advantageous to know at compile time that SysTick will run at (say) 8 MHz while Embassy ticks will run at 1 MHz. With that knowledge, `now()` could be specialized at compile time to use a bit shift. This would be a lot faster than dividing by an arbitrary divisor determined at runtime.

- SysTick is part of the core, so its tick rate will always be closely related to the speed at which instructions are processed. If the code which alters it takes constant time (or close enough to constant time), SysTick could be stopped, read, altered, and restarted without functionally losing count. This would be a lot simpler and a lot smaller than leaving it running.
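The first idea could look roughly like this, assuming both rates are fixed constants in the build (`SYSTICK_HZ`, `TICK_HZ` and `systick_to_embassy` are illustrative names, not an existing embassy API). The ratio is checked at compile time, so the conversion is a constant shift instead of a call to a software division routine:

```rust
/// Assumed SysTick clock (e.g. HCLK/8) and Embassy tick rate, fixed at build time.
const SYSTICK_HZ: u64 = 8_000_000;
const TICK_HZ: u64 = 1_000_000;

const RATIO: u64 = SYSTICK_HZ / TICK_HZ;
// Refuse to build if the ratio is not a whole power of two.
const _: () = assert!(SYSTICK_HZ % TICK_HZ == 0 && RATIO.is_power_of_two());
const SHIFT: u32 = RATIO.trailing_zeros();

/// Compile-time specialized conversion: a constant shift, no division.
#[inline]
fn systick_to_embassy(systick_ticks: u64) -> u64 {
    systick_ticks >> SHIFT
}

/// Runtime-divisor version for comparison; on armv6m this 64-bit division
/// is lowered to a library call.
fn systick_to_embassy_runtime(systick_ticks: u64, ratio: u64) -> u64 {
    systick_ticks / ratio
}
```

Both functions return the same values for a ratio of 8; the difference is only in what the compiler has to emit.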

:+1: to having a "portable" time driver that only uses ARM stuff, but ARM isn't making it easy, yeah... :sweat_smile:

"Calibrating" how much time stopping+restarting systick takes doesn't seem like a solid solution to me. There are lots of factors that could influence it, like flash cache state, being preempted by interrupts...

There are a few alternative options, taking inspiration from RTIC's Monotonic impls (roughly equivalent to embassy's time driver):

- Make systick overflow at a fixed rate. This means if you want 1kHz precision you need the irq to fire at 1kHz which is not great. https://github.com/rtic-rs/systick-monotonic/blob/master/src/lib.rs

- Using systick for alarms and DWT CYCCNT for free-running tick count. Using something intended for debug seems a bit ugly, but it looks very promising otherwise: https://github.com/rtic-rs/dwt-systick-monotonic/blob/master/src/lib.rs
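For the second option, the free-running count has to be widened beyond 32 bits. One common way is to detect wraparound between successive reads, sketched here with the raw CYCCNT value passed in so the logic can be tested off-target. `Extended64` is a hypothetical name, and this is not claimed to be how the linked crate does it; it is only correct if the counter is read at least once per wrap period (2^32 cycles, about 67 s at 64 MHz):

```rust
/// Extends a free-running, wrapping 32-bit counter (such as DWT CYCCNT) to 64 bits.
struct Extended64 {
    /// Previous raw reading.
    last: u32,
    /// Number of wraps seen so far.
    wraps: u64,
}

impl Extended64 {
    const fn new() -> Self {
        Self { last: 0, wraps: 0 }
    }

    /// Feed a fresh raw reading and get the extended 64-bit count back.
    fn update(&mut self, raw: u32) -> u64 {
        if raw < self.last {
            // The counter went "backwards", so it must have wrapped since the last read.
            self.wraps += 1;
        }
        self.last = raw;
        (self.wraps << 32) | u64::from(raw)
    }
}
```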

> Make systick overflow at a fixed rate. This means if you want 1kHz precision you need the irq to fire at 1kHz which is not great.

The attraction of SysTick for me is that it can offer high-precision timekeeping (MHz) with enough bits that it doesn't need frequent servicing, particularly on low-end devices which lack 32-bit timer peripherals. This means reading the counter itself instead of accumulating ticks on overflow.

> Using systick for alarms and DWT CYCCNT for free-running tick count. Using something intended for debug seems a bit ugly, but it looks very promising otherwise

I looked into that, but… compare the Cortex-M3 DWT docs to the Cortex-M0 DWT docs. Notice anything missing? ☹️

CYCCNT is less widely available than SysTick; in particular, it is not available on bottom-of-the-barrel devices.

> "Calibrating" how much time stopping+restarting systick takes doesn't seem like a solid solution to me. There's lots of factors that could influence it, like flash cache state, being preempted by interrupts...

It's definitely sloppy but I'm not convinced that's a dealbreaker. Devices which are too small to have 32-bit timer peripherals aren't likely to have complex cache subsystems, so the assumption that reload timing is reasonably deterministic is less wrong in that context than elsewhere.

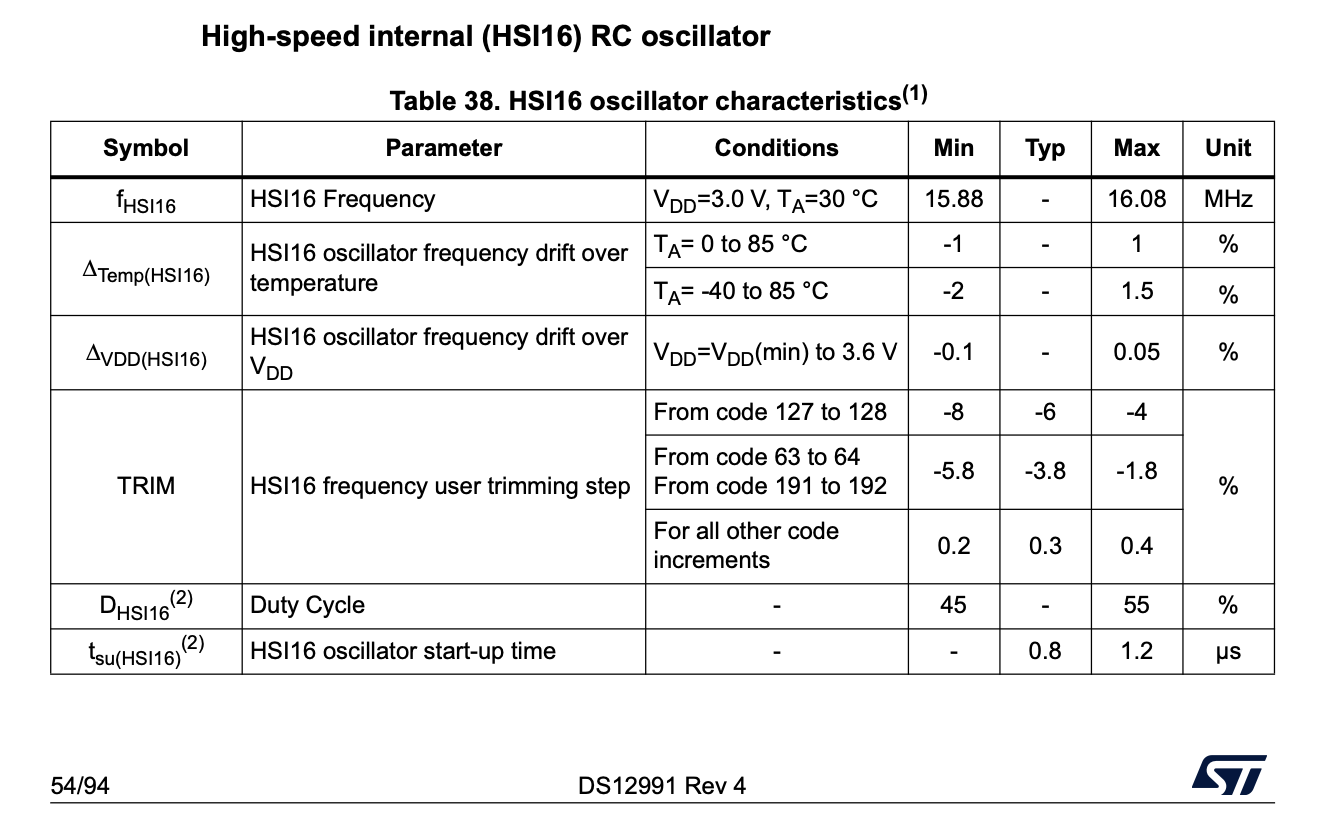

In my case, SysTick can run at either 64 MHz or 8 MHz, so getting the reload timing wrong even by a few SysTick ticks would still be under 1 ppm of error. Crystals are generally specified at ±50 ppm or ±20 ppm, so that seems… fine? And in my project, I don't even have a crystal, so I'm at the mercy of the onboard RC oscillator:

Having said that, I'm pretty sure the simplest and most accurate way to use SysTick as a high precision Embassy time driver would be:

- Given Embassy's tick rate is 1 MHz, configure SysTick to tick at 8 MHz.

- Handle SysTick underflow by incrementing an underflow counter.

- Implement `now()` by reading SysTick, dividing by 8, reading the underflow counter, and combining.

- Implement `allocate_alarm()` by returning `None` 😛
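The combining step in `now()` could look like this, assuming SysTick's reload is set to the full 24-bit range (so one cycle is 2^24 SysTick ticks) and the 8 MHz / 1 MHz ratio from the list. `now_from` is a hypothetical, host-testable helper that takes the two readings as arguments:

```rust
/// Full 24-bit reload: SYST_RVR = 0xFF_FFFF gives a period of 2^24 SysTick ticks.
const RELOAD: u32 = 0x00FF_FFFF;
/// Assumption: 8 SysTick ticks (8 MHz) per Embassy tick (1 MHz).
const SHIFT: u32 = 3;

/// Combine the software underflow counter with a SYST_CVR reading.
/// SysTick counts down, so `RELOAD - cvr` ticks have elapsed in the current cycle.
fn now_from(underflows: u64, cvr: u32) -> u64 {
    let systick_ticks = (underflows << 24) | u64::from(RELOAD - (cvr & RELOAD));
    systick_ticks >> SHIFT
}
```

A real `now()` also has to cope with SysTick wrapping between reading the underflow counter and reading SYST_CVR (for example by re-reading the counter, or checking COUNTFLAG or the pending SysTick exception); that part is left out of this sketch.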

There's a precise and cheap timer right there! We can even bolt on an alarm without making things difficult:

- Given Embassy's tick rate is 1 MHz, configure SysTick to tick at 8 MHz.

- Implement `now()` by reading SysTick, dividing by 8, reading the underflow counter, and combining.

- Implement `allocate_alarm()` by returning an alarm handle on the first call, or `None` otherwise.

- Implement `set_alarm()` by calculating the value of the underflow counter in which the alarm will fire. (Here, that's `>> 21`, since one underflow is 2^24 SysTick ticks = 2^21 Embassy ticks.)

- Handle SysTick underflow by incrementing an underflow counter. If the new counter value is after the alarm value, call the alarm callback.
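A sketch of that alarm logic under the same 8 MHz / 1 MHz assumption; `Alarm`, `set_alarm` and `on_underflow` here are hypothetical host-side names, not embassy's actual driver API. Firing only once the underflow counter is strictly past the cycle that contains `timestamp` is what guarantees `now() >= timestamp` when the callback runs:

```rust
/// One SysTick cycle = 2^24 SysTick ticks = 2^21 Embassy ticks (8 MHz vs 1 MHz).
const UNDERFLOW_SHIFT: u32 = 21;

/// Hypothetical single-alarm state for the underflow-driven scheme.
struct Alarm {
    underflows: u64,
    /// Index of the SysTick cycle containing the alarm timestamp, if armed.
    fire_after: Option<u64>,
}

impl Alarm {
    fn set_alarm(&mut self, timestamp: u64) {
        self.fire_after = Some(timestamp >> UNDERFLOW_SHIFT);
    }

    /// Called from the SysTick exception. Returns true if the callback should run.
    fn on_underflow(&mut self) -> bool {
        self.underflows += 1;
        match self.fire_after {
            // Strictly greater: the whole cycle containing `timestamp` has passed.
            Some(cycle) if self.underflows > cycle => {
                self.fire_after = None;
                true
            }
            _ => false,
        }
    }
}
```

With `set_alarm(3_000_000)` the alarm lands in cycle 1 and fires on the second underflow, at 4_194_304 µs, which shows the up-to-two-second latency discussed below.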

This satisfies* the embassy::time::driver::Driver contract:

> When callback is called, it is guaranteed that now() will return a value greater or equal than timestamp.

It just… might not deliver the alarm event until 2 seconds later, which is awful. Still, maybe SysTick is a good way to implement now(), it just needs to be paired with another mechanism for alarms.

Using SYSTICK and CYCCNT would be fine for many CPUs; just gate its availability.

One of the issues I have is that I need ALL the timers on an H7, yes, all of them. So I can't spare one for embassy's time driver...

Not having a SYSTICK driver is actually a serious gap. There is a whole class of devices where time precision is less important than power consumption. Even a very naive SysTick driver (interrupt, stop, get next planned task time, restart) is enough for those, because the biggest contributor to time jitter is the wakeup time from deep sleep, which is typically based on RTC or LPTIM (not supported by embassy either at the moment).
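The "get next planned task time, restart" step of such a naive driver mostly comes down to choosing the next reload value. A minimal sketch, assuming an integral number of SysTick ticks per Embassy tick (`next_reload` is a hypothetical helper). Deadlines beyond the 24-bit range are clamped, so the handler simply wakes early and re-evaluates:

```rust
/// SysTick is a 24-bit counter.
const MAX_RELOAD: u32 = 0x00FF_FFFF;

/// Reload value for the next SysTick period, given the current time and the next
/// planned task time (both in Embassy ticks).
fn next_reload(now: u64, deadline: u64, systick_per_tick: u32) -> u32 {
    let ticks = deadline.saturating_sub(now);
    let systick = ticks.saturating_mul(u64::from(systick_per_tick));
    // SYST_RVR = N gives a period of N + 1 ticks; a reload of 0 never raises
    // the exception, so use at least 1.
    systick.saturating_sub(1).clamp(1, u64::from(MAX_RELOAD)) as u32
}
```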

Edit (a small note):

For truly low-power devices (esp. on STM32L0 or EM Gecko) you want an equivalent of Linux's "nohz": no regular ticks that would wake up the CPU. You want to sleep until exactly the planned wakeup time using LPTIM / RTC, or wake up early on a device interrupt; then recompute and resume SYSTICK, do the work, stop SYSTICK, recompute LPTIM / RTC, and go back to deep sleep.

Lost ticks can be compensated for or simply ignored, as a sensor that only sends data "about every 5 minutes" does not care about precise time (or uses the low-power RTC peripheral).
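The tickless sequence described in that note could be structured roughly as follows. This is only a sketch: the `LowPowerHw` trait and all of its methods are hypothetical hooks standing in for HAL code, and none of these names come from embassy or a HAL:

```rust
/// Hypothetical hardware hooks for a tickless ("nohz"-style) idle loop.
trait LowPowerHw {
    fn stop_systick(&mut self);
    fn start_systick(&mut self);
    /// Arm LPTIM / RTC to wake the core at `deadline` (in Embassy ticks).
    fn arm_wakeup(&mut self, deadline: u64);
    /// Enter deep sleep; returns on the wakeup timer or on a device interrupt.
    fn deep_sleep(&mut self);
    /// Current time according to the low-power timer.
    fn low_power_now(&self) -> u64;
}

/// One iteration of the cycle: sleep until the planned wakeup (or an earlier
/// device interrupt), resume SysTick from the low-power time base, run the due
/// work, then stop SysTick again. `run_due_work` gets the current time and
/// returns the next planned wakeup, which the caller feeds into the next iteration.
fn tickless_cycle<H: LowPowerHw>(
    hw: &mut H,
    next_deadline: u64,
    run_due_work: impl FnOnce(u64) -> u64,
) -> u64 {
    hw.arm_wakeup(next_deadline);
    hw.deep_sleep();
    // Recompute the time from LPTIM / RTC and resume SysTick.
    let now = hw.low_power_now();
    hw.start_systick();
    let next = run_due_work(now);
    hw.stop_systick();
    next
}
```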

Has anybody implemented this? I am trying to run embassy on some LM4F120 boards.