POC: "Recent Memory" ring-buffer queue type

What's This?

This proof-of-concept demonstrates a new queue type that is implemented as a ring-buffer that does not block when full.

Use Case

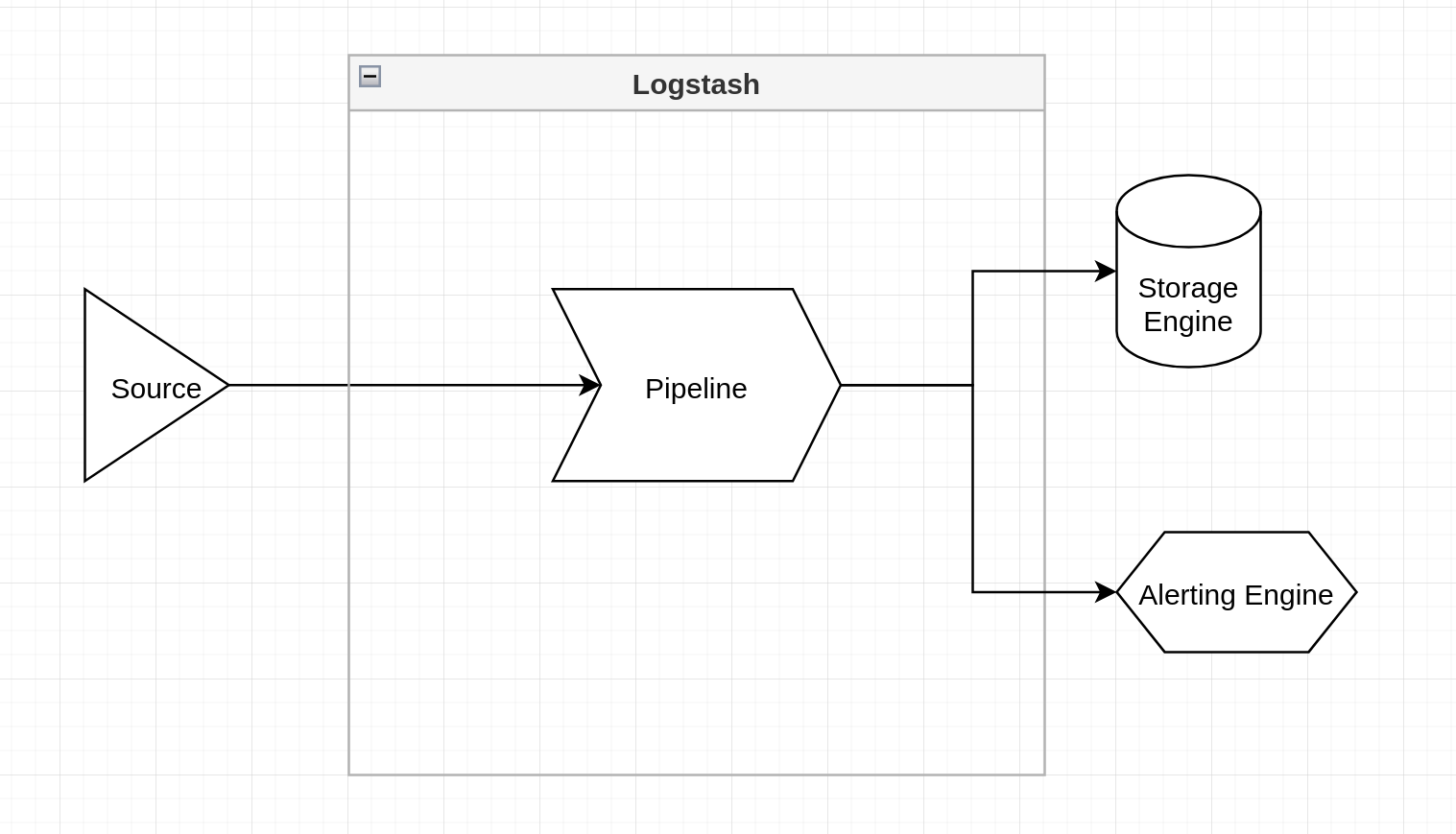

An operator uses Logstash as an event router to ship events to both a long-term storage engine (e.g. Elasticsearch) and a real-time, stream-based alerting system. The naïve implementation uses Logstash with a single pipeline to send copies of all events to both the storage engine and the alerting engine.

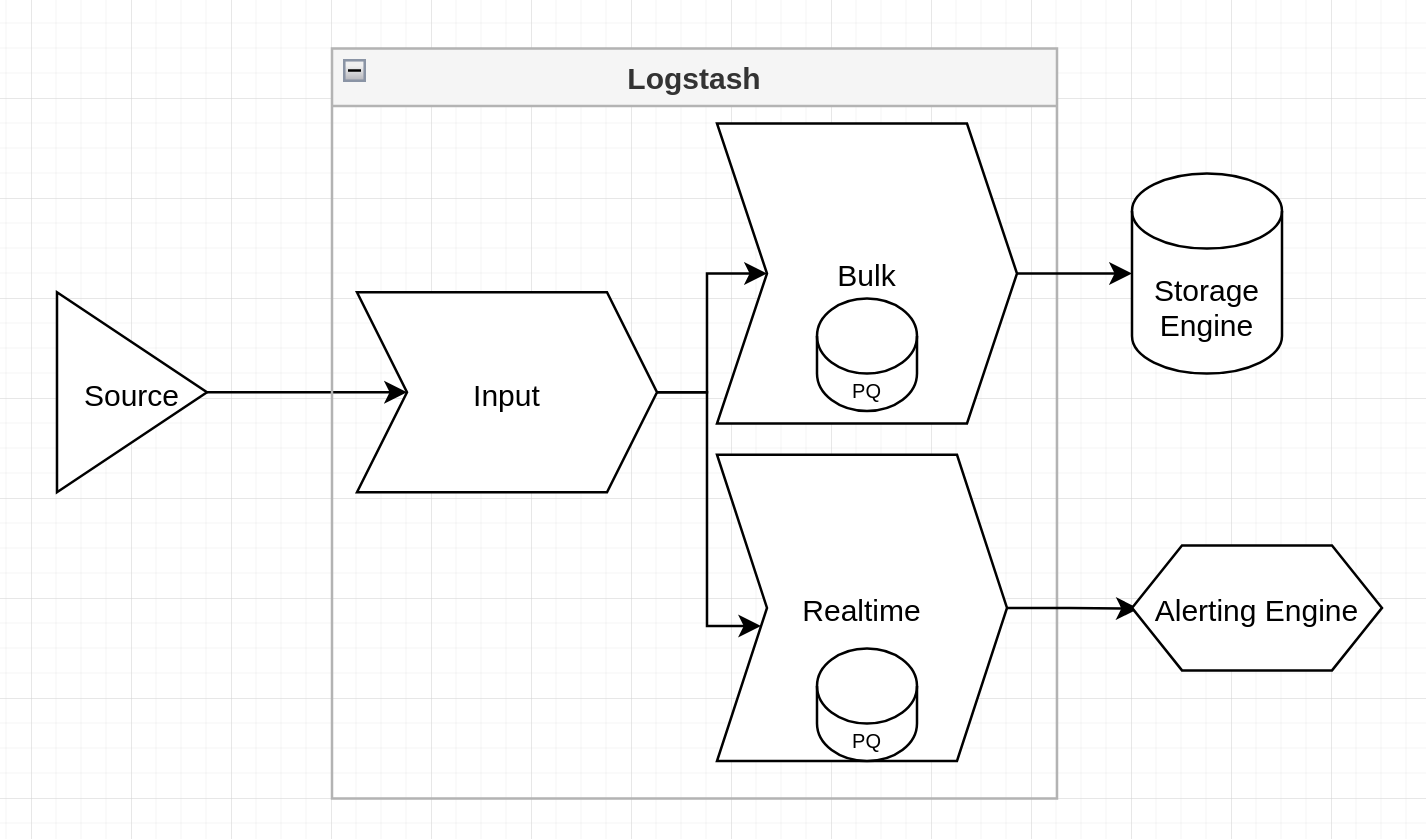

This design is not very resilient if one of the event destinations becomes unavailable. For example, if the storage engine is unable to accept events, the in-memory pipeline queue rapidly fills to capacity and stalls. This stops the event stream to the alerting engine, even though it's quite prepared to accept events itself. Realising this, the operator implements the Output Isolator Pattern.

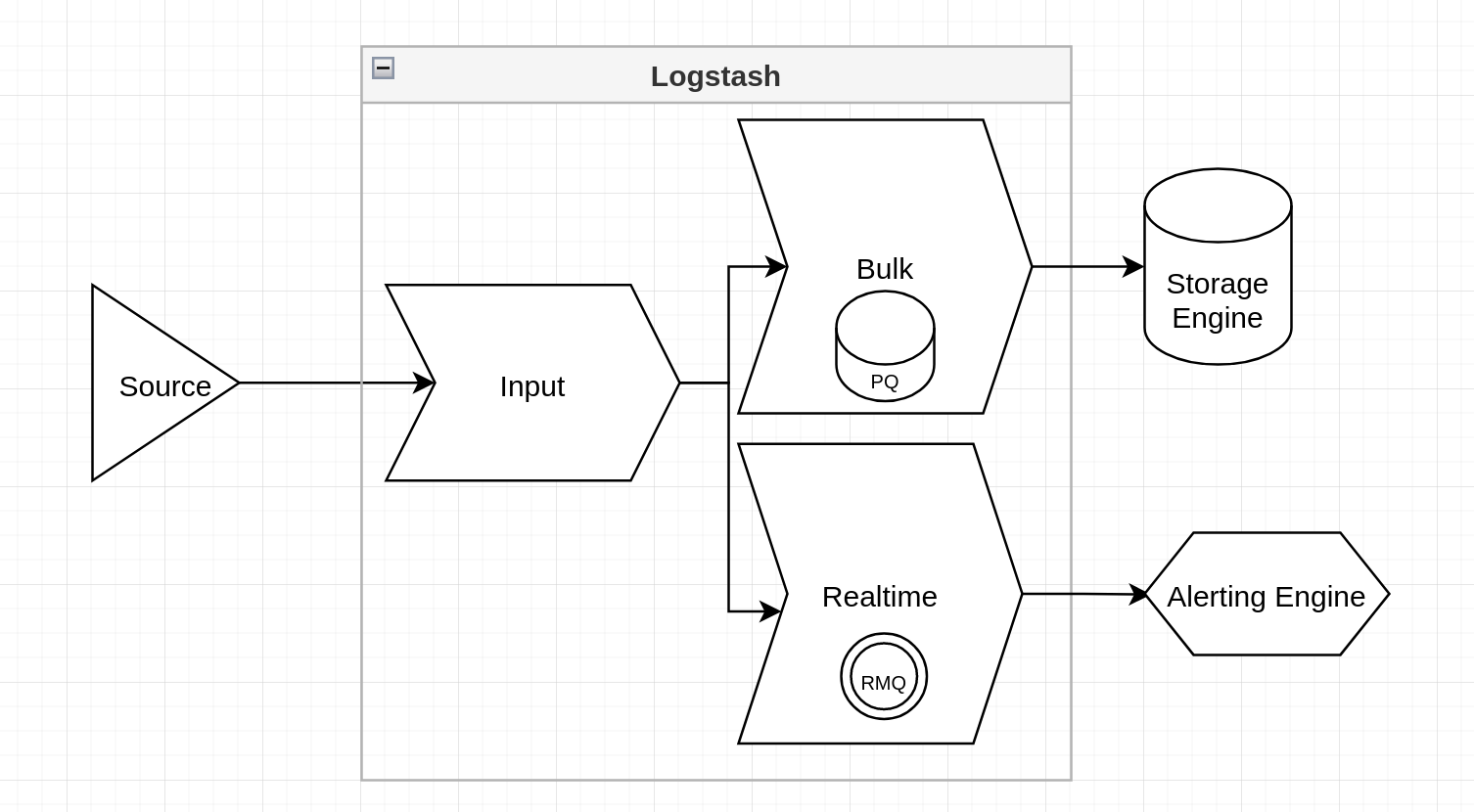

This places large, independent queues in front of each output so that one can stall without affecting the other. By necessity, these queues are defined as persisted queues, because memory queues can't provide the needed capacity. Unfortunately, this has a significant performance cost: each event now incurs the cost of being processed by a persisted queue twice. The cost is acceptable for events that are destined for the storage engine, because the operator never wants to lose an event in that context. For the real-time alerting engine, the cost is much harder to justify. Data destined for the alerting engine have rapidly diminishing value over time, so buffering events for long periods has little value there. Here, the operator has been forced to make an unpleasant decision. They don't actually want a persisted queue; they just need a queue that won't block, because a blocked queue in turn blocks the upstream input queue.

Using the "recent_memory" queue, they achieve the goals of the Output Isolator Pattern without the cost of unneeded PQs.

The recent_memory queue will never block, always accepting new events (at the expense of old ones).
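
To make the "never block, drop the oldest" behaviour concrete, here is a minimal sketch in Python. It is not the implementation in this PR; the class name `RecentMemoryQueue`, its methods, and the `dropped` counter are hypothetical and exist only for illustration. It assumes a fixed capacity and relies on `collections.deque` with `maxlen`, which discards the item at the opposite end when a full deque is appended to.

```python
from collections import deque
from threading import Lock


class RecentMemoryQueue:
    """Hypothetical bounded FIFO that evicts the oldest event instead of blocking."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        # A deque with maxlen silently drops from the left when full.
        self._events = deque(maxlen=capacity)
        self._lock = Lock()
        self.dropped = 0  # number of events overwritten so far

    def push(self, event):
        """Always accepts the event; never waits for a consumer."""
        with self._lock:
            if len(self._events) == self._events.maxlen:
                self.dropped += 1  # the oldest event is about to be evicted
            self._events.append(event)

    def pop(self):
        """Returns the oldest retained event, or None if the queue is empty."""
        with self._lock:
            return self._events.popleft() if self._events else None


# Push five events into a queue that holds three: the two oldest are lost.
queue = RecentMemoryQueue(capacity=3)
for n in range(5):
    queue.push(n)
drained = [queue.pop() for _ in range(3)]
```

In this sketch the writer never stalls, and a counter like `dropped` is one way the expected data loss under back-pressure could be surfaced to users.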

Expressed in terms of the CAP theorem, recent_memory provides A,P. The standard memory queue provides C,P, and the persisted queue provides C,A. So, the addition of this new queue type allows the operator to choose any of the three vertices on the "CAP triangle", depending on their design goals.

cc @Crazybus, @drewr

Sorry about the close/reopen. Mistakenly clicked the wrong button!

@jarpy conceptually, this is AWESOME. Our team talked about the work briefly in a sync today and there is some serious enthusiasm for adding this vertex of the CAP triangle, and addressing a very real use-case.

We'll need to add docs and tie in metrics so that users can reason about the expected data loss under back-pressure, and will be working to get this remaining effort into the roadmap.

That is amazing news. Thanks so much for considering this and for championing the effort.

Shall we close this for real, then? The implementation presented here is obviously rudimentary, I just wanted to see if it could be done.

Let's keep this one open, and close it only when we have the work fully specified and on the roadmap.

This PR would implement the enhancement request from https://github.com/elastic/logstash/issues/11601

I am currently facing a similar issue to the one described above: I have one stable "persistent" backend and one unreliable "streaming" backend, and this ring-buffer mechanism would solve all my worries! Thanks a lot for starting this effort. I would really love to see this implementation available upstream :+1:

Hey, is there any chance of getting this PR (or at least the functionality) upstream? Right now I am thinking about working around this with an rsyslog instance that receives all logs from Logstash and in turn forwards them to a queued remote output. Cheers!

What is missing to complete this and merge?