skaffold: first attempt to run unit tests

What does this pull request do?

Use Skaffold (https://skaffold.dev/docs/) to test several Python versions against several frameworks.
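The idea is to expand every Python version against every framework version into one K8s job. Below is a minimal sketch of that expansion, not the code in `.k8s/cli.py`. The version lists are hypothetical, and the naming pattern (the `apm-agen-python` prefix, with dots turned into dashes) is only inferred from the log file names listed in the build folder output.

```python
from itertools import product
from typing import Dict, Iterator, List, Optional

# Hypothetical matrix: the real version lists live in the generated skaffold files.
PYTHON_VERSIONS: List[str] = ["3.10"]
FRAMEWORKS: Dict[str, List[Optional[str]]] = {
    "django": ["3.1", "3.2", "4.0"],
    "none": [None],  # no framework, so no framework version
    "opentelemetry": ["newest"],
    "psutil": ["newest"],
}


def job_name(python: str, framework: str, version: Optional[str],
             prefix: str = "apm-agen-python") -> str:
    """Build a job name in the same shape as the build/*.log file names (assumed pattern)."""
    parts = [prefix, python.replace(".", "-"), framework]
    if version is not None:
        parts.append(version.replace(".", "-"))
    return "-".join(parts)


def matrix(pythons: List[str],
           frameworks: Dict[str, List[Optional[str]]]) -> Iterator[str]:
    """Yield one job name per (Python version, framework, framework version)."""
    for python, (framework, versions) in product(pythons, frameworks.items()):
        for version in versions:
            yield job_name(python, framework, version)


if __name__ == "__main__":
    for name in matrix(PYTHON_VERSIONS, FRAMEWORKS):
        print(name)
```

With these inputs the sketch yields the six names seen in the `build` folder, minus the `.log` suffix.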

Actions

- [x] Add support for more versions.

- [x] Docs on how to use it

- [ ] Unit tests for the existing Python code

How to use it

See https://github.com/elastic/apm-agent-python/pull/1665/files#diff-92f8b8798d32cfd383b2c7d24b5940990d88714525869eb264a3f2881db12895 for the details. In a nutshell:

Generate the skaffold filesystem:

```
$ python3 .k8s/cli.py generate
```

Build the Docker images synced with your workspace:

```
$ python3 .k8s/cli.py build
```

Test against a list of frameworks:

```
$ python3 .k8s/cli.py test -f django -f none -f opentelemetry -f psutil
```
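If you run these three steps often, they can be chained from a script. This is a hypothetical convenience wrapper, not part of the PR: it only reuses the commands and the repeated `-f` flag shown in the steps, assumes it runs from the repository root, and stops at the first step that exits with a non-zero status.

```python
import subprocess
from typing import List, Sequence

# Entry point used in the steps above; assumed to run from the repository root.
CLI: List[str] = ["python3", ".k8s/cli.py"]


def workflow(frameworks: Sequence[str]) -> List[List[str]]:
    """Return the generate, build and test commands, in that order."""
    test_cmd = CLI + ["test"]  # a new list, so CLI itself is never mutated
    for framework in frameworks:
        test_cmd += ["-f", framework]  # one -f flag per framework
    return [CLI + ["generate"], CLI + ["build"], test_cmd]


def run_workflow(frameworks: Sequence[str]) -> None:
    """Run each command; check=True raises CalledProcessError on a non-zero exit."""
    for cmd in workflow(frameworks):
        subprocess.run(cmd, check=True)
```

For example, `run_workflow(["django", "none", "opentelemetry", "psutil"])` runs the same three commands as the manual steps.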

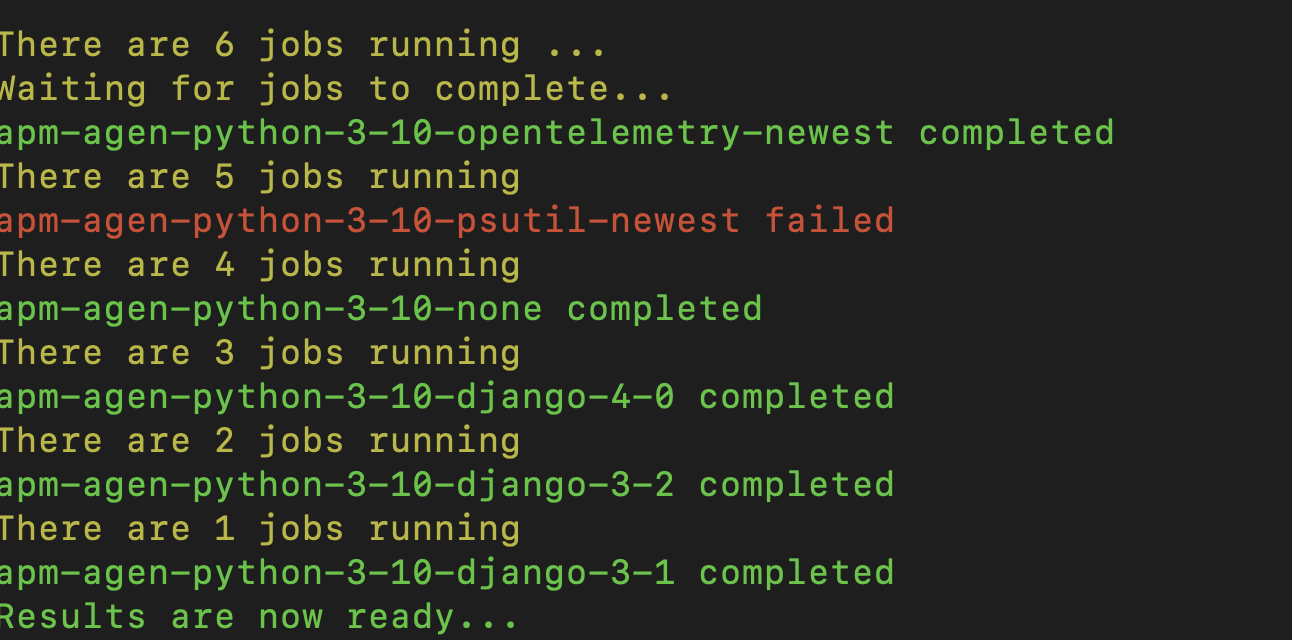

Then the status of those jobs running on K8s are reported in the console:

and logs can be found in the build folder:

```
$ ls -l build
total 176
-rw-r--r-- 1 vmartinez staff 23319 Oct 12 15:59 apm-agen-python-3-10-django-3-1.log
-rw-r--r-- 1 vmartinez staff 22924 Oct 12 15:59 apm-agen-python-3-10-django-3-2.log
-rw-r--r-- 1 vmartinez staff 22922 Oct 12 15:59 apm-agen-python-3-10-django-4-0.log
-rw-r--r-- 1 vmartinez staff 78960 Oct 12 15:59 apm-agen-python-3-10-none.log
-rw-r--r-- 1 vmartinez staff 11494 Oct 12 15:59 apm-agen-python-3-10-opentelemetry-newest.log
-rw-r--r-- 1 vmartinez staff 11606 Oct 12 15:59 apm-agen-python-3-10-psutil-newest.log
```
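Each log name encodes the Python version, the framework and the framework version, so the files can be mapped back to the test matrix. A minimal sketch, assuming the `<prefix>-python-<major>-<minor>-<framework>[-<version>].log` pattern inferred from the listing and framework names without dashes; `LogInfo`, `parse_log_name` and `list_runs` are hypothetical helpers, not part of the PR.

```python
import re
from pathlib import Path
from typing import List, NamedTuple, Optional

# Assumed pattern, inferred from the file names in the build folder:
#   <prefix>-python-<major>-<minor>-<framework>[-<framework version>].log
LOG_NAME = re.compile(
    r"^(?P<prefix>.+?)-python-(?P<major>\d+)-(?P<minor>\d+)"
    r"-(?P<framework>[a-z0-9_]+?)(?:-(?P<version>.+))?\.log$"
)


class LogInfo(NamedTuple):
    python: str
    framework: str
    version: Optional[str]


def parse_log_name(name: str) -> Optional[LogInfo]:
    """Split a log file name into Python version, framework and framework version."""
    match = LOG_NAME.match(name)
    if match is None:
        return None  # not a test log
    version = match["version"]
    return LogInfo(
        python=f"{match['major']}.{match['minor']}",
        framework=match["framework"],
        version=version.replace("-", ".") if version else None,
    )


def list_runs(build_dir: str = "build") -> List[LogInfo]:
    """Parse every *.log file in the build folder, skipping unknown names."""
    parsed = (parse_log_name(p.name) for p in sorted(Path(build_dir).glob("*.log")))
    return [info for info in parsed if info is not None]
```

Framework versions such as `newest` pass through unchanged, while numeric ones get their dots back (`3-1` becomes `3.1`).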

Actions

- [x] Configure the `skaffold` namespace

- [x] Configure the `skaffold` labels so they are unique per user
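The PR does not spell out how the namespace and labels are made unique. One plausible approach, sketched here purely as an assumption, derives them from the local user name and sanitizes it into a valid Kubernetes DNS-1123 label (lowercase alphanumerics and `-`, starting and ending with an alphanumeric character, at most 63 characters), which is also a valid label value. The `skaffold-` namespace prefix and the `user` label key are hypothetical.

```python
import getpass
import re
from typing import Dict, Optional

MAX_LENGTH = 63  # Kubernetes limit for DNS-1123 labels and for label values


def k8s_safe(value: str) -> str:
    """Lowercase, replace unsupported characters with '-', trim to 63 characters."""
    value = re.sub(r"[^a-z0-9-]+", "-", value.lower())
    # Strip dashes after truncating so the result starts and ends alphanumerically.
    return value[:MAX_LENGTH].strip("-")


def per_user_settings(user: Optional[str] = None) -> Dict[str, object]:
    """Hypothetical per-user namespace and labels (prefix and label key are assumptions)."""
    name = k8s_safe(user or getpass.getuser())
    return {
        "namespace": k8s_safe(f"skaffold-{name}"),
        "labels": {"user": name},
    }
```

Deriving both values from the same sanitized name keeps two users on a shared cluster from selecting or deleting each other's jobs.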

Follow ups

- [ ] Report the results in JSON, YAML or table format.

- [ ] Export JUnit reports from the pods.

- [ ] Run the frameworks against the services they depend on.
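For the first follow-up, a sketch of rendering per-job results as JSON or as a plain-text table. It assumes the results are already collected as a mapping from job name to a status string (for example `Succeeded` or `Failed`, the Kubernetes pod phases); how they are gathered from the cluster is left out, and YAML is omitted because it would need a third-party package such as PyYAML.

```python
import json
from typing import Dict


def to_json(results: Dict[str, str]) -> str:
    """Serialize job results as pretty-printed JSON with a stable key order."""
    return json.dumps(results, indent=2, sort_keys=True)


def to_table(results: Dict[str, str]) -> str:
    """Render job results as a two-column, left-aligned text table."""
    header = "JOB"
    width = max([len(header)] + [len(name) for name in results])
    lines = [f"{header:<{width}}  STATUS"]
    for name, status in sorted(results.items()):
        lines.append(f"{name:<{width}}  {status}")
    return "\n".join(lines)
```

Sorting by job name keeps the output stable between runs, which makes it easier to diff two reports.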

:green_heart: Build Succeeded

Build stats

- Start Time: 2023-03-07T12:56:24.908+0000

- Duration: 3 min 53 sec

:grey_exclamation: Flaky test report

No test was executed to be analysed.

:robot: GitHub comments

To re-run your PR in the CI, just comment with:

- /test: Re-trigger the build.

- /test linters: Run the Python linters only.

- /test full: Run the full matrix of tests.

- /test benchmark: Run the APM Agent Python benchmarks tests.

- run elasticsearch-ci/docs: Re-trigger the docs validation. (use unformatted text in the comment!)

@elastic/observablt-robots, I'd like to gather some feedback from you. This is just a PoC: I want to validate whether running things in K8s this way could help us reduce the complexity in the CI. I'll see if I can run some GitHub Actions with this to get an idea of how it behaves.

There is plenty of room for improvement here. Ideally, a common tool would be used, so the code isn't hosted in each project; instead, each project would use a binary or a similar tool.

We are not working on this at the moment, so I'll close this for now.