jetson-inference

2 Questions about re-training detection ssd on your own dataset

First question: can I train on a custom dataset using Mobilenet V2 SSD, or is only V1 available?

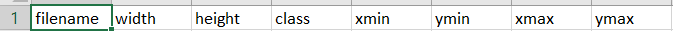

Second question: I have a dataset of photos I took myself, plus a CSV file with the columns shown in the attached picture. Do I have to start all over again as you suggested in https://github.com/dusty-nv/jetson-inference/blob/master/docs/pytorch-collect-detection.md

Hi @r2ba7, only the SSD-Mobilenet-v1 is tested + working with the ONNX export and import into TensorRT. SSD-Mobilenet-v2 doesn't offer much improvement anyways.

You can either write a tool or script to convert your CSV to a dataset in the Pascal VOC format, or use CVAT.org to re-label your images and export as Pascal VOC from cvat.
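As a rough illustration of the "write a script" option, here is a minimal sketch that groups CSV rows by image and builds one Pascal VOC annotation per image. The column names (`filename`, `width`, `height`, `class`, `xmin`, `ymin`, `xmax`, `ymax`) are assumptions, since the actual CSV header is only visible in the attached picture; rename them to match your file. The `csv_to_voc` helper is hypothetical and not part of jetson-inference.

```python
import csv
import io
import xml.etree.ElementTree as ET
from collections import defaultdict

# Assumed CSV header (hypothetical, adjust to your own file):
# filename,width,height,class,xmin,ymin,xmax,ymax


def csv_to_voc(csv_file):
    """Return {image filename: Pascal VOC XML string} for an open CSV file."""
    # Collect all bounding-box rows that belong to the same image.
    rows_by_image = defaultdict(list)
    for row in csv.DictReader(csv_file):
        rows_by_image[row["filename"]].append(row)

    annotations = {}
    for filename, rows in rows_by_image.items():
        root = ET.Element("annotation")
        ET.SubElement(root, "filename").text = filename
        size = ET.SubElement(root, "size")
        ET.SubElement(size, "width").text = rows[0]["width"]
        ET.SubElement(size, "height").text = rows[0]["height"]
        ET.SubElement(size, "depth").text = "3"  # assumes 3-channel images
        for row in rows:
            obj = ET.SubElement(root, "object")
            ET.SubElement(obj, "name").text = row["class"]
            ET.SubElement(obj, "difficult").text = "0"
            box = ET.SubElement(obj, "bndbox")
            for key in ("xmin", "ymin", "xmax", "ymax"):
                # VOC stores integer pixel coordinates; fractions are truncated.
                ET.SubElement(box, key).text = str(int(float(row[key])))
        annotations[filename] = ET.tostring(root, encoding="unicode")
    return annotations


if __name__ == "__main__":
    demo = io.StringIO(
        "filename,width,height,class,xmin,ymin,xmax,ymax\n"
        "img1.jpg,640,480,cat,10,20,110,220\n"
    )
    for name, xml_text in csv_to_voc(demo).items():
        print(name)
        print(xml_text)
```

To use it on a real file, open the CSV with `open(path, newline="")`, pass the file object to `csv_to_voc`, and write each returned string to an `.xml` file named after its image. A complete Pascal VOC dataset also needs the images and image-set lists in the layout the training script expects, which this sketch does not create.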

@dusty-nv I want to try Inception and Mobilenet for research purposes as well, that's why I was asking whether Mobilenet v2 or Inception compatibility is possible.

I believe these are the different network architectures that train_ssd.py supports: https://github.com/qfgaohao/pytorch-ssd/blob/f61ab424d09bf3d4bb3925693579ac0a92541b0d/run_ssd_example.py#L22

However the only one that I have tested + working with ONNX is SSD-Mobilenet-v1.

If you are conducting research and aren't as concerned with deployment, then you can use other object detection code with PyTorch/DarkNet/TensorFlow/etc. and not be concerned with the TensorRT aspect.

@dusty-nv I'm actually concerned about both. I want to test FPS using TRT on the Jetson Nano for a few models and pick the one with the best performance. Of course that will be Mobilenet, but it's for research purposes since this is a graduation project, and afterwards I'll use it for deployment. I really like this repo for deploying: I already use the pre-trained Mobilenet v2 and UART from detectnet directly, which made things much easier. The problem is that I want to test the other models as well. Can I deploy TRT models in detectnet?

If you wanna try a bunch of different PyTorch models in TensorRT, you might wanna try torch2trt: https://github.com/NVIDIA-AI-IOT/torch2trt

You can make other models work in detectnet, it may require adapting the pre/post-processing in c/detectNet.cpp though
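For the FPS comparison across models mentioned earlier in the thread, a model-agnostic timing helper could look roughly like the sketch below. `measure_fps` and its `infer` argument are hypothetical names, not part of jetson-inference or torch2trt; `infer` stands for whatever function runs one frame through the model under test. It also assumes `infer` blocks until the result is ready. If your inference call returns before the GPU has finished, synchronize inside `infer`, otherwise the numbers will be too optimistic.

```python
import time


def measure_fps(infer, frames, warmup=5):
    """Return the average frames per second of infer() over the given frames."""
    frames = list(frames)
    if not frames:
        raise ValueError("need at least one frame to measure")

    # Warm-up calls are not timed, since the first runs are often slower.
    for frame in frames[:warmup]:
        infer(frame)

    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


if __name__ == "__main__":
    # Stand-in "model" that just sleeps for about 1 ms per frame.
    fps = measure_fps(lambda frame: time.sleep(0.001), range(50))
    print(f"{fps:.1f} FPS")
```

Running every candidate model over the same list of frames keeps the comparison fair.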