Overpass-API


Optimize runtime and memory footprint of augmented diff queries

Here's a relatively simple query that produces an augmented diff and has a hard time running on the main Overpass instance:

http://overpass-turbo.eu/s/iYU

```
{{dateFrom=2016-10-03T00:00:00Z}}
{{dateTo=2016-10-03T23:59:59Z}}
[timeout:300]
[maxsize:1573741824]
//[bbox:{{bbox}}]
[adiff:"{{dateFrom}}","{{dateTo}}"];
(
  node(changed:"{{dateFrom}}","{{dateTo}}");
  way(changed:"{{dateFrom}}","{{dateTo}}");
  rel(changed:"{{dateFrom}}","{{dateTo}}");
);
out tags;
```

This works fine when restricted to a small bounding box (uncomment the `//[bbox:{{bbox}}]` line in the query above), but results in timeouts or out-of-memory errors when run on the full planet.

@drolbr mentioned that there are some post-0.7.53 improvements in the pipeline, so it may make sense to keep track of them in this ticket.

#278 may be related (regarding `changed:`).

Related issues:

- https://github.com/nrenner/achavi/issues/9 - Large bbox / timeframe spanning several hours; a completely different approach, must read

- https://github.com/drolbr/Overpass-API/issues/162 - Search objects by changeset

- https://github.com/drolbr/Overpass-API/pull/174 (reconstruct_items)

- https://github.com/openstreetmap/openstreetmap-website/issues/1376

Example:

- http://dev.overpass-api.de/tmp/psv/achavi/index.html?changeset=43740191

Any chance we can fix or improve this behaviour? At least for me, achavi has lately displayed "osmDiff: runtime error: Query run out of memory using about 2048 MB of RAM." in several cases (see https://github.com/nrenner/achavi/issues/42).

Added a prototype patch to filter (changed) results by changeset id: https://github.com/mmd-osm/Overpass-API/commit/8238518f596cad87ab5bf7a18acbfeeb62ed9571

Can you explain how this works? Do we still need adiff timestamps then?

I added a comment in the following Github issue along with some examples: https://github.com/nrenner/achavi/issues/9

The new approach is very similar to the previous one: we provide a list of object ids as a starting point and compute the delta only for those objects (as opposed to running out of memory because we prepare the delta for all objects in a bounding box over a given timeframe). By pulling the list of relevant ids out of the (changed) processing and filtering it immediately by changeset id, we can skip downloading the full changeset via the OSM API just to extract the ids. Another plus is that memory consumption is much lower this way.
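To make the idea concrete, here is a toy Python model of "filter by changeset id first, then build the delta". It is not Overpass's implementation: `Version`, `history` and `augmented_diff` are hypothetical names, and `history` simply stands in for the object versions that (changed) yields for the time window. Only objects touched by the changeset get an old/new pair, so the work scales with the changeset size rather than with the bounding box.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

@dataclass(frozen=True)
class Version:
    """One stored version of an OSM object (toy model, hypothetical layout)."""
    obj_type: str   # "node", "way" or "relation"
    obj_id: int
    version: int
    changeset: int
    visible: bool   # False marks a deletion

Key = Tuple[str, int]  # (type, id)

def augmented_diff(history: Dict[Key, List[Version]], changeset_id: int):
    """Return (action, old, new) tuples only for objects touched by changeset_id.

    history maps (type, id) to that object's versions, sorted by version number.
    """
    diff = []
    for key, versions in sorted(history.items()):
        # Step 1: cheap filter by changeset id; untouched objects are skipped
        # before any delta work is done for them.
        touched = [i for i, v in enumerate(versions) if v.changeset == changeset_id]
        if not touched:
            continue
        # Step 2: pair the version before the changeset with the last version
        # written by the changeset.
        old: Optional[Version] = versions[touched[0] - 1] if touched[0] > 0 else None
        new = versions[touched[-1]]
        if old is None:
            action = "create"
        elif not new.visible:
            action = "delete"
        else:
            action = "modify"
        diff.append((action, old, new))
    return diff
```

Note that deletions come out naturally here, because the deleting version itself carries the changeset id.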

Since release 0.7.55, there's an additional changeset filter which in theory should accomplish the same: `node(changed)(if:changeset() == 43740191)`. Unfortunately, the results don't include deleted objects (yet), and it is much slower, because this part of the query is executed twice (once for the start date and once for the end date), even though the result doesn't depend on a timestamp.
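One plausible way to picture both problems is the toy model below. It assumes, as the comment above describes, that in adiff mode the filter is evaluated separately against the state at each of the two timestamps; it is not Overpass's actual code, and all names are hypothetical. A deleted object no longer exists at the end date, and at the start date it still carries its previous changeset id, so a per-snapshot `changeset()` check never matches it. Filtering the changed versions themselves catches it in a single pass.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass(frozen=True)
class Version:
    version: int
    changeset: int
    timestamp: int  # toy timestamps as plain integers
    visible: bool   # False marks a deletion

History = Dict[int, List[Version]]  # object id -> versions sorted by version

def state_at(versions: List[Version], t: int) -> Optional[Version]:
    """Version valid at time t, or None if the object doesn't exist then."""
    current = None
    for v in versions:
        if v.timestamp <= t:
            current = v
    return current if current is not None and current.visible else None

def filter_per_snapshot(history: History, cs: int, t_from: int, t_to: int) -> set:
    """Toy (changed)(if:changeset() == cs): the check runs once per timestamp."""
    hits = set()
    for t in (t_from, t_to):  # two passes, although the answer is time-independent
        for obj_id, versions in history.items():
            v = state_at(versions, t)
            if v is not None and v.changeset == cs:
                hits.add(obj_id)
    return hits

def filter_changed_versions(history: History, cs: int, t_from: int, t_to: int) -> set:
    """Filter the versions written in (t_from, t_to] directly, deletions included."""
    return {obj_id
            for obj_id, versions in history.items()
            for v in versions
            if t_from < v.timestamp <= t_to and v.changeset == cs}
```

In this model, an object deleted by the changeset is found only by `filter_changed_versions`.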

> Do we still need adiff timestamps then?

Yes, they're still needed. It would be too expensive to derive them from the current data model.
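Because the timestamps cannot be derived server-side, a client has to put them into the query itself. The helper below is a minimal sketch that renders a query of the same shape as the example that follows; the function names are hypothetical, and it assumes the caller already knows the changeset's time window (how that window is obtained is outside this sketch).

```python
from datetime import datetime, timezone

def overpass_timestamp(dt: datetime) -> str:
    """Format an aware datetime in UTC, e.g. 2015-02-11T01:30:39Z."""
    if dt.tzinfo is None:
        raise ValueError("timestamps must be timezone-aware")
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")

def changeset_adiff_query(changeset_id: int, date_from: datetime, date_to: datetime) -> str:
    """Build an adiff query restricted to one changeset (hypothetical helper)."""
    if date_from > date_to:
        raise ValueError("date_from must not be after date_to")
    start, end = overpass_timestamp(date_from), overpass_timestamp(date_to)
    lines = [
        f'[adiff:"{start}","{end}"];',
        "(",
        # Same node/way statements as in the example query of this thread.
        f"  node(changed)(if:changeset() == {changeset_id});",
        f"  way(changed)(if:changeset() == {changeset_id});",
        ");",
        "out meta geom;",
    ]
    return "\n".join(lines)
```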

Example:

```
[adiff:"2015-02-11T01:30:39Z","2015-02-11T01:31:44Z"];
(
  node(changed)(if:changeset() == 28764270);
  way(changed)(if:changeset() == 28764270);
);
out meta geom;
```

-> no result

vs.

http://dev.overpass-api.de/tmp/psv/achavi/index.html?changeset=28764270

-> includes deleted ways

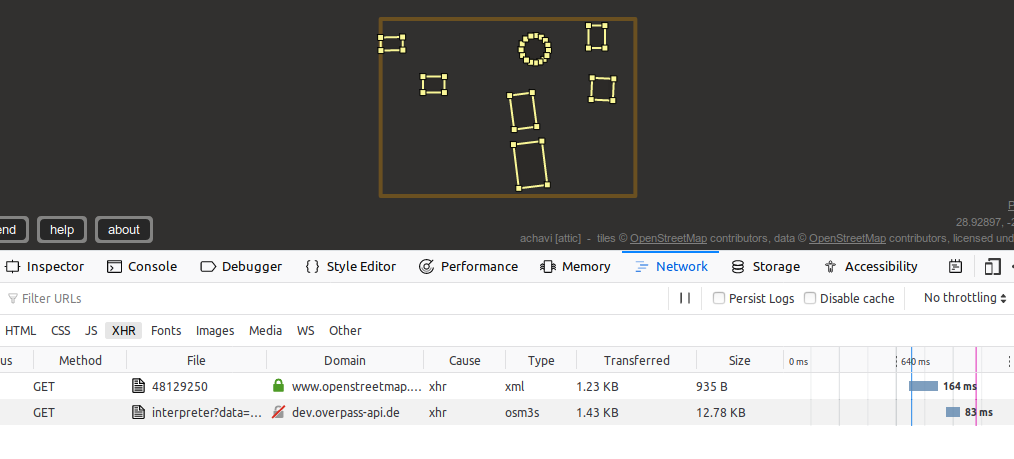

In combination with other optimizations in branch 0.7.59_mmd, augmented diffs for small changesets can now be generated in under 100 ms:

http://dev.overpass-api.de/tmp/psv/achavi/index.html?changeset=48129250

Regarding the if:changeset() filter see my comment in #162.

Thanks! This looks a bit bogus; I've created a new issue to track the deviating results: #519

In my prototype, I bypass this logic altogether and add something like `(changed!{{changeset id}})` directly inside the (changed) processing. Of course, we need to find a sane syntax for production use.