mxnet_Realtime_Multi-Person_Pose_Estimation

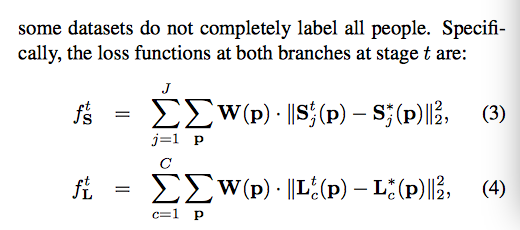


Training process

Hi @dragonfly90! Many thanks for this awesome repo. I'm now trying to make my own version of Realtime Multi-Person Pose Estimation, and I have some questions about your code and your approach:

- Why did you replace `LinearRegression` from the previous version with your own loss, computed as `sqr(prediction - label) * mask`? What is the mask needed for? How is the final loss in `MakeLoss(loss_symbol)` calculated: is it equal to `mean(sqr(...))`?
- Why do you augment the data the way you do? Why do we need to crop images instead of just resizing them?
- Here is some code from your batch generator:

  ```python
  for i in range(self._batch_size):
      image, mask, heatmap, pagmap = getImageandLabel(self.data[self.keys[self.cur_batch]])
  ```

  For better understanding, let the batch size be 4. As I understand it, you create 4 identical images and maps and put them into one batch, so the batch contains 4 identical instances. Why? Or is this a mistake, and it should be something like:

  ```python
  image, mask, heatmap, pagmap = getImageandLabel(self.data[self.keys[self.cur_batch * self._batch_size + i]])
  ```

- Have you tried using a learning rate scheduler?
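To make question 3 concrete, here is a small sketch of the indexing schemes in play. `get_image_and_label` is a hypothetical stand-in for `getImageandLabel()` that just returns the record it is given, and plain string keys stand in for `self.data[self.keys[...]]`; none of this is the repository's actual iterator.

```python
# Hypothetical stand-in for getImageandLabel(): returns the record unchanged.
def get_image_and_label(record):
    return record


def batch_same_index(keys, cur_batch, batch_size):
    # The index never changes inside the loop, so every slot gets the same record.
    return [get_image_and_label(keys[cur_batch]) for _ in range(batch_size)]


def batch_incrementing_cursor(keys, cursor, batch_size):
    # The cursor advances once per sample (like `self.cur_batch += 1` inside the loop),
    # so the batch holds consecutive records and the cursor counts samples, not batches.
    batch = []
    for _ in range(batch_size):
        batch.append(get_image_and_label(keys[cursor]))
        cursor += 1
    return batch, cursor


def batch_offset_index(keys, cur_batch, batch_size):
    # Proposed fix: batch number times batch size plus the position inside the batch.
    return [get_image_and_label(keys[cur_batch * batch_size + i]) for i in range(batch_size)]
```

With `keys = list("abcdefgh")` and a batch size of 4, the first scheme yields `['a', 'a', 'a', 'a']`, while the other two both yield `['a', 'b', 'c', 'd']` for the first batch. The last two should not be combined: if the cursor is already advanced per sample, also multiplying it by the batch size would skip records.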

I ask these questions because I can't get my net to learn: it always predicts a constant (a trainable constant; the loss decreases to some value and then stops changing). The model I use is the same as yours. I also tried changing every network parameter I could, but nothing helped.

Thanks in advance for the answer, and sorry for my bad English :)

In fact, in my tests the results were not very good. The MASK must be used, because it makes the model converge more easily. Data augmentation can improve accuracy. A learning rate scheduler is not needed: although training may take more than two days, you can change the learning rate by hand.
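On the crop-versus-resize question: one reason (our reading, not stated in the thread) is that a crop keeps people at their original pixel scale and aspect ratio, while resizing a non-square image to a fixed square input distorts them. Whatever the augmentation, the same window has to be cut from the image, heatmaps, and mask so they stay aligned. A minimal sketch, assuming all maps share one resolution and are plain 2-D lists; `random_crop` is a hypothetical helper, not code from the repository.

```python
import random


def random_crop(arrays, crop_h, crop_w, rng=random):
    """Cut the same random window out of several aligned 2-D maps.

    arrays: list of 2-D lists (e.g. image channel, heatmap, mask) with identical
    height and width. Returns the cropped maps and the (top, left) offset used.
    """
    height, width = len(arrays[0]), len(arrays[0][0])
    if any(len(a) != height or len(a[0]) != width for a in arrays):
        raise ValueError("all maps must share the same height and width")
    if crop_h > height or crop_w > width:
        raise ValueError("crop window is larger than the input")
    # randint is inclusive on both ends, so the window always fits.
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    cropped = [[row[left:left + crop_w] for row in a[top:top + crop_h]] for a in arrays]
    return cropped, (top, left)
```

Passing a seeded `random.Random` as `rng` makes the crop reproducible. In practice heatmaps are often stored at a lower resolution than the image, in which case the offset would need to be scaled per map; this sketch does not handle that.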

@abelyaev-vmk The square loss is actually the linear regression loss. Correct me if I am wrong. kohillyang gives good answers. For question 3, these are four consecutive images, because of `self.cur_batch += 1`. The original implementation uses `batch_size = 10`. @kohillyang Thank you. I have not reproduced the training process yet. Did you try it?
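The masked square loss discussed above, `sqr(prediction - label) * mask`, can be sketched in plain Python over flattened heatmaps. The function names and the `reduce` switch are hypothetical. On the sum-versus-mean question: if MXNet's `MakeLoss` is used with its default `normalization='null'`, our understanding is that it back-propagates a gradient of 1 for every element of the loss symbol, so the effective objective behaves like the sum rather than the mean (please check the MXNet docs for your version).

```python
def masked_squared_loss(pred, label, mask, reduce="sum"):
    """Masked L2 loss over flattened heatmap values.

    Each term is mask * (pred - label) ** 2, so pixels with mask 0
    contribute neither loss nor gradient.
    """
    if not (len(pred) == len(label) == len(mask)):
        raise ValueError("pred, label and mask must have the same length")
    terms = [m * (p - y) ** 2 for p, y, m in zip(pred, label, mask)]
    if reduce == "sum":
        return sum(terms)
    if reduce == "mean":
        return sum(terms) / len(terms)
    raise ValueError(f"unknown reduce mode: {reduce!r}")


def masked_squared_loss_grad(pred, label, mask):
    # d/dpred of m * (p - y) ** 2 is 2 * m * (p - y): zero wherever the mask is zero.
    return [2 * m * (p - y) for p, y, m in zip(pred, label, mask)]
```

For `pred = [1.0, 2.0, 3.0]`, `label = [0.0, 0.0, 0.0]` and `mask = [1, 0, 1]`, the summed loss is 10.0 and the gradient is `[2.0, 0.0, 6.0]`: the masked-out middle pixel is ignored entirely, which is why unlabelled regions do not pull predictions toward zero.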

As I said, the mask is very important, but in this demo the mask is replaced by all ones if no MASK information is available. The MASK I used in that case is a rectangle: for a 368x368 image you need a 368x368 binary MASK, where a point inside the rectangle gets weight 1 and every other point gets weight 0.
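The rectangle mask described above, plus the all-ones fallback, can be sketched as follows. The `(x0, y0, x1, y1)` box format with half-open bounds is our assumption, and `rectangle_mask` is a hypothetical helper rather than the linked MASK generator.

```python
def rectangle_mask(height, width, box=None):
    """Binary weight mask: 1 inside the box, 0 outside.

    box is (x0, y0, x1, y1) in pixels with half-open bounds [x0, x1) x [y0, y1).
    box=None means no mask information, so every pixel gets weight 1.
    """
    if box is None:
        return [[1] * width for _ in range(height)]
    x0, y0, x1, y1 = box
    return [
        [1 if (x0 <= x < x1 and y0 <= y < y1) else 0 for x in range(width)]
        for y in range(height)
    ]
```

For example, `rectangle_mask(368, 368, (100, 50, 200, 300))` has 100 x 250 = 25000 ones, and `rectangle_mask(368, 368)` is the all-ones case.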

I have reproduced the training process. On the MPI dataset, the loss converges to about 80-100 after 10000 iterations with a learning rate of 0.001 (after 5000 iterations, I changed the learning rate to 0.0001).
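The manual schedule above (0.001, then 0.0001 from iteration 5000) is a piecewise-constant learning rate. A minimal sketch; `piecewise_lr` and the `(start_iteration, lr)` list format are hypothetical. MXNet also provides `mx.lr_scheduler.FactorScheduler` (`step`, `factor`), which should express a similar step decay, though its exact switch-over iteration may differ by one.

```python
def piecewise_lr(iteration, schedule):
    """Learning rate for a piecewise-constant schedule.

    schedule: list of (start_iteration, lr) pairs sorted by start_iteration,
    where the first pair must start at 0.
    """
    if not schedule or schedule[0][0] != 0:
        raise ValueError("schedule must start at iteration 0")
    lr = schedule[0][1]
    for start, value in schedule:
        if iteration >= start:
            lr = value
        else:
            break
    return lr


# The schedule reported in the thread.
MPI_SCHEDULE = [(0, 0.001), (5000, 0.0001)]
```

`piecewise_lr(4999, MPI_SCHEDULE)` returns 0.001 and `piecewise_lr(5000, MPI_SCHEDULE)` returns 0.0001. Adding more pairs gives further drops without changing the code.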

@dragonfly90 Click me to see the MASK generator.

@kohillyang Got it. Thank you. Do you have performance numbers on the MPI dataset?

@dragonfly90 Using OKS (Object Keypoint Similarity) as the scoring method, I got a score of about 0.145, but some methods achieve over 0.51.
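For reference, the per-person OKS used in COCO-style keypoint evaluation is the average, over labelled ground-truth keypoints, of exp(-d² / (2·s²·k²)), where d is the prediction-to-ground-truth distance, s² is the object area, and k = 2σ is a per-keypoint constant. A minimal sketch; the per-keypoint σ values are left as an input (there is no official set for MPI keypoints), and the zero-visible-keypoint case simply returns 0.0 here, which is a simplification. Benchmark scores are usually average precision over several OKS thresholds rather than raw OKS.

```python
import math


def object_keypoint_similarity(pred, gt, visible, area, sigmas):
    """OKS between one predicted and one ground-truth person (COCO-style formula).

    pred, gt: lists of (x, y); visible: bools, only labelled GT keypoints count;
    area: GT object area in pixels (s ** 2); sigmas: per-keypoint constants (k = 2 * sigma).
    """
    num, den = 0.0, 0
    for (px, py), (gx, gy), vis, sigma in zip(pred, gt, visible, sigmas):
        if not vis:
            continue
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        k = 2 * sigma
        num += math.exp(-d2 / (2 * area * k ** 2))
        den += 1
    # Simplification: no labelled keypoints gives 0.0 in this sketch.
    return num / den if den else 0.0
```

A perfect prediction scores 1.0; a keypoint 10 px off in both x and y, with `area=100.0` and `sigma=0.5`, contributes exp(-1) ≈ 0.37, while unlabelled keypoints are ignored.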

@kohillyang Which code do you use, the original Caffe one or the MXNet one? I am worried that my augmentation code has some bugs.

I used your code, but only your model file; I wrote my own MPI parser. Because the results were not very good, I didn't write any augmentation code for it. My code is currently in a private repository; I'll make it public if you agree.

@kohillyang Of course. I hope you can make it public.

The code is here: https://github.com/kohillyang/mx-openpose

@kohillyang, Cool!

@dragonfly90 I retrained the model, and this picture may help you: http://oj5adp5xv.bkt.clouddn.com/trainng_process.png

Good! Thank you. Is this on the MPI or COCO dataset? Could you send me an email ([email protected]) with your contact information? I'd like to ask you some questions about the MPII implementation.