ck-crowdsource-dnn-optimization


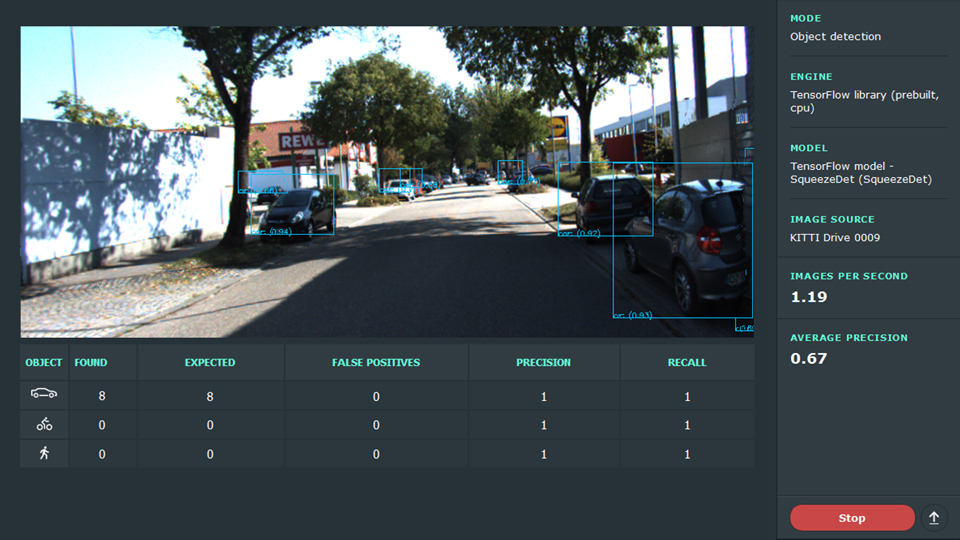

CK GUI application to crowdsource benchmarking and optimization of DNN engines and models across diverse Linux or Windows platforms.

Introduction

This is a CK-powered, Qt-based, open-source, cross-platform and customizable desktop tool set (at least for Linux and Windows) to benchmark and optimize deep learning engines, models and inputs across diverse HW/SW via CK. It complements our Android application to crowdsource deep learning optimization across mobile and IoT devices provided by volunteers.

At the moment, it calculates the sustainable rate of continuous image classification via the CK-Caffe or CK-TensorFlow DNN engines, as needed for live object recognition!

See the General Motors presentation and the vision paper.

We validated this app on ARM, Intel and NVidia-based devices with Linux and Windows (from laptops, servers and powerful high-end tablets such as Surface Pro 4 to budget devices with constrained resources such as Raspberry Pi 3 and Odroid-XU3).

Maintainers

License

- Permissive 3-clause BSD license (see LICENSE.txt for more details).

Minimal requirements

- Linux, Windows or macOS operating system

- Qt 5+ library. You can install it as follows:

Ubuntu:

$ sudo apt-get install qtdeclarative5-dev

RedHat/CentOS:

$ sudo yum install qt5-declarative-devel

Windows: if you use Anaconda Python, CK will automatically pick up its Qt version (we used 5.6.2 for our builds).

Alternatively, you can download Qt from the official website and install it to C:\Qt.

However, we experienced some minor issues when compiling this app with Qt versions installed this way.

Troubleshooting

On some Linux-based platforms, including Odroid and Raspberry Pi, you may get the following message:

/usr/bin/ld: **cannot find -lGL**

This means that your OpenGL drivers are either not installed or corrupted. To solve this problem, you can either try to reinstall your video driver (for example, by reinstalling the original NVidia drivers) or install the Mesa driver:

$ sudo apt-get install libgl1-mesa-dev

You may also try to soft link the required library as described at StackOverflow.
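Before reinstalling anything, it can help to check whether the linker has an unversioned libGL.so to resolve -lGL against. The following is a minimal sketch, not part of this repository: has_dev_lib is a hypothetical helper name, and it assumes that ldconfig -p lists the development symlink (libGL.so) when it is present, in addition to the runtime library (libGL.so.1).

```shell
# Hypothetical helper (not part of CK): reads `ldconfig -p` style text on stdin
# and succeeds only if an unversioned lib<name>.so entry is listed.
# $1 is the short library name, e.g. GL for the -lGL linker flag.
has_dev_lib() {
    grep -Eq "^[[:space:]]*lib$1\.so[[:space:]]"
}

# Typical use on a Linux machine (left commented out so the sketch has no side effects):
# ldconfig -p | has_dev_lib GL || echo "libGL.so not found: try installing libgl1-mesa-dev"
```

If only libGL.so.1 is listed, the runtime driver is installed but the link-time symlink is missing, which is the case that installing libgl1-mesa-dev or creating the soft link is meant to address.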

Preparation to run (object classification)

To run the application, you have to install ck-caffe, at least one Caffe model and an ImageNet dataset.

- ck-caffe:

$ ck pull repo:ck-caffe --url=https://github.com/dividiti/ck-caffe

- caffe models:

$ ck install package:caffemodel-bvlc-alexnet

$ ck install package:caffemodel-bvlc-googlenet

- imagenet datasets. Note: one dataset consists of two packages, aux and val, and you need to install both of them to use the dataset. More datasets may be added in the future:

$ ck install package:imagenet-2012-aux

$ ck install package:imagenet-2012-val-min

You also need to compile and be able to run caffe-classification:

$ ck compile program:caffe-classification

$ ck run program:caffe-classification

Finally, you need to pull this repo and compile this application as a standard CK program (CK will attempt to detect a suitable compiler and an installed Qt before compiling this app):

$ ck pull repo --url=https://github.com/dividiti/ck-crowdsource-dnn-optimization

$ ck compile program:dnn-desktop-demo
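Since each preparation step depends on the previous one, the commands above can be collected into one script that stops at the first failure. This is only a sketch: run_step, prepare_classification and the DRY_RUN variable are hypothetical names introduced here, while the ck commands are the ones listed in this section (with AlexNet chosen as the single required model).

```shell
# Hypothetical wrapper: print each command and run it, stopping at the first failure.
# With DRY_RUN=1 the commands are only printed, which is useful for reviewing the plan.
run_step() {
    echo "+ $*"
    if [ "${DRY_RUN:-0}" != "1" ]; then
        "$@" || { echo "step failed: $*" >&2; return 1; }
    fi
}

# The object classification preparation steps, in the order given above.
prepare_classification() {
    run_step ck pull repo:ck-caffe --url=https://github.com/dividiti/ck-caffe &&
    run_step ck install package:caffemodel-bvlc-alexnet &&
    run_step ck install package:imagenet-2012-aux &&
    run_step ck install package:imagenet-2012-val-min &&
    run_step ck compile program:caffe-classification &&
    run_step ck run program:caffe-classification &&
    run_step ck pull repo --url=https://github.com/dividiti/ck-crowdsource-dnn-optimization &&
    run_step ck compile program:dnn-desktop-demo
}
```

Calling DRY_RUN=1 prepare_classification prints the eight commands without executing them; calling prepare_classification alone runs them and requires CK to be installed.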

Preparation to run (object detection)

For the object detection mode to work, you also need to set up SqueezeDet from CK-TensorFlow.

First, pull the repo:

$ ck pull repo:ck-tensorflow

Then install a working model (others may work too but have not yet been thoroughly tested):

$ ck install package --tags=model,squeezedet

After that, you should be able to successfully run a simple demo:

$ ck run program:squeezedet --cmd_key=default

(It may ask a couple of questions and install some dependencies, such as a dataset, on the first run.)

Note that on Windows we managed to run it using Python 3.5 only (lower versions of Python 3.x didn't work well with TF)!

If this works for you, the object detection mode in the UI will be available.

You can then install a KITTI image set to test it in our application:

$ ck install package --tags=kitti-drive-0009

Running the app

Execute the following to run this GUI-based app:

$ ck run program:dnn-desktop-demo [parameters]

Parameters supported:

- --params.fps_update_interval_ms: integer value in milliseconds that controls the maximum frequency of 'Images per second' (FPS) updates. The actual value of FPS will still be shown accurately, but it will be updated at most once per the given number of milliseconds. The default value is 500. Set it to 0 to get the real-time speed (it may be hard to read). Example: ck run program:dnn-desktop-demo --params.fps_update_interval_ms=0

- --params.recognition_update_interval_ms: integer value in milliseconds that controls the maximum frequency of updates in the object recognition mode. The image and the metrics below it will be updated at most once per the given number of milliseconds. The default value is 1000. Set it to 0 to get the real-time speed (it may be hard to read). Example: ck run program:dnn-desktop-demo --params.recognition_update_interval_ms=2000

- --params.footer_right_text: text to be shown in the bottom right corner of the application

- --params.footer_right_url: if specified, and footer_right_text is also specified, the text becomes clickable, and this URL is opened on click

- --params.recognition_auto_restart: can be enabled or disabled (1/0). Enabled by default. If enabled, and an object detection experiment finishes normally (i.e. the dataset is exhausted), the experiment restarts automatically with the same parameters. Example: ck run program:dnn-desktop-demo --params.recognition_auto_restart=0

Parameter values are saved for future use, so the next time you call ck run you don't need to specify them again.

Select a DNN engine and a data set, then press the start button! Enjoy!
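To illustrate what "updated at most once per the given number of milliseconds" means for the two interval parameters, here is a small sketch of that throttling rule. It is an illustration of the documented behavior under our own assumptions, not the application's actual code: throttle is a hypothetical function that reads event timestamps (in milliseconds, one per line) and prints only those that would trigger a display refresh.

```shell
# Hypothetical illustration of the update-interval rule (not the app's real code).
# $1: minimum interval between refreshes in milliseconds; 0 lets every event through.
# stdin: event timestamps in milliseconds, one per line, in increasing order.
throttle() {
    interval_ms=$1
    last_ms=""
    while read -r now_ms; do
        # The first event always refreshes; later ones only after the interval has elapsed.
        if [ -z "$last_ms" ] || [ $((now_ms - last_ms)) -ge "$interval_ms" ]; then
            echo "$now_ms"
            last_ms=$now_ms
        fi
    done
}
```

For example, feeding the timestamps 0, 100, 400, 500, 900, 1000 and 1600 through throttle 500 keeps 0, 500, 1000 and 1600, while throttle 0 keeps all of them, which corresponds to the real-time setting.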

Future work

We plan to extend this application to optimize deep learning (training and predictions) across different models and their topologies, data sets, libraries and diverse hardware, while sharing optimization statistics with the community via a public repo.

This should allow end users to select the most efficient solution for their tasks (a DNN running on a supercomputer or on a constrained IoT device), and computer engineers and designers to deliver the next generation of efficient libraries and hardware.

See our vision papers below for more details.

Related Publications with our long term vision

@inproceedings{Lokhmotov:2016:OCN:2909437.2909449,

author = {Lokhmotov, Anton and Fursin, Grigori},

title = {Optimizing Convolutional Neural Networks on Embedded Platforms with OpenCL},

booktitle = {Proceedings of the 4th International Workshop on OpenCL},

series = {IWOCL '16},

year = {2016},

location = {Vienna, Austria},

url = {http://doi.acm.org/10.1145/2909437.2909449},

acmid = {2909449},

publisher = {ACM},

address = {New York, NY, USA},

keywords = {Convolutional neural networks, OpenCL, collaborative optimization, deep learning, optimization knowledge repository},

}

@inproceedings{ck-date16,

title = {{Collective Knowledge}: towards {R\&D} sustainability},

author = {Fursin, Grigori and Lokhmotov, Anton and Plowman, Ed},

booktitle = {Proceedings of the Conference on Design, Automation and Test in Europe (DATE'16)},

year = {2016},

month = {March},

url = {https://www.researchgate.net/publication/304010295_Collective_Knowledge_Towards_RD_Sustainability}

}

Testimonials and awards

- 2015: ARM and the cTuning foundation use CK to accelerate computer engineering: HiPEAC Info'45 page 17, ARM TechCon'16 presentation and demo, public CK repo

Acknowledgments

CK development is coordinated by dividiti and the cTuning foundation (non-profit research organization). We are also extremely grateful to all volunteers for their valuable feedback and contributions.

Feedback

Feel free to engage with our community via this mailing list:

- http://groups.google.com/group/collective-knowledge