image-segmentation-keras


VGG32 not giving any predictions after training on same datasets as other models

Hello!

I am using the various models in this repo for my thesis. I am trying to predict zebra crossings from aerial images. The setup is as follows:

- I trained 6 different models on 6 different datasets.

- I evaluated the models by letting them all predict on the same test set.

Image info:

- All images are 800x800 and in .PNG format (this should not matter, as the predictions from the weights trained on the small dataset perform well, and these are the same images used for the other predictions).

This is the setup for all the models:

    from keras_segmentation.models.fcn import (
        fcn_8, fcn_32, fcn_8_vgg, fcn_32_vgg, fcn_8_resnet50, fcn_32_resnet50
    )

    # set model parameters (shared by all six models)
    model_params = {"input_height": 800,
                    "input_width": 800,
                    "n_classes": 2}

    # set models
    Vanilla8 = fcn_8(**model_params)
    Vanilla32 = fcn_32(**model_params)
    VGG8 = fcn_8_vgg(**model_params)
    VGG32 = fcn_32_vgg(**model_params)
    Resnet8 = fcn_8_resnet50(**model_params)
    Resnet32 = fcn_32_resnet50(**model_params)

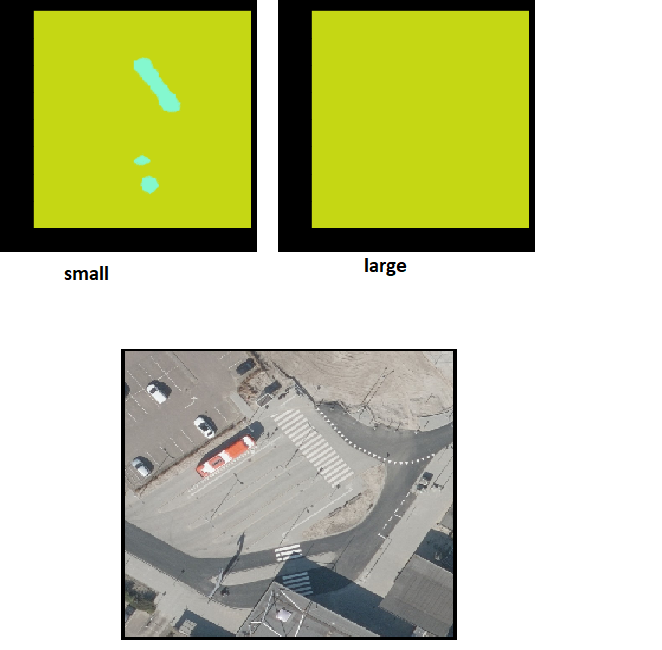

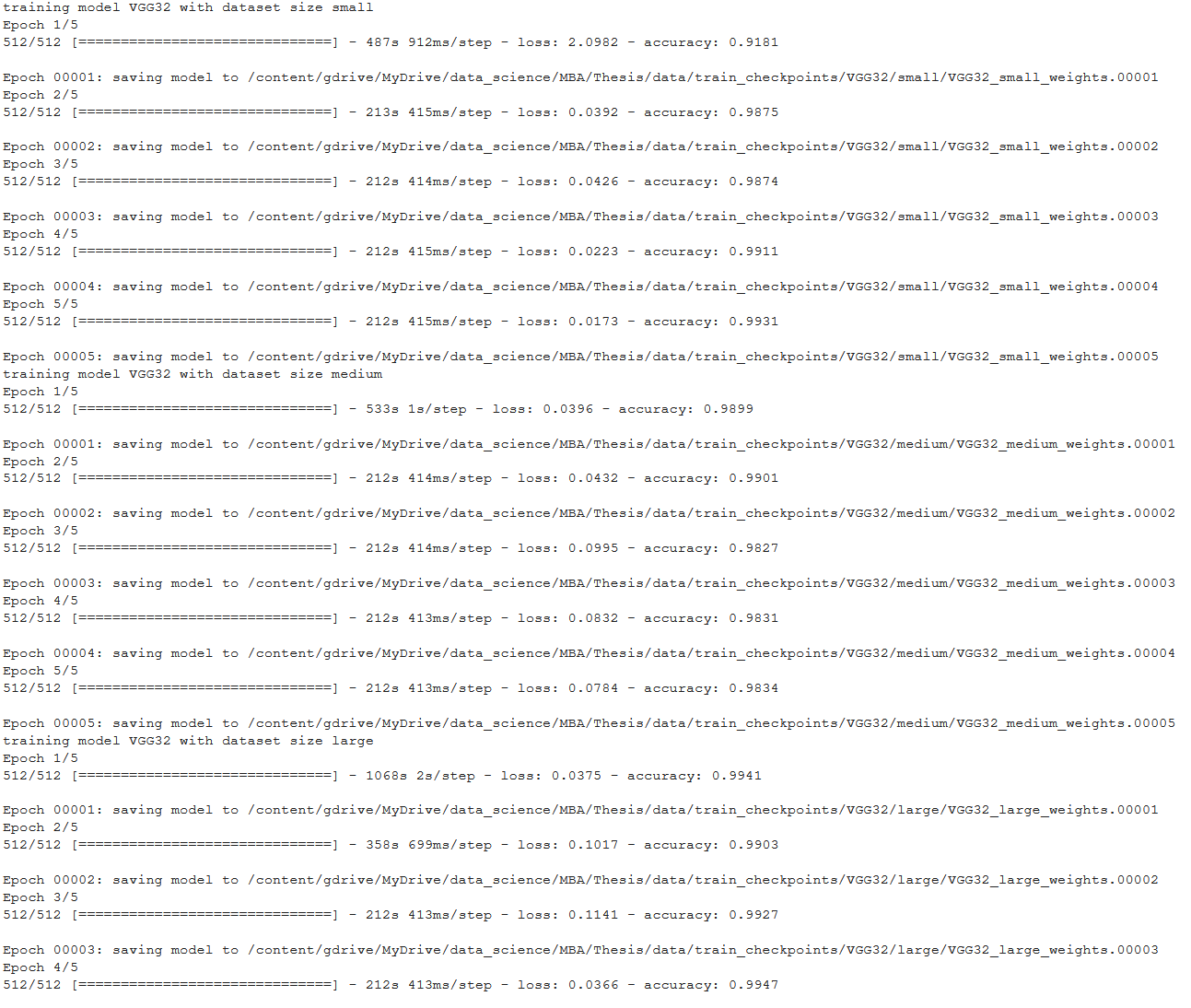

However, I notice that the VGG32 model gives normal predictions for 2 datasets, and for the other 4 it fails to predict anything at all. I just get blank outcomes!

This happens even though the per-epoch output during training shows a high accuracy.
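One possible explanation, which nothing in this post confirms, is class imbalance: if zebra crossings cover only a small fraction of each image, a model that labels every pixel as background can still score a high pixel accuracy. The sketch below is plain Python on a made-up 10x10 mask; `pixel_accuracy`, `ground_truth`, and `blank_prediction` are hypothetical names for illustration and are not part of the repo.

```python
def pixel_accuracy(y_true, y_pred):
    """Fraction of pixels whose predicted class equals the true class."""
    flat_true = [c for row in y_true for c in row]
    flat_pred = [c for row in y_pred for c in row]
    if len(flat_true) != len(flat_pred):
        raise ValueError("masks must contain the same number of pixels")
    matches = sum(t == p for t, p in zip(flat_true, flat_pred))
    return matches / len(flat_true)


# Hypothetical 10x10 ground truth: 5 crossing pixels (class 1),
# 95 background pixels (class 0). These numbers are invented.
ground_truth = [[0] * 10 for _ in range(10)]
for col in range(5):
    ground_truth[4][col] = 1

# A "blank" prediction: every pixel is labeled background.
blank_prediction = [[0] * 10 for _ in range(10)]

print(pixel_accuracy(ground_truth, blank_prediction))  # 0.95
```

If the real masks look similar, a per-class metric such as IoU for the crossing class would likely say more than overall accuracy.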

As an example, I ran the code below on the same image, using the weights from training on the small and on the large dataset, and compared the results with the ground truth.

All other models provide correct predictions on the same images and have been trained on the same images. Heck, even the VGG32 model trained on the small and the small + transformed data performs fine; only those trained on the larger datasets don't. It's really weird, and I am at a loss as to what the reason could be. Any help is greatly appreciated!

    import cv2
    import matplotlib.pyplot as plt

    # load weights trained on the small dataset
    weightfile = base_path + "data/train_checkpoints/VGG32/small/VGG32_small_weights.00005"
    VGG32.load_weights(filepath=weightfile)

    # predict on one test image and write the rendered segmentation to disk
    VGG32.predict_segmentation(
        inp="/content/gdrive/MyDrive/data_science/MBA/Thesis/data/testset/images/21025.png",
        out_fname="/content/gdrive/MyDrive/data_science/MBA/Thesis/data/testset/test_small.png"
    )

    # cv2 loads images as BGR; convert to RGB so matplotlib shows the right colors
    image_small = cv2.imread("/content/gdrive/MyDrive/data_science/MBA/Thesis/data/testset/test_small.png")
    plt.imshow(cv2.cvtColor(image_small, cv2.COLOR_BGR2RGB))
    plt.show()

    # load weights trained on the large dataset
    weightfile = base_path + "data/train_checkpoints/VGG32/large/VGG32_large_weights.00005"
    VGG32.load_weights(filepath=weightfile)

    # predict on the same test image with the large-dataset weights
    VGG32.predict_segmentation(
        inp="/content/gdrive/MyDrive/data_science/MBA/Thesis/data/testset/images/21025.png",
        out_fname="/content/gdrive/MyDrive/data_science/MBA/Thesis/data/testset/test_large.png"
    )

    image_large = cv2.imread("/content/gdrive/MyDrive/data_science/MBA/Thesis/data/testset/test_large.png")
    plt.imshow(cv2.cvtColor(image_large, cv2.COLOR_BGR2RGB))
    plt.show()
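To check whether a prediction is truly blank without going through the saved PNG, one option is to count the pixels per class in the predicted mask. This is a minimal sketch that assumes `predict_segmentation` returns the 2-D array of per-pixel class indices (worth verifying for the installed version); `class_pixel_counts`, `is_blank`, and `test_image_path` are hypothetical names, not part of the repo.

```python
from collections import Counter


def class_pixel_counts(mask, n_classes):
    """Count how many pixels of a 2-D class-index mask fall into each class."""
    counts = Counter(int(c) for row in mask for c in row)
    return [counts.get(k, 0) for k in range(n_classes)]


def is_blank(mask, n_classes, background=0):
    """True if every pixel in the mask belongs to the background class."""
    counts = class_pixel_counts(mask, n_classes)
    return sum(counts) == counts[background]


# Small made-up mask: 4 background pixels and 2 crossing pixels.
example_mask = [[0, 0, 0],
                [0, 1, 1]]
print(class_pixel_counts(example_mask, n_classes=2))  # [4, 2]
print(is_blank(example_mask, n_classes=2))            # False

# Assumed usage with the model from this post (not run here);
# test_image_path is a hypothetical variable holding the image path:
# mask = VGG32.predict_segmentation(inp=test_image_path)
# print(class_pixel_counts(mask, n_classes=2))
```

If the large-dataset weights give a count of zero for class 1 on every test image, the model has collapsed to predicting background only, which points at the training run more than at the plotting code.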

Does anyone have an idea? Any help would be greatly appreciated!

I have the same problem with my VGG implementation.

@ssnirgudkar @SjoerdBraaksma I'm having some issues with the VGG model as well. May I know which TensorFlow version you are using to train the model?
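For anyone replying with version details, a small sketch like the following prints the installed versions using only the standard library (Python 3.8+). The package names listed are assumptions about what is installed in the environment, and `installed_version` is a hypothetical helper.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Assumed package names; adjust to whatever the environment actually uses.
for name in ("tensorflow", "keras", "keras-segmentation"):
    print(name, installed_version(name))
```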