Use blasoxide for BLAS operations

Hi,

I am writing a BLAS implementation in rust: https://github.com/oezgurmakkurt/blasoxide

It has all level1 except complex, sgemv, dgemv, sgemm and dgemm functions with ~max multicore performance. Only limitation in matrix multiplication is it ignores the transpose arguments for now.

Is blasoxide viable to use in nalgebra? If it isn't what tests, benchmarks, features I need to add to make it viable.

Hi!

This looks interesting.

- Do you have any comparative benchmark with the existing implementations on

nalgebra? -

rayonis a quite heavy dependency, it would be better if it was optional. - Will this compile/work when targeting architectures other than x86_64?

Gemm implementations are in 10% of openblas so it is probably way faster than anything in rust right now.

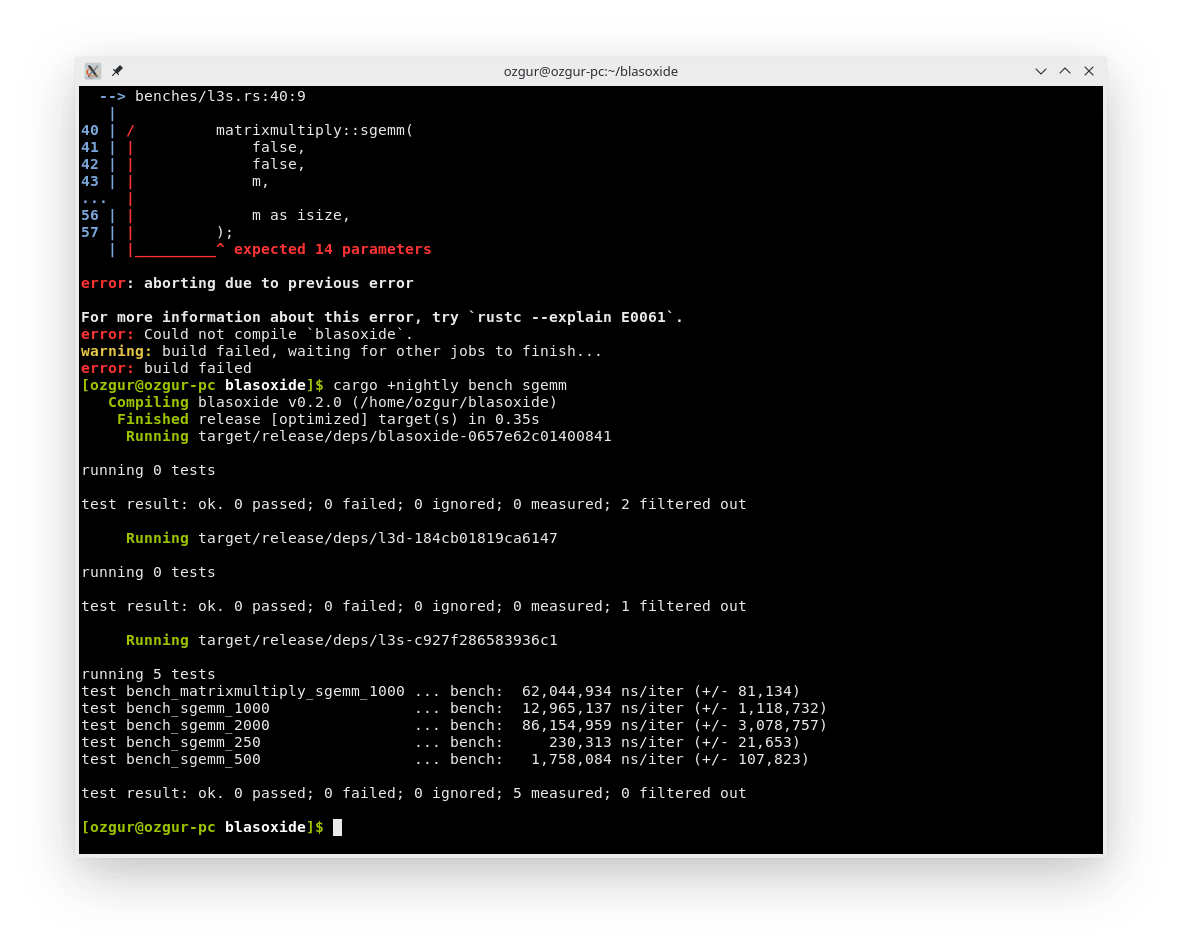

Here is a comparison of sgemm with matrixmultiply crate:

OpenBLAS multiplies 1000x1000 matrices in 13ms on my cpu also so this is equal to OpenBLAS

OpenBLAS multiplies 1000x1000 matrices in 13ms on my cpu also so this is equal to OpenBLAS

I was thinking about not including rayon also, but I thought its like openmp for rust so any computation oriented program would use it for something. I might write a simple threadpool to replace rayon but I don't think it would be as fast. Also I don't like generics and traits, blasoxide doesn't have them so it compiles very fast.

I am working on a new version that optionally produces portable binary that checks for simd on runtime on x86_64 runs on other architectures normally. It should be done by tomorrow.

I am actually learning linear algebra right now so I will be implementing all level2 and level3 functions maybe even complex functions so it will only get better from it's current state.

Hi @oezgurmakkurt, this looks very cool! Great job :-)

Question: is the benchmark against matrixmultiply single-threaded for both matrixmultiply and blasoxide, or is blasoxide using parallelization? Afaik, matrixmultiply is only single-threaded.

Regarding rayon: I think what @sebcrozet had in mind was the ability to only use rayon optionally, in the sense that if rayon is toggled off, then the crate would simply perform no parallelization at all (and not depend on rayon). I think this would be a nice feature.

Would you mind sharing a little bit what your inspiration is? For example, what makes it so much faster than matrixmultiply? Is the architecture similar to e.g. BLIS, or some other BLAS-like library?

Hi @Andlon thanks for your interest,

My sgemm implementation is multithreaded and matrixmultiply is not. Benchmark is run on a 4 core ryzen cpu, I just improved gemm implementations and blasoxide is faster 5.9 times now.

I can add a feature to disable rayon but I don't think it makes much sense to do this because cargo build --release takes 7 seconds(is fast, especially compared to something like OpenBLAS), gemm operations scale ~100% with parallelization and almost everyone should have multithreaded cpus now.

I read this first: https://github.com/flame/how-to-optimize-gemm/wiki

This was almost all I needed for single threaded, then I added scale arguments and cleaned corner cases. Then I made packing routines and execution of second loop around microkernel multithreaded which gives ~100% scaling with multiple cores, I found this idea on a powerpoint slide on internet.

Architecture is similar to blis. I read some of their paper and based my implementation on it. It should be fairly easy to add new cpu architectures to supported list but I don't have access to any hardware other than my cpu so thats not likely to happen soon.

My priorities with this library are:

- should be as fast as OpenBLAS

- Easy to use: zero configuration needed, just

cargo buildand it works fast - Fast build

- Easy to read source code and rust only (this helps with ease of building also).

That's really cool! Having a library competitive with OpenBLAS in pure Rust is amazing, because we can avoid all the painful issues surrounding compilation and dependency management.

One issue with rayon is that, as far as I know, it's not no-std compatible. This means that if nalgebra depends on rayon, then nalgebra cannot be no-std, which is a no-go since nalgebra is already being used in no-std environments (such as WASM).

Alright that makes sense I will be adding a feature that disables rayon

edit: just realized that means it can't use vector either so I will have to change that also, this might take some time

Well, as excited as I am about this, in the end this is all the decision of @sebcrozet, so perhaps wait to see if he agrees with what I wrote before you put too much work into my suggested improvements.

That said, having your BLAS-like crate work in no-std environments would be a massive benefit, in any case!

Unfortunately I think this issue can be closed as OP is now a ghost

matrixmultiply has improved default performance since this issue was written, and it also supports multithreading and complex numbers (quite immature support for complex, maybe.)