dgraph


Add a validator for bulk and live loader

Experience Report

What you wanted to do

I wanted to load a large dataset using the bulk loader.

Bulk loading and live loading can take quite a while for large datasets. It's very annoying when a single badly formatted entry crashes the process after hours of running.

What you actually did

I had to fix the issue and rerun the whole process from scratch.

Why that wasn't great, with examples

It's a waste of time and resources. Instead, I would have expected the input to be validated before the process started so any errors would be detected early on.

Any external references to support your case

I ran into similar issues when first starting out using Dgraph and built a basic validator leveraging the bulk loader codebase with support for:

- RDF validation

- Schema validation

- Empty or corrupt gzip file validation

All three conditions can terminate the bulk load process and, if you're unlucky, do so several hours into the map phase. Fortunately, a basic validator could be supported with little effort by reusing the existing codebase and adding a --dryrun or similar CLI flag to the bulk loader.
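As a rough illustration of the kind of pre-flight check described above, here is a minimal standalone sketch in Go. It does not use Dgraph's own parser or any Dgraph API: `checkLine` and `validate` are hypothetical names, the line check is deliberately shallow (terminating dot plus at least three terms), and schema validation is left out. It only assumes the input is line-oriented RDF, optionally gzipped.

```go
package main

import (
	"bufio"
	"compress/gzip"
	"fmt"
	"io"
	"os"
	"strings"
)

// checkLine applies a shallow sanity check to one RDF line.
// It is NOT a full N-Quad parser: it only checks for the terminating dot
// and for at least a subject, a predicate and an object.
func checkLine(line string) error {
	line = strings.TrimSpace(line)
	if line == "" || strings.HasPrefix(line, "#") {
		return nil // blank lines and comments are fine
	}
	if !strings.HasSuffix(line, ".") {
		return fmt.Errorf("missing terminating '.'")
	}
	body := strings.TrimSpace(strings.TrimSuffix(line, "."))
	if len(strings.Fields(body)) < 3 {
		return fmt.Errorf("expected subject, predicate and object")
	}
	return nil
}

// validate streams the input, decompressing it if gzipped is true, and
// returns one message per problem. Empty or truncated gzip data is reported.
func validate(r io.Reader, gzipped bool) []string {
	if gzipped {
		zr, err := gzip.NewReader(r)
		if err != nil {
			return []string{"gzip: " + err.Error()}
		}
		defer zr.Close()
		r = zr
	}
	var problems []string
	br := bufio.NewReader(r)
	seen := 0 // number of non-blank lines read
	for n := 1; ; n++ {
		line, err := br.ReadString('\n')
		if strings.TrimSpace(line) != "" {
			seen++
			if lerr := checkLine(line); lerr != nil {
				problems = append(problems, fmt.Sprintf("line %d: %v", n, lerr))
			}
		}
		if err == io.EOF {
			break
		}
		if err != nil {
			problems = append(problems, "read: "+err.Error())
			break
		}
	}
	if seen == 0 {
		problems = append(problems, "input contains no data")
	}
	return problems
}

func main() {
	if len(os.Args) != 2 {
		fmt.Fprintln(os.Stderr, "usage: validate <file.rdf | file.rdf.gz>")
		os.Exit(2)
	}
	f, err := os.Open(os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	problems := validate(f, strings.HasSuffix(os.Args[1], ".gz"))
	f.Close()
	for _, p := range problems {
		fmt.Println(p)
	}
	if len(problems) > 0 {
		os.Exit(1)
	}
}
```

A real --dryrun mode would presumably call the loader's actual RDF and schema parsers rather than this simplified check, so that validation and loading can never disagree about what counts as valid.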

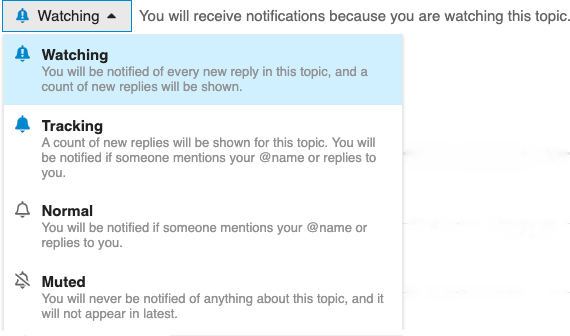

GitHub issues have been deprecated. This issue has been moved to Discuss, where you can follow the conversation and subscribe to updates by changing your notification preferences.

Because a load may also fail due to temporary system issues (network problems, for example), we may instead want to solve this by making it easier to pick up where a load left off. This could involve splitting huge inputs into smaller files and resuming at the right point.

This should allow a failed job to be restarted after a corrupt or invalid file, and should also allow resume after some outage.
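One way to picture the resume idea is a checkpoint file that records which input chunks have already been loaded. The sketch below is only an illustration under assumptions: it is not how Dgraph's loaders work today, and the checkpoint file name `load.checkpoint`, the helpers `loadDone`, `pending` and `markDone`, and the placeholder `loadFile` are all hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
)

// loadDone reads the checkpoint file and returns the set of inputs that were
// already loaded. A missing checkpoint file means nothing has been loaded yet.
func loadDone(path string) (map[string]bool, error) {
	done := map[string]bool{}
	f, err := os.Open(path)
	if os.IsNotExist(err) {
		return done, nil
	}
	if err != nil {
		return nil, err
	}
	defer f.Close()
	sc := bufio.NewScanner(f)
	for sc.Scan() {
		if name := sc.Text(); name != "" {
			done[name] = true
		}
	}
	return done, sc.Err()
}

// pending returns the inputs not yet recorded as done, preserving order.
func pending(inputs []string, done map[string]bool) []string {
	var todo []string
	for _, name := range inputs {
		if !done[name] {
			todo = append(todo, name)
		}
	}
	return todo
}

// markDone appends name to the checkpoint file after a successful load.
func markDone(path, name string) error {
	f, err := os.OpenFile(path, os.O_APPEND|os.O_CREATE|os.O_WRONLY, 0o644)
	if err != nil {
		return err
	}
	if _, err := fmt.Fprintln(f, name); err != nil {
		f.Close()
		return err
	}
	return f.Close()
}

// loadFile is a placeholder for whatever actually loads one input chunk.
func loadFile(name string) error {
	fmt.Println("loading", name)
	return nil
}

func main() {
	const checkpoint = "load.checkpoint" // hypothetical file name
	done, err := loadDone(checkpoint)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Input chunks are passed as arguments; already loaded ones are skipped.
	for _, name := range pending(os.Args[1:], done) {
		if err := loadFile(name); err != nil {
			fmt.Fprintf(os.Stderr, "%s: %v (fix or retry, then rerun to resume)\n", name, err)
			os.Exit(1)
		}
		if err := markDone(checkpoint, name); err != nil {
			fmt.Fprintln(os.Stderr, err)
			os.Exit(1)
		}
	}
}
```

Rerunning the same command after a failure skips every chunk already listed in the checkpoint. This only works cleanly if loading a chunk is atomic or idempotent, since a chunk that failed halfway is loaded again from its start.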

Separately, faster reporting of invalid data is also helpful, but may not be as important if the jobs are resumable.