SURVEY: Who is using Badger?

Hey there :wave:, we're doing an informal survey to gauge interest in this project, and it would be very helpful if you could answer the following questions:

- If applicable, what company/organization do you represent?

- How are you using Badger? Do you use it as a temporary store? Or have you built systems on top of Badger?

- What kind of problems are you solving with Badger? Do you use features such as compression and encryption?

- Can you provide any information about the amount of data that you store in Badger?

- If you are not using Badger but chose another key-value store, what were the reasons?

- If applicable, please share a link to your project/product built with Badger.

Thank you for your help in improving Badger!

Hey, I'm a developer on dcrandroid, a Decred Android wallet with a gomobile backend in dcrlibwallet. I use Badger as permanent storage for wallet and blockchain data, which is quite a lot for a mobile device, but Badger makes it feasible. My Badger implementation is a driver: my database interface requires buckets, so there's a bucket implementation on top of Badger.
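A common way to offer buckets on a flat, ordered key-value store is to prefix every key with the bucket name. The sketch below assumes that approach (the thread doesn't say how the dcrlibwallet driver actually does it) and uses a hypothetical in-memory kvStore in place of Badger. With Badger, Put and Get would map to transaction Set/Get calls, and Keys to an iterator Seek on the prefix combined with ValidForPrefix.

```go
package main

import (
	"bytes"
	"fmt"
	"sort"
	"strings"
)

// kvStore is a hypothetical stand-in for a flat key-value store such as
// Badger. It is map-backed, so Keys sorts explicitly to mimic ordered iteration.
type kvStore struct {
	data map[string][]byte
}

func newKVStore() *kvStore { return &kvStore{data: map[string][]byte{}} }

// bucket emulates a named bucket by prefixing each key with the bucket
// name plus a 0x00 separator (bucket names must not contain 0x00).
type bucket struct {
	store  *kvStore
	prefix []byte
}

func (s *kvStore) Bucket(name string) *bucket {
	return &bucket{store: s, prefix: append([]byte(name), 0x00)}
}

// key builds the full store key: prefix followed by the user key.
func (b *bucket) key(k []byte) string {
	return string(append(append([]byte{}, b.prefix...), k...))
}

// Put stores a copy of v under the bucket-scoped key.
func (b *bucket) Put(k, v []byte) { b.store.data[b.key(k)] = append([]byte{}, v...) }

// Get looks up k inside this bucket only.
func (b *bucket) Get(k []byte) ([]byte, bool) {
	v, ok := b.store.data[b.key(k)]
	return v, ok
}

// Keys returns this bucket's keys (prefix stripped) in sorted order,
// mimicking a prefix scan over an ordered store.
func (b *bucket) Keys() [][]byte {
	var keys [][]byte
	p := string(b.prefix)
	for full := range b.store.data {
		if strings.HasPrefix(full, p) {
			keys = append(keys, []byte(full[len(p):]))
		}
	}
	sort.Slice(keys, func(i, j int) bool { return bytes.Compare(keys[i], keys[j]) < 0 })
	return keys
}

func main() {
	s := newKVStore()
	txs := s.Bucket("txs")
	addrs := s.Bucket("addrs")
	txs.Put([]byte("b"), []byte("2"))
	txs.Put([]byte("a"), []byte("1"))
	addrs.Put([]byte("a"), []byte("x")) // same key, different bucket
	for _, k := range txs.Keys() {
		v, _ := txs.Get(k)
		fmt.Printf("%s=%s\n", k, v)
	}
}
```

The separator byte keeps a bucket named "tx" from matching keys that belong to a bucket named "txs".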

The problems I've faced with Badger are usually memory-related. Because Badger sits behind a driver, it's hard for me to extract test data that reproduces the issue, but it's usually a panic and I haven't been able to pin down the exact cause. My database was created with an old Badger version, but I'm currently trying out v1.6.1 and getting this:

```
Mmap value log file. Path=/data/user/0/com.decred.dcrandroid.testnet/files/wallets/testnet3/1/wallet.db/000000.vlog. Error=cannot allocate memory
```

I've reduced my config to the following, but I keep getting that error and don't know what to do about it:

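```go
import (
	badger "github.com/dgraph-io/badger"
	"github.com/dgraph-io/badger/options"
)

// The With* setters return a modified copy of Options, so chain them;
// a bare opts.WithX(...) call discards its result.
opts := badger.DefaultOptions(dbPath).
	WithValueDir(dbPath).
	WithValueLogLoadingMode(options.MemoryMap).
	WithTableLoadingMode(options.MemoryMap)
```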

Hey @C-ollins, since you've set opts.WithValueLogLoadingMode(options.MemoryMap), Badger will try to memory-map 2 GB upfront. If you're running Badger in a limited-memory environment, you should set the loading mode to FileIO for both tables and the value log.
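The 2 GB figure can be reproduced with a little arithmetic. This sketch rests on two assumptions not stated in the thread: that Badger v1 memory-maps the active value log file at twice ValueLogFileSize, and that the default ValueLogFileSize is 1<<30 - 1 bytes. The names defaultValueLogFileSize and vlogMmapSize are hypothetical. It also shows how a smaller value log file size, such as the 100 << 20 used in the full config further down, would shrink that mapping.

```go
package main

import "fmt"

// Assumption: Badger v1's default ValueLogFileSize is 1<<30 - 1 bytes.
const defaultValueLogFileSize int64 = 1<<30 - 1

// vlogMmapSize returns the bytes of address space the active value log
// file would map, assuming it is mapped at twice ValueLogFileSize.
func vlogMmapSize(valueLogFileSize int64) int64 {
	return 2 * valueLogFileSize
}

// mib converts a byte count to mebibytes.
func mib(n int64) float64 { return float64(n) / (1 << 20) }

func main() {
	def := vlogMmapSize(defaultValueLogFileSize)
	small := vlogMmapSize(100 << 20)
	fmt.Printf("default: %d bytes (%.0f MiB)\n", def, mib(def))
	fmt.Printf("100 MiB vlog files: %d bytes (%.0f MiB)\n", small, mib(small))
}
```

Under these assumptions the default works out to just under 2 GiB, while 100 MiB value log files would need about 200 MiB.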

It works now, but writes get stuck as soon as I see this log, and then everything freezes until the app shuts down for no apparent reason. I've upgraded to the latest release (not sure whether this will conflict with my previous DB?) and also tried setting sync writes to false, but it still freezes after this log appears:

```
16:06:31 DEBUG: Flushing memtable, mt.size=33990725 size of flushChan: 0
16:06:31 DEBUG: Storing value log head: {Fid:1 Len:44 Offset:36022908}
16:06:33 INFO: Got compaction priority: {level:0 score:1 dropPrefix:[]}
16:06:33 INFO: Running for level: 0
16:06:34 DEBUG: LOG Compact. Added 648141 keys. Skipped 0 keys. Iteration took: 755.212128ms
16:06:34 DEBUG: LOG Compact. Added 180079 keys. Skipped 0 keys. Iteration took: 240.403369ms
16:06:35 DEBUG: Discard stats: map[]
16:06:35 INFO: LOG Compact 0->1, del 2 tables, add 2 tables, took 2.451989141s
16:06:35 INFO: Compaction for level: 0 DONE
```

Full config:

```go
WithValueLogLoadingMode(options.FileIO).
	WithTableLoadingMode(options.FileIO).
	WithSyncWrites(false).
	WithNumMemtables(1).
	WithNumLevelZeroTables(1).
	WithNumLevelZeroTablesStall(2).
	WithNumCompactors(1).
	WithMaxTableSize(32 << 20).
	WithValueLogFileSize(100 << 20)
```
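The score:1 in the log above is what a single level-0 table would produce with NumLevelZeroTables(1), under the simplified model below. This is a sketch, not Badger's code: it assumes the L0 score is the L0 table count divided by NumLevelZeroTables, that L0 becomes eligible for compaction once the score reaches 1, and that memtable flushes wait while L0 holds NumLevelZeroTablesStall tables. The type and function names are hypothetical.

```go
package main

import "fmt"

// l0Config mirrors the two options above that govern level 0.
// Field names are illustrative, not Badger's internal types.
type l0Config struct {
	NumLevelZeroTables      int // L0 table count that triggers compaction
	NumLevelZeroTablesStall int // L0 table count at which flushes wait
}

// l0Score models L0 compaction priority: tables / NumLevelZeroTables.
// Under the stated assumption, a score >= 1 makes L0 eligible.
func l0Score(c l0Config, tables int) float64 {
	return float64(tables) / float64(c.NumLevelZeroTables)
}

// writesStall reports whether the next memtable flush would have to wait
// for compaction because L0 already holds the stall threshold.
func writesStall(c l0Config, tables int) bool {
	return tables >= c.NumLevelZeroTablesStall
}

func main() {
	c := l0Config{NumLevelZeroTables: 1, NumLevelZeroTablesStall: 2}
	for tables := 0; tables <= 3; tables++ {
		fmt.Printf("L0 tables=%d score=%.0f stall=%v\n",
			tables, l0Score(c, tables), writesStall(c, tables))
	}
}
```

Under this model, a second flushed memtable would pause writes until the single compactor (WithNumCompactors(1)) finishes moving L0 into L1, so these settings may be worth revisiting when diagnosing write pauses.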

@C-ollins I don't see anything crashing. Can you please create a new issue with all the details? I would be happy to help.

- If applicable, what company/organization do you represent?

Mixin Network

- How are you using Badger? Do you use it as a temporary store? Or have you built systems on top of Badger?

We use it as our main storage.

- What kind of problems are you solving with Badger? Do you use features such as compression and encryption?

We are building a distributed ledger that stores transaction snapshots. We don't use Badger's compression and encryption features; we handle both in our application code. In fact, Badger's compression causes serious performance issues for our project.

- Can you provide any information about the amount of data that you store in Badger?

About 100 GB for all 25,000,000 snapshots.

- If applicable, please share a link to your project/product built with Badger.

https://github.com/MixinNetwork/mixin

* If applicable, what company/organization do you represent?

https://oyatocloud.com/

* How are you using Badger? Do you use it as a temporary store? Or have you built systems on top of Badger?

We're using it mostly as a SQLite replacement where we don't actually need SQL, and for temporary-ish storage such as logs, HTTPS certificate storage, and mail storage.

* What kind of problems are you solving with Badger? Do you use features such as compression and encryption?

Yes! Compression and encryption were the main reasons we upgraded to v2.

We also like its rsync-ability, which was one of the pros for switching from BoltDB.

* Can you provide any information about the amount of data that you store in Badger?

Typically up to a few GiBs compressed before rotation or deletion.

* If applicable, please share a link to your project/product built with Badger.

The only one open-sourced so far is https://github.com/oyato/certmagic-badgerstorage

Hey @jarifibrahim,

I hope the IPFS team is alright with me writing a bit about how go-ipfs uses Badger.

I wrote a little about the use-case of go-ipfs here.

- How are you using Badger? Do you use it as a temporary store? Or have you built systems on top of Badger?

go-ipfs uses Badger v1 as an alternative to writing files directly to disk. It is used as both temporary and permanent storage.

- What kind of problems are you solving with Badger?

Using badger greatly improves the performance of ipfs compared to using the default filestore.

- Do you use features such as compression and encryption?

ipfs does not use those features.

- Can you provide any information about the amount of data that you store in Badger?

The amount of data varies from user to user. I think most home users will add just a few GB, while servers will probably store multiple terabytes in Badger in the foreseeable future, since support for it was only recently marked stable.

- If applicable, please share a link to your project/product built with Badger.

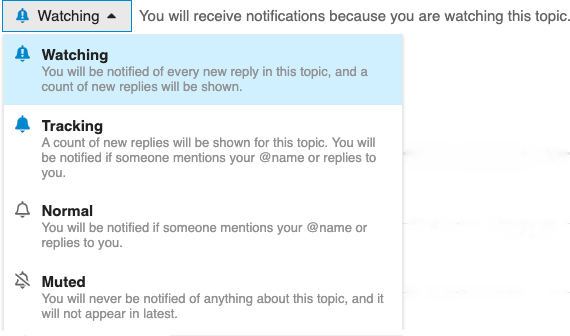

GitHub Issues have been deprecated. This issue has been moved to Discuss. You can follow the conversation there and also subscribe to updates by changing your notification preferences.

This is unusable: after I clear the data and add data again under the same keys, some of the old data comes back. Is this a joke for a project with 9.3k stars?

I am using Badger as the storage layer for the Rony framework. Rony is a scalable RPC framework that mirrors a distributed database architecture, but in the application layer. In other words, it lets us write APIs in a sharded/replicated manner, so we can build distributed apps without a separate scalable database.

github.com/ronaksoft/rony

go-ssb uses Badger, which makes it unusable on iOS devices because Badger attempts to mmap 2 GB of data, which will never work on an embedded device.