cnn-text-classification-tf

First train step extremely slow

Hi,

Thanks for sharing the code.

I found that when I set a large sequence_length, for example 100 times the original 56 (i.e. 5600), the first training batch takes a very long time, while the following batches run normally. Does this mean I should replace feed_dict with some sort of input pipeline, e.g. one based on FIFOQueue? Is this a bug in feed_dict, or is it expected behavior?

Specifically, I run this code on Ubuntu 16.04 in a Python 3.5 virtualenv with tensorflow-gpu installed via pip. The change I made to the code is in train_step():

```python
# Repeat each sentence in the batch 100 times along the sequence axis
x = tuple(np.concatenate(list(x_batch[i] for _ in range(100))) for i in range(len(x_batch)))

feed_dict = {
    cnn.input_x: x,
    cnn.input_y: y_batch,
    cnn.dropout_keep_prob: FLAGS.dropout_keep_prob
}
```

I also changed the model initialization:

```python
cnn = TextCNN(
    sequence_length=x_train.shape[1] * 100,  # 100x the original length (56 -> 5600)
    ...
```
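
The list/concatenate expression in train_step() repeats each sentence 100 times along the sequence axis, so every row grows from the original 56 word ids to 5600, matching sequence_length=x_train.shape[1] * 100. As a side note, NumPy's np.tile should produce the same array in one vectorized call. The toy batch below is made up for illustration, and this assumes the input_x placeholder only needs a [batch, sequence_length] integer array; it does not change the first-step slowdown itself.

```python
import numpy as np

# Hypothetical toy batch: 2 sentences of 4 word ids each
# (the real batch in the issue is 64 sentences of 56 ids).
x_batch = np.array([[1, 2, 3, 0],
                    [4, 5, 0, 0]])
repeats = 100

# Expression from train_step(): each row is concatenated with itself `repeats` times.
x_loop = tuple(np.concatenate([x_batch[i] for _ in range(repeats)])
               for i in range(len(x_batch)))

# Vectorized equivalent: tile along the sequence axis (axis 1) only.
x_tiled = np.tile(x_batch, (1, repeats))

assert np.array_equal(np.stack(x_loop), x_tiled)
print(x_tiled.shape)  # (2, 400)
```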

When I run `python train.py --batch_size=64`, I get:

```
...
2017-08-01 08:51:24.857667: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1030] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce GTX TITAN X, pci bus id: 0000:05:00.0)
Writing to .../cnn-text-classification-tf/runs/1501548685
2017-08-01T08:55:21.885429: step 1, loss 2.72347, acc 0.421875
2017-08-01T08:55:22.391577: step 2, loss 2.37839, acc 0.421875
2017-08-01T08:55:22.889940: step 3, loss 2.28013, acc 0.484375
2017-08-01T08:55:23.375749: step 4, loss 1.92451, acc 0.484375
2017-08-01T08:55:23.853319: step 5, loss 2.29158, acc 0.484375
2017-08-01T08:55:24.398173: step 6, loss 1.976, acc 0.484375
...
```

The first batch took ~4 min, while the following batches took ~0.5 s each. I also noticed that setting batch_size=10 reduces the first-batch time. During the first batch, GPU utilization is at 100%; afterwards it stays below 100%. The original code also runs below 100%, which I think is related to feed_dict (correct me if I'm wrong). Running on TensorFlow (CPU) does not show a gap like this.
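
The ~4 min figure comes from log timestamps, so it also includes setup done between device creation and step 1. A minimal sketch for timing only the training call, assuming you pass in a zero-argument function that wraps the existing sess.run call from train_step(); the helper names are hypothetical, and fake_step merely simulates a slow first call:

```python
import statistics
import time


def time_steps(step_fn, num_steps):
    """Call step_fn num_steps times and return each call's wall-clock duration in seconds."""
    durations = []
    for _ in range(num_steps):
        start = time.perf_counter()
        step_fn()
        durations.append(time.perf_counter() - start)
    return durations


def split_warmup(durations):
    """Return (first_step_seconds, median_of_remaining_steps_seconds)."""
    first, rest = durations[0], durations[1:]
    return first, (statistics.median(rest) if rest else float("nan"))


if __name__ == "__main__":
    # Hypothetical stand-in for sess.run(...): slow on the first call only.
    calls = {"n": 0}

    def fake_step():
        calls["n"] += 1
        time.sleep(0.2 if calls["n"] == 1 else 0.01)

    first, steady = split_warmup(time_steps(fake_step, num_steps=5))
    print(f"first step: {first:.3f}s, steady state: {steady:.3f}s")
```

Using the median of the later steps keeps one occasional slow step from skewing the steady-state number.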

So my question is: what is TensorFlow doing during the first training sess.run()?

I collected a GPU trace as described in https://github.com/tensorflow/tensorflow/issues/1824#issuecomment-225754659 (I don't know whether it is out of date), and the results show it IS doing something. But why is the first batch so slow?

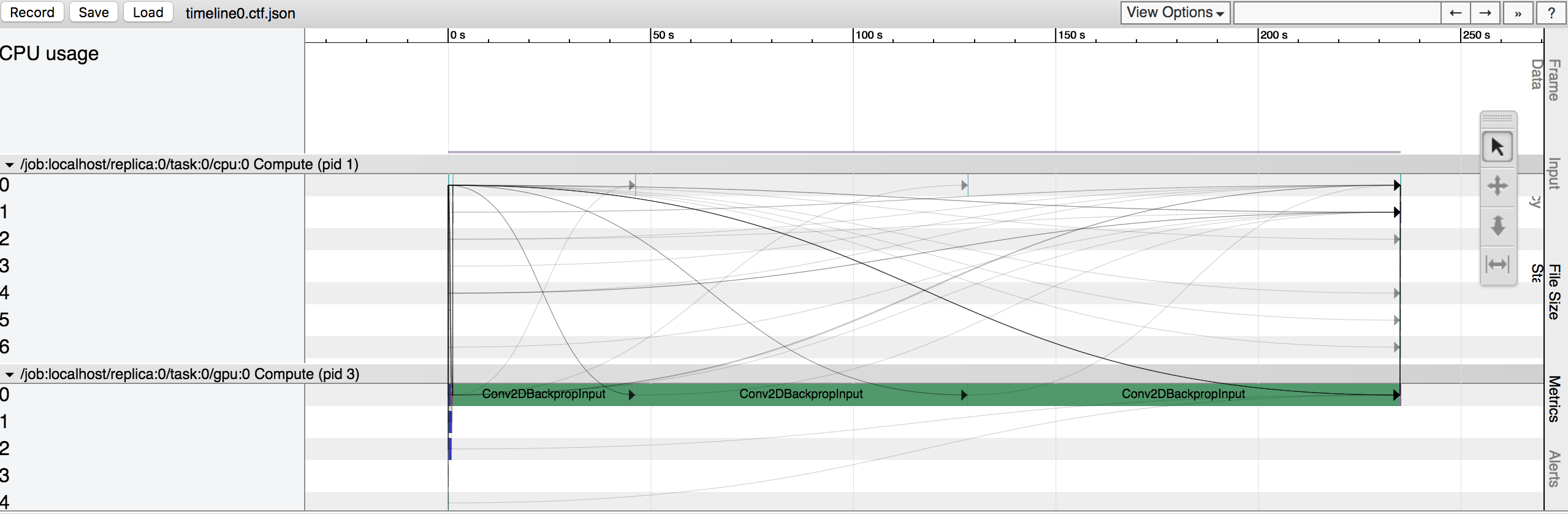

first batch

The three Conv2DBackpropInput ops correspond to the filter sizes 3, 4 and 5.
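
Why one op per filter size: assuming TextCNN applies each filter with stride 1 and VALID padding across the full embedding width, each filter size yields a differently shaped output, so each gets its own convolution and gradient kernels. The sketch below (the helper name is hypothetical) computes the output heights before and after the 100x tiling, showing that each of these ops works on roughly 100 times more positions.

```python
def conv_output_height(sequence_length, filter_size, stride=1):
    """Output height of a VALID-padded convolution along the sequence axis."""
    return (sequence_length - filter_size) // stride + 1


ORIGINAL_LENGTH = 56                  # original sequence_length from the issue
TILED_LENGTH = ORIGINAL_LENGTH * 100  # sequence_length=x_train.shape[1] * 100

for filter_size in (3, 4, 5):
    print(f"filter {filter_size}: "
          f"{conv_output_height(ORIGINAL_LENGTH, filter_size)} -> "
          f"{conv_output_height(TILED_LENGTH, filter_size)} output positions")
# filter 3: 54 -> 5598 output positions
# filter 4: 53 -> 5597 output positions
# filter 5: 52 -> 5596 output positions
```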

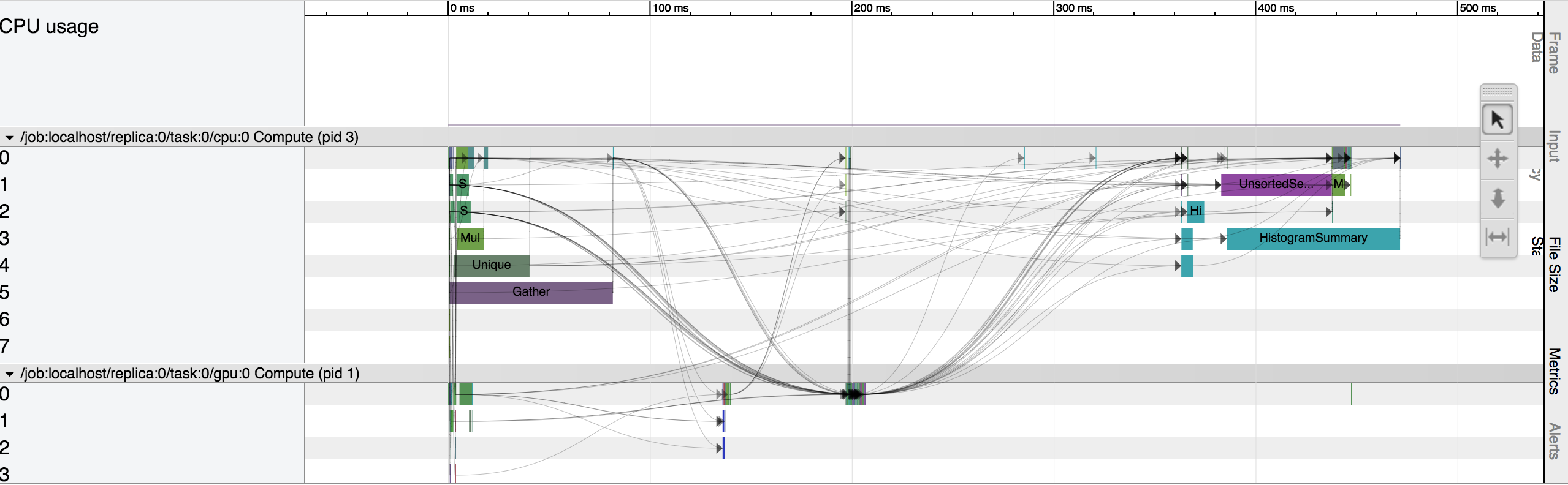

second batch
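
One plausible explanation, not confirmed in this thread, is cuDNN autotuning. In GPU builds of TensorFlow, convolution ops (including gradients such as Conv2DBackpropInput) autotune by default: the first time a convolution with a given shape runs, TensorFlow times the available cuDNN algorithms and caches the fastest one for later steps. This does not happen on CPU, and its cost may grow with tensor size, which would fit the observations here (a long first step on GPU only, shorter with batch_size=10, no problem for an LSTM-only model). A way to test the hypothesis is to disable autotuning for a diagnostic run through the TF_CUDNN_USE_AUTOTUNE environment variable; the helper name below is hypothetical.

```python
import os


def set_cudnn_autotune(enabled):
    """Set TensorFlow's TF_CUDNN_USE_AUTOTUNE environment variable.

    Call this before TensorFlow is imported so the setting is picked up.
    """
    os.environ["TF_CUDNN_USE_AUTOTUNE"] = "1" if enabled else "0"


# Disable autotuning for a diagnostic run only: if the first step becomes fast
# (possibly at the cost of slower later steps), autotuning was the likely cause.
set_cudnn_autotune(False)
# import tensorflow as tf  # import only after the variable is set
```

Autotuning is normally worth keeping on for real training, since the one-time cost buys a faster algorithm for every later step.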

@GxvgiuU did you manage to solve this? I'm having this issue too (plus another issue every 100 steps).

I have the same problem with TensorFlow 1.12: the first epoch is extremely slow with convolutions, while a model without a CNN (LSTM only) is fine.